Practical Solutions for Evaluating Large Language Models (LLMs)

Assessing Retrieval-Augmented Generation (RAG) Systems

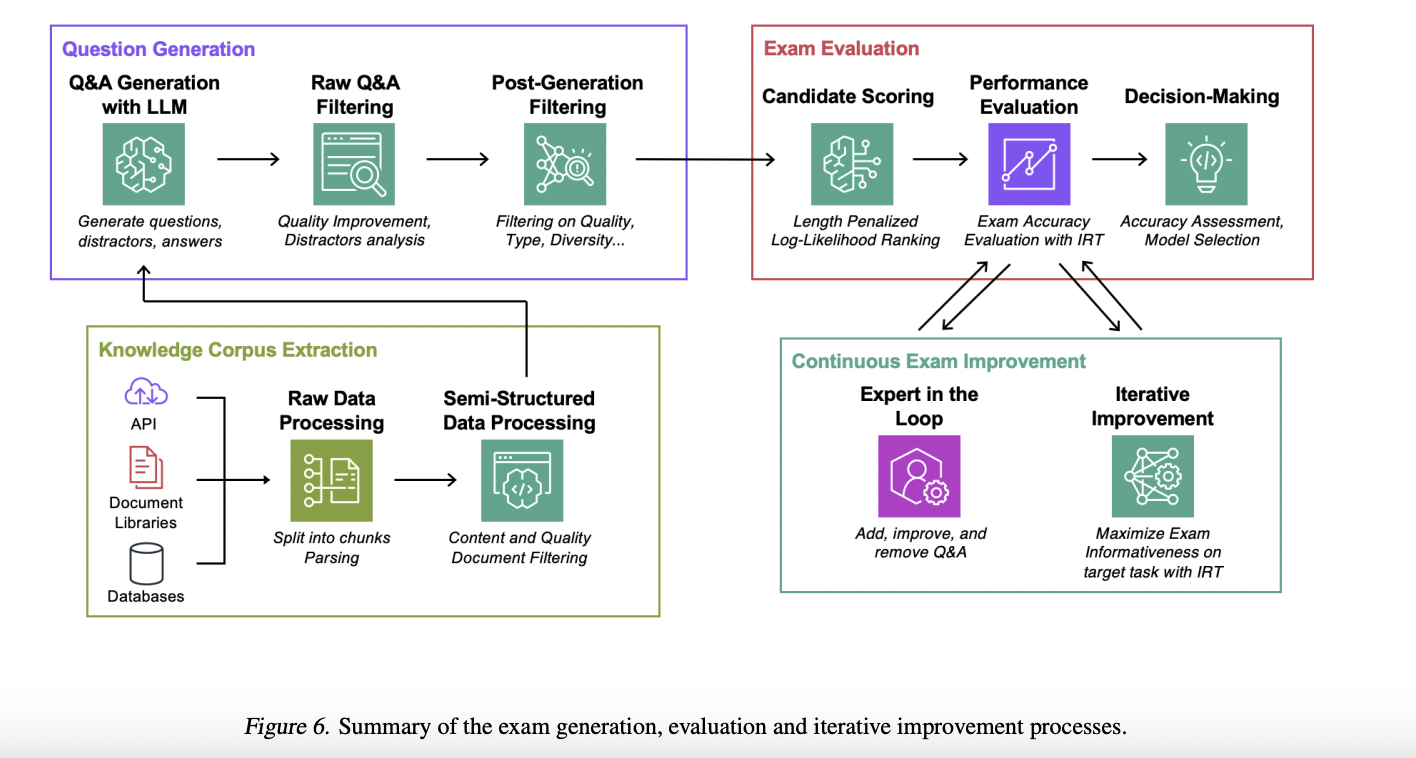

Evaluating the correctness of RAG systems is difficult. A team of Amazon researchers has introduced an exam-based evaluation approach in which LLMs generate the exams. The method focuses on factual accuracy and gives insight into the factors that influence RAG performance.

Fully Automated Evaluation Technique

The team has developed a fully automated, scalable exam-based evaluation technique that removes the need for costly human-in-the-loop evaluations. The approach uses LLMs to create multiple-choice exams and then scores RAG systems on their answers.
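The scoring half of an exam-based evaluation can be sketched in a few lines of Python. This is a minimal illustration, not the researchers' implementation: `ExamQuestion`, `score_exam`, and the `always_first` stand-in are hypothetical names, and a real RAG pipeline would replace the stand-in with a function that retrieves context and picks a choice.

```python
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple


@dataclass(frozen=True)
class ExamQuestion:
    """One multiple-choice item (hypothetical structure, for illustration)."""
    prompt: str
    choices: Tuple[str, ...]
    answer_index: int  # index of the correct choice


def score_exam(
    questions: Sequence[ExamQuestion],
    answer_fn: Callable[[ExamQuestion], int],
) -> float:
    """Return the fraction of questions the system answers correctly."""
    if not questions:
        raise ValueError("exam must contain at least one question")
    correct = sum(1 for q in questions if answer_fn(q) == q.answer_index)
    return correct / len(questions)


def always_first(question: ExamQuestion) -> int:
    """Toy stand-in for a RAG system: always picks the first choice."""
    return 0


# Placeholder exam; real items would be generated by an LLM.
exam = [
    ExamQuestion("Q1", ("a", "b", "c", "d"), 0),
    ExamQuestion("Q2", ("a", "b", "c", "d"), 2),
    ExamQuestion("Q3", ("a", "b", "c", "d"), 0),
    ExamQuestion("Q4", ("a", "b", "c", "d"), 1),
]

accuracy = score_exam(exam, always_first)
print(f"accuracy = {accuracy:.2f}")  # prints "accuracy = 0.50"
```

Because every question has a single correct choice, grading needs no human judgment, which is what makes this style of evaluation easy to automate and repeat.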

Enhanced Evaluation Process

An automated exam-generation process, optimized using Item Response Theory (IRT), yields reliable and informative assessment metrics. It supports ongoing refinement of the exams and can produce benchmark datasets for assessing RAG systems across various disciplines.
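To make the IRT idea concrete, here is a small Python sketch. It assumes the three-parameter logistic (3PL) model, a standard IRT form. The article does not say which variant the researchers use, and the item names and parameter values below are invented for illustration. Each item has a discrimination `a`, a difficulty `b`, and a guessing floor `c`. The item's Fisher information at ability `theta` indicates how much that question helps distinguish systems of that ability.

```python
import math
from typing import Dict, List, Tuple


def prob_correct(theta: float, a: float, b: float, c: float = 0.0) -> float:
    """3PL probability that a system with ability theta answers correctly."""
    return c + (1.0 - c) / (1.0 + math.exp(-a * (theta - b)))


def item_information(theta: float, a: float, b: float, c: float = 0.0) -> float:
    """Fisher information of a 3PL item at ability theta."""
    p = prob_correct(theta, a, b, c)
    return a ** 2 * ((1.0 - p) / p) * ((p - c) / (1.0 - c)) ** 2


def keep_most_informative(
    items: Dict[str, Tuple[float, float, float]], theta: float, k: int
) -> List[str]:
    """Return the names of the k items with the highest information at theta."""
    ranked = sorted(
        items,
        key=lambda name: item_information(theta, *items[name]),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical (a, b, c) parameters; c = 0.25 matches a four-choice guess rate.
items = {
    "q_easy": (0.3, -2.0, 0.25),
    "q_sharp": (2.0, 0.0, 0.25),
    "q_mid": (1.0, 0.5, 0.25),
}

print(keep_most_informative(items, theta=0.0, k=2))  # ['q_sharp', 'q_mid']
```

Dropping low-information items in this way is one plausible reading of "optimizing" an exam with IRT: the exam gets shorter while keeping the questions that best separate strong systems from weak ones.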

Value of AI Solutions in Business

AI Implementation Strategy

AI can redefine the way businesses work. A practical rollout identifies automation opportunities, defines measurable KPIs, selects AI solutions suited to the business, and implements them gradually, gathering data before expanding usage.

AI KPI Management and Engagement

For AI KPI management advice and insights into leveraging AI for sales processes and customer engagement, connect with us at hello@itinai.com or stay tuned on our Telegram and Twitter channels.