The Allen Institute for Artificial Intelligence (AI2) Has Released OLMo, an Open Language Model Framework

The OLMo framework gives researchers full access to training data, code, and evaluation tools, with the goal of fostering collaborative AI research.

The initial release includes 7B- and 1B-parameter models trained on more than 2 trillion tokens, and is intended as a shared resource for the AI research community.

Released resources include the full training data, model weights, and more than 500 intermediate checkpoints per base model, so researchers can study how the models develop over the course of training.

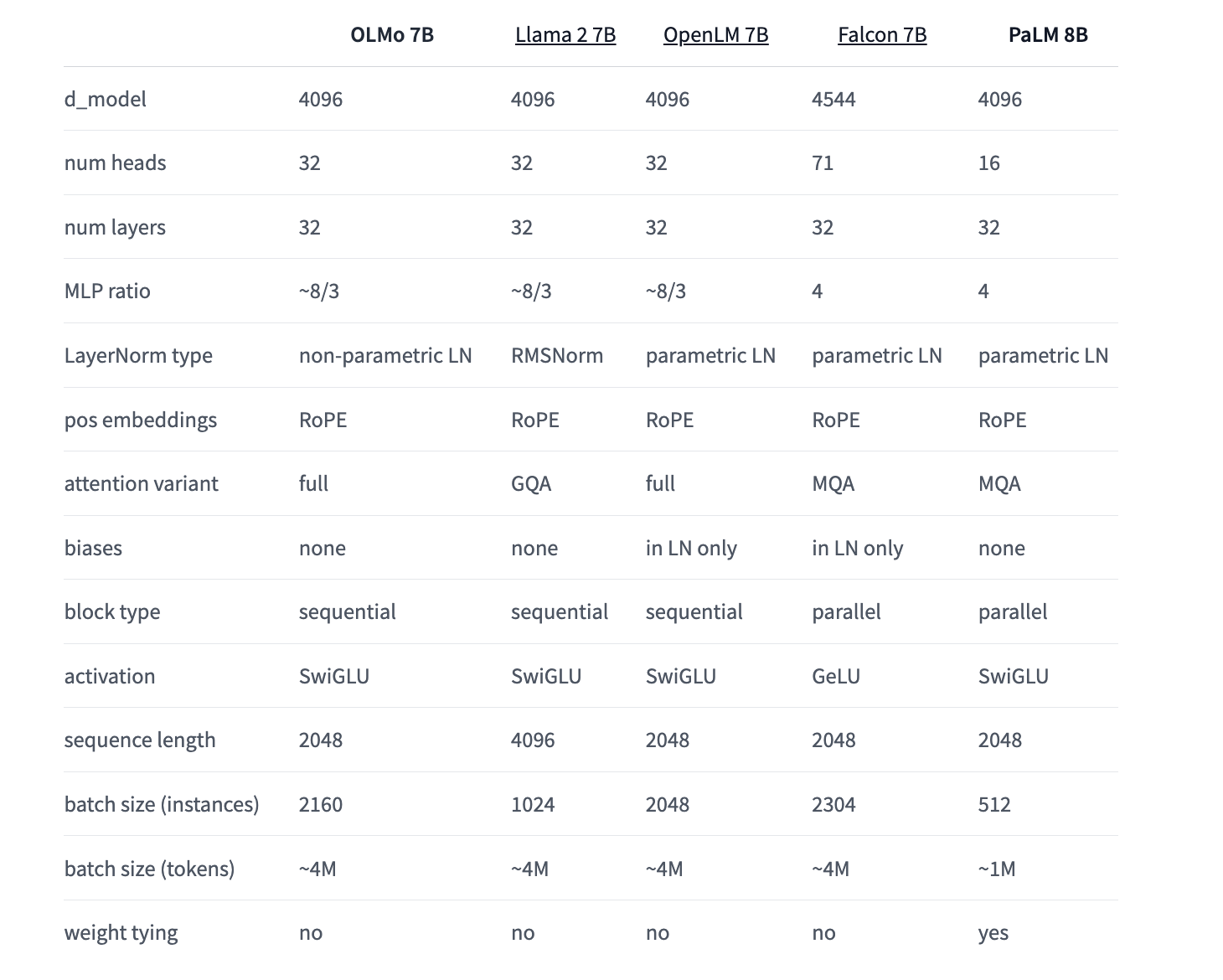

On benchmarks, OLMo 7B performs competitively with other open models.

AI2 plans regular, structured releases. The July 2024 release brings the most recent improvements and, according to AI2, marks only the beginning of its ambitious plans for OLMo.

Discover OLMo Assets and Stay Updated

The latest models are OLMo 1B July 2024, OLMo 7B July 2024, OLMo 7B July 2024 SFT, and OLMo 7B July 2024 Instruct.


Arcee AI Has Released DistillKit: A Tool for Model Distillation

Arcee AI has introduced DistillKit, an open-source, easy-to-use tool for model distillation, in which a smaller model is trained to reproduce the behavior of a larger one to produce efficient, high-performing small language models.

Leverage AI for Business Advancement

To stay competitive with AI, rethink how your company works: identify automation opportunities, define KPIs, select suitable AI solutions, and implement them gradually.

For advice on AI KPI management, connect with itinai.com, and follow its Telegram and Twitter channels for ongoing insights into leveraging AI.

Discover how AI can redefine sales processes and customer engagement at itinai.com.