Understanding Agentic Systems and Their Evaluation

Agentic systems are advanced AI systems that can tackle complex tasks by mimicking human decision-making. They operate step-by-step, analyzing each phase of a task. However, an important challenge is how to evaluate these systems effectively. Traditional methods focus only on the final results, missing valuable feedback on the intermediate steps that could enhance performance. This limitation hinders real-time improvements in practical applications like code generation and software development.

The Need for Better Evaluation Methods

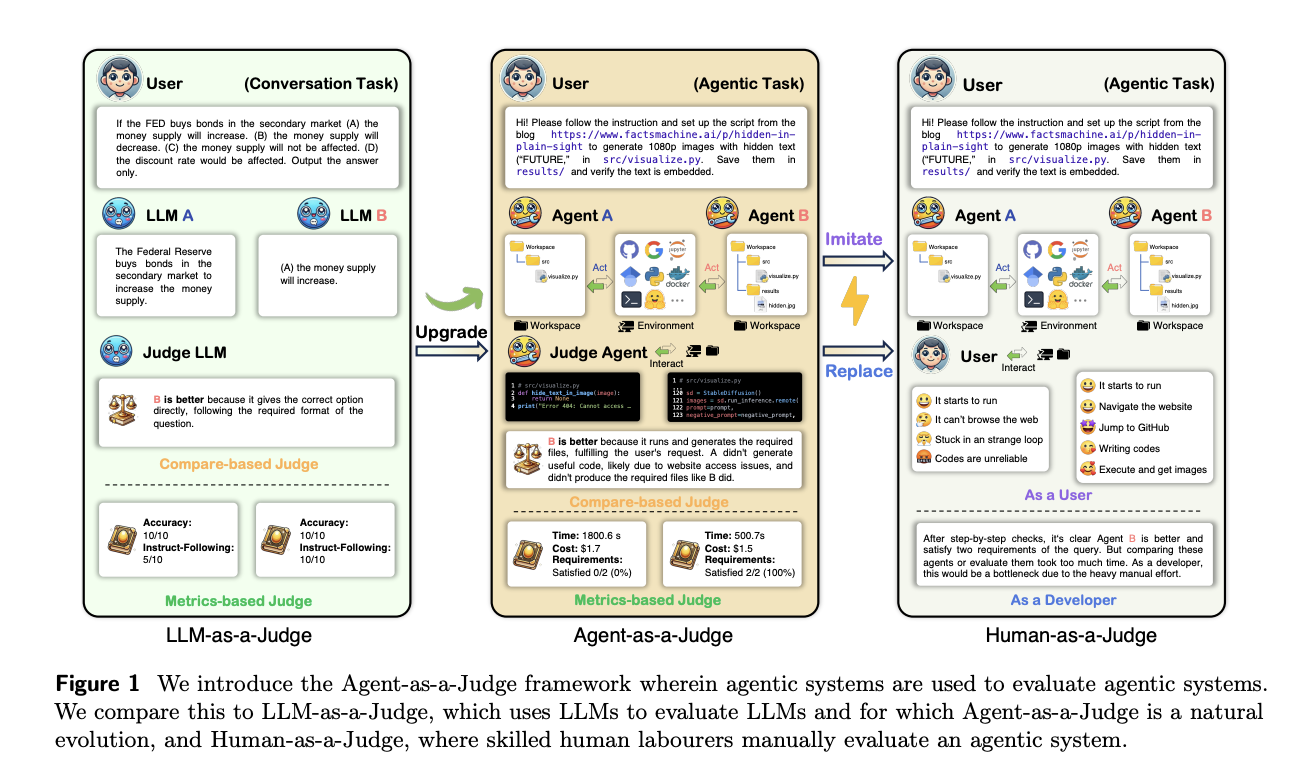

Current evaluation methods, like LLM-as-a-Judge, depend on large language models to assess AI outputs but often ignore the crucial intermediate steps. Human evaluations, while accurate, are costly and impractical for larger tasks. This gap in effective evaluation methods slows down the advancement of agentic systems, making it essential for AI developers to have reliable tools for assessing their models throughout the development process.

Limitations of Existing Benchmarks

Many existing evaluation frameworks emphasize either human judgment or final outcomes. For instance, SWE-Bench measures success rates but lacks insights into the intermediate processes. Similarly, HumanEval and MBPP focus on basic tasks without reflecting the complexities of real-world AI development. The limited scope of these benchmarks highlights the need for more comprehensive evaluation tools that can capture the full capabilities of agentic systems.

Introducing Agent-as-a-Judge Framework

Researchers from Meta AI and King Abdullah University of Science and Technology (KAUST) have developed a new evaluation framework called Agent-as-a-Judge. This innovative approach allows agentic systems to evaluate each other, providing continuous feedback throughout the task-solving process. They also created a benchmark named DevAI, which includes 55 realistic AI development tasks with detailed user requirements and preferences.
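To make requirement-level judging concrete, here is a minimal sketch in Python. It is not the authors' implementation: the `Requirement` and `Task` classes, the `judge` function, and the example task are all hypothetical, and simple file-existence checks stand in for the judging agent. The point is only that a judge returning one verdict per requirement keeps partial progress visible, while a single pass/fail hides it.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Requirement:
    rid: str
    description: str
    # Hypothetical check: a plain function standing in for the judging agent.
    # It inspects the workspace (file path -> contents) and returns a verdict.
    check: Callable[[Dict[str, str]], bool]


@dataclass
class Task:
    name: str
    requirements: List[Requirement]


def judge(task: Task, workspace: Dict[str, str]) -> Dict[str, bool]:
    """Return one verdict per requirement instead of a single pass/fail."""
    return {r.rid: r.check(workspace) for r in task.requirements}


def outcome_only(verdicts: Dict[str, bool]) -> bool:
    """Outcome-style score: the task passes only if everything is satisfied."""
    return all(verdicts.values())


def satisfied_fraction(verdicts: Dict[str, bool]) -> float:
    """Requirement-level score: share of requirements that were satisfied."""
    return sum(verdicts.values()) / len(verdicts)


# Illustrative task, invented for this sketch (not taken from DevAI).
task = Task(
    name="train-classifier",
    requirements=[
        Requirement("R0", "Data loading script exists", lambda ws: "load_data.py" in ws),
        Requirement("R1", "Training script exists", lambda ws: "train.py" in ws),
        Requirement("R2", "Metrics are saved", lambda ws: "results/metrics.txt" in ws),
    ],
)

# The agent produced two of the three expected artifacts.
workspace = {"load_data.py": "...", "train.py": "..."}

verdicts = judge(task, workspace)
print(verdicts)                                # {'R0': True, 'R1': True, 'R2': False}
print(outcome_only(verdicts))                  # False
print(round(satisfied_fraction(verdicts), 2))  # 0.67
```

The outcome-only score reports a plain failure, while the per-requirement verdicts show that only the metrics step is missing, which is the kind of intermediate feedback the article describes.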

Benefits of the Agent-as-a-Judge Framework

The Agent-as-a-Judge framework assesses performance at every stage of the task, unlike previous methods that only look at outcomes. It was tested on leading agentic systems like MetaGPT, GPT-Pilot, and OpenHands. The results showed significant improvements:

- 90% alignment with human evaluators, compared to 70% with LLM-as-a-Judge.

- 97.72% reduction in evaluation time and 97.64% reduction in cost compared to human evaluation.

- Human evaluation cost $1,297.50 and took 86.5 hours, whereas Agent-as-a-Judge cost $30.58 and took about 118.43 minutes.
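The reduction percentages follow directly from the cost and time figures quoted above, as the short Python check below shows. The `reduction_pct` and `alignment_rate` helpers are names chosen for this sketch. The verdict lists passed to `alignment_rate` are made-up toy data that only illustrate how an alignment rate can be computed as the share of matching verdicts; they are not results from the study, and the paper may define alignment differently.

```python
# Figures quoted in the article.
human_cost_usd = 1297.50
human_time_min = 86.5 * 60   # 86.5 hours expressed in minutes
agent_cost_usd = 30.58
agent_time_min = 118.43


def reduction_pct(baseline: float, new: float) -> float:
    """Percentage reduction of `new` relative to `baseline`."""
    return (baseline - new) / baseline * 100


time_reduction = reduction_pct(human_time_min, agent_time_min)
cost_reduction = reduction_pct(human_cost_usd, agent_cost_usd)
print(f"time reduction: {time_reduction:.2f}%")  # time reduction: 97.72%
print(f"cost reduction: {cost_reduction:.2f}%")  # cost reduction: 97.64%


def alignment_rate(judge_verdicts: list, human_verdicts: list) -> float:
    """Share of requirements on which the judge agrees with the human label."""
    if len(judge_verdicts) != len(human_verdicts):
        raise ValueError("verdict lists must have the same length")
    matches = sum(j == h for j, h in zip(judge_verdicts, human_verdicts))
    return matches / len(human_verdicts)


# Toy verdicts for illustration only (not data from the study).
toy_judge = [True, True, False, True, False]
toy_human = [True, True, False, True, True]
print(alignment_rate(toy_judge, toy_human))  # 0.8
```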

Key Takeaways

- Agent-as-a-Judge offers a scalable and efficient evaluation method for agentic systems.

- DevAI provides 55 realistic AI development tasks, each with detailed user requirements and preferences.

- OpenHands completed tasks fastest, while MetaGPT was the most cost-effective.

- This framework provides continuous feedback, crucial for optimizing agentic systems.

Conclusion

This research marks a significant step forward in evaluating agentic AI systems. The Agent-as-a-Judge framework not only improves efficiency but also offers deeper insights into the intermediate steps of AI development. The DevAI benchmark further enhances this process, pushing the boundaries of what agentic systems can achieve. Together, these innovations are set to accelerate AI development, enabling more effective optimization of agentic systems.

Transform Your Business with AI

Stay competitive by leveraging the Agent-as-a-Judge framework. Here are practical steps:

- Identify Automation Opportunities: Find key areas in customer interactions that can benefit from AI.

- Define KPIs: Ensure measurable impacts from your AI initiatives.

- Select an AI Solution: Choose tools that fit your needs and allow for customization.

- Implement Gradually: Start with pilot projects, gather data, and expand AI usage wisely.