Revolutionizing Software Development with LLMs

Large Language Models (LLMs) have transformed software development by automating coding tasks and bridging the gap between natural language and programming languages. However, they struggle in specialized domains such as High-Performance Computing (HPC), particularly when generating parallel code. Two factors drive this: the scarcity of high-quality parallel code for training, and the inherent complexity of parallel programming.

Enhancing Developer Productivity

HPC-specific LLMs could substantially improve developer productivity and accelerate scientific discovery. To get there, researchers stress the need for curated datasets of high-quality parallel code and for training methods that prioritize data quality over sheer quantity.

Adapting LLMs for HPC

Earlier efforts to adapt LLMs for HPC produced fine-tuned models such as HPC-Coder and OMPGPT. While promising, many of these models are built on older architectures and serve a narrow range of applications. Newer models such as HPC-Coder-V2 aim to close this gap through better training data and fine-tuning strategies.

Importance of Data Quality

Research indicates that, for parallel code generation, data quality matters more than data quantity. Future work aims to build robust HPC-specific LLMs that bridge serial and parallel programming, with high-quality datasets at their core.

Breakthrough Research from the University of Maryland

Researchers at the University of Maryland created HPC-INSTRUCT, a synthetic dataset of high-quality instruction-answer pairs derived from parallel code samples. Using it, they fine-tuned HPC-Coder-V2, which ranks among the best open-source models for parallel code generation and performs comparably to GPT-4.

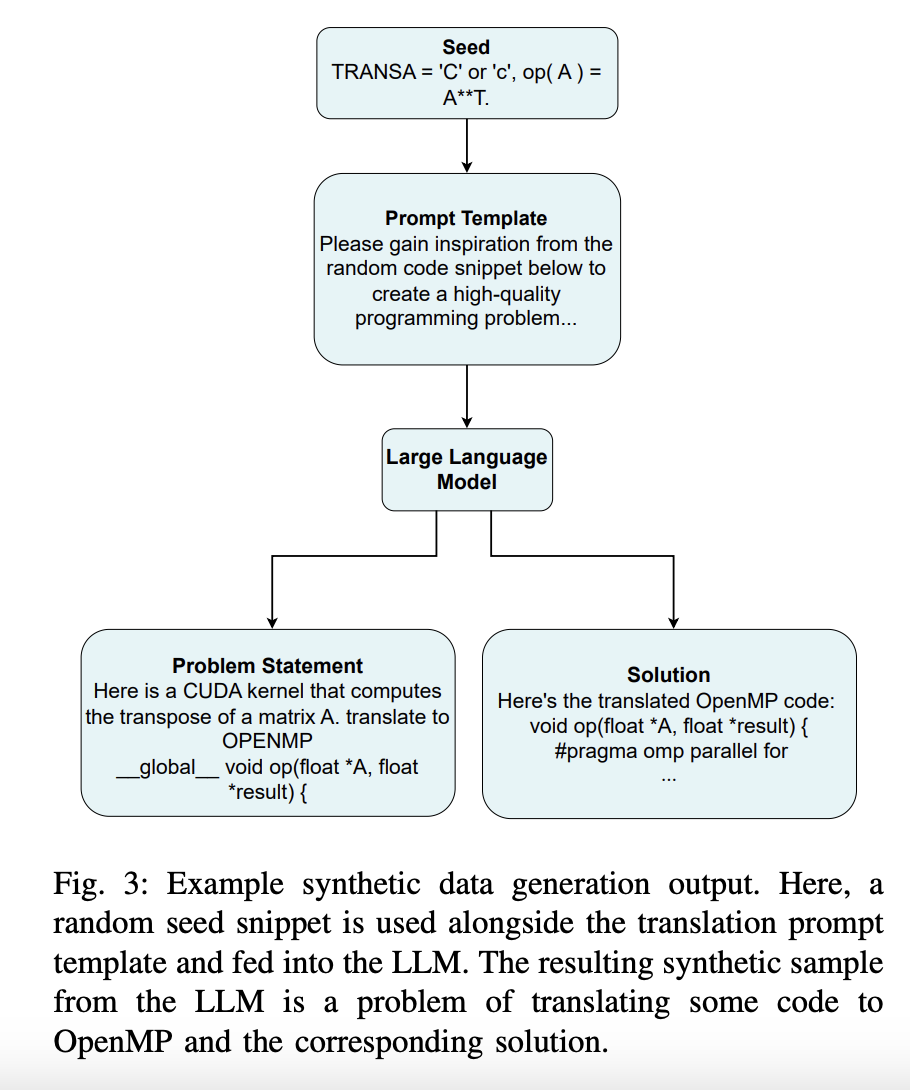

Innovative Dataset Development

HPC-INSTRUCT contains 120,000 instruction-response pairs generated from open-source parallel code snippets. The researchers fine-tuned models on this dataset together with other data sources. They then ran a series of experiments varying data quality and model size to measure how each factor affects the models' ability to generate effective parallel code.
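The following minimal Python sketch shows how instruction-response pairs like these might be stored as JSON Lines for fine-tuning. The `InstructionPair` class, its field names, and the `execution_model` tag are hypothetical illustrations, not the published HPC-INSTRUCT schema.

```python
import json
from dataclasses import dataclass, asdict
from typing import List


@dataclass
class InstructionPair:
    # Hypothetical record layout; NOT the actual HPC-INSTRUCT format.
    instruction: str      # natural-language task description
    response: str         # answer containing parallel code
    execution_model: str  # assumed tag, e.g. "openmp", "mpi", "cuda"


def to_jsonl(pairs: List[InstructionPair]) -> str:
    """Serialize records as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(asdict(p)) + "\n" for p in pairs)


def from_jsonl(text: str) -> List[InstructionPair]:
    """Parse JSON Lines back into records, skipping blank lines."""
    return [
        InstructionPair(**json.loads(line))
        for line in text.splitlines()
        if line.strip()
    ]


# Illustrative pair: an OpenMP reduction as the "response".
example = InstructionPair(
    instruction="Parallelize this loop that sums an array of doubles using OpenMP.",
    response=(
        "double sum = 0.0;\n"
        "#pragma omp parallel for reduction(+:sum)\n"
        "for (int i = 0; i < n; i++) sum += x[i];"
    ),
    execution_model="openmp",
)

if __name__ == "__main__":
    data = to_jsonl([example])
    print(data, end="")
    # Round trip: newlines inside the code are escaped by json.dumps,
    # so each record stays on a single physical line.
    assert from_jsonl(data) == [example]
```

JSON Lines is a common choice for fine-tuning corpora because records can be streamed, appended, and shuffled one line at a time.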

Evaluating Model Performance

The models were evaluated on the ParEval benchmark, which comprises 420 diverse problems spanning multiple problem categories and parallel execution models. The results showed that fine-tuning base models produced better results than fine-tuning instruction-tuned ones, and that larger models yielded diminishing performance gains.
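ParEval reports functional correctness using pass@k-style metrics. The following sketch shows the widely used unbiased pass@k estimator popularized by the Codex evaluation. It estimates the probability that at least one of k samples is correct, given n generations per problem of which c pass. The function names and the averaging helper are our own, and ParEval's scripts may differ in detail.

```python
from math import comb
from typing import List, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k: 1 - C(n-c, k) / C(n, k).

    n: generations sampled for one problem
    c: how many of those passed the tests
    k: sample budget being scored
    """
    if not 0 <= c <= n:
        raise ValueError("need 0 <= c <= n")
    if not 1 <= k <= n:
        raise ValueError("need 1 <= k <= n")
    # Fewer than k failures: any k samples must include a correct one.
    if n - c < k:
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: List[Tuple[int, int]], k: int) -> float:
    """Average pass@k over problems given (n, c) pairs (hypothetical helper)."""
    if not results:
        raise ValueError("no results to average")
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


if __name__ == "__main__":
    print(pass_at_k(20, 5, 1))                        # 0.25
    print(mean_pass_at_k([(20, 5), (20, 15)], k=1))   # 0.5
```

For k = 1 the estimator reduces to the fraction of correct generations, c / n. Larger k rewards models that solve a problem in at least some of their samples.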

Key Findings and Optimizations

The study found that fine-tuning base models is more effective than fine-tuning their instruction-tuned variants, and that adding training data or increasing model size yields diminishing returns. Overall, the HPC-Coder-V2 models delivered strong results in parallel code generation for HPC.

Discover the Potential of AI

Explore how AI, including solutions like HPC-INSTRUCT, can transform your business operations and help you stay competitive. Here are some practical steps:

- Identify Automation Opportunities: Find processes, such as customer interactions, that could benefit from AI.

- Define KPIs: Ensure your AI projects have measurable impacts on business outcomes.

- Select an AI Solution: Choose tools that fit your needs and allow for customization.

- Implement Gradually: Start with a pilot program, gather data, and expand AI usage based on the results.
