Code Generation Models: A New Era

Code generation models have advanced significantly thanks to greater computing power and high-quality training data. Models such as Code Llama, Qwen2.5-Coder, and DeepSeek-Coder perform strongly across a range of programming tasks and are trained on vast amounts of code collected from the internet. However, reinforcement learning (RL) for code generation is still in its early stages, for two main reasons:

- Difficulty in creating reliable reward signals.

- Lack of comprehensive coding datasets with trustworthy test cases.

Practical Solutions to Code Generation Challenges

To tackle these issues, several methods have emerged:

- Specialized large language models (LLMs) like Code Llama and Qwen Coder follow a two-step training process: pre-training and fine-tuning.

- Automatic test-case generation is widely used for program verification: models generate both the code and the test cases used to check it. However, these generated test cases can themselves be inaccurate.

- Some efforts, such as ALGO, have tried to improve test quality, but scaling them up remains a challenge.

- Reward models help align LLMs through RL but struggle in specialized areas like coding.
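Test-case-based verification comes down to running a candidate program against its tests and counting how many pass. The minimal sketch below assumes that tests are Python `assert` statements stored as strings and that the candidate is plain Python source; the function name `pass_rate` is hypothetical, and this is not code from the paper. It uses `exec` for brevity, which is unsafe for untrusted code and has no timeout, so a real pipeline would run each test in a sandboxed process with resource limits.

```python
from typing import Sequence


def pass_rate(program: str, tests: Sequence[str]) -> float:
    """Return the fraction of assert-style tests that `program` passes.

    Warning: exec() runs arbitrary code with no sandbox or timeout.
    """
    if not tests:
        raise ValueError("need at least one test case")
    passed = 0
    for test in tests:
        namespace: dict = {}  # fresh namespace so tests cannot affect each other
        try:
            exec(program, namespace)  # define the candidate's functions
            exec(test, namespace)     # run one assertion against them
            passed += 1
        except Exception:
            # Failed asserts, runtime errors, and syntax errors all count as failures.
            continue
    return passed / len(tests)


candidate = "def add(a, b):\n    return a + b\n"
buggy = "def add(a, b):\n    return a - b\n"
tests = ["assert add(1, 2) == 3", "assert add(0, 0) == 0", "assert add(-1, 1) == 0"]
print(pass_rate(candidate, tests))  # 1.0
print(pass_rate(buggy, tests))      # only the second test passes, so 1/3
```

A fractional pass rate, rather than a single pass/fail bit, is what makes it possible to rank several candidate programs against each other.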

Innovative Approach by Researchers

Researchers from the University of Waterloo, HKUST, and other institutions have introduced AceCoder, a method for improving code generation models with RL by building reliable reward signals from automatically synthesized test cases. Key highlights include:

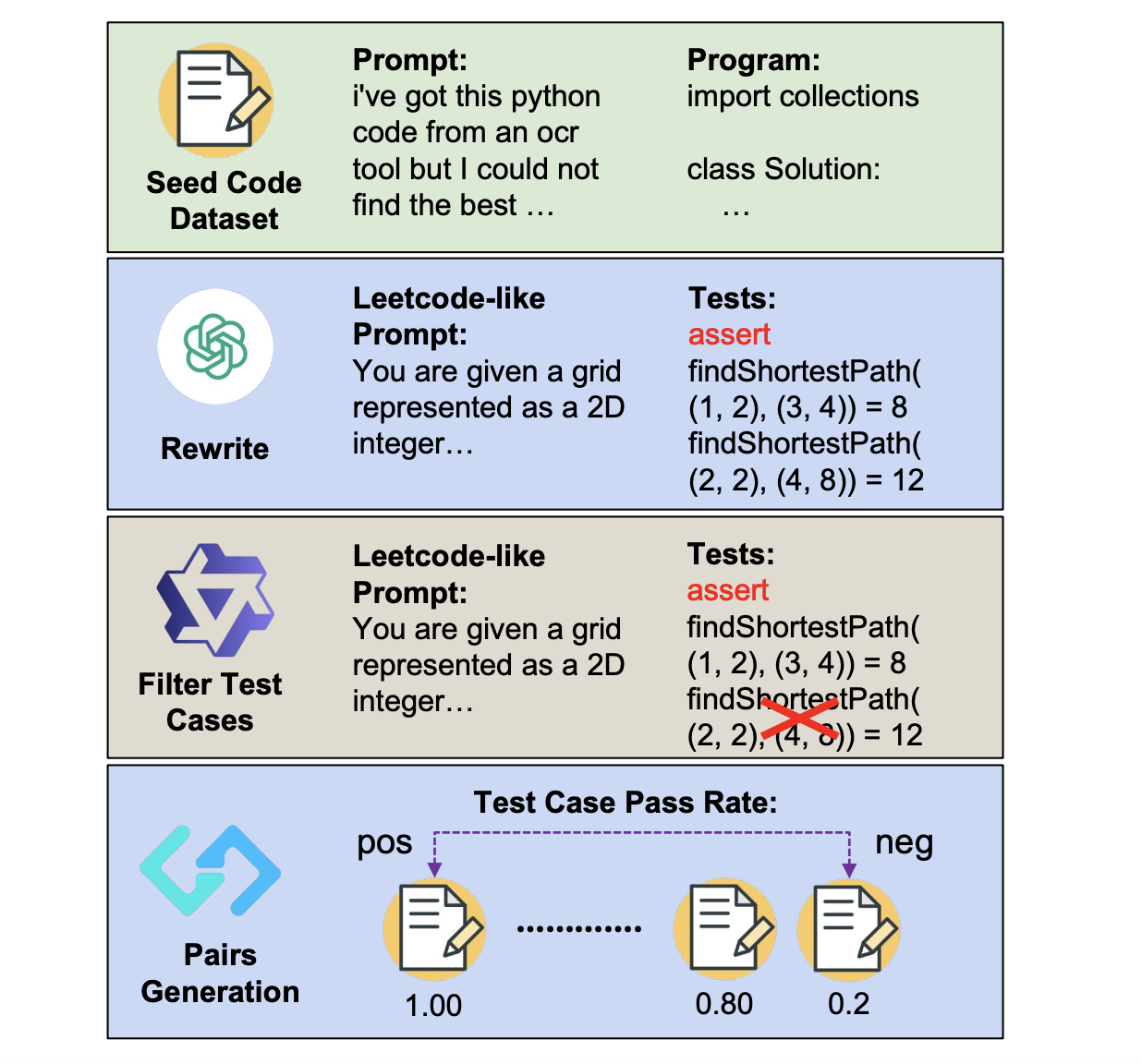

- A pipeline that automatically generates question and test-case pairs from existing code data.

- Preference pairs built from test-case pass rates, which are then used to train reward models with a Bradley-Terry loss.

- Significant performance improvements: a 10-point boost with Llama-3.1-8B-Ins and a 5-point increase with Qwen2.5-Coder-7B-Ins.
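Under the Bradley-Terry model, the probability that the chosen response beats the rejected one is sigmoid(r_chosen - r_rejected), where r is the reward model's scalar score, so training minimizes -log sigmoid(r_chosen - r_rejected) averaged over preference pairs. The minimal sketch below works on plain float scores instead of a neural network so the loss itself is easy to see; the function names are our own. In a PyTorch training loop the same quantity is typically written as `-torch.nn.functional.logsigmoid(r_chosen - r_rejected).mean()`.

```python
import math
from typing import Sequence, Tuple


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x)); never passes a large positive value to exp."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def bradley_terry_loss(pairs: Sequence[Tuple[float, float]]) -> float:
    """Mean of -log sigmoid(r_chosen - r_rejected) over (r_chosen, r_rejected) pairs."""
    if not pairs:
        raise ValueError("need at least one preference pair")
    total = sum(log_sigmoid(r_chosen - r_rejected) for r_chosen, r_rejected in pairs)
    return -total / len(pairs)


# Reward-model scores for three (chosen, rejected) pairs.
scores = [(2.0, -1.0), (0.5, 0.5), (-1.0, 1.5)]
print(bradley_terry_loss(scores))
```

When both scores in a pair are equal, that pair contributes log 2 (about 0.693); the contribution shrinks toward 0 as the chosen response's score pulls ahead and grows roughly linearly when the rejected response is scored higher.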

Experimental Setup

The research involved three main setups:

- Reward Model Training: Qwen2.5-Coder-7B-Instruct generates multiple responses for each question in a large question set, and preference pairs are built from these responses.

- Reinforcement Learning: Training several policy models with different reward signals, such as the trained reward model or rule-based rewards.

- Evaluation: Testing performance across multiple benchmarks.
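The reward-model setup turns sampled responses into preference pairs according to their test-case pass rates. The sketch below shows one plausible way to do this: it considers every pair of responses to a question and keeps it only if the better response passes a high fraction of tests and clearly outperforms the other. The thresholds `min_chosen=0.8` and `min_gap=0.4`, and the function name `build_preference_pairs`, are hypothetical choices for illustration, not values confirmed by the source.

```python
from itertools import combinations
from typing import List, Sequence, Tuple


def build_preference_pairs(
    responses: Sequence[str],
    pass_rates: Sequence[float],
    min_chosen: float = 0.8,  # hypothetical: chosen must pass at least 80% of tests
    min_gap: float = 0.4,     # hypothetical: required pass-rate margin over rejected
) -> List[Tuple[str, str]]:
    """Return (chosen, rejected) pairs for the responses to a single question."""
    if len(responses) != len(pass_rates):
        raise ValueError("responses and pass_rates must have the same length")
    pairs: List[Tuple[str, str]] = []
    for i, j in combinations(range(len(responses)), 2):
        # Order the pair so `better` has the higher (or equal) pass rate.
        better, worse = (i, j) if pass_rates[i] >= pass_rates[j] else (j, i)
        gap = pass_rates[better] - pass_rates[worse]
        if pass_rates[better] >= min_chosen and gap >= min_gap:
            pairs.append((responses[better], responses[worse]))
    return pairs


# Four sampled responses to one question with their measured pass rates.
responses = ["resp_a", "resp_b", "resp_c", "resp_d"]
pass_rates = [1.0, 0.5, 0.0, 0.7]
print(build_preference_pairs(responses, pass_rates))
# [('resp_a', 'resp_b'), ('resp_a', 'resp_c')]
```

Requiring both a high pass rate for the chosen response and a clear margin filters out pairs whose ordering could easily flip because of a single flaky or incorrect test case.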

Promising Results

The experiments show that the new reward model consistently improves performance, with especially large gains for weaker models. Notable improvements include:

- Gains of more than 10 points on benchmarks such as HumanEval and MBPP.

- Rule-based rewards also improved scores significantly across several benchmarks.

Conclusion

This research presents the first automated large-scale test-case synthesis method for training coding models. It demonstrates that high-quality verifiable code data can be generated efficiently, paving the way for improvements in reward model training and RL applications. The findings establish a solid foundation for future research in enhancing code generation capabilities.
