SampleAttention: Practical Solution for LLMs

Addressing Time-to-First-Token Latency

Large language models (LLMs) with long context windows suffer from long Time-to-First-Token (TTFT) latency because standard attention scales quadratically with sequence length. Existing remedies often sacrifice accuracy or require extra pretraining, which makes real-time interaction difficult.
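
To make the quadratic growth concrete, here is a minimal sketch that counts how many query-key pairs a single attention head has to score while processing a prompt. It assumes causal attention, where each token attends only to itself and earlier tokens. The function name is illustrative and does not come from the paper, and the count ignores constant factors such as head dimension.

```python
def causal_score_entries(seq_len: int) -> int:
    """Number of query-key pairs one causal attention head scores during prefill."""
    # Query i attends to keys 0..i, so the total is 1 + 2 + ... + seq_len.
    return seq_len * (seq_len + 1) // 2


for n in (1_000, 10_000, 100_000):
    print(f"{n:>7} tokens -> {causal_score_entries(n):,} scored pairs per head")
```

Doubling the prompt length roughly quadruples the work, which is why TTFT grows so quickly for long contexts.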

Practical Solutions for Efficient Attention

Current methods for reducing attention complexity in LLMs include sparse attention, low-rank matrices, unified sparse and low-rank attention, recurrent states, and external memory. However, these approaches often lose accuracy or require additional pretraining or finetuning.

Introduction of SampleAttention

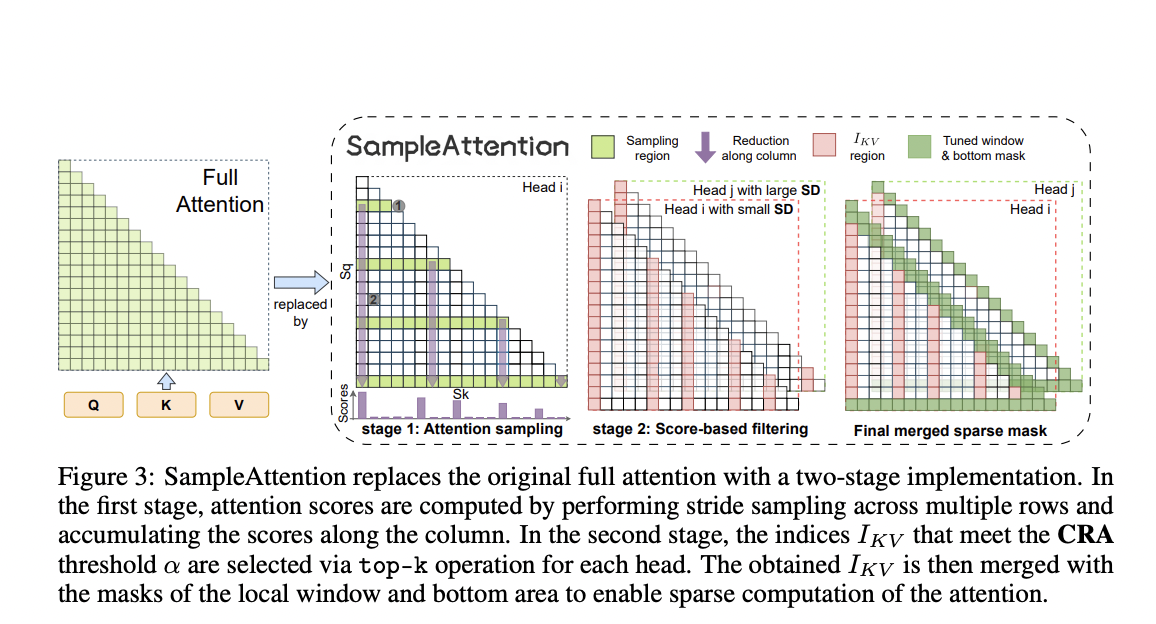

SampleAttention is an adaptive structured sparse attention mechanism designed to capture essential information with minimal overhead. It exploits two sparse patterns that appear in attention, local windows and column stripes, without compromising accuracy.
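
The two patterns can be pictured as a mask over the attention score matrix. The sketch below is an illustration only, not the authors' implementation: it marks a score as kept when the key falls inside a local window of recent tokens or sits in one of a few selected key columns. The function name and the `window` and `stripe_cols` parameters are hypothetical, and the stripe columns are fixed by hand here rather than chosen adaptively.

```python
def structured_sparse_mask(seq_len, window, stripe_cols):
    """Return a seq_len x seq_len boolean mask (True = compute this score).

    window      -- hypothetical parameter: how many most recent keys each query keeps
    stripe_cols -- hypothetical parameter: key indices kept for every query
    """
    stripes = set(stripe_cols)
    mask = []
    for q in range(seq_len):
        row = []
        for k in range(seq_len):
            causal = k <= q             # never look at future tokens
            in_window = q - k < window  # local window pattern
            in_stripe = k in stripes    # column stripe pattern
            row.append(causal and (in_window or in_stripe))
        mask.append(row)
    return mask


mask = structured_sparse_mask(seq_len=8, window=2, stripe_cols=[0, 3])
for row in mask:
    print("".join("x" if keep else "." for keep in row))
kept = sum(map(sum, mask))
print(f"kept {kept} of {8 * 9 // 2} causal entries")
```

The printed grid shows a diagonal band (the local window) plus vertical lines (the column stripes). Only the marked entries would need to be computed.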

Improving TTFT Latency

SampleAttention captures head-specific sparse patterns dynamically at runtime, focusing on the local window and column stripe patterns. It significantly reduces TTFT by attending to adjacent tokens for the local window pattern and by using query-guided KV filtering for the column stripe pattern, with almost no loss in accuracy.
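
One way to picture query-guided KV filtering is sketched below. This article does not spell out the paper's exact procedure, so treat the code as a simplified illustration under stated assumptions: it samples every `sample_stride`-th query, computes softmax attention against all keys, sums the attention mass per key column, and keeps the heaviest columns until they cover a `coverage` share of the sampled mass. The function names and both parameters are hypothetical, and causal masking is omitted for brevity.

```python
import math


def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def select_key_columns(queries, keys, sample_stride=2, coverage=0.9):
    """Pick the key indices that a sample of queries attends to most.

    sample_stride and coverage are hypothetical knobs for this sketch.
    """
    dim = len(keys[0])
    column_mass = [0.0] * len(keys)
    sampled = queries[::sample_stride]
    for q in sampled:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in keys]
        for idx, p in enumerate(softmax(scores)):
            column_mass[idx] += p
    # Each sampled query contributes a total mass of 1, so this is the target share.
    target = coverage * len(sampled)
    order = sorted(range(len(keys)), key=lambda i: column_mass[i], reverse=True)
    chosen, covered = [], 0.0
    for idx in order:
        if covered >= target:
            break
        chosen.append(idx)
        covered += column_mass[idx]
    return sorted(chosen)


# Toy data: key 1 is strongly aligned with every query.
keys = [[0.0, 0.0], [8.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
queries = [[1.0, 0.0]] * 4
print(select_key_columns(queries, keys))
```

Because only a sample of the queries is scored against the keys, the selection step costs much less than full attention. The selected indices could then serve as the stripe columns of a sparse mask.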

Evidence of Effectiveness

Evaluated on widely used LLM variants, SampleAttention reduced TTFT by up to 2.42× compared with FlashAttention while maintaining accuracy across a variety of tasks.

Practical Application and Advancement

SampleAttention addresses the TTFT latency problem in LLMs and offers a practical option for integration into pre-trained models. Because it captures essential information efficiently, it is promising for real-time LLM applications.
