Practical Solutions for Parameter-Efficient Fine-Tuning Techniques

Enhancing LoRA with MoRA

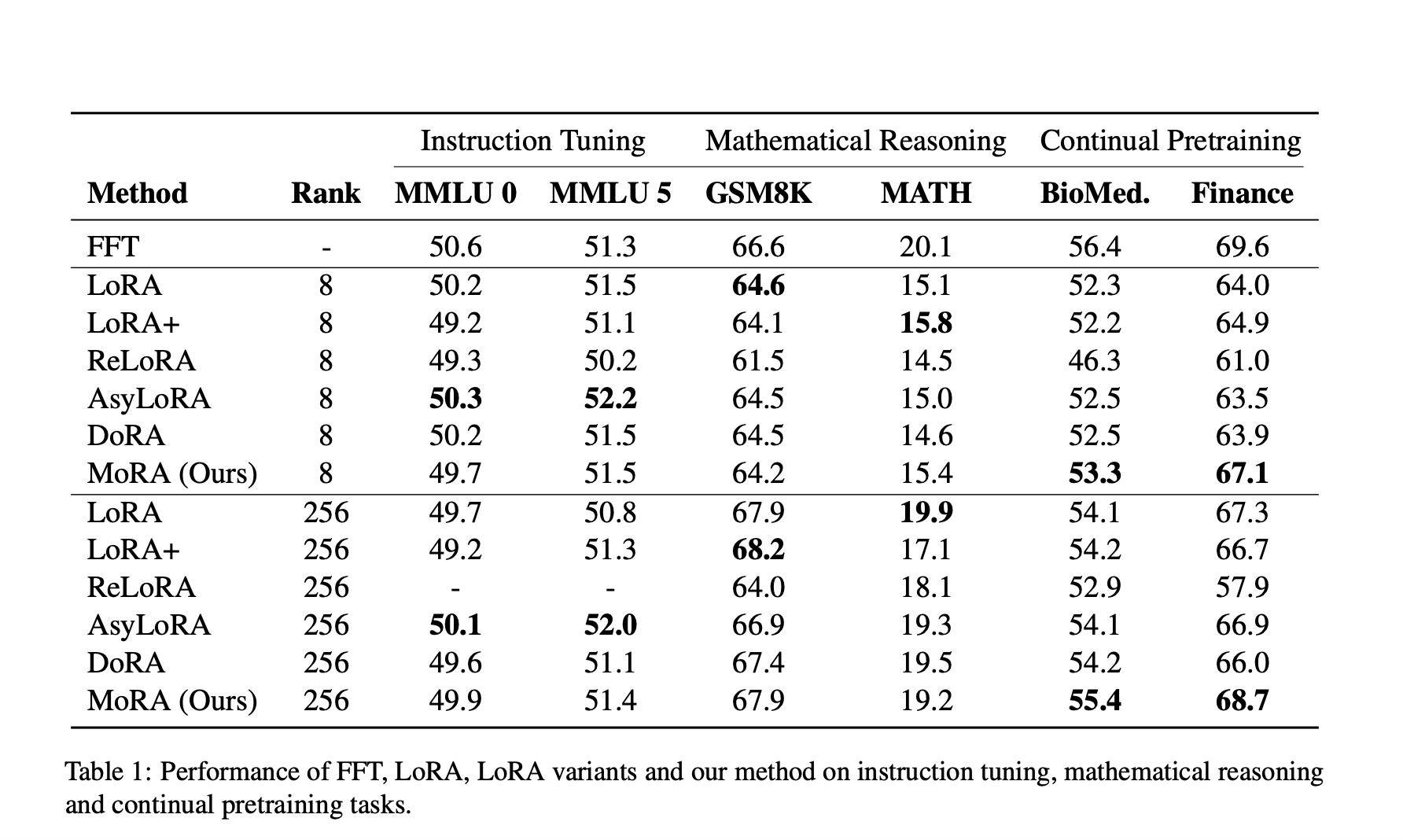

Parameter-efficient fine-tuning (PEFT) techniques such as Low-Rank Adaptation (LoRA) reduce memory requirements by updating fewer than 1% of a model's parameters, while performing comparably to Full Fine-Tuning (FFT) on many tasks. However, LoRA's update is constrained to a low rank. MoRA achieves high-rank updating with the same number of trainable parameters by replacing LoRA's pair of low-rank matrices with a single square matrix. It also introduces non-parameterized operators that compress the input dimension and decompress the output dimension, so the learned update can still be merged back into the weights of large language models (LLMs).
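The paragraph above makes two claims: the square matrix fits the same parameter budget as LoRA, and the non-parameterized operators keep the update mergeable. The following Python/NumPy code is a minimal sketch of both, under stated assumptions. It sizes the square matrix as r̂ = ⌊√((d+k)·r)⌋ so that it uses no more trainable parameters than a rank-r LoRA update. For compression and decompression, it uses a simple reshape-and-concatenate operator, which is an assumption made for illustration and is valid only when d = k and r̂ divides k. All function names are hypothetical. The MoRA paper discusses other operator variants, and this is not its reference implementation.

```python
import math
import numpy as np

def lora_param_count(d: int, k: int, r: int) -> int:
    """Trainable parameters of a rank-r LoRA update: B is d x r, A is r x k."""
    return (d + k) * r

def mora_square_size(d: int, k: int, r: int) -> int:
    """Largest r_hat such that an r_hat x r_hat matrix fits LoRA's budget."""
    return math.isqrt(lora_param_count(d, k, r))

def compress(x: np.ndarray, r_hat: int) -> np.ndarray:
    """Hypothetical reshape-style compression (no trainable parameters):
    split a length-k vector into k // r_hat segments of length r_hat."""
    if x.shape[0] % r_hat != 0:
        raise ValueError("this sketch assumes r_hat divides the input size")
    return x.reshape(-1, r_hat)  # shape (k // r_hat, r_hat)

def decompress(segments: np.ndarray) -> np.ndarray:
    """Hypothetical decompression: concatenate segments into one vector."""
    return segments.reshape(-1)

def mora_delta(x: np.ndarray, M: np.ndarray) -> np.ndarray:
    """Update term: apply the square matrix M to every segment of x."""
    segments = compress(x, M.shape[0])
    return decompress(segments @ M.T)  # row i becomes M @ segment_i

def merged_delta_w(M: np.ndarray, k: int) -> np.ndarray:
    """Explicit Delta W equivalent to mora_delta for this operator (d == k):
    a block-diagonal matrix with M repeated k // r_hat times."""
    return np.kron(np.eye(k // M.shape[0]), M)

# Parameter budget for a 4096 x 4096 layer and LoRA rank 8.
d = k = 4096
r = 8
print(lora_param_count(d, k, r))  # 65536
r_hat = mora_square_size(d, k, r)
print(r_hat, r_hat * r_hat)  # 256 65536

# Mergeability check on a small layer (d = k = 16, r_hat = 4).
rng = np.random.default_rng(0)
W0 = rng.standard_normal((16, 16))  # frozen base weight
M = rng.standard_normal((4, 4))     # trainable square matrix
x = rng.standard_normal(16)
h_unmerged = W0 @ x + mora_delta(x, M)
h_merged = (W0 + merged_delta_w(M, 16)) @ x
print(np.allclose(h_unmerged, h_merged))  # True
```

With the same 65,536 trainable parameters, a rank-8 LoRA update has rank at most 8, while the 256 × 256 square matrix can have rank up to 256. The compress, multiply, and decompress path is a fixed linear function of M. After training, the update can therefore be folded into W0, so inference costs the same as running the base model.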

Practical Value of MoRA

MoRA performs comparably to LoRA on instruction tuning and mathematical reasoning. It outperforms LoRA in continual pretraining on biomedical and financial domains, where high-rank updating helps the model absorb new domain knowledge. This addresses a key limitation of LoRA's low-rank updates on memory-intensive tasks, meaning tasks that require the model to memorize new knowledge. The authors support these findings with a comprehensive evaluation across multiple task types.

AI Solutions for Business Evolution

Implementing AI for Business Advantages

To redefine how you work and stay competitive with AI, identify automation opportunities, define KPIs, select an AI solution, and implement it gradually. Connect with us for advice on AI KPI management and ongoing insights into leveraging AI.

Spotlight on a Practical AI Solution

Consider the AI Sales Bot from itinai.com/aisalesbot, which is designed to automate customer engagement 24/7 and manage interactions across all stages of the customer journey.