Challenges in Evaluating AI Capabilities

The mismatch between human expectations of AI capabilities and the actual performance of AI systems can hinder the effective utilization of large language models (LLMs). Incorrect assumptions about AI capabilities can lead to dangerous situations, especially in critical applications like self-driving cars or medical diagnosis.

MIT’s Approach to Evaluating LLMs

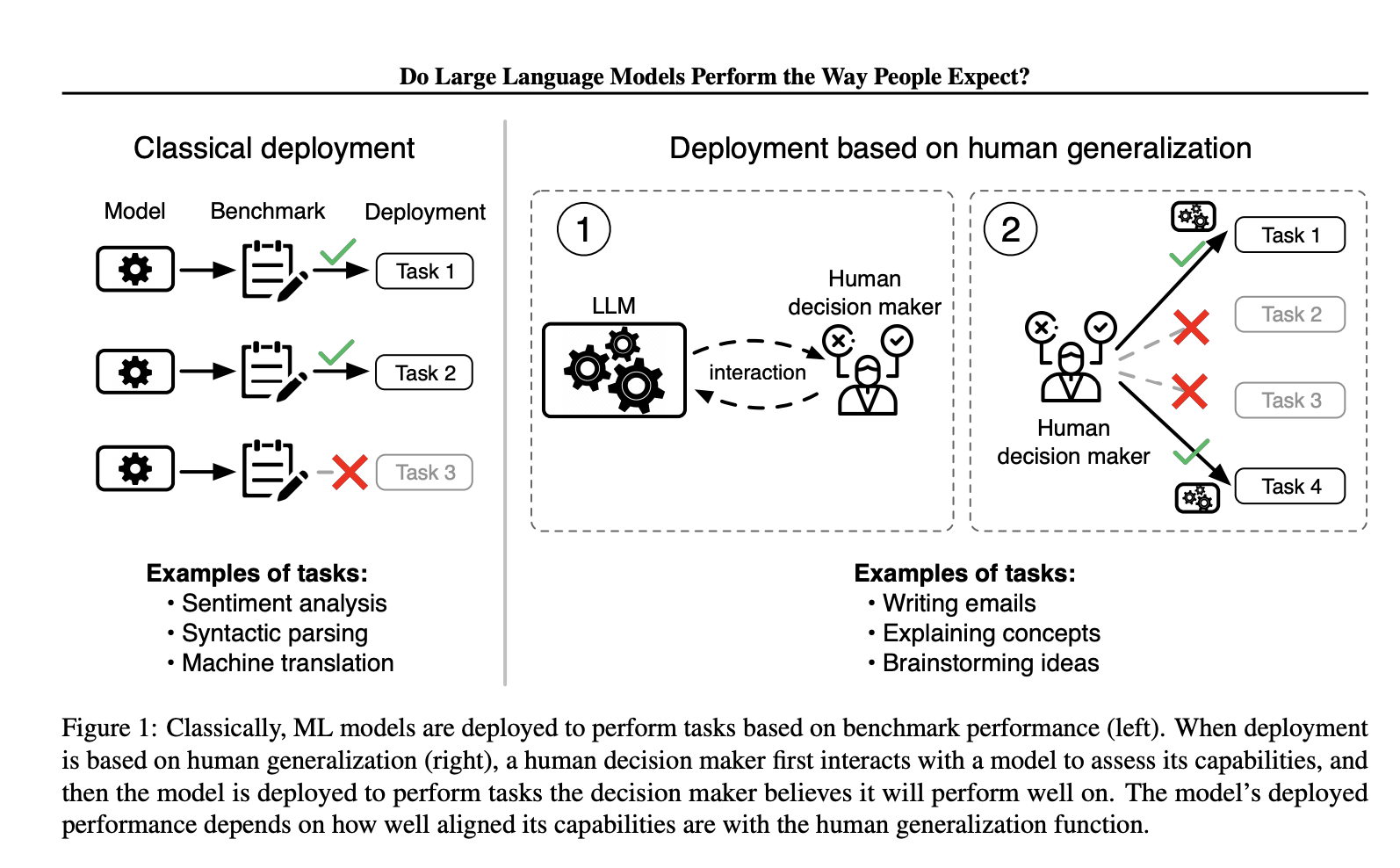

MIT researchers, in collaboration with Harvard University, address the challenge of evaluating LLMs. Evaluation is difficult because these models are applied to such a broad range of tasks, from drafting emails to assisting in medical diagnoses. The researchers propose a new framework that evaluates LLMs based on how well their performance aligns with human beliefs about their capabilities.

Understanding Human Expectations

The key challenge is understanding how humans form beliefs about the capabilities of LLMs and how these beliefs influence the decision to deploy these models in specific tasks.

Human Generalization Function

The researchers introduce the concept of a human generalization function, which models how people update their beliefs about an LLM’s capabilities after interacting with it. This approach aims to understand and measure the alignment between human expectations and LLM performance, recognizing that misalignment can lead to overconfidence or underconfidence in deploying these models.
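To make the idea of a belief update concrete, here is a minimal sketch of one way such a function could be modeled. It is an illustration only and not the researchers' formulation: the Beta-distribution belief, the `similarity` weight, and the names `Belief` and `update_belief` are all assumptions introduced for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Belief:
    """Hypothetical Beta(alpha, beta) belief that the LLM answers a target question correctly."""
    alpha: float = 1.0  # pseudo-count of observed successes
    beta: float = 1.0   # pseudo-count of observed failures

    @property
    def mean(self) -> float:
        """Expected probability that the LLM gets the target question right."""
        return self.alpha / (self.alpha + self.beta)


def update_belief(belief: Belief, observed_correct: bool, similarity: float) -> Belief:
    """Update the belief after seeing the LLM answer one question.

    `similarity` (assumed to lie in [0, 1]) is how related the observer thinks
    the observed question is to the target question. A value of 0 means the
    observation does not change the belief at all.
    """
    if not 0.0 <= similarity <= 1.0:
        raise ValueError("similarity must be between 0 and 1")
    if observed_correct:
        return Belief(belief.alpha + similarity, belief.beta)
    return Belief(belief.alpha, belief.beta + similarity)


prior = Belief()
after_success = update_belief(prior, observed_correct=True, similarity=1.0)
after_failure = update_belief(prior, observed_correct=False, similarity=0.5)
```

In this sketch, a correct answer on a closely related question raises the expected success rate, while an error on a loosely related question lowers it by a smaller amount. Real human generalization may not follow such a tidy rule, which is exactly what the survey sets out to measure.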

Survey and Results

The researchers designed a survey to measure human generalization. Participants were shown questions that a person or an LLM had answered correctly or incorrectly, and were then asked whether they thought that person or LLM would answer a related question correctly. The results showed that humans are better at generalizing about other humans’ performance than about LLMs, often placing undue confidence in LLMs based on incorrect responses.
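A survey of this kind could be scored roughly as follows. This is a simplified sketch, not the study's actual analysis: the function names, the boolean encoding of responses, and the sample data are all hypothetical.

```python
from typing import Sequence


def alignment_rate(predictions: Sequence[bool], outcomes: Sequence[bool]) -> float:
    """Fraction of cases where the participant's prediction matched what happened.

    predictions[i] is True if the participant expected a correct answer on the
    related question; outcomes[i] is True if the answer actually was correct.
    """
    if len(predictions) != len(outcomes):
        raise ValueError("predictions and outcomes must have the same length")
    if not predictions:
        raise ValueError("at least one response is required")
    matches = sum(p == o for p, o in zip(predictions, outcomes))
    return matches / len(predictions)


def overconfidence_rate(predictions: Sequence[bool], outcomes: Sequence[bool]) -> float:
    """Fraction of cases where a correct answer was predicted but the answer was wrong."""
    if len(predictions) != len(outcomes):
        raise ValueError("predictions and outcomes must have the same length")
    if not predictions:
        raise ValueError("at least one response is required")
    overconfident = sum(p and not o for p, o in zip(predictions, outcomes))
    return overconfident / len(predictions)


# Made-up responses for illustration only; these are not data from the study.
sample_predictions = [True, True, False, True]
sample_outcomes = [True, False, False, False]
sample_alignment = alignment_rate(sample_predictions, sample_outcomes)
sample_overconfidence = overconfidence_rate(sample_predictions, sample_outcomes)
```

Computing the two rates separately for predictions about people and predictions about LLMs would give a simple way to compare how well participants generalize in each case.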

Implications and Recommendations

The study highlights the need to better understand human generalization and to integrate it into LLM development and evaluation. The proposed framework accounts for human factors when deploying general-purpose LLMs, with the goal of improving their real-world performance and user trust.

Practical AI Solutions for Businesses

If you want to evolve your company with AI, stay competitive, and use AI to your advantage, consider the following practical steps:

- Identify Automation Opportunities

- Define KPIs

- Select an AI Solution

- Implement Gradually

AI Solutions for Sales Processes and Customer Engagement

Discover how AI can redefine your sales processes and customer engagement. Explore solutions at itinai.com.