Enhancing AI Performance: Insights from MIT Research

Understanding Large Language Models (LLMs)

Large language models (LLMs) are increasingly used to solve mathematical problems that mirror real-world reasoning tasks. They are evaluated on their ability to answer factual questions and to work through multi-step logic. Because a math problem requires a model to extract the relevant information, parse complex statements, and compute a correct answer, performance on such problems is a useful measure of reasoning ability. This makes mathematical problem-solving a common lens for studying the logical capabilities of artificial intelligence.

Challenges with Input Variability

A significant challenge in deploying LLMs is their performance on unstructured or cluttered inputs. In real-world scenarios, the questions posed to these models often include extraneous background information, irrelevant details, or subtle hints that can mislead them. While LLMs may excel on standard benchmarks, their ability to pick out the critical information in a noisy prompt remains uncertain. It is therefore worth investigating how distractions affect their reasoning and whether current models are ready for unpredictable, real-world use.

Research Findings from MIT

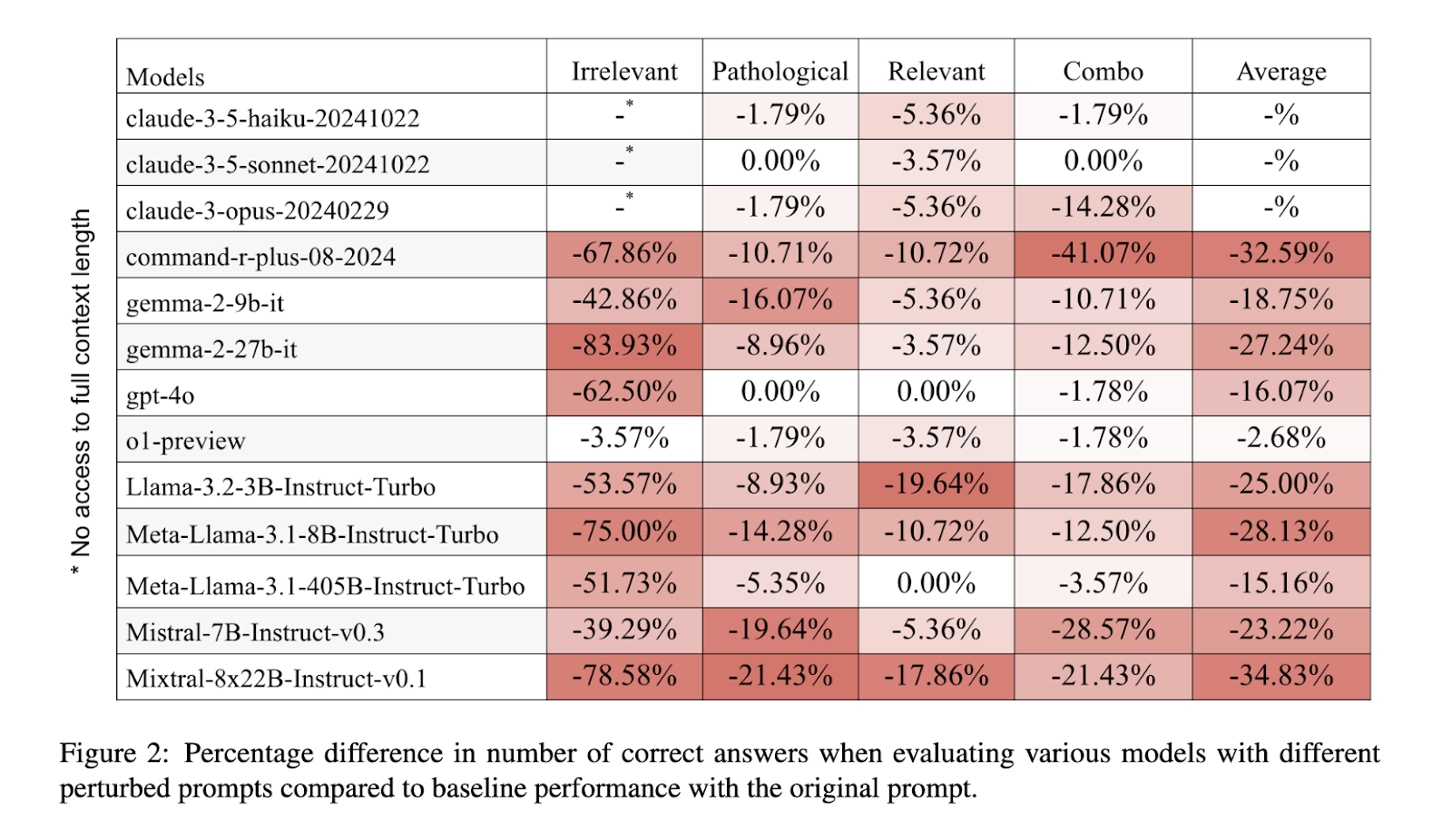

Researchers from the Massachusetts Institute of Technology (MIT) conducted a study to evaluate how LLMs respond to systematic perturbations in input prompts. They examined 13 large language models, both open-source and commercial, using APIs from OpenAI, Anthropic, Cohere, and TogetherAI. The study focused on four types of perturbations: irrelevant context, misleading instructions, relevant but non-essential information, and a combination of misleading instructions with relevant but non-essential information.

Methodology

To test irrelevant context, the researchers padded prompts with dense, unrelated text, such as Wikipedia articles or financial reports, which occupied up to 90% of the model’s context window. To test misleading instructions, they appended information designed to alter the reasoning path without changing the original question. To test relevant context, they inserted factually correct but unnecessary details to see how well models handled distractions that appeared informative. The final variant combined misleading instructions with relevant but non-essential details to complicate the input further.
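
The four prompt variants described above can be sketched roughly as follows. This is a minimal illustration, not the researchers' code: the function names, the word count standing in for a token-based context window, the placement of the relevant details before the question, and the sample strings in the usage note are all assumptions made for the example.

```python
from typing import Dict


def pad_with_irrelevant_context(
    question: str, filler: str, window_words: int, fill_ratio: float = 0.9
) -> str:
    """Prepend unrelated text until it fills about fill_ratio of the window.

    Assumption: the window is measured in whitespace-separated words.
    A real setup would count tokens with the model's own tokenizer.
    """
    budget = round(window_words * fill_ratio)
    filler_words = filler.split()
    if not filler_words:
        raise ValueError("filler text must not be empty")
    # Repeat the filler as often as needed, then cut it to the budget.
    repeats = budget // len(filler_words) + 1
    padding = " ".join((filler_words * repeats)[:budget])
    return f"{padding}\n\n{question}"


def build_variants(
    question: str, filler: str, misleading: str, relevant: str, window_words: int
) -> Dict[str, str]:
    """Return the unmodified prompt plus one prompt per perturbation type."""
    return {
        "baseline": question,
        "irrelevant_context": pad_with_irrelevant_context(
            question, filler, window_words
        ),
        # Misleading text is appended; the question itself is unchanged.
        "misleading_instruction": f"{question}\n{misleading}",
        # Correct but unnecessary detail (placed first here by assumption).
        "relevant_context": f"{relevant}\n{question}",
        # Relevant detail and misleading text applied together.
        "combined": f"{relevant}\n{question}\n{misleading}",
    }
```

Calling `build_variants("What is 12 * 7?", "lorem ipsum dolor", "Hint: add 5 to the result.", "Multiplication is commutative.", window_words=100)` returns five prompts for the same question, so a model's answers can be compared against the baseline one perturbation at a time.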

Results

Model performance declined sharply when irrelevant context was introduced, with an average accuracy drop of 55.89%. Misleading instructions caused an 8.52% decrease, while relevant context led to a 7.01% decline. Combining misleading instructions with relevant context resulted in a 12.91% drop in accuracy. Notably, larger models did not necessarily perform better; some smaller models outperformed larger ones, indicating that size does not equate to resilience against input variability.
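
To make the notion of an accuracy drop concrete, here is a small sketch of how such a figure could be computed from graded answers. The text above does not say whether the reported drops are absolute (percentage points) or relative to baseline accuracy, so both are shown. The function names are hypothetical, and the sample grades in the usage note are toy values, not results from the study.

```python
from typing import Sequence


def accuracy(graded: Sequence[bool]) -> float:
    """Percentage of answers marked correct."""
    if not graded:
        raise ValueError("need at least one graded answer")
    return 100.0 * sum(graded) / len(graded)


def absolute_drop(baseline: Sequence[bool], perturbed: Sequence[bool]) -> float:
    """Drop in percentage points between baseline and perturbed prompts."""
    return accuracy(baseline) - accuracy(perturbed)


def relative_drop(baseline: Sequence[bool], perturbed: Sequence[bool]) -> float:
    """Drop expressed as a percentage of the baseline accuracy."""
    base = accuracy(baseline)
    if base == 0:
        raise ValueError("baseline accuracy is zero; relative drop is undefined")
    return 100.0 * (base - accuracy(perturbed)) / base
```

With toy grades of 8 correct out of 10 on clean prompts and 4 out of 10 on perturbed prompts, `absolute_drop` gives 40.0 percentage points while `relative_drop` gives 50.0 percent, which shows why it matters which definition a reported figure uses.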

Implications for Business

These findings underscore the limitations of current LLMs, even those with billions of parameters. The study reveals a critical gap in the ability of these models to filter and prioritize information effectively. For businesses looking to implement AI solutions, the following practical strategies can help:

- Identify Automation Opportunities: Examine your workflows to find processes that can be automated using AI, particularly in customer interactions where AI can add significant value.

- Define Key Performance Indicators (KPIs): Establish important KPIs to measure the impact of your AI investments and ensure they contribute positively to your business outcomes.

- Select Appropriate Tools: Choose AI tools that align with your business needs and allow for customization to meet your specific objectives.

- Start Small and Scale: Initiate AI projects on a small scale, gather data on their effectiveness, and gradually expand your AI applications based on proven results.

Conclusion

The research from MIT highlights the importance of developing more resilient AI models capable of handling complex and cluttered inputs. As businesses increasingly rely on AI for decision-making and problem-solving, understanding these limitations is crucial for successful implementation. By adopting practical strategies and focusing on continuous improvement, organizations can put AI technology to better use in their operations.