Advancing Sequence Modeling with RWKV-7

Introduction to RWKV-7

RWKV-7 is a recurrent neural network (RNN) architecture for sequence modeling. It is positioned as a more efficient alternative to traditional autoregressive transformers, particularly for tasks that involve long sequences.

Challenges with Current Models

Autoregressive transformers excel at in-context learning and parallel training. However, the cost of attention grows quadratically with sequence length, and the memory needed to cache past tokens grows with every token generated. This leads to high memory use and cost, especially during inference. These inefficiencies have motivated the exploration of recurrent architectures whose total compute grows linearly with sequence length and whose memory use stays constant.
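To make the scaling difference concrete, the following minimal sketch counts attention score computations and cached values for a causal transformer, and compares them with a fixed-size recurrent state. The function names, layer count, and dimensions are hypothetical and chosen only for illustration; the formulas ignore constant factors and implementation details.

```python
def causal_attention_scores(n_tokens: int) -> int:
    """Number of query-key scores when token t attends to tokens 1..t."""
    return n_tokens * (n_tokens + 1) // 2


def kv_cache_values(n_tokens: int, n_layers: int, d_model: int) -> int:
    """Values held in a transformer key-value cache (keys plus values)."""
    return 2 * n_layers * n_tokens * d_model


def recurrent_state_values(n_layers: int, n_heads: int, head_dim: int) -> int:
    """Values in a matrix-valued recurrent state; independent of sequence length."""
    return n_layers * n_heads * head_dim * head_dim


# Hypothetical model shape, not taken from any published configuration.
N_LAYERS, D_MODEL, N_HEADS, HEAD_DIM = 4, 256, 4, 64

for n in (1_000, 2_000, 4_000):
    print(
        n,
        causal_attention_scores(n),
        kv_cache_values(n, N_LAYERS, D_MODEL),
        recurrent_state_values(N_LAYERS, N_HEADS, HEAD_DIM),
    )
```

In this sketch, doubling the sequence length roughly quadruples the number of attention scores and doubles the cache, while the recurrent state stays the same size.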

Case Study: Performance of RWKV-7

RWKV-7, developed by a collaboration of researchers from institutions such as the RWKV Project and Tsinghua University, sets a new state of the art (SoTA) at the 3 billion parameter scale for multilingual tasks. Despite being trained on fewer tokens than competing models, RWKV-7 delivers comparable results on English language tasks while requiring only constant memory and constant inference time per token.

Key Innovations of RWKV-7

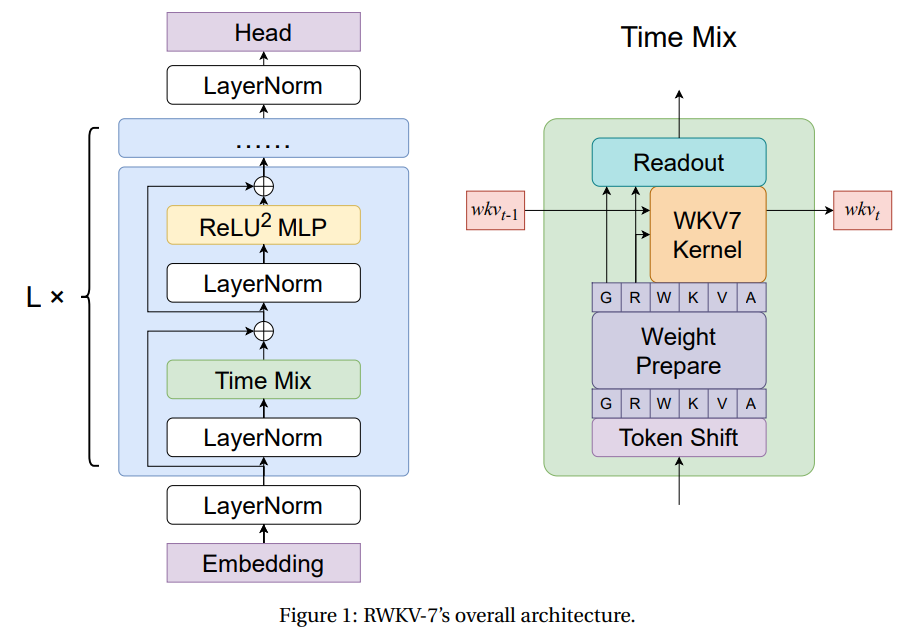

RWKV-7 builds on its predecessor, RWKV-6. Components of the architecture include:

- Token-Shift Mechanism: Blends each token's representation with that of the previous token, giving the model direct access to local context.

- Bonus Mechanisms: Give extra weight to the current token's own contribution when the output is computed.

- ReLU² Feedforward Network: Applies a squared ReLU activation, max(0, x)², in the feedforward layers, which is reported to improve computational stability.
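As a rough illustration of two of the components listed above, here is a minimal sketch of token shift and a ReLU² feedforward layer in plain Python. It assumes a static, per-channel mixing weight (`mu`) and two bias-free weight matrices (`w_in`, `w_out`); these names and the simplified form are illustrative assumptions, not the model's actual implementation.

```python
def token_shift(tokens, mu):
    """Blend each token vector with the previous one, channel by channel.

    tokens: list of equal-length float lists
    mu: per-channel mixing weights in [0, 1] (0 keeps the current token only)
    The first token is blended with a zero vector.
    """
    shifted = []
    prev = [0.0] * len(mu)
    for x in tokens:
        shifted.append([xi + m * (pi - xi) for xi, pi, m in zip(x, prev, mu)])
        prev = x
    return shifted


def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]


def relu_squared_ffn(x, w_in, w_out):
    """Feedforward layer with a squared ReLU: w_out @ (max(0, w_in @ x) ** 2)."""
    hidden = [max(0.0, h) ** 2 for h in matvec(w_in, x)]
    return matvec(w_out, hidden)


print(token_shift([[1.0, 2.0], [3.0, 4.0]], mu=[0.5, 0.0]))
# [[0.5, 2.0], [2.0, 4.0]]
print(relu_squared_ffn([1.0, -1.0], w_in=[[2.0, 0.0], [0.0, 1.0]], w_out=[[1.0, 1.0]]))
# [4.0]
```

The first channel uses `mu = 0.5`, so it averages the current and previous token; the second uses `mu = 0.0`, so it passes the current token through unchanged.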

Technical Enhancements

The architecture employs vector-valued state gating and in-context learning rates, which enable state tracking and the recognition of all regular languages (formal languages in the automata-theory sense, rather than natural languages). At each step it updates a weighted key-value (WKV) state, and a vector-valued decay governs how much of the previous state is kept, playing a role similar to a traditional forget gate.
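The idea of a gated key-value state can be sketched in a few lines. The example below is a simplified delta-rule style update with a per-channel decay and a per-channel learning rate; it is an illustrative assumption about the general technique, not the exact RWKV-7 formulation, and all names (`update_state`, `read_state`, `w`, `a`, `k_hat`) are hypothetical.

```python
def update_state(state, w, a, k_hat, k, v):
    """One simplified gated delta-rule step on a (value_dim x key_dim) state.

    w: per-channel decay in [0, 1], acting like a forget gate
    a: per-channel learning rate in [0, 1], controlling how much old content is removed
    k_hat: key used to look up the value being replaced
    k, v: key and value to write
    """
    # Value currently stored under k_hat.
    old = [sum(s * kh for s, kh in zip(row, k_hat)) for row in state]
    return [
        [s * wj - old_i * aj * khj + vi * kj
         for s, wj, aj, khj, kj in zip(row, w, a, k_hat, k)]
        for row, old_i, vi in zip(state, old, v)
    ]


def read_state(state, r):
    """Read out the value associated with query vector r."""
    return [sum(s * rj for s, rj in zip(row, r)) for row in state]


state = [[0.0, 0.0], [0.0, 0.0]]
ones = [1.0, 1.0]
key = [1.0, 0.0]

# Write a value, then overwrite it under the same key with no decay.
state = update_state(state, ones, ones, key, key, [5.0, 7.0])
state = update_state(state, ones, ones, key, key, [3.0, 2.0])
print(read_state(state, key))  # [3.0, 2.0]
```

With no decay and a learning rate of 1, the second write replaces the first value instead of adding to it, which is the behavior that lets a state of fixed size track changing information.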

Performance Insights

Evaluated using the LM Evaluation Harness, RWKV-7 demonstrated competitive performance across numerous benchmarks while using significantly fewer training tokens. Notably, it performed well on associative recall and long-context retention tasks, indicating that it can handle complex inputs efficiently.

Comparative Efficiency

RWKV-7 achieves strong results while using fewer floating point operations (FLOPs) than leading transformer models, which makes it a potentially cost-effective option for businesses aiming to leverage AI.
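For a back-of-the-envelope view of how token count drives compute, the sketch below uses the common rule of thumb that training a dense model costs roughly 6 FLOPs per parameter per token. Applying this approximation to RWKV-style models is an assumption, and the parameter and token counts are hypothetical placeholders, not figures from the RWKV-7 evaluation.

```python
def approx_training_flops(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb training compute: about 6 FLOPs per parameter per token."""
    return 6.0 * n_params * n_tokens


# Hypothetical numbers for illustration only.
fewer_tokens = approx_training_flops(3e9, 2e12)
more_tokens = approx_training_flops(3e9, 6e12)

print(f"{fewer_tokens:.2e} vs {more_tokens:.2e} FLOPs")
print(f"ratio: {more_tokens / fewer_tokens:.1f}x")
```

Under this approximation, two models of the same size differ in training compute in direct proportion to the number of tokens they are trained on.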

Recommendations for Businesses

To harness the capabilities of RWKV-7 and similar AI technologies, businesses can adopt the following strategies:

- Automate Processes: Identify tasks or processes that can benefit from automation, particularly in customer interactions.

- Set Clear KPIs: Define key performance indicators to measure the impact of AI investments effectively.

- Select Custom Tools: Choose AI tools that align with your specific business needs and allow for customization.

- Start Small: Begin with a manageable project, assess its effectiveness, and gradually expand the use of AI tools within your operations.

Conclusion

In summary, RWKV-7 offers a notable approach to sequence modeling, pairing efficient inference with competitive performance that can benefit businesses. It provides a framework for handling complex tasks at reduced cost while maintaining high parameter efficiency. As organizations explore AI integration, RWKV-7 is a compelling example of how emerging technologies can transform business operations.
