Introduction to Multi-modal Large Language Models (MLLMs)

Multi-modal Large Language Models (MLLMs) have advanced significantly, evolving into multi-modal agents that assist humans with a variety of tasks. On PCs, however, these agents face challenges that their smartphone counterparts do not.

Challenges in GUI Automation for PCs

PC interfaces are dense with interactive elements, many of them icons without clear textual labels, which makes it hard for agents to interpret the screen and act accurately. Even a sophisticated model such as Claude-3.5 reaches only 24% accuracy on user interface tasks. Productivity tasks on PCs also involve intricate workflows that span multiple applications, and performance drops sharply as those workflows get longer. For instance, GPT-4o's success rate falls from 41.8% at the subtask level to just 8% on complete instructions.
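The gap between subtask-level and instruction-level success is roughly what compounding errors would predict. As a rough illustration only, the sketch below assumes that an instruction is a chain of independent subtasks that each succeed with the same probability. That independence assumption, the function name `chain_success`, and the subtask counts are all hypothetical and do not come from the source.

```python
def chain_success(p_subtask: float, n_subtasks: int) -> float:
    """End-to-end success probability for n independent subtasks run in sequence.

    Assumes every subtask succeeds with the same probability and that
    failures are independent (a simplification for illustration).
    """
    if not 0.0 <= p_subtask <= 1.0:
        raise ValueError("p_subtask must be a probability in [0, 1]")
    if n_subtasks < 0:
        raise ValueError("n_subtasks must be non-negative")
    return p_subtask ** n_subtasks


if __name__ == "__main__":
    # 0.418 is the subtask-level success rate quoted above for GPT-4o.
    for n in (1, 2, 3, 4):
        print(f"{n} subtask(s): {chain_success(0.418, n):.1%}")
```

Under this simplified model, three chained subtasks at 41.8% each succeed together only about 7% of the time, which is the same order of magnitude as the 8% reported for complete instructions.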

Existing Solutions and Their Limitations

Previous frameworks have attempted to tackle the complexity of PC tasks with different strategies. UFO uses a dual-agent architecture to separate application selection from control interactions, while AgentS enhances planning with online search and local memory. However, both approaches struggle with fine-grained perception and the handling of on-screen text, which is essential for tasks like document editing. Additionally, they often overlook the complex dependencies between subtasks, leading to suboptimal performance in everyday PC workflows.

Introducing the PC-Agent Framework

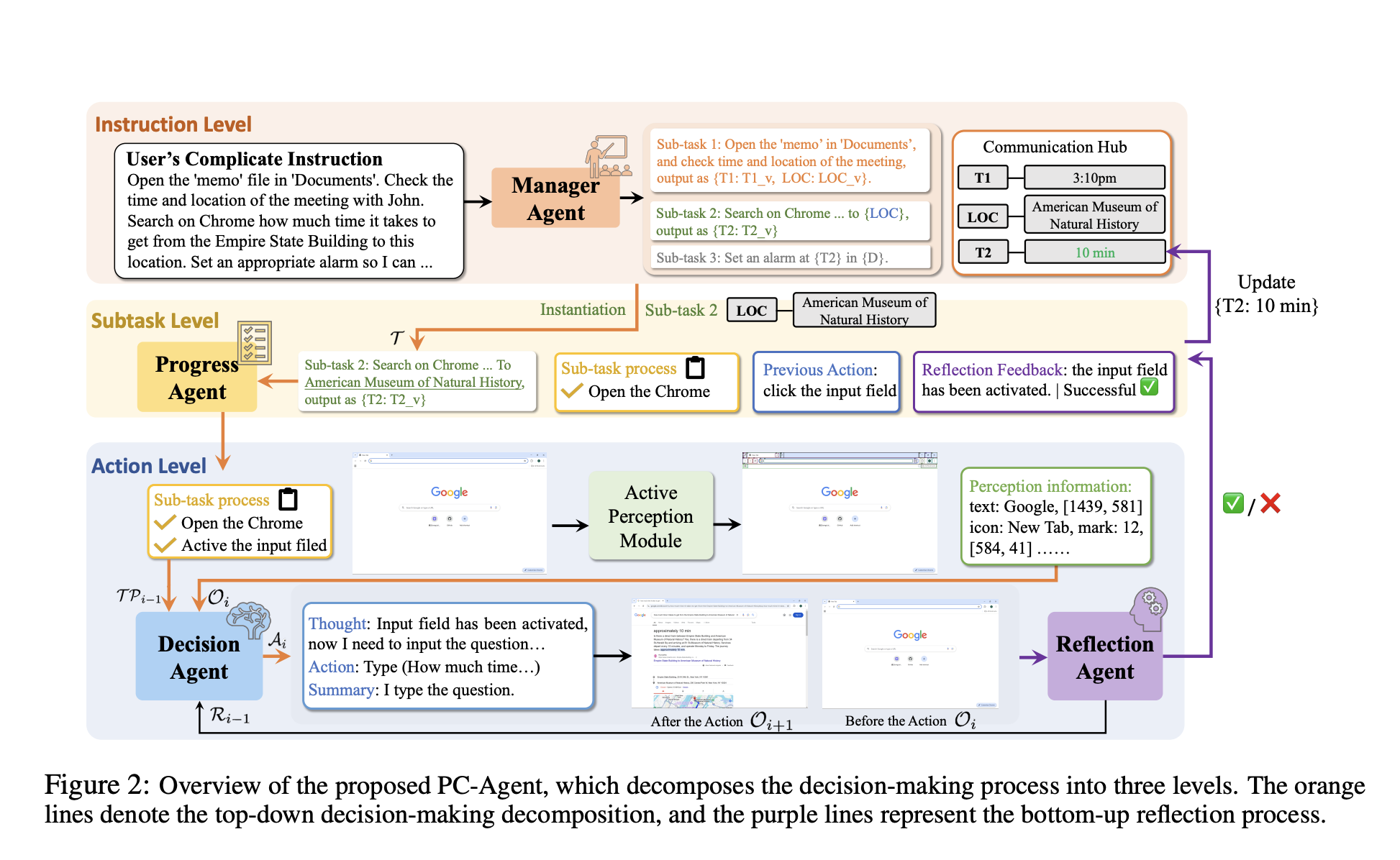

Researchers have developed the PC-Agent framework, designed to address these challenges through three innovative approaches:

1. Active Perception Module

This module enhances fine-grained interaction by accurately identifying interactive elements using accessibility trees, integrated with intention understanding and optical character recognition (OCR) for precise text localization.
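The two perception paths described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not PC-Agent's implementation: the `UiNode` and `OcrWord` records stand in for whatever an accessibility API and an OCR engine would return, the set of interactive control types is a guess, and the target phrase is assumed to have already been chosen by the intention-understanding step.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Box:
    left: int
    top: int
    right: int
    bottom: int

    def center(self) -> tuple[int, int]:
        """Point an agent could click to hit this region."""
        return ((self.left + self.right) // 2, (self.top + self.bottom) // 2)


@dataclass(frozen=True)
class UiNode:  # hypothetical stand-in for an accessibility-tree node
    name: str
    control_type: str
    box: Box
    enabled: bool = True


@dataclass(frozen=True)
class OcrWord:  # hypothetical stand-in for one OCR result
    text: str
    box: Box


# Assumed list of control types worth exposing to the agent.
INTERACTIVE_TYPES = {"Button", "Edit", "MenuItem", "CheckBox", "ComboBox", "ListItem"}


def interactive_elements(nodes: list[UiNode]) -> list[UiNode]:
    """Keep only enabled nodes whose control type is interactive."""
    return [n for n in nodes if n.enabled and n.control_type in INTERACTIVE_TYPES]


def locate_text(words: list[OcrWord], target: str) -> Optional[Box]:
    """Find consecutive OCR words matching the target phrase (case-insensitive)
    and return the bounding box that covers all of them."""
    wanted = target.lower().split()
    if not wanted:
        return None
    for i in range(len(words) - len(wanted) + 1):
        window = words[i:i + len(wanted)]
        if [w.text.lower() for w in window] == wanted:
            return Box(
                min(w.box.left for w in window),
                min(w.box.top for w in window),
                max(w.box.right for w in window),
                max(w.box.bottom for w in window),
            )
    return None
```

Splitting perception this way keeps element lookup cheap and structured, while text that never appears in the accessibility tree, such as a phrase inside a document body, is still reachable through OCR coordinates.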

2. Hierarchical Multi-Agent Collaboration

The framework features a three-level decision-making process:

- The Manager Agent breaks down instructions into manageable subtasks and oversees dependencies.

- The Progress Agent tracks the operation history and summarizes progress on the current subtask.

- The Decision Agent executes actions based on perception and progress data.
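The division of labor among the three agents can be sketched as follows. This is a simplified illustration, not the framework's code: the MLLM-backed agents are replaced by plain Python stand-ins, the names `Subtask`, `order_subtasks`, `ProgressTracker`, and `run_subtask` are hypothetical, and the dependency handling is reduced to a topological sort using the standard-library `graphlib` module (Python 3.9+).

```python
from __future__ import annotations

from dataclasses import dataclass, field
from graphlib import TopologicalSorter
from typing import Callable


@dataclass
class Subtask:
    name: str
    depends_on: list[str] = field(default_factory=list)


def order_subtasks(subtasks: list[Subtask]) -> list[str]:
    """Manager role: return subtask names so that dependencies run first.

    Raises graphlib.CycleError if the dependencies are circular.
    """
    graph = {s.name: set(s.depends_on) for s in subtasks}
    return list(TopologicalSorter(graph).static_order())


class ProgressTracker:
    """Progress role: record executed actions and summarize them."""

    def __init__(self) -> None:
        self.history: list[str] = []

    def record(self, action: str) -> None:
        self.history.append(action)

    def summary(self) -> str:
        last = self.history[-1] if self.history else "none"
        return f"{len(self.history)} step(s) done; last action: {last}"


def run_subtask(
    subtask: str,
    decide: Callable[[str, str], str],
    max_steps: int = 10,
) -> list[str]:
    """Decision role: `decide` is a stand-in for the model call. It receives
    the subtask and a progress summary and returns the next action, or
    "DONE" when the subtask is finished."""
    tracker = ProgressTracker()
    for _ in range(max_steps):
        action = decide(subtask, tracker.summary())
        if action == "DONE":
            break
        tracker.record(action)
    return tracker.history
```

Passing the Decision stand-in a short progress summary rather than the full history mirrors the idea that each level only sees the context it needs.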

3. Reflection-based Dynamic Decision-Making

A Reflection Agent evaluates whether each executed action had the intended effect and feeds the result back to the other agents, so that errors can be corrected and the plan adjusted while the task is still running.
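A reflection loop of this kind can be sketched as below. The sketch makes several assumptions that are not in the source: screen states are represented as plain strings rather than screenshots, the three verdict labels are illustrative, and `propose` and `apply` are hypothetical callables standing in for the Decision Agent and the GUI, respectively.

```python
from __future__ import annotations

from enum import Enum
from typing import Callable, Optional


class Verdict(Enum):
    SUCCESS = "success"          # the screen reached the expected state
    NO_EFFECT = "no_effect"      # the action changed nothing
    WRONG_STATE = "wrong_state"  # the screen changed, but not as intended


def reflect(before: str, after: str, expected: str) -> Verdict:
    """Compare the state before and after an action against the expectation."""
    if after == expected:
        return Verdict.SUCCESS
    if after == before:
        return Verdict.NO_EFFECT
    return Verdict.WRONG_STATE


def act_with_reflection(
    state: str,
    propose: Callable[[str, Optional[str]], str],
    apply: Callable[[str, str], str],
    expected: str,
    max_attempts: int = 3,
) -> tuple[str, int]:
    """Retry an action until reflection reports success.

    Returns the final state and the number of attempts used. Feedback from
    the previous attempt is passed to `propose` so it can change course.
    """
    feedback: Optional[str] = None
    for attempt in range(1, max_attempts + 1):
        action = propose(state, feedback)
        new_state = apply(state, action)
        verdict = reflect(state, new_state, expected)
        if verdict is Verdict.SUCCESS:
            return new_state, attempt
        feedback = f"{action!r} resulted in {verdict.name}"
        state = new_state
    raise RuntimeError(f"no success after {max_attempts} attempts: {feedback}")
```

The key design point is that the verdict flows back into the next proposal, so a click that had no effect is not simply repeated.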

Architecture and Functionality

The PC-Agent architecture formalizes GUI interaction as a decision process: at each step, the agent takes the user instruction, the current observation, and the operation history, and determines the next action. The Active Perception Module uses tools like pywinauto to extract the accessibility tree for element recognition, and combines MLLM-driven intention understanding with OCR for text localization.
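One way to write this formalization down is shown below. The symbols are chosen here for illustration and are not taken from the source: $I$ is the user instruction, $o_t$ the observation at step $t$, $h_{t-1}$ the history of earlier steps, and $\pi$ the agent's decision function.

$$
a_t = \pi\left(I,\; o_t,\; h_{t-1}\right), \qquad h_t = h_{t-1} \cup \{(o_t, a_t)\}
$$

Read this way, the hierarchy described earlier splits a single decision function into cooperating parts: one that rewrites $I$ into subtasks, one that condenses $h_{t-1}$, and one that picks $a_t$.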

Experimental Results

Tests indicate that PC-Agent outperforms existing single-agent and multi-agent solutions. Single-agent models such as GPT-4o consistently fall short on complex tasks, with even the best reaching only a 12% success rate. Multi-agent frameworks show minor improvements but are still held back by perception and dependency issues. PC-Agent, by contrast, exceeds the success rate of UFO by 44% and of AgentS by 32%, which the results tie to its combination of active perception, hierarchical collaboration, and reflection.

Conclusion

The PC-Agent framework marks a substantial step forward in automating complex PC tasks. It improves fine-grained interaction with on-screen elements and text, decomposes decision-making into manageable parts, and allows errors to be corrected as they occur. The benchmark results indicate that PC-Agent handles the complexity of typical PC productivity scenarios better than earlier approaches.

