Building an Interactive Multimodal Image-Captioning Application

This tutorial walks through building an interactive multimodal image-captioning application using Google Colab, Salesforce’s BLIP model, and Streamlit for the web interface. Multimodal models, which process images and text together, underpin tasks such as image captioning and visual question answering. The guide covers environment setup, the application code, and public access through ngrok, and it does not assume extensive machine learning experience.

Setting Up the Environment

First, we need to install the necessary dependencies for building the application:

!pip install transformers torch torchvision streamlit Pillow pyngrok

This command installs:

- Transformers: For the BLIP model

- Torch & Torchvision: For deep learning and image processing

- Streamlit: For creating the user interface

- Pillow: For handling image files

- pyngrok: For exposing the app online
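After the install finishes, it can be useful to confirm that each package is actually importable before moving on. The following is a minimal sketch using only the standard library; the REQUIRED mapping and the find_missing helper are names introduced here for illustration, not part of the original tutorial. Note that Pillow is installed under the name Pillow but imported as PIL.

```python
import importlib.util

# Map each pip package name to the module name used in import statements.
# Pillow is the only one here whose import name (PIL) differs from its pip name.
REQUIRED = {
    "transformers": "transformers",
    "torch": "torch",
    "torchvision": "torchvision",
    "streamlit": "streamlit",
    "Pillow": "PIL",
    "pyngrok": "pyngrok",
}


def find_missing(packages):
    """Return the pip names whose import module cannot be located."""
    return [
        pip_name
        for pip_name, module in packages.items()
        if importlib.util.find_spec(module) is None
    ]


if __name__ == "__main__":
    missing = find_missing(REQUIRED)
    print("Missing packages:", missing if missing else "none")
```

If the printed list is not empty, rerunning the pip command for the listed packages is usually enough.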

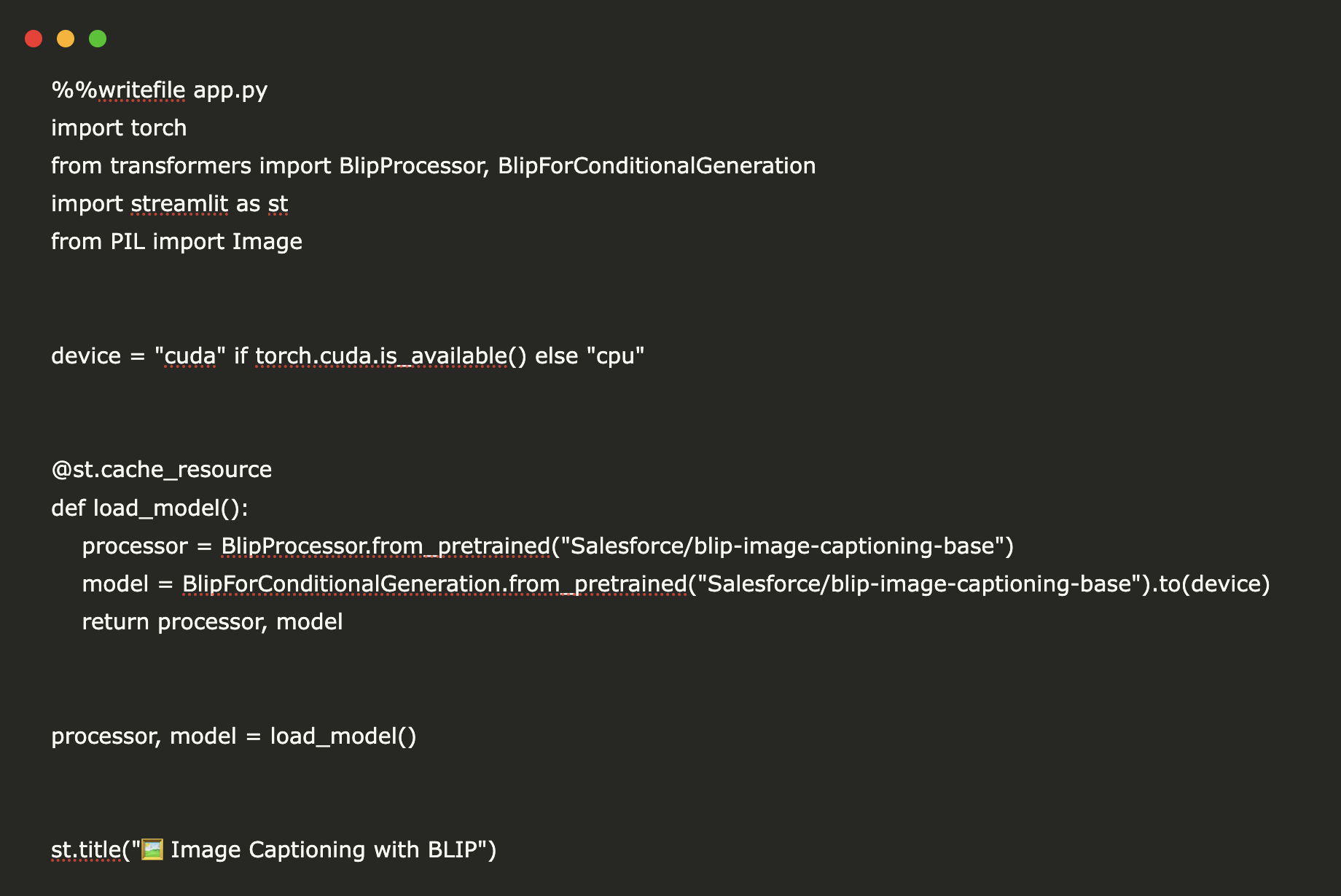

Creating the Application

Next, we will create a Streamlit-based multimodal image-captioning app using the BLIP model. The following code loads BlipProcessor and BlipForConditionalGeneration from the Hugging Face Transformers library, allowing the app to preprocess images and generate captions:

import torch
import streamlit as st
from PIL import Image
from transformers import BlipProcessor, BlipForConditionalGeneration

# Use the GPU when one is available; otherwise fall back to the CPU.
device = "cuda" if torch.cuda.is_available() else "cpu"

@st.cache_resource
def load_model():
    # Cached by Streamlit, so the weights are loaded once rather than on every rerun.
    processor = BlipProcessor.from_pretrained("Salesforce/blip-image-captioning-base")
    model = BlipForConditionalGeneration.from_pretrained("Salesforce/blip-image-captioning-base").to(device)
    return processor, model

processor, model = load_model()

st.title("Image Captioning App")

The excerpt above covers model loading and the page title; the rest of the interface lets users upload an image, display it, and generate a caption with a button click. The @st.cache_resource decorator caches the loaded processor and model so they are created once rather than on every script rerun, and the model runs on a CUDA GPU when one is available, falling back to the CPU otherwise.
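The upload, preview, and caption steps described above might look like the following. This is a minimal sketch, not the original author's code: generate_caption and render_captioning_ui are hypothetical helper names, the widget labels and the max_new_tokens value of 30 are arbitrary choices, and the processor and model arguments are assumed to be the objects returned by load_model().

```python
def generate_caption(image, processor, model, device="cpu", max_new_tokens=30):
    """Caption one image with a BLIP-style processor and model pair."""
    # The processor turns the image into model-ready tensors.
    inputs = processor(images=image, return_tensors="pt").to(device)
    # generate() returns token IDs; max_new_tokens=30 is an arbitrary cap.
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Decode the first (and only) sequence back into text.
    return processor.decode(output_ids[0], skip_special_tokens=True).strip()


def render_captioning_ui(processor, model, device="cpu"):
    """Hypothetical helper: draw the upload widget, preview, and caption button."""
    # Imported lazily so generate_caption can be reused without Streamlit.
    import streamlit as st
    from PIL import Image

    uploaded = st.file_uploader("Upload an image", type=["jpg", "jpeg", "png"])
    if uploaded is None:
        return  # Nothing to caption until the user picks a file.

    image = Image.open(uploaded).convert("RGB")  # normalize alpha/grayscale modes
    st.image(image, caption="Uploaded image")

    if st.button("Generate caption"):
        with st.spinner("Generating caption..."):
            st.write(generate_caption(image, processor, model, device))
```

In the app script, calling render_captioning_ui(processor, model, device) after st.title(...) would add these widgets to the page. Keeping the captioning logic in its own function also makes it possible to try it in a notebook cell without starting Streamlit.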

Making the App Publicly Accessible

Finally, we will make the Streamlit app running in Google Colab publicly accessible using ngrok:

from pyngrok import ngrok

NGROK_TOKEN = "use your own NGROK token here"
ngrok.set_auth_token(NGROK_TOKEN)

public_url = ngrok.connect(8501)
print("Public URL:", public_url)

This step does the following:

- Authenticates ngrok using your personal token to create a secure tunnel.

- Exposes the Streamlit app running on port 8501 to an external URL.

- Prints the public URL for accessing the app in any browser.

- Relies on the Streamlit app already running in the background on port 8501; the snippet itself only opens the tunnel.
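The ngrok snippet does not show how the Streamlit process itself is started. One way to do both steps together is sketched below, under several assumptions: the app code has been saved to a file called app.py (a hypothetical name), the token is read from an environment variable named NGROK_AUTH_TOKEN (also a name chosen here, used instead of hardcoding the token), and a recent pyngrok release is installed in which ngrok.connect returns a tunnel object with a public_url attribute.

```python
import os
import subprocess
import sys


def build_streamlit_command(script_path, port=8501):
    """Build the argument list for running a Streamlit script headlessly."""
    return [
        sys.executable, "-m", "streamlit", "run", script_path,
        "--server.port", str(port),
        "--server.headless", "true",  # do not try to open a local browser
    ]


def launch_with_tunnel(script_path="app.py", port=8501):
    """Start Streamlit in the background, then open an ngrok tunnel to it."""
    # Imported lazily so the command builder works without pyngrok installed.
    from pyngrok import ngrok

    # NGROK_AUTH_TOKEN is a variable name assumed for this sketch.
    token = os.environ.get("NGROK_AUTH_TOKEN")
    if token:
        ngrok.set_auth_token(token)

    # Popen returns immediately, so the server keeps running in the background.
    process = subprocess.Popen(
        build_streamlit_command(script_path, port),
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    tunnel = ngrok.connect(port)
    return process, tunnel.public_url
```

Calling launch_with_tunnel() returns the background process and the public URL; calling process.terminate() later stops the server. Anyone who has the URL can reach the app while the tunnel is open, so it is worth shutting it down when you are finished.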

This method allows remote interaction with your image captioning app, even though Google Colab does not provide direct web hosting.

Conclusion

We have built a multimodal image-captioning app powered by Salesforce’s BLIP and Streamlit, and exposed it from a Google Colab environment through an authenticated ngrok tunnel. The exercise shows how a pretrained machine learning model can be wrapped in a simple web interface, and it provides a foundation for exploring and customizing other multimodal applications.
