Advancements in Multimodal AI

Recent developments in multimodal large language models have significantly improved AI’s ability to analyze complex visual and textual information. However, challenges remain, particularly in mathematical reasoning tasks. Traditional multimodal AI systems often struggle with mathematical problems that involve visual contexts or geometric configurations, indicating a need for specialized models that can handle these complexities more effectively.

Introducing MMR1-Math-v0-7B

Researchers at Nanyang Technological University (NTU) have released the MMR1-Math-v0-7B model and the MMR1-Math-RL-Data-v0 dataset to address these challenges. The model is designed specifically for mathematical reasoning in multimodal tasks. Notably, it reports leading results among open-source 7B-parameter models while training on a small dataset of only 6,000 samples.

Efficient Training Methodology

The model was fine-tuned using only 6,000 carefully selected data samples. The researchers used a balanced selection strategy so that the data covers a diverse range of problem difficulties and mathematical reasoning types, with an emphasis on more complex problems.
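One way to picture a balanced selection strategy is a round-robin draw over buckets of problems. The sketch below is illustrative only and is not the researchers' actual pipeline: the `difficulty` and `topic` fields, the function name `balanced_sample`, and the uniform round-robin rule are all assumptions, and the sketch does not model any extra weighting toward harder problems.

```python
import random
from collections import defaultdict


def balanced_sample(problems, total, seed=0):
    """Pick up to `total` problems, spread evenly over (difficulty, topic) buckets.

    `problems` is a list of dicts with hypothetical "difficulty" and "topic" keys.
    """
    rng = random.Random(seed)

    # Group problems by their (difficulty, topic) pair.
    buckets = defaultdict(list)
    for problem in problems:
        buckets[(problem["difficulty"], problem["topic"])].append(problem)

    # Shuffle inside each bucket so the picks are random within a bucket.
    for items in buckets.values():
        rng.shuffle(items)

    # Take one problem per bucket in turn, so no single bucket dominates.
    keys = sorted(buckets)
    selected = []
    while len(selected) < total and any(buckets[k] for k in keys):
        for key in keys:
            if buckets[key] and len(selected) < total:
                selected.append(buckets[key].pop())
    return selected
```

With a pool that is heavily skewed toward easy problems, this draw still returns the same number of items from every bucket until a bucket runs out, which is the property a balanced selection is meant to provide.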

Advanced Architecture and Training

MMR1-Math-v0-7B is built on the Qwen2.5-VL multimodal model and trained with Group Relative Policy Optimization (GRPO), a reinforcement learning method. Training ran for 15 epochs and took approximately six hours on 64 NVIDIA H100 GPUs.
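In the commonly published formulation of GRPO, several responses are sampled for the same prompt, and each response's reward is normalized against the mean and standard deviation of that group, so no separate value model is needed. The sketch below shows only that advantage step under stated assumptions: the function name, the use of the population standard deviation, and the `eps` constant are illustrative choices, and this is not the MMR1 training code.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-6):
    """Normalize each reward against the other rewards in its group.

    `rewards` holds one scalar reward per sampled response to a single prompt.
    `eps` (an assumed constant) avoids division by zero when all rewards match.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; some implementations use sample std
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four sampled answers to one math problem: two correct (1.0), two wrong (0.0).
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
```

Correct answers receive a positive advantage and incorrect ones a negative advantage of the same size. When every response in a group earns the same reward, all advantages are zero and that prompt contributes no learning signal.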

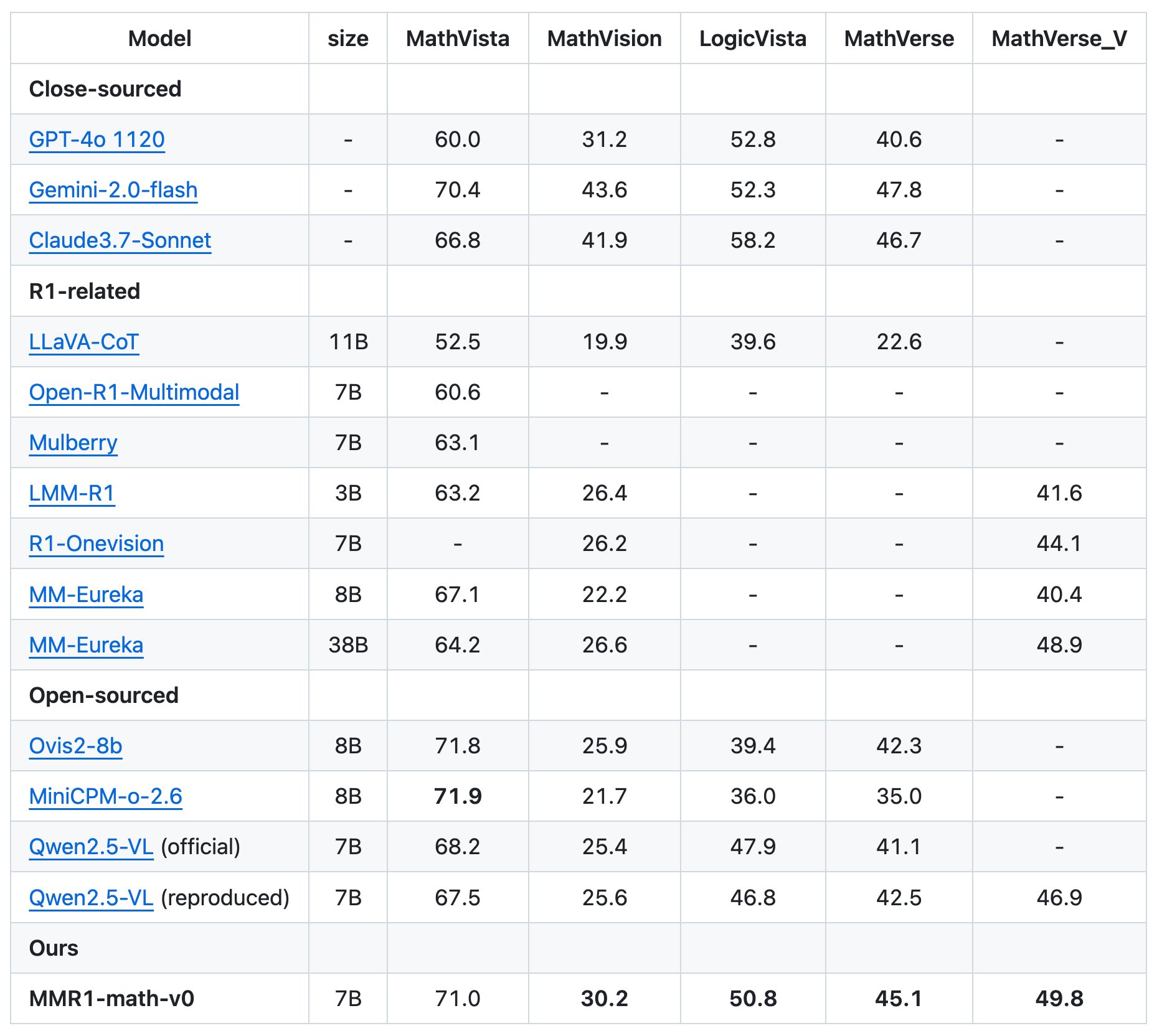

Benchmark Performance

The model was evaluated on established benchmarks using VLMEvalKit. It achieved 71.0% accuracy on MathVista, outperforming models such as Qwen2.5-VL and LMM-R1. It also scored 30.2% on MathVision and performed well on LogicVista and MathVerse, which suggests that its reasoning generalizes across benchmarks.

Key Takeaways

- MMR1-Math-v0-7B sets a new benchmark for multimodal mathematical reasoning among open-source 7B parameter models.

- It achieves superior performance with a small training dataset of only 6,000 samples.

- Training with GRPO took approximately six hours on 64 NVIDIA H100 GPUs.

- The complementary MMR1-Math-RL-Data-v0 dataset includes 5,780 diverse and challenging multimodal math problems.

- It outperforms multimodal models such as Qwen2.5-VL and LMM-R1 on MathVista, with 71.0% accuracy.
