Understanding AI in Video Processing

Handling video sequences efficiently is central to accurate AI-based video analysis. Many current models do not process a video as a continuous flow, so they miss motion details and break continuity, which leads to incomplete event tracking. Long videos add a second problem: computational cost grows with the number of frames, and workarounds such as frame skipping can discard vital information and reduce accuracy.
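To make the cost argument concrete, here is a rough back-of-the-envelope sketch. The function name and all numbers (clip length, sampling rate, tokens per frame) are hypothetical and chosen only for illustration; they are not taken from any specific model.

```python
def visual_token_count(duration_s: float, fps: float, tokens_per_frame: int) -> int:
    """Visual tokens produced if every sampled frame is encoded and kept."""
    num_frames = int(duration_s * fps)
    return num_frames * tokens_per_frame


# Hypothetical setup: a 10-minute clip, 256 tokens per frame.
full = visual_token_count(duration_s=600, fps=1.0, tokens_per_frame=256)
skipped = visual_token_count(duration_s=600, fps=0.25, tokens_per_frame=256)

print(full)     # tokens when sampling one frame per second
print(skipped)  # tokens when keeping one frame every four seconds
```

Skipping frames cuts the token count in proportion to the frames dropped, but everything that happens between the kept frames is never seen by the model.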

Current Limitations of Video-Language Models

Video-language models typically treat a video as a series of independent static frames, which makes motion and continuity hard to represent. The language model is then left to infer temporal relations on its own, which limits comprehension. Subsampling frames reduces the computational load but can omit important details and hurt accuracy. Token reduction methods exist, but they can add complexity without significantly improving performance.
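The trade-off of frame subsampling can be illustrated with a minimal sketch of uniform sampling. The helper `uniform_sample_indices` and the frame counts are assumptions made for this example, not part of any particular model's pipeline.

```python
from typing import List


def uniform_sample_indices(num_frames: int, num_samples: int) -> List[int]:
    """Pick `num_samples` frame indices spread evenly over the video."""
    if num_samples >= num_frames:
        return list(range(num_frames))
    if num_samples == 1:
        return [num_frames // 2]
    step = (num_frames - 1) / (num_samples - 1)
    return [round(i * step) for i in range(num_samples)]


# Hypothetical video: 300 frames, of which only 8 are kept.
indices = uniform_sample_indices(num_frames=300, num_samples=8)

# Hypothetical short event that spans frames 100 to 109.
event_frames = set(range(100, 110))
captured = event_frames.intersection(indices)

print(indices)
print(len(captured))  # number of event frames that survived sampling
```

In this setup the sampled indices jump from frame 85 to frame 128, so the ten-frame event falls entirely into the gap and no sampled frame contains it.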

Introducing STORM: A Practical Solution

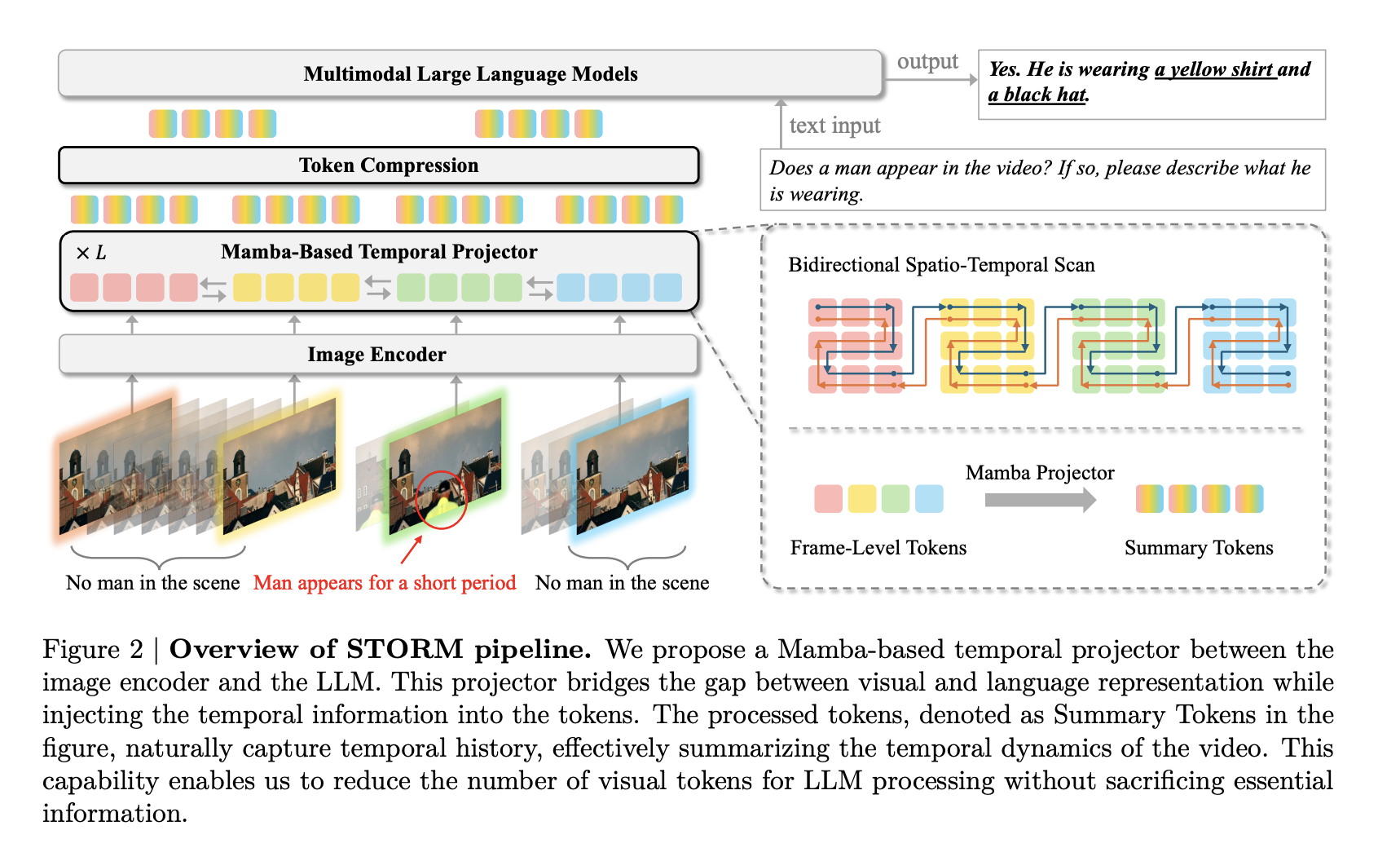

To tackle these issues, researchers from leading institutions developed STORM (Spatiotemporal Token Reduction for Multimodal LLMs), an architecture designed for efficient long-video processing. Unlike methods that leave temporal reasoning to the language model, STORM integrates temporal information directly at the token level, which improves computational efficiency and reduces redundancy.

Key Features of STORM

STORM uses Mamba layers for temporal modeling, with a bidirectional scanning module that captures dependencies across the spatial and temporal dimensions. The temporal encoder processes both image and video inputs, integrating global context while capturing motion. Token compression techniques then reduce the computational cost, allowing the system to run on a single GPU without specialized hardware.
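The article does not spell out which compression operations are used, so the following is only a sketch of one common option, temporal average pooling, written in plain Python. The function name, the window size, and the toy token values are all assumptions for illustration and should not be read as STORM's actual implementation.

```python
from typing import List

Frame = List[List[float]]  # tokens_per_frame x embedding_dim


def temporal_average_pool(frames: List[Frame], window: int) -> List[Frame]:
    """Average each token position over non-overlapping groups of `window` frames."""
    if window < 1:
        raise ValueError("window must be >= 1")
    pooled = []
    for start in range(0, len(frames), window):
        group = frames[start:start + window]  # the last group may be shorter
        pooled_frame = [
            # Average every embedding dimension across the frames in the group.
            [sum(values) / len(group) for values in zip(*token_versions)]
            # Iterate over the same token position in each frame of the group.
            for token_versions in zip(*group)
        ]
        pooled.append(pooled_frame)
    return pooled


# Toy input: 8 frames, 4 tokens per frame, 2-dimensional embeddings.
# Each token's value is simply its frame index, so the averages are easy to check.
frames = [[[float(t)] * 2 for _ in range(4)] for t in range(8)]
pooled = temporal_average_pool(frames, window=4)

tokens_before = len(frames) * len(frames[0])
tokens_after = len(pooled) * len(pooled[0])
print(tokens_before, tokens_after)
```

Pooling like this discards less when the tokens already carry temporal context from an earlier encoder, which is the motivation the article gives for modeling time at the token level before reducing tokens.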

Successful Validation and Performance

Extensive experiments validated STORM. The model was trained on existing datasets in two stages: alignment, followed by supervised fine-tuning. STORM outperformed existing models and achieved state-of-the-art results on several long-video benchmarks. The Mamba module notably reduced inference time and improved performance, especially on tasks that require understanding broader context.

Conclusion: Future Implications

In summary, STORM improves long-video understanding by combining token-level temporal modeling with efficient token reduction. It can serve as a reference point for future research on token compression and multimodal alignment while keeping computational demands low.

