Challenges with Large Language Models

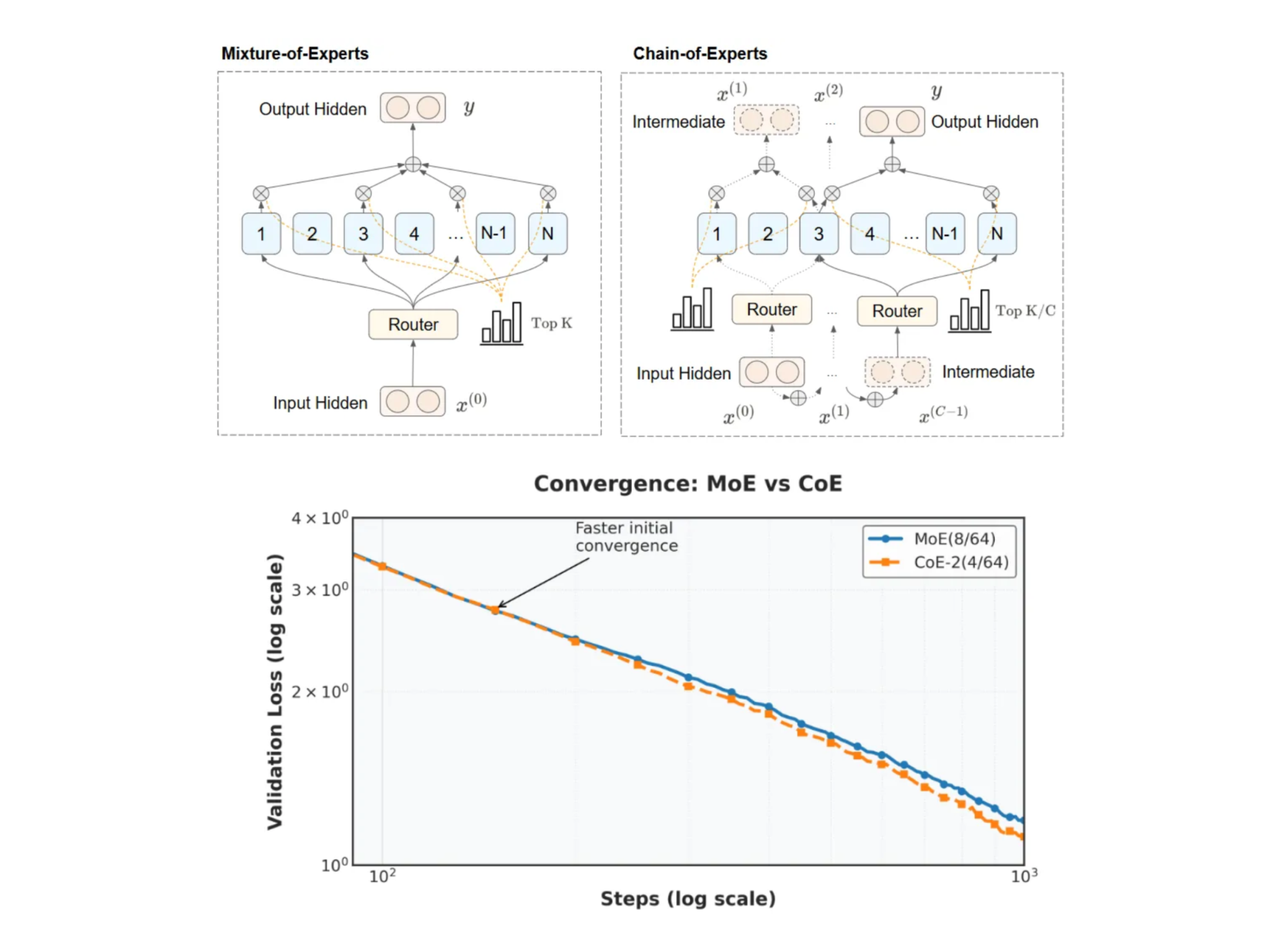

Large language models have greatly expanded what AI systems can do, but scaling them efficiently remains difficult. Traditional Mixture-of-Experts (MoE) architectures activate only a few experts for each token to save on computation. This design, however, leads to two main issues:

- Experts work independently, limiting the model’s ability to integrate diverse perspectives.

- Despite sparse activation, every expert must still be held in memory, so the high total parameter count requires significant memory resources.

These challenges indicate that while MoE models enhance scalability, their design may restrict both performance and resource efficiency.

The Chain-of-Experts (CoE) Approach

The Chain-of-Experts (CoE) method rethinks MoE architectures by enabling sequential communication among experts. Unlike traditional MoE models, which route each token through its selected experts once per layer, CoE processes tokens over a series of iterations within each layer. The output of one iteration's experts becomes the input to the next iteration, so later experts build on and refine what earlier experts produced.

Technical Details and Benefits

The CoE method replaces the single expert pass with an iterative one. For example, in a configuration like CoE-2(4/64), the model processes each token over two iterations, selecting four experts from a pool of 64 in each iteration. This contrasts with traditional MoE setups, which make a single pass through one selected group of experts.
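To make the notation concrete, here is a minimal sketch that reads a label such as CoE-2(4/64) into its three numbers. The `CoEConfig` class and `parse_coe` helper are hypothetical names introduced only for this illustration; they are not part of any published CoE code.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class CoEConfig:
    """Hypothetical container for the three numbers in a CoE label."""
    iterations: int             # how many sequential passes per layer
    experts_per_iteration: int  # experts selected in each pass
    total_experts: int          # size of the expert pool

    @property
    def expert_calls_per_token(self) -> int:
        # Each iteration runs its own selected experts, so the calls add up.
        return self.iterations * self.experts_per_iteration


def parse_coe(label: str) -> CoEConfig:
    """Parse a label of the form CoE-<iterations>(<selected>/<pool>)."""
    match = re.fullmatch(r"CoE-(\d+)\((\d+)/(\d+)\)", label)
    if match is None:
        raise ValueError(f"not a CoE label: {label!r}")
    iterations, selected, pool = map(int, match.groups())
    return CoEConfig(iterations, selected, pool)


cfg = parse_coe("CoE-2(4/64)")
print(cfg.iterations, cfg.experts_per_iteration, cfg.total_experts)  # 2 4 64
print(cfg.expert_calls_per_token)  # 8
```

Under this reading, CoE-2(4/64) runs eight expert evaluations per token per layer, spread over two sequential passes of four.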

A key feature of CoE is its independent gating mechanism. In conventional MoE models, gating decisions are made once per token per layer. CoE instead makes a separate gating decision in each iteration, so the router in a later iteration can react to what earlier experts produced. This flexibility fosters specialization, because experts can be chosen based on prior information.
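The gating idea can be sketched in a few lines of NumPy. This is an illustration under assumptions, not the authors' implementation: the top-k softmax router, the one-router-matrix-per-iteration layout, the dimensions, and the stand-in update of the hidden state are all choices made here for the example.

```python
import numpy as np


def top_k_gate(hidden, router_weights, k):
    """Pick the k highest-scoring experts and softmax-normalise their scores."""
    scores = router_weights @ hidden               # one score per expert
    top = np.argsort(scores)[-k:][::-1]            # best expert first
    exp = np.exp(scores[top] - scores[top].max())  # numerically stable softmax
    return top, exp / exp.sum()


rng = np.random.default_rng(0)
d_model, n_experts, k, n_iterations = 16, 64, 4, 2

# Assumption: one independent router matrix per iteration.
routers = rng.normal(size=(n_iterations, n_experts, d_model))
hidden = rng.normal(size=d_model)

selections = []
for t in range(n_iterations):
    experts_t, weights_t = top_k_gate(hidden, routers[t], k)
    selections.append((experts_t, weights_t))
    print(f"iteration {t}: experts {experts_t.tolist()}")
    # Stand-in for the real expert computation, so the next router
    # sees a state that has changed since the previous iteration.
    hidden = hidden + 0.1 * np.tanh(hidden)
```

Because each iteration has its own router and sees an updated hidden state, the set of selected experts is free to differ from one iteration to the next.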

Additionally, CoE incorporates inner residual connections. Rather than adding the original token representation back once after all processing is done, CoE adds each iteration's input to that iteration's output. This preserves the token representation from step to step and lets each iteration contribute an incremental improvement.
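The difference between the two residual placements is easiest to see side by side. The sketch below is a simplified illustration: `expert_mix` is a hypothetical stand-in for the gated mixture of experts in one iteration, and the random weights are assumptions chosen only so the code runs.

```python
import numpy as np


def expert_mix(h, weight):
    """Hypothetical stand-in for one iteration's gated mixture of experts."""
    return np.tanh(weight @ h)


def outer_residual(x, weights):
    """Chain the iterations, then add the original token back once at the end."""
    h = x
    for w in weights:
        h = expert_mix(h, w)
    return x + h


def inner_residual(x, weights):
    """Add the current state back inside every iteration."""
    h = x
    for w in weights:
        h = h + expert_mix(h, w)
    return h


rng = np.random.default_rng(0)
x = rng.normal(size=8)
weights = rng.normal(scale=0.5, size=(2, 8, 8))  # two iterations

print("outer:", outer_residual(x, weights).round(3))
print("inner:", inner_residual(x, weights).round(3))
```

With a single iteration the two variants coincide. They diverge from the second iteration onward, where the inner variant feeds the next step a state that still contains the token itself rather than only the previous experts' output.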

Experimental Results and Insights

Empirical studies highlight the advantages of the Chain-of-Experts method. For instance, in controlled experiments focused on math tasks, configurations like CoE-2(4/64) reached a validation loss of 1.12, down from 1.20 for a traditional MoE model, without increasing memory or computational costs.

Furthermore, increasing iterations in CoE can match or exceed the performance improvements gained by adding more experts in a single pass. CoE configurations have demonstrated up to an 18% reduction in memory usage while achieving similar or superior performance outcomes.

The sequential design of CoE also allows for significantly more expert combinations (up to 823 times more than traditional methods), leading to richer processing pathways and potentially more specialized outputs.
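A quick count shows why chaining multiplies the number of possible routing paths. The article does not say which baseline the 823 figure is measured against, so the sketch below does not try to reproduce it. It assumes a simple counting model in which each iteration independently picks an unordered set of four experts out of 64.

```python
from math import comb

n_experts, k, iterations = 64, 4, 2

# Ways to choose one unordered set of k experts in a single pass.
single_pass = comb(n_experts, k)  # 635376

# With independent gating, every iteration makes its own choice,
# so the number of possible sequences of choices multiplies.
chained = single_pass ** iterations

print(f"one pass of {k}/{n_experts}: {single_pass:,} expert sets")
print(f"{iterations} chained passes: {chained:,} sequences of expert sets")
```

The count grows exponentially with the number of iterations, while the number of experts evaluated per token grows only linearly.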

Conclusion

The Chain-of-Experts framework signifies a thoughtful evolution in sparse neural network design. By fostering sequential communication among experts, CoE addresses the limitations of traditional MoE models while enhancing efficiency. The independent gating mechanism and inner residual connections create a more flexible and resource-efficient approach to scaling large language models.

Preliminary experimental results suggest that CoE can yield meaningful improvements in performance and resource utilization. This approach encourages further investigation into how iterative communication might be refined in future models, ultimately contributing to more sustainable AI applications.

Next Steps for Businesses

- Explore how AI technology can transform your work processes and identify areas for automation.

- Determine key performance indicators (KPIs) to assess the impact of AI investments on your business.

- Select AI tools that align with your needs and allow for customization.

- Start with a small AI project, gather data on its effectiveness, and expand its use gradually.
