Challenges of Large Language Models in Complex Reasoning

Large Language Models (LLMs) face difficulties with complex reasoning tasks, largely because of the computational demands of long Chain-of-Thought (CoT) sequences. Every additional reasoning token adds processing time and memory usage, so a balance must be struck between reasoning accuracy and computational efficiency.
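As a rough illustration of why long CoT sequences are costly, the sketch below estimates two quantities for a decoder-only transformer. The first is the key/value (KV) cache kept in memory, which grows linearly with the number of tokens. The second is the number of attention scores computed during generation, which grows quadratically. The model shape (`num_layers`, `num_kv_heads`, `head_dim`) is hypothetical and not taken from any specific model, and the helper names are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelConfig:
    """Hypothetical decoder-only transformer shape (illustrative values, not a real model)."""
    num_layers: int
    num_kv_heads: int
    head_dim: int
    bytes_per_elem: int = 2  # fp16 / bf16


def kv_cache_bytes(cfg: ModelConfig, seq_len: int, batch: int = 1) -> int:
    """Bytes held in the key/value cache after `seq_len` tokens (linear in seq_len).

    The leading factor 2 counts one key and one value vector per head per layer.
    """
    per_token = 2 * cfg.num_layers * cfg.num_kv_heads * cfg.head_dim * cfg.bytes_per_elem
    return per_token * seq_len * batch


def attention_pairs(num_generated: int, prompt_len: int = 0) -> int:
    """Query-key scores (per head, per layer) computed while generating tokens one by one.

    The i-th new token (0-based) attends to prompt_len + i + 1 positions,
    so the total grows quadratically with the length of the chain of thought.
    """
    return sum(prompt_len + i + 1 for i in range(num_generated))


if __name__ == "__main__":
    cfg = ModelConfig(num_layers=48, num_kv_heads=8, head_dim=128)
    for n in (500, 1000, 2000):
        mib = kv_cache_bytes(cfg, n) / 2**20
        print(f"{n} CoT tokens: KV cache ~{mib:.1f} MiB, attention scores {attention_pairs(n):,}")
```

Under these assumed dimensions, doubling the CoT length doubles the KV cache but roughly quadruples the attention work. This is why shortening the reasoning chain can pay off.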

Existing Approaches to Efficient Reasoning

Several strategies have been proposed to address these challenges:

- Simplifying Reasoning: Streamlining the reasoning process by removing unnecessary steps.

- Parallel Generation: Generating independent reasoning steps in parallel rather than strictly one after another, to reduce latency.

- Latent Representations: Compressing reasoning into continuous representations, avoiding explicit token generation.

- Prompt Compression: Using lightweight models to identify and keep only highly informative tokens, so that long, complex instructions can be processed more efficiently.
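The prompt-compression idea can be sketched as follows. A unigram self-information score, estimated from a small reference corpus, stands in for the "lightweight model". Real systems use trained language models or classifiers instead, so treat the scorer and the helper names (`unigram_self_information`, `compress_prompt`) as hypothetical. Tokens are ranked by score, and the top fraction is kept in its original order.

```python
import math
from collections import Counter


def unigram_self_information(reference_tokens: list[str]) -> dict[str, float]:
    """Toy stand-in for a lightweight scoring model: -log2 p(token) from a reference corpus.

    Frequent tokens (e.g. "the") get low scores; rare tokens get high scores.
    """
    counts = Counter(reference_tokens)
    total = sum(counts.values())
    return {tok: -math.log2(c / total) for tok, c in counts.items()}


def compress_prompt(tokens: list[str], info: dict[str, float], keep_ratio: float) -> list[str]:
    """Keep the most informative `keep_ratio` fraction of tokens, in their original order."""
    if not 0 < keep_ratio <= 1:
        raise ValueError("keep_ratio must be in (0, 1]")
    # Tokens missing from the reference corpus are treated as maximally informative.
    default = max(info.values(), default=0.0)
    k = max(1, round(len(tokens) * keep_ratio))
    # Rank positions by score (highest first); ties go to the earlier position.
    ranked = sorted(range(len(tokens)), key=lambda i: (-info.get(tokens[i], default), i))
    return [tokens[i] for i in sorted(ranked[:k])]


if __name__ == "__main__":
    info = unigram_self_information("the cat sat on the mat the dog sat on the log".split())
    print(compress_prompt("the dog sat on the mat".split(), info, keep_ratio=0.5))
    # ['dog', 'sat', 'mat']
```

Re-sorting the kept positions preserves the original word order, so the compressed prompt stays readable to the downstream model.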

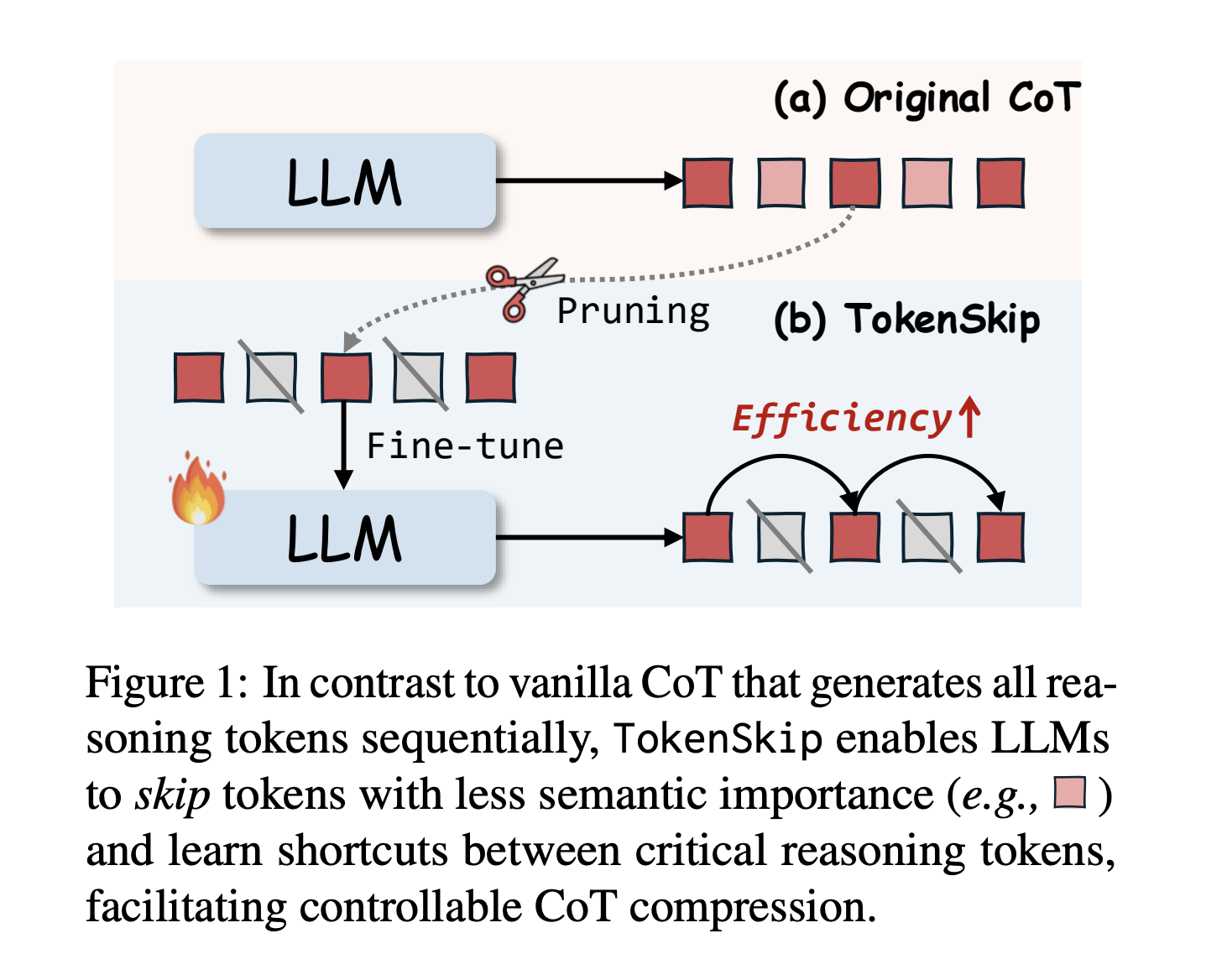

Introducing TokenSkip

Researchers have developed a method called TokenSkip to make CoT processing in LLMs more efficient. It trains models to skip less important tokens in their reasoning chains while preserving the tokens that carry essential reasoning links, thereby reducing computational overhead.

How TokenSkip Works

The TokenSkip method consists of two main phases:

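- Training Data Preparation: Full CoT outputs are compressed by scoring the importance of each token and pruning the least important ones. The model is then trained on these compressed CoTs.

- Inference: The model decodes autoregressively as usual, but because it was trained on compressed CoTs, it skips less important tokens and produces shorter reasoning chains.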
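The sketch below shows a minimal version of the two phases, under these assumptions. Importance scores are supplied as plain numbers; in practice they would come from a separate scoring model. The compression ratio `gamma` is the fraction of CoT tokens retained. The prompt template that writes `gamma` into the input is a hypothetical format chosen for illustration and is not necessarily the one used by the TokenSkip authors. The final answer is kept intact, and only the reasoning tokens are pruned.

```python
from dataclasses import dataclass


@dataclass
class TrainingExample:
    prompt: str  # model input
    target: str  # compressed reasoning + final answer the model learns to produce


def prune_cot(tokens: list[str], importance: list[float], gamma: float) -> list[str]:
    """Keep the top `gamma` fraction of CoT tokens by importance, preserving their order."""
    if len(tokens) != len(importance):
        raise ValueError("tokens and importance must have the same length")
    if not 0 < gamma <= 1:
        raise ValueError("gamma must be in (0, 1]")
    k = round(len(tokens) * gamma)
    # Highest importance first; ties go to the earlier token.
    ranked = sorted(range(len(tokens)), key=lambda i: (-importance[i], i))
    return [tokens[i] for i in sorted(ranked[:k])]


def format_prompt(question: str, gamma: float) -> str:
    # Hypothetical template: writing the target ratio into the input lets a single
    # fine-tuned model be asked for different compression levels.
    return f"Question: {question}\nCompression ratio: {gamma:.1f}\nReasoning:"


def build_example(question, cot_tokens, importance, answer, gamma) -> TrainingExample:
    """Phase 1 (training data preparation): compress one CoT into a training pair."""
    compressed = prune_cot(cot_tokens, importance, gamma)
    target = " ".join(compressed) + f" Answer: {answer}"
    return TrainingExample(prompt=format_prompt(question, gamma), target=target)


if __name__ == "__main__":
    # Made-up importance scores for a toy arithmetic CoT.
    cot = ["so", "12", "*", "4", "=", "48", ",", "then", "48", "+", "2", "=", "50"]
    imp = [0.1, 0.9, 0.8, 0.9, 0.5, 0.95, 0.05, 0.2, 0.7, 0.8, 0.9, 0.5, 0.95]
    ex = build_example("What is 12 * 4 + 2?", cot, imp, "50", gamma=0.6)
    print(ex.prompt)
    print(ex.target)  # 12 * 4 48 48 + 2 50 Answer: 50
```

At inference time, `format_prompt` would be called with the desired ratio, and the fine-tuned model would decode from it autoregressively. The decoding call is omitted because it depends on the model framework.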

Results and Benefits

Initial experiments indicate that larger models tolerate higher compression ratios with smaller performance losses. For example, Qwen2.5-14B-Instruct reduces reasoning token usage by 40% with only a 0.4% drop in performance. In these experiments, TokenSkip also outperforms other compression methods, preserving reasoning ability while achieving substantial efficiency gains.

Future Opportunities

The TokenSkip research opens new avenues for improving LLM efficiency while preserving robust reasoning capabilities. Businesses can leverage these advancements to enhance their AI applications.

Transform Your Business with AI

Explore how AI technology can benefit your work by considering the following steps:

- Identify processes that can be automated.

- Pinpoint customer interaction moments where AI adds value.

- Establish KPIs to measure the impact of your AI initiatives.

- Select customizable tools that align with your objectives.

- Start with small projects, evaluate effectiveness, and expand AI use gradually.

Need Assistance?

If you require guidance on managing AI in your business, please reach out to us at hello@itinai.ru. You can also follow us on Telegram, X, and LinkedIn.
