Understanding Large Language Models (LLMs)

Large Language Models (LLMs) are trained on vast amounts of data to produce clear, logical responses. Chain-of-Thought (CoT) reasoning lets them break a complex problem into a sequence of manageable intermediate steps, much as a person might work through it on paper. However, teaching models to produce such structured reasoning has been challenging and has typically required substantial computational power and large datasets. Recent work therefore focuses on data efficiency: reaching high reasoning accuracy with far less training data.

Challenges in Enhancing LLM Reasoning

One major challenge is training LLMs to produce long, structured CoT responses that include self-reflection and validation. Current models have made progress, but they often depend on expensive fine-tuning over large datasets. Many proprietary models do not disclose their training methods, which limits reproducibility and access. As a result, there is growing demand for data-efficient training techniques that preserve reasoning ability without high computational costs.
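To make "long, structured CoT with self-reflection and validation" more concrete, here is a minimal sketch, assuming a reasoning trace can be modeled as a list of labeled steps. The `Step` type, the three `StepKind` labels, and the rendered text format are hypothetical illustrations, not a format taken from any particular model or dataset.

```python
from dataclasses import dataclass
from enum import Enum


class StepKind(Enum):
    """Hypothetical labels for the kinds of steps in a long CoT trace."""
    REASON = "reason"    # ordinary forward reasoning
    REFLECT = "reflect"  # the model questions its own earlier step
    VERIFY = "verify"    # the model checks a result before answering


@dataclass
class Step:
    kind: StepKind
    text: str


def render_trace(steps: list[Step], answer: str) -> str:
    """Render a structured trace as plain text, one step per line."""
    lines = [f"Step {i} [{s.kind.value}]: {s.text}" for i, s in enumerate(steps, start=1)]
    lines.append(f"Final answer: {answer}")
    return "\n".join(lines)


def has_self_check(steps: list[Step]) -> bool:
    """True if the trace contains at least one reflection and one verification step."""
    kinds = {s.kind for s in steps}
    return StepKind.REFLECT in kinds and StepKind.VERIFY in kinds


# Example problem: three boxes hold 17 pens each, plus 4 loose pens.
trace = [
    Step(StepKind.REASON, "Three boxes of 17 pens: 17 * 3 = 51."),
    Step(StepKind.REASON, "Add the loose pens: 51 + 4 = 55."),
    Step(StepKind.REFLECT, "Wait, was it 17 or 71 pens per box? The problem says 17."),
    Step(StepKind.VERIFY, "Check: 55 - 4 = 51 and 51 / 3 = 17. Consistent."),
]
print(render_trace(trace, "55"))
print(has_self_check(trace))  # True
```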

Innovative Training Approaches

Traditional methods for improving LLM reasoning include full-parameter supervised fine-tuning (SFT) and parameter-efficient techniques such as Low-Rank Adaptation (LoRA). Both refine a pretrained model's reasoning without training it from scratch; LoRA further cuts cost by training only small low-rank adapter matrices while the original weights stay frozen. Models such as OpenAI’s o1-preview and DeepSeek R1 show strong reasoning, but reaching that level has still required large amounts of training data.
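LoRA's savings can be seen in a small calculation. Below is a minimal pure-Python sketch of the standard LoRA formulation: a frozen d×k weight W is adapted as W + (α/r)·B·A, where only B (d×r) and A (r×k) are trained, so the layer trains r(d+k) parameters instead of dk. The helper names and the 4096×4096, rank-64 example layer are illustrative assumptions, not the configuration used in the study.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def lora_effective_weight(w, b, a, alpha):
    """Return W + (alpha / r) * B @ A; W stays frozen, only B and A would be trained.

    w: d x k frozen weight, b: d x r adapter, a: r x k adapter.
    """
    r = len(a)
    delta = matmul(b, a)
    scale = alpha / r
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]


def trainable_fraction(d, k, r):
    """Fraction of a d x k layer's parameters that LoRA trains: r*(d+k) / (d*k)."""
    return r * (d + k) / (d * k)


# Tiny example: a 2x2 identity weight adapted with a rank-1 update.
print(lora_effective_weight([[1, 0], [0, 1]], [[1], [2]], [[3, 4]], alpha=2))
# Illustrative 4096 x 4096 layer with rank 64.
print(trainable_fraction(4096, 4096, 64))  # 0.03125, i.e. about 3.1% of the layer
```

The overall fraction for a whole model depends on which layers receive adapters and on the rank chosen, which is why it is reported as a model-level figure rather than derived from a single layer.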

Breakthrough from UC Berkeley

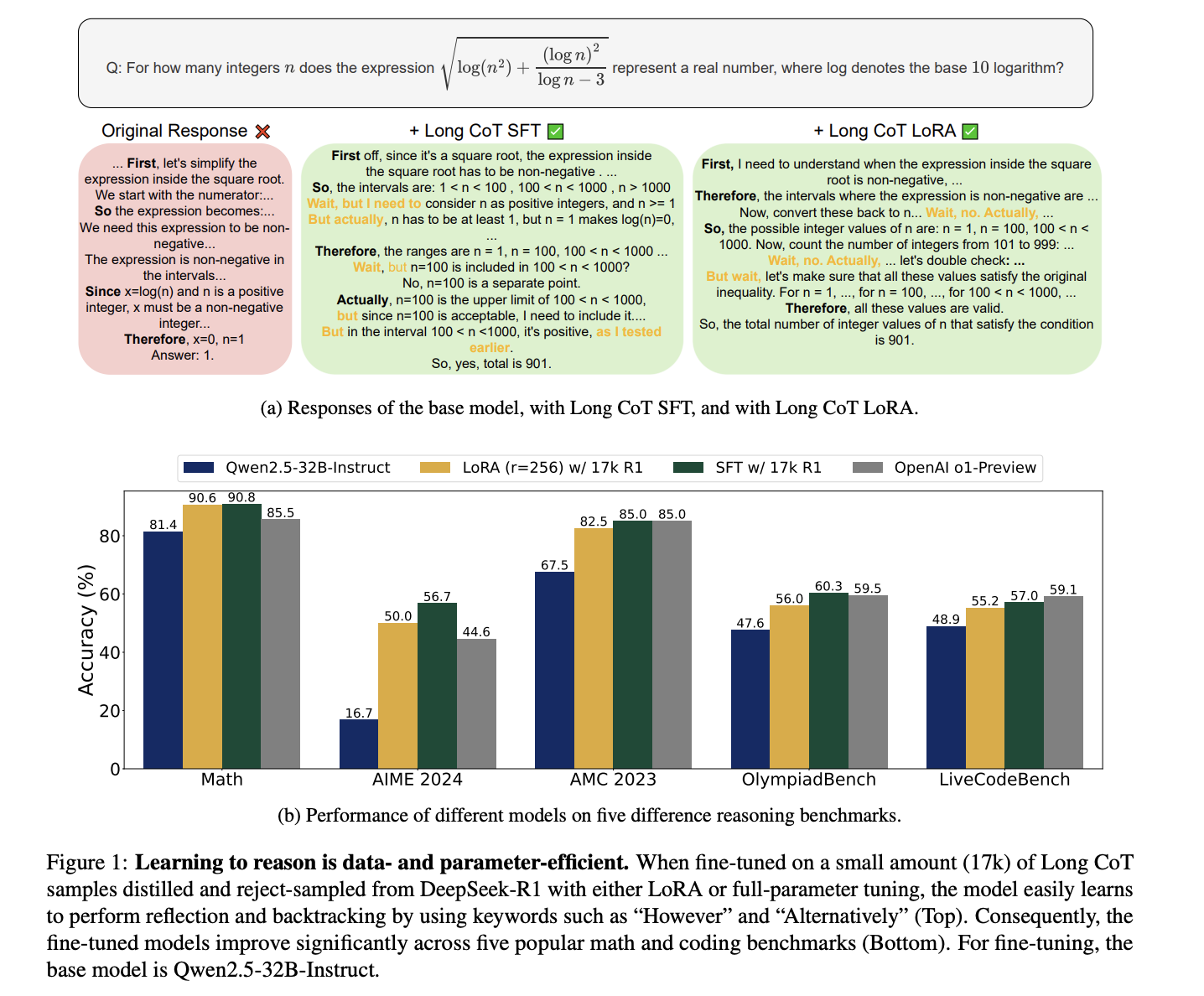

A research team from UC Berkeley has developed a training method that strengthens LLM reasoning using minimal data. Rather than relying on very large datasets, they fine-tuned the Qwen2.5-32B-Instruct model on only 17,000 long CoT examples. Their central insight is that what the model needs to learn from these examples is the structure of the reasoning steps rather than their exact content. This emphasis yields better logical consistency at lower computational cost, making the technique more accessible for a range of applications.
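Supervised fine-tuning on CoT examples commonly trains the model to reproduce the response while excluding the prompt from the loss. The following is a minimal sketch of that common practice, assuming already-tokenized integer IDs; the function name, token IDs, and truncation rule are hypothetical, since the article does not describe the team's data pipeline. The value -100 matches the default `ignore_index` of PyTorch's `CrossEntropyLoss`; the one-position shift for next-token prediction is assumed to happen later, inside the training loop.

```python
IGNORE_INDEX = -100  # default ignore_index of torch.nn.CrossEntropyLoss


def build_sft_example(prompt_ids, response_ids, max_len):
    """Concatenate prompt and response tokens and mask the prompt out of the loss.

    Returns (input_ids, labels). Labels equal the token IDs on response positions
    and IGNORE_INDEX on prompt positions, so only the CoT response is learned.
    Sequences longer than max_len are truncated from the end.
    """
    input_ids = (prompt_ids + response_ids)[:max_len]
    labels = ([IGNORE_INDEX] * len(prompt_ids) + response_ids)[:max_len]
    return input_ids, labels


# Hypothetical token IDs: 3 prompt tokens, 4 response tokens, truncated to 6.
inp, lab = build_sft_example([1, 2, 3], [10, 11, 12, 13], max_len=6)
print(inp)  # [1, 2, 3, 10, 11, 12]
print(lab)  # [-100, -100, -100, 10, 11, 12]
```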

Key Findings

The research shows that the structure of a CoT trace is what drives the performance gains. When the researchers disrupted the logical order of the reasoning steps in the training data, accuracy dropped significantly; when they altered the content of individual steps while keeping their order intact, accuracy barely changed. Preserving the logical sequence of the CoT is therefore crucial for strong reasoning. With LoRA, fine-tuning updated fewer than 5% of the model's parameters, an efficient alternative to full fine-tuning.
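The difference between perturbing structure and perturbing content can be illustrated with a minimal sketch, assuming a CoT trace is a list of step strings. `shuffle_steps` changes the structure (step order) while keeping every step's text, and `corrupt_numbers` changes the content (digits) while keeping the order. Both functions are hypothetical illustrations of the idea, not the researchers' exact perturbation procedures.

```python
import random
import re


def shuffle_steps(steps, seed=0):
    """Structural perturbation: keep each step's text, randomize the step order."""
    rng = random.Random(seed)
    perturbed = list(steps)  # copy so the original trace is untouched
    rng.shuffle(perturbed)
    return perturbed


def corrupt_numbers(steps, seed=0):
    """Content perturbation: keep the step order, replace every digit with a random digit."""
    rng = random.Random(seed)
    return [re.sub(r"\d", lambda _m: str(rng.randint(0, 9)), s) for s in steps]


steps = [
    "Let x be the number of apples; 3x + 4 = 19.",
    "Subtract 4 from both sides: 3x = 15.",
    "Divide by 3: x = 5.",
    "Check: 3 * 5 + 4 = 19. Correct.",
]

for s in shuffle_steps(steps, seed=1):
    print("shuffled: ", s)
for s in corrupt_numbers(steps, seed=1):
    print("corrupted:", s)
```

Per the finding described above, training on traces like the shuffled ones would be expected to hurt accuracy far more than training on traces like the digit-corrupted ones.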

Performance Improvements

After fine-tuning on 17,000 CoT samples, Qwen2.5-32B-Instruct achieved 56.7% accuracy on AIME 2024 (up 40.0 percentage points), 57.0% on LiveCodeBench (up 8.1 points), and 90.8% on Math-500 (up 6.0 points). These results show that data-efficient fine-tuning can deliver performance competitive with proprietary models such as OpenAI’s o1-preview.
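The reported gains can be sanity-checked with simple arithmetic. This sketch assumes each gain is an absolute percentage-point increase over the untuned Qwen2.5-32B-Instruct baseline; under that assumption it recovers the implied baselines (for example, 56.7 - 40.0 = 16.7% on AIME 2024) and the corresponding relative improvements. The baselines are derived here, not quoted from the paper.

```python
# Scores and gains as reported; gains are ASSUMED to be absolute percentage points.
results = {
    "AIME 2024": (56.7, 40.0),
    "LiveCodeBench": (57.0, 8.1),
    "Math-500": (90.8, 6.0),
}


def implied_baseline(score, gain_pp):
    """Baseline accuracy implied by a final score and an absolute gain."""
    return round(score - gain_pp, 1)


def relative_gain(score, gain_pp):
    """Relative improvement over the implied baseline, as a percentage."""
    base = score - gain_pp
    return round(100 * gain_pp / base, 1)


for name, (score, gain) in results.items():
    print(f"{name}: {implied_baseline(score, gain)}% -> {score}% "
          f"(+{gain} pp, {relative_gain(score, gain)}% relative)")
```

The distinction matters most for AIME 2024: a 40-point jump from a low baseline is a much larger relative change than the smaller gains on LiveCodeBench and Math-500.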

Conclusion

This research is a notable step toward more efficient LLM reasoning. By prioritizing the structural integrity of CoT training data over dataset size, the team achieved strong logical coherence with modest resources. The approach reduces reliance on extensive data while preserving robust reasoning, making capable reasoning models easier to scale and access. It also suggests that structure-focused fine-tuning is a promising direction for future model optimization.

Explore More

Check out the Paper and GitHub Page. All credit for this research goes to the researchers involved.
