Challenges in Current NLP Models

Transformer models have advanced natural language processing (NLP), but they still struggle with:

- Long Context Reasoning: Difficulty understanding and connecting information spread across long inputs.

- Multi-step Inference: Difficulty chaining several reasoning steps together.

- Numerical Reasoning: Weak performance on tasks that require working with numbers.

These problems stem from two sources. The first is the computational cost of self-attention, which grows quadratically with sequence length. The second is the lack of an effective memory mechanism, which limits the model's ability to connect information scattered across a long input. Existing approaches such as Recurrent Memory Transformers (RMT) and Retrieval-Augmented Generation (RAG) offer partial fixes, but they often trade away efficiency or adaptability.
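A quick calculation shows why the cost of self-attention matters for long inputs. In standard self-attention, every token is scored against every other token, so one head computes n × n scores for a sequence of n tokens. The snippet below is a back-of-the-envelope sketch, not taken from the LM2 work, that counts those scores for a single head in a single layer.

```python
def attention_scores_per_head(seq_len: int) -> int:
    """Number of query-key dot products one self-attention head computes."""
    return seq_len * seq_len

for n in (1_000, 4_000, 8_000):
    print(f"{n:>5} tokens -> {attention_scores_per_head(n):,} scores")
```

Doubling the context from 4,000 to 8,000 tokens quadruples the work, from 16,000,000 to 64,000,000 scores per head.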

Introducing the Large Memory Model (LM2)

Convergence Labs presents the Large Memory Model (LM2). It is a decoder-only Transformer augmented with an auxiliary memory module, designed to overcome the limitations of standard Transformers in long-context reasoning.

Key Features of LM2:

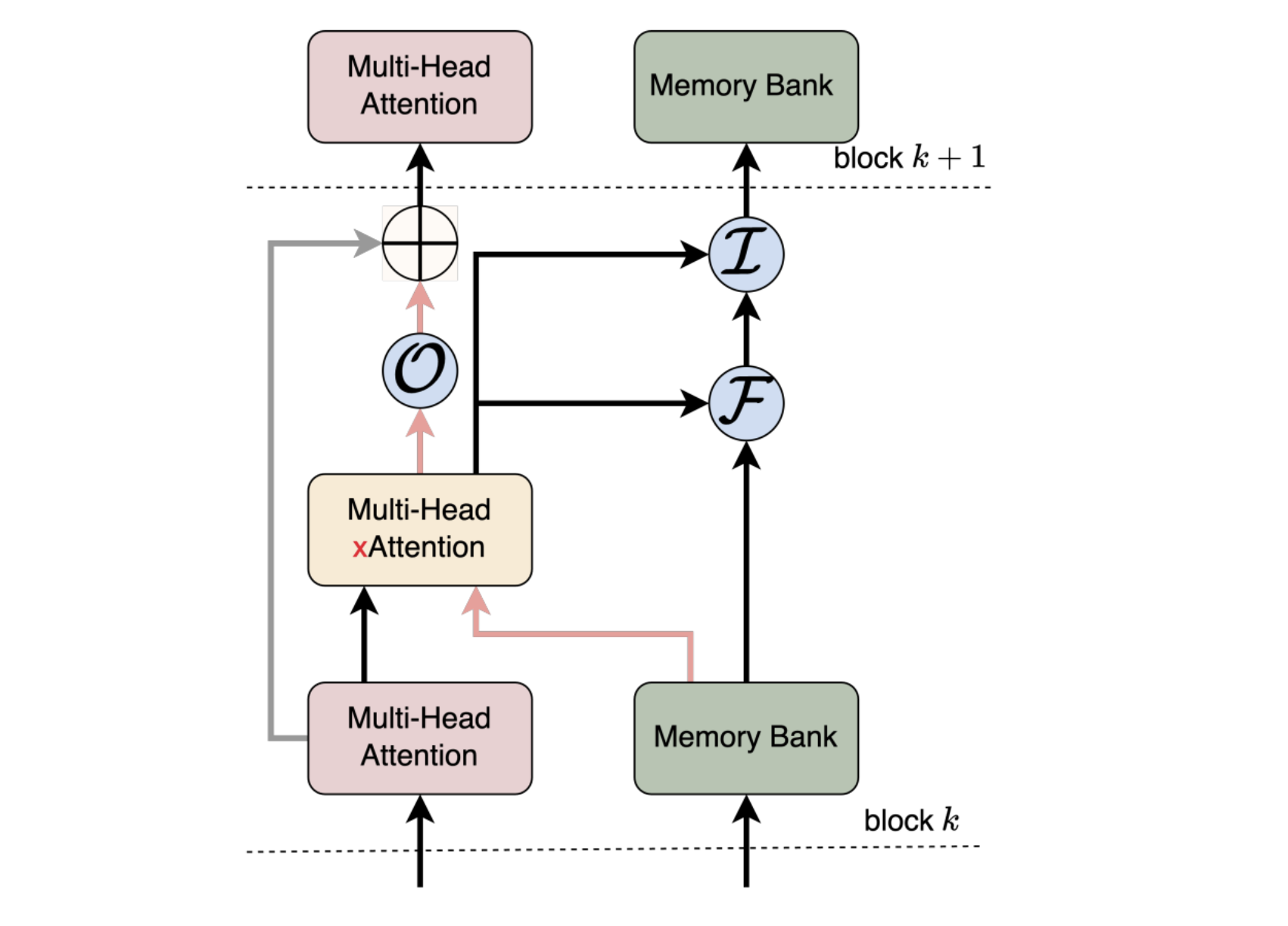

- Memory-Augmented Transformer: A dedicated memory bank stores long-term information. Input tokens retrieve relevant entries from it through cross-attention.

- Hybrid Memory Pathway: The original Transformer information flow is left intact, and memory is added through a separate pathway alongside it. This preserves the base model's general capabilities.

- Dynamic Memory Updates: Learnable input, forget, and output gates selectively update the memory. Important information is retained without clutter.
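The three features can be illustrated with a deliberately tiny sketch. It is not LM2's actual formulation, and it assumes several details the text does not give:

- There are no learned query/key/value projections.
- The gates are scalars computed from hypothetical weight vectors `w_out`, `w_in`, and `w_forget`.
- The memory update follows an LSTM-style rule, `new = g_forget * old + g_in * tanh(candidate)`.

The sketch only demonstrates three ideas: tokens read from a memory bank via cross-attention, the read is added through an output gate on top of the untouched token stream, and memory slots are rewritten through input and forget gates.

```python
import math
from typing import List

Vector = List[float]

def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Scaled dot-product attention for one query (no learned projections: an assumption)."""
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, k) / scale for k in keys])
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]

def memory_read(tokens: List[Vector], memory: List[Vector], w_out: Vector) -> List[Vector]:
    """Hybrid pathway: each token keeps its original value and adds a gated memory read."""
    outputs = []
    for tok in tokens:
        mem_out = attend(tok, memory, memory)  # token queries the memory bank (cross-attention)
        g_out = sigmoid(dot(w_out, mem_out))   # hypothetical scalar output gate in (0, 1)
        outputs.append([t + g_out * m for t, m in zip(tok, mem_out)])
    return outputs

def memory_update(tokens: List[Vector], memory: List[Vector],
                  w_in: Vector, w_forget: Vector) -> List[Vector]:
    """Gated update: each slot blends its old content with what it reads from the tokens."""
    new_memory = []
    for slot in memory:
        candidate = attend(slot, tokens, tokens)  # slot queries the current tokens
        g_in = sigmoid(dot(w_in, candidate))      # how much new information to write
        g_forget = sigmoid(dot(w_forget, slot))   # how much old content to keep
        new_memory.append([g_forget * s + g_in * math.tanh(c)
                           for s, c in zip(slot, candidate)])
    return new_memory

# Usage with toy 2-dimensional embeddings and two empty memory slots.
tokens = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
memory = [[0.0, 0.0], [0.0, 0.0]]
w_out, w_in, w_forget = [0.5, 0.5], [1.0, 1.0], [1.0, 1.0]

memory = memory_update(tokens, memory, w_in, w_forget)
hidden = memory_read(tokens, memory, w_out)
print(len(hidden), len(hidden[0]))  # 3 2
```

The memory read is added to each token rather than replacing it, so an all-zero memory, or an output gate of zero, leaves the original token stream unchanged. In this sense the memory pathway sits alongside the standard one instead of altering it.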

These features enable LM2 to handle long sequences efficiently, improving reasoning and inference capabilities.

Proven Results

LM2 was evaluated on the BABILong dataset, which focuses on memory-intensive reasoning tasks, with the following results:

- Short Context (0K): 92.5% accuracy, outperforming RMT (76.4%) and Llama-3.2 (40.7%).

- Long Context (1K–4K): Maintained higher accuracy with 55.9% at 4K context, compared to RMT (48.4%) and Llama-3.2 (36.8%).

- Extreme Long Context (≥8K): Performance remained more stable than the baselines', with the strongest results in multi-step inference and relational reasoning.
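The percentage-point gaps implied by the BABILong figures can be computed directly. The accuracies below are copied from the list, and the snippet does nothing beyond subtraction.

```python
# BABILong accuracies (%) as reported in the results list.
results = {
    "0K": {"LM2": 92.5, "RMT": 76.4, "Llama-3.2": 40.7},
    "4K": {"LM2": 55.9, "RMT": 48.4, "Llama-3.2": 36.8},
}

def margins(scores: dict, model: str = "LM2") -> dict:
    """Percentage-point lead of `model` over every other model, rounded to 0.1."""
    return {other: round(scores[model] - s, 1)
            for other, s in scores.items() if other != model}

for ctx, scores in results.items():
    print(ctx, margins(scores))
# 0K {'RMT': 16.1, 'Llama-3.2': 51.8}
# 4K {'RMT': 7.5, 'Llama-3.2': 19.1}
```

LM2 leads at both context lengths. Its margin over RMT narrows from 16.1 to 7.5 points between 0K and 4K, and its margin over Llama-3.2 narrows from 51.8 to 19.1 points.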

LM2 also achieved a 5.0% improvement over a pre-trained vanilla model on the MMLU dataset, with the largest gains in Humanities and Social Sciences. This indicates that the memory module improves reasoning without sacrificing general performance.

Conclusion

LM2 is a significant step toward addressing the limitations of standard Transformers in long-context reasoning. Its memory module improves multi-step inference and numerical reasoning while keeping the model efficient. In the experiments, LM2 outperformed RMT and Llama-3.2, especially on tasks that require retaining information over long contexts. These results suggest that integrating explicit memory can improve reasoning without sacrificing versatility.
