Understanding Regression Tasks and Their Challenges

Regression tasks predict continuous numeric values. The traditional approaches used for them have several limitations:

Limitations of Traditional Approaches

- Distribution Assumptions: Many methods, such as Gaussian models, assume normally distributed outputs, so they cannot represent skewed or multimodal targets.

- Data Requirements: These methods typically need a lot of labeled data.

- Complexity and Instability: They often depend on complex normalization of the target values, which can make training unstable.

A New Approach from Google DeepMind

Google DeepMind has introduced a method that treats numeric prediction as sequence generation: the model decodes a number token by token instead of emitting a single value.

Innovative Regression Formulation

- Tokenization of Numbers: Numbers are represented as sequences of tokens rather than single values, which increases flexibility.

- Auto-Regressive Decoding: The model generates a number one token at a time, each token conditioned on the ones before it, so it does not need to assume a particular output distribution.

- Improved Performance: It excels at modeling complex and multimodal distributions, leading to better density estimation and regression accuracy.
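
The link between token-by-token decoding and density estimation can be sketched in a few lines. This is a minimal illustration, not the paper's implementation. It assumes a target in [0, 1) written as K binary digits, so each token sequence addresses a bin of width 2^-K. `toy_next_token_probs` is a hypothetical stand-in for a trained decoder, and all function names are invented for this example.

```python
import math

def toy_next_token_probs(prefix):
    """Hypothetical stand-in for a trained decoder (binary tokens only).

    Returns [p(token=0), p(token=1)] given the tokens decoded so far.
    The first digit is biased toward 1; later digits are uniform.
    """
    if len(prefix) == 0:
        return [0.25, 0.75]
    return [0.5, 0.5]

def sequence_log_prob(tokens, next_token_probs):
    """Chain rule: log p(t_1..t_K) = sum over k of log p(t_k | t_<k)."""
    log_p = 0.0
    for k, tok in enumerate(tokens):
        probs = next_token_probs(tokens[:k])
        log_p += math.log(probs[tok])
    return log_p

def bin_density(tokens, next_token_probs, base=2):
    """Density on the bin of width base**-K addressed by `tokens`."""
    prob = math.exp(sequence_log_prob(tokens, next_token_probs))
    return prob * base ** len(tokens)

# Bins in the upper half of [0, 1) get three times the density of the lower half.
density_high = bin_density([1, 0, 1], toy_next_token_probs)
density_low = bin_density([0, 1, 1], toy_next_token_probs)
```

Because each step can put its probability mass anywhere, the resulting histogram over bins can take any shape, including multimodal ones.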

How the Model Works

The model uses two methods for representing numbers:

Tokenization Methods

- Normalized Tokenization: Scales numbers into a fixed, bounded range before encoding them as tokens, which allows more precise modeling within that range.

- Unnormalized Tokenization: Supports a broader range of numbers without normalization constraints.
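
The two schemes above can be sketched as follows. This is one plausible reading, not the authors' code. It assumes the normalized variant min-max scales the target and writes it as a fixed number of base-B digits, and that the unnormalized variant uses a scientific-notation layout (sign, exponent, mantissa digits). The function names, the `y_min`/`y_max` bounds, and the exact token layout are all hypothetical.

```python
def normalized_tokens(y, y_min, y_max, base=10, length=4):
    """Scale y into [0, 1] and emit `length` base-`base` digits."""
    unit = (y - y_min) / (y_max - y_min)
    # Map to an integer bin index, clamped so out-of-range values stay valid.
    idx = int(unit * base ** length)
    idx = max(0, min(idx, base ** length - 1))
    digits = []
    for _ in range(length):
        idx, d = divmod(idx, base)
        digits.append(d)
    return digits[::-1]

def detokenize_normalized(digits, y_min, y_max, base=10):
    """Invert `normalized_tokens`, returning the centre of the addressed bin."""
    idx = 0
    for d in digits:
        idx = idx * base + d
    unit = (idx + 0.5) / base ** len(digits)
    return y_min + unit * (y_max - y_min)

def unnormalized_tokens(y, mantissa_digits=4):
    """Sign token, exponent token, then mantissa digits. No scaling needed."""
    sign = "-" if y < 0 else "+"
    text = f"{abs(y):.{mantissa_digits - 1}e}"  # e.g. "1.234e+03"
    mantissa, exponent = text.split("e")
    digits = [int(c) for c in mantissa if c.isdigit()]
    return [sign, f"E{int(exponent):+d}"] + digits

norm = normalized_tokens(3.14159, y_min=0.0, y_max=10.0)
recovered = detokenize_normalized(norm, y_min=0.0, y_max=10.0)
unnorm = unnormalized_tokens(-1234.0)
```

The normalized form needs the target range up front but spends every token on precision. The unnormalized form spends tokens on sign and exponent in exchange for covering many orders of magnitude.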

Model Training

- The model is trained to predict the token sequence that encodes each target value.

- Training minimizes cross-entropy loss over the target tokens, which pushes the decoded numbers toward the true values.
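
As a rough sketch of that objective, the snippet below computes the mean cross-entropy of a target token sequence from per-step logits. It is written in plain Python for readability. The logits here are placeholders for whatever a real decoder would output, and the function names are invented for this example.

```python
import math

def softmax(logits):
    """Numerically stable softmax over one step's logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def token_cross_entropy(logits_per_step, target_tokens):
    """Mean negative log-likelihood of the target tokens."""
    nll = 0.0
    for logits, tok in zip(logits_per_step, target_tokens):
        nll -= math.log(softmax(logits)[tok])
    return nll / len(target_tokens)

targets = [3, 1, 4]  # digits of the target value

# An untrained model: uniform logits over 10 digit tokens at every step.
uniform_logits = [[0.0] * 10 for _ in targets]
loss_uniform = token_cross_entropy(uniform_logits, targets)

# A model that strongly favours the correct digit at every step.
confident_logits = [[5.0 if d == t else 0.0 for d in range(10)] for t in targets]
loss_confident = token_cross_entropy(confident_logits, targets)
```

With uniform logits the loss equals log(10), the cost of guessing each digit at random. It falls as the model concentrates probability on the correct digits.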

Results and Impact

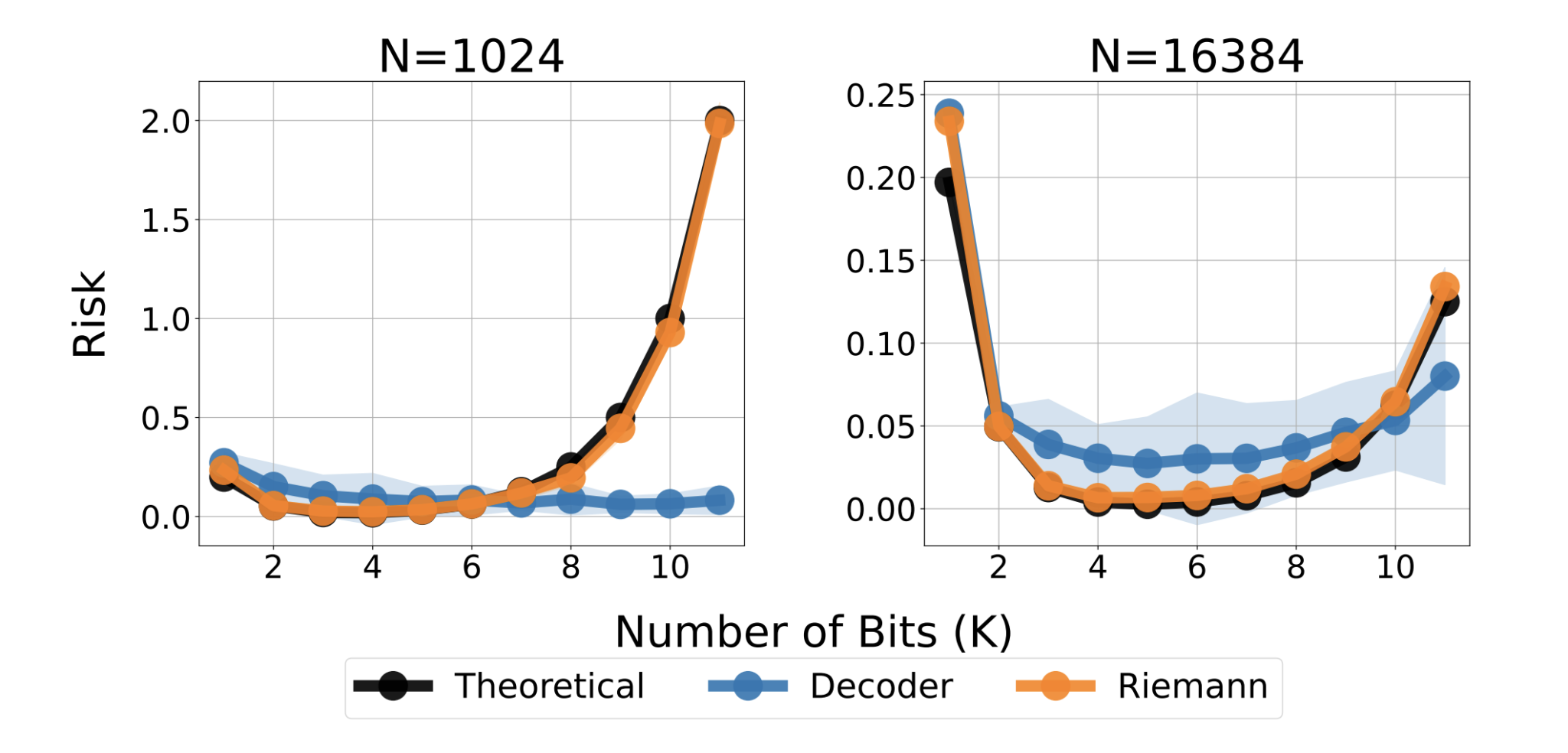

Experiments show that this method successfully captures complex numeric relationships and performs well across various regression tasks:

Key Findings

- High Accuracy: Achieves high correlation scores, especially in low-data situations.

- Better Density Estimation: Outperforms traditional models in capturing intricate distributions.

- Error Correction: Techniques such as token repetition make the decoded numbers more robust to individual token errors.
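
One way token repetition could correct errors is sketched below. The article does not spell out the decoding rule, so the per-position majority vote here is an assumption, and `majority_vote` is a hypothetical helper: the model emits the same digit sequence several times and the most common digit wins at each position.

```python
from collections import Counter

def majority_vote(repeats):
    """Per-position majority vote across repeated decodings of one number."""
    voted = []
    for position in zip(*repeats):
        voted.append(Counter(position).most_common(1)[0][0])
    return voted

# Three decodings of the same number, two of them with a single corrupted digit.
decoded = [
    [3, 1, 4, 1],
    [3, 9, 4, 1],
    [3, 1, 4, 7],
]
corrected = majority_vote(decoded)
```

A single wrong leading digit can shift a prediction by orders of magnitude, which is why redundancy at the token level can matter more here than in ordinary text generation.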

The Value of This New Approach

This new token-based regression framework offers several advantages:

- Improves flexibility in modeling real-valued data.

- Delivers competitive results on various regression tasks.

- Expands the range of tasks that AI models can handle effectively.

Looking Ahead

Future developments will focus on:

- Enhancing tokenization for better accuracy.

- Expanding capabilities to multi-output and high-dimensional tasks.

- Exploring applications in reinforcement learning and vision-based tasks.
