Enhancing Our AI Agent with Persistence and Streaming

Overview

We previously built an AI agent that answers queries by browsing the web. Now, we will enhance it with two vital features: **persistence** and **streaming**. Persistence allows the agent to save its progress and resume later, which is ideal for long tasks. Streaming provides real-time updates about what the agent is doing, ensuring transparency and control.

Setting Up the Agent

Let’s begin by recreating our agent. We will:

– Install the necessary libraries

– Set the API keys as environment variables

– Set up the Tavily search tool

– Define the agent’s state

– Construct the agent

Agent Setup Code

To install the necessary libraries, run:

```bash
pip install langgraph==0.2.53 langgraph-checkpoint==2.0.6 langgraph-sdk==0.1.36 langchain-groq langchain-community langgraph-checkpoint-sqlite==2.0.1
```

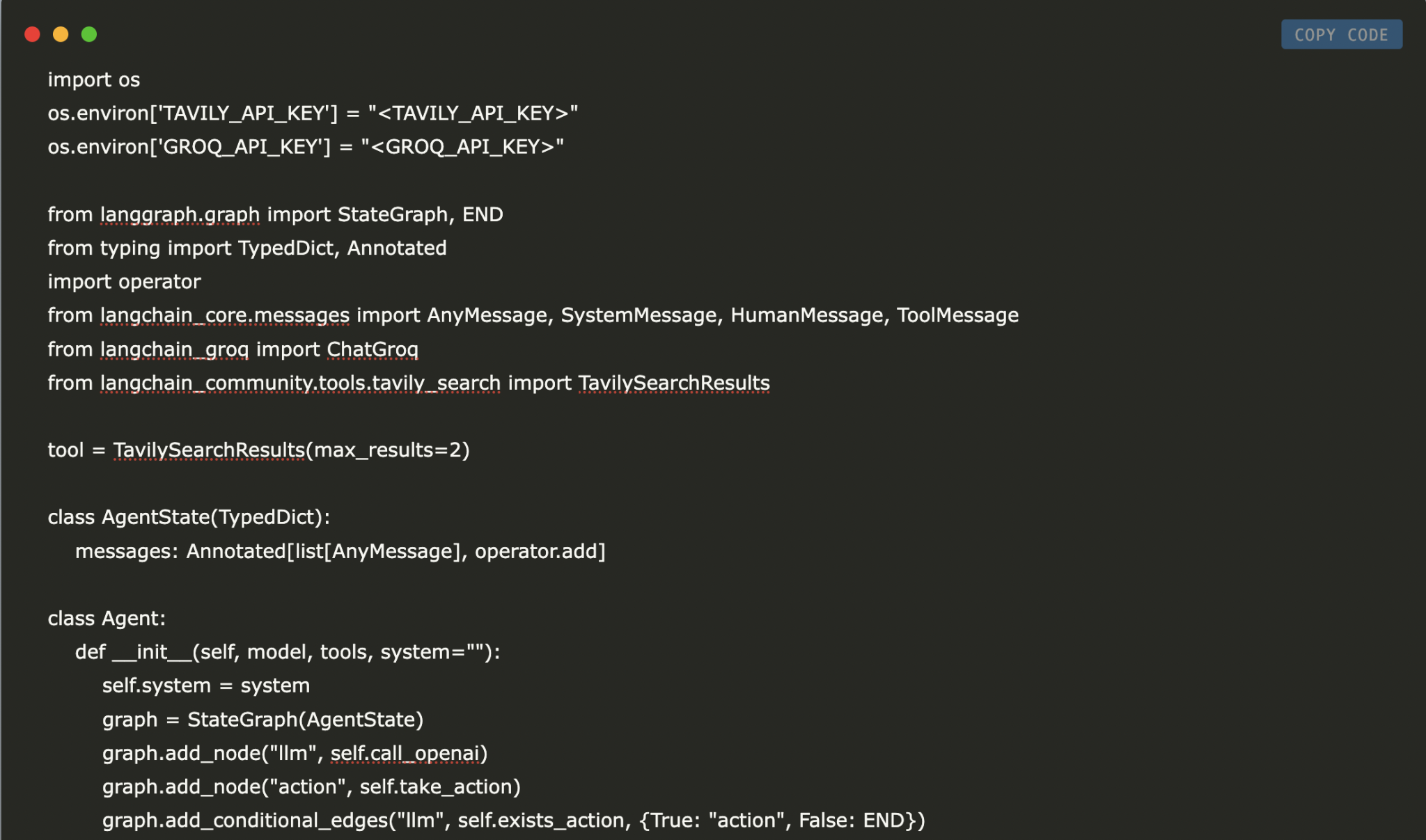

Next, set your API keys and import the required modules:

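```python
import os

# Replace the placeholders with your own keys
os.environ["TAVILY_API_KEY"] = "<your-tavily-api-key>"
os.environ["GROQ_API_KEY"] = "<your-groq-api-key>"

from langchain_community.tools.tavily_search import TavilySearchResults
from langchain_core.messages import HumanMessage
from langchain_groq import ChatGroq
```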

Now rebuild the agent itself. It needs the Tavily search tool, a state that holds the message history, and the graph that connects the model to the tool.

Adding Persistence

To implement persistence, use LangGraph's **checkpointer** feature, which saves the agent's state after every step of the graph. We'll use **SqliteSaver**, a lightweight checkpointer that stores these checkpoints in a SQLite database.

Persistence Code Example

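```python
import sqlite3

from langgraph.checkpoint.sqlite import SqliteSaver

# check_same_thread=False allows the connection to be used from threads other
# than the one that created it (LangGraph may run steps in worker threads)
sqlite_conn = sqlite3.connect("checkpoints.sqlite", check_same_thread=False)
memory = SqliteSaver(sqlite_conn)
```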

Then modify the agent so its constructor accepts this checkpointer and passes it to `graph.compile()`. The graph's state is then written to `checkpoints.sqlite` and survives across sessions.

Creating the Agent with Persistence

You can now instantiate the agent with persistence enabled:

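```python
from langchain_community.tools.tavily_search import TavilySearchResults
from langchain_groq import ChatGroq

tool = TavilySearchResults(max_results=4)  # Tavily web search; max_results=4 is an illustrative choice
prompt = "You are a research assistant. Use the search tool to look up information."  # example prompt
model = ChatGroq(model="llama-3.3-70b-specdec")
bot = Agent(model, [tool], system=prompt, checkpointer=memory)
```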

Adding Streaming

Streaming is vital for providing real-time updates. We will implement:

1. **Streaming Messages**: Real-time updates of decisions and tool results.

2. **Streaming Tokens**: Individual tokens from the AI’s responses.

Implementing Streaming Messages

Create a human message and pass it to the graph's `stream()` method to watch the agent's actions as they happen.

Example of Streaming Messages

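```python
from langchain_core.messages import HumanMessage

messages = [HumanMessage(content="What is the weather in Texas?")]
# The thread_id selects which saved conversation this run reads and writes
thread = {"configurable": {"thread_id": "1"}}

# By default, stream() yields one dict per step: {node_name: state_update}
for event in bot.graph.stream({"messages": messages}, thread):
    for v in event.values():
        print(v["messages"])
```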

This prints each update as it arrives. You see the AI message that requests a search (with its tool calls), then the tool's search results, and finally the AI's answer.

Understanding Thread IDs

The checkpointer saves state per thread ID, and that is how the agent keeps different conversations apart. Each thread has its own message history, so several sessions (for example, different users) can run side by side without mixing context.

Example of Continuing a Conversation

If you ask a follow-up question in the same thread, the agent understands the context:

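```python
messages = [HumanMessage(content="What about in LA?")]
thread = {"configurable": {"thread_id": "1"}}  # same thread as the Texas question

for event in bot.graph.stream({"messages": messages}, thread):
    for v in event.values():
        print(v["messages"])
```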

Streaming Tokens

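Token streaming uses the asynchronous API. Rebuild the agent with an async checkpointer such as `AsyncSqliteSaver` (from `langgraph.checkpoint.sqlite.aio`). Then iterate over the graph's `astream_events()` output and keep the `on_chat_model_stream` events, each of which carries one chunk of the model's output.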

Conclusion

By integrating persistence and streaming, we greatly enhance the AI agent’s functionality. Persistence keeps conversations flowing smoothly across sessions, while streaming delivers live insights into actions. These features are essential for building reliable applications, especially in multi-user environments.

Stay tuned for our next tutorial, where we will explore human collaboration with AI agents!
