Understanding Tokenization in Language Models

What is Tokenization?

Tokenization is the step that splits raw text into the discrete tokens a model reads and predicts. It strongly affects the performance and scalability of Large Language Models (LLMs), yet its impact on training efficiency and scaling has received relatively little study.

The Challenge with Traditional Tokenization

Traditional methods use the same vocabulary for both input and output. A larger vocabulary compresses text into shorter token sequences, but it also enlarges the set of possible next tokens, which can make prediction harder for smaller models. For example, a tokenizer that shortens sequences by using many long, multi-character tokens can overwhelm a small model that lacks the capacity for such a demanding prediction task.
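The example below makes the sequence-length side of this trade-off concrete. It runs a toy greedy longest-match segmenter with two hypothetical vocabularies. This is not the BPE-style tokenizer real LLMs use. Adding multi-character entries shrinks the sequence. However, when the same vocabulary also serves as the output vocabulary, each extra entry is one more candidate the model must choose among.

```python
def greedy_tokenize(text: str, vocab: set[str]) -> list[str]:
    """Greedy longest-match segmentation; any single character is always allowed."""
    max_len = max((len(t) for t in vocab), default=1)
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest vocabulary entry that matches at position i,
        # falling back to a single character.
        for length in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + length]
            if length == 1 or piece in vocab:
                tokens.append(piece)
                i += length
                break
    return tokens


text = "the cat sat on the mat"
small_vocab = {"the", "at"}                                         # hypothetical small vocabulary
large_vocab = small_vocab | {"the ", "cat ", "sat ", "on ", "mat"}  # hypothetical larger vocabulary

print(len(greedy_tokenize(text, small_vocab)))  # 15
print(len(greedy_tokenize(text, large_vocab)))  # 6
```

With the larger vocabulary, the same sentence needs 6 tokens instead of 15.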

Introducing Over-Tokenized Transformers

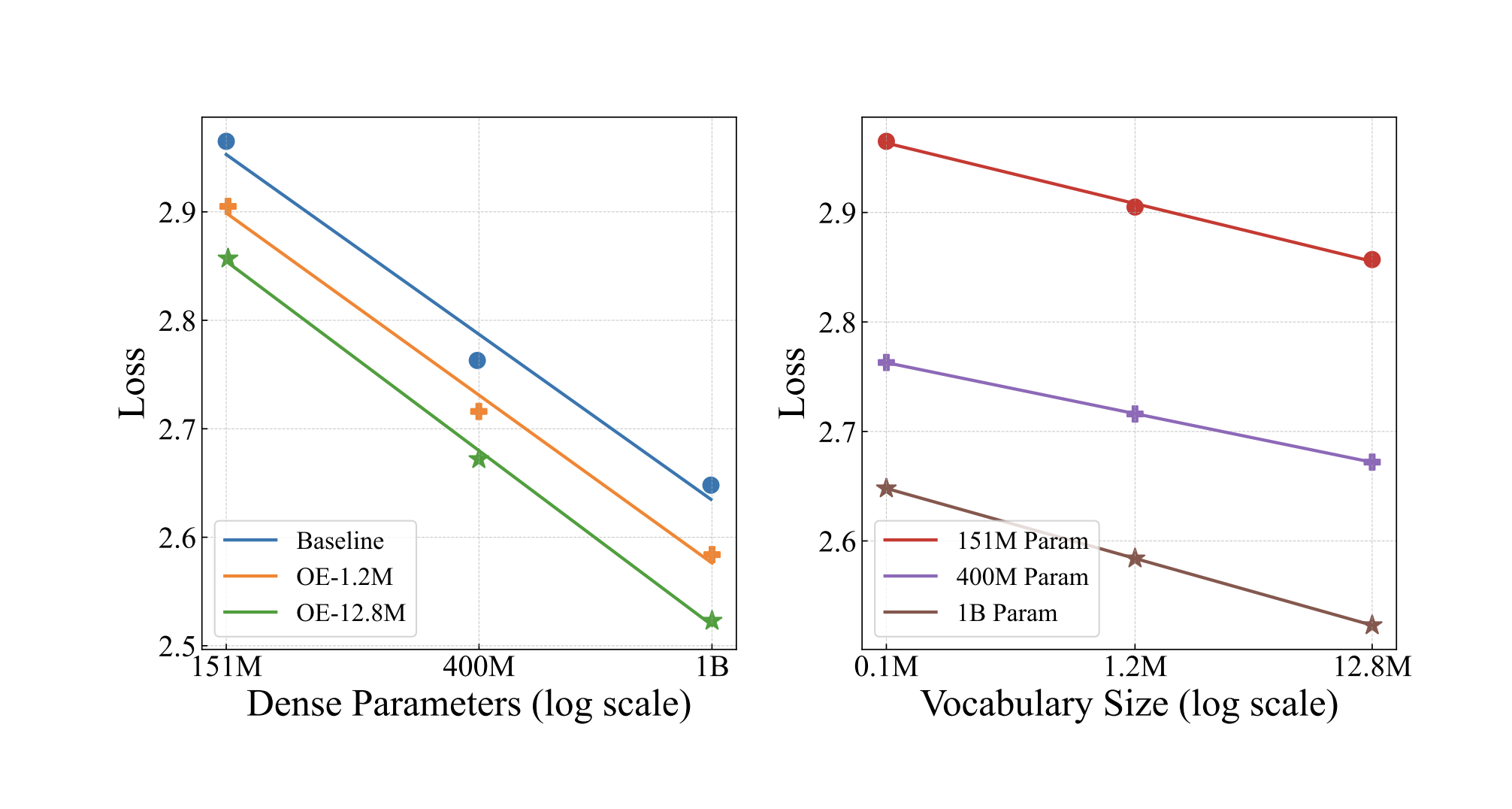

To address these issues, researchers developed a new approach called Over-Tokenized Transformers. This framework decouples the input and output vocabularies so that each can be scaled independently, improving both efficiency and performance.

Key Features of Over-Tokenized Framework

– **Over-Encoding (OE)**: This method uses hierarchical n-gram embeddings to build a much larger input vocabulary. Each input position is not represented by a single token embedding. Instead, it combines multiple embeddings (for the current token and for the n-grams ending at it), giving the model richer local context.

– **Over-Decoding (OD)**: This technique enlarges the effective output vocabulary by training the model to predict multiple future tokens at once. Its benefits appear mainly in larger models, because a harder output task can hurt smaller ones.
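Over-Encoding can be sketched as follows, under two stated assumptions. First, the n-gram ending at each position is encoded as an integer and folded into a fixed-size table with a modulo. Second, the per-order embeddings are summed. The function names, table sizes, and hashing scheme are illustrative choices, not the paper's exact implementation.

```python
import random


def ngram_ids(tokens: list[int], n: int, base_vocab: int, table_size: int) -> list[int]:
    """Row index of the n-gram ending at each position, folded into `table_size` rows.

    Assumed scheme: encode (x_i, x_{i-1}, ..., x_{i-n+1}) as a base-`base_vocab`
    integer, then take it modulo `table_size`. Positions before the start of the
    sequence are padded with token id 0.
    """
    ids = []
    for i in range(len(tokens)):
        code = 0
        for k in range(n):
            j = i - k
            code += (tokens[j] if j >= 0 else 0) * base_vocab ** k
        ids.append(code % table_size)
    return ids


def make_table(rows: int, dim: int, seed: int) -> list[list[float]]:
    """Randomly initialised embedding table (a stand-in for learned weights)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(rows)]


def over_encode(tokens: list[int], base_vocab: int,
                tables: list[list[list[float]]]) -> list[list[float]]:
    """Sum 1-gram, 2-gram, ... embeddings; tables[n - 1] holds order-n n-grams."""
    dim = len(tables[0][0])
    out = [[0.0] * dim for _ in tokens]
    for order, table in enumerate(tables, start=1):
        for pos, row in enumerate(ngram_ids(tokens, order, base_vocab, len(table))):
            for d in range(dim):
                out[pos][d] += table[row][d]
    return out


V, DIM = 50, 4  # toy base vocabulary size and embedding width
tables = [make_table(V, DIM, seed=0),     # 1-grams: one row per token
          make_table(1009, DIM, seed=1),  # 2-grams hashed into 1009 rows
          make_table(4001, DIM, seed=2)]  # 3-grams hashed into 4001 rows
tokens = [5, 17, 17, 3]
emb = over_encode(tokens, V, tables)
```

Positions 1 and 2 hold the same token (17), yet their embeddings differ because their 2-gram and 3-gram contexts differ. That extra context is what the enlarged input vocabulary provides.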
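Over-Decoding's idea of predicting multiple tokens at once can be illustrated with a generic multi-token prediction loss. At each position, k hypothetical output heads predict the next k tokens, and their cross-entropies are averaged. This is a minimal sketch that assumes equal head weights and pre-computed logits. It is not necessarily the exact formulation used in the paper.

```python
import math


def log_softmax(logits: list[float]) -> list[float]:
    """Numerically stable log-softmax."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - lse for x in logits]


def mtp_targets(tokens: list[int], k: int) -> list[list[int]]:
    """Next-k targets for each position; positions lacking k future tokens are dropped."""
    return [tokens[t + 1:t + 1 + k] for t in range(len(tokens) - k)]


def mtp_loss(head_logits: list[list[list[float]]], targets: list[list[int]]) -> float:
    """Average cross-entropy over all positions and heads.

    head_logits[t][j] are the logits from (hypothetical) head j at position t,
    trained to predict token t + 1 + j. All heads are weighted equally here.
    """
    total, count = 0.0, 0
    for logits_t, targets_t in zip(head_logits, targets):
        for logits, target in zip(logits_t, targets_t):
            total -= log_softmax(logits)[target]
            count += 1
    return total / count


tokens = [1, 2, 3, 0, 2]
targets = mtp_targets(tokens, k=2)  # [[2, 3], [3, 0], [0, 2]]
uniform = [[[0.0] * 4 for _ in range(2)] for _ in targets]  # 4-token vocabulary, 2 heads
print(mtp_loss(uniform, targets))   # equals ln 4 (about 1.386)
```

With uniform logits over a 4-token vocabulary, every head's loss is ln 4, so the average is ln 4 as well.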

Benefits of Over-Tokenized Transformers

1. **Performance Boost**: Larger input vocabularies improve performance across all model sizes tested, giving models a better grasp of context.

2. **Faster Learning**: The framework can reduce the number of training steps needed to reach a given loss, accelerating convergence.

3. **Efficient Resource Use**: Even with much larger input vocabularies, the additional memory and computation costs stay low, which makes the approach practical to scale.
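The computation side of this claim can be checked with back-of-the-envelope arithmetic. The sketch below assumes summed embedding tables like those in Over-Encoding and uses hypothetical table sizes. Stored parameters grow with the number of rows. The values read per token depend only on how many tables there are.

```python
def embedding_costs(table_rows: list[int], dim: int) -> tuple[int, int]:
    """Return (parameters stored, values read per token) for summed embedding tables.

    Each token reads exactly one row from each table, so the per-token read
    cost depends on the number of tables, not on how many rows they hold.
    """
    params = sum(table_rows) * dim
    reads_per_token = len(table_rows) * dim
    return params, reads_per_token


# Hypothetical sizes, chosen only for illustration.
baseline = embedding_costs([100_000], dim=1024)
over_encoded = embedding_costs([100_000, 1_000_000, 1_000_000], dim=1024)
print(baseline)      # (102400000, 1024)
print(over_encoded)  # (2150400000, 3072)
```

In this example, stored parameters grow 21-fold, while per-token reads only triple.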

Real-World Applications and Results

The Over-Tokenized framework has shown consistent performance improvements across various benchmarks. For instance:

– A 151M-parameter Over-Encoded model achieved a 14% reduction in perplexity.

– Models trained with this framework converged faster during training and performed better on downstream tasks.
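Perplexity is the exponential of the average per-token cross-entropy loss (in nats). A relative perplexity reduction therefore maps to a fixed absolute drop in loss, whatever the baseline value. Assuming the 14% figure is measured against a comparable baseline model:

```latex
\mathrm{PPL} = \exp(\mathcal{L}), \qquad
\frac{\mathrm{PPL}_{\mathrm{OE}}}{\mathrm{PPL}_{\mathrm{base}}} = 1 - 0.14 = 0.86
\;\Longrightarrow\;
\mathcal{L}_{\mathrm{OE}} - \mathcal{L}_{\mathrm{base}} = \ln 0.86 \approx -0.151 \ \text{nats per token}
```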

Conclusion

The Over-Tokenized Transformers framework rethinks how tokenization works in language models. By decoupling input and output vocabularies, it lets smaller models benefit from large input vocabularies without facing an overly complex output prediction task. The approach offers immediate gains and can also serve as a low-cost upgrade for existing systems.

Explore Further

For more insights, check out the original research paper.
