Transforming AI with Large Language Models (LLMs)

Large Language Models (LLMs) are reshaping research and industry. Their quality generally improves with model size, but training larger models demands substantial computing power, time, and money. For example, training a top model like Llama 3 405B took up to 16,000 H100 GPUs and 54 days, and models like GPT-4 also require immense computational resources. These costs are a barrier to development and underline the need for more efficient training methods that advance LLM technology while reducing computing demands.

Practical Solutions to Computational Challenges

To tackle these challenges, several strategies have been developed:

- Mixed Precision Training: This method runs most computations in lower-precision number formats, speeding up training while keeping accuracy intact. It was first used for convolutional and other deep neural networks and is now applied to LLMs.

- Post-Training Quantization (PTQ) and Quantization-Aware Training (QAT): These techniques reduce model size and resource use by storing and computing values at lower precision.
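As a rough illustration of what "lower precision" means in practice, the sketch below round-trips a few weights through 8-bit integer codes using a single shared scale (symmetric absmax scaling). The function names and sample weights are made up for this example, and real PTQ or QAT pipelines involve calibration and training steps that are not shown here.

```python
def quantize_absmax_int8(values):
    """Map floats to int8 codes using one shared scale (symmetric absmax)."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer code and keep it inside the int8 range.
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate floats from integer codes."""
    return [c * scale for c in codes]


weights = [0.42, -1.27, 0.05, 0.9]      # hypothetical weights
codes, scale = quantize_absmax_int8(weights)
restored = dequantize(codes, scale)

# Rounding to the nearest code bounds the error at half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, scale, max_error)
```

Each weight now fits in one byte plus a shared scale, at the cost of a small rounding error per value.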

Despite these advances, handling outliers (a small number of values with unusually large magnitude) remains difficult, as existing methods often rely on time-consuming pre-processing steps.
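To see why outliers are such a problem at very low precision, consider this small sketch. It uses a symmetric 4-bit integer grid as a stand-in for a low-precision format, and the sample values are invented for illustration. A single large value stretches the shared scale so far that every ordinary value rounds to zero.

```python
def quantize_dequantize_int4(values):
    """Round-trip values through a symmetric 4-bit integer grid (-7..7)."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    return [max(-7, min(7, round(v / scale))) * scale for v in values]


typical = [0.1, -0.2, 0.3, 0.25]        # hypothetical well-behaved values
with_outlier = typical + [50.0]         # the same values plus one outlier

restored_clean = quantize_dequantize_int4(typical)
restored_outlier = quantize_dequantize_int4(with_outlier)

print(restored_clean)        # small values survive with modest error
print(restored_outlier[:4])  # small values are wiped out by the outlier's scale
```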

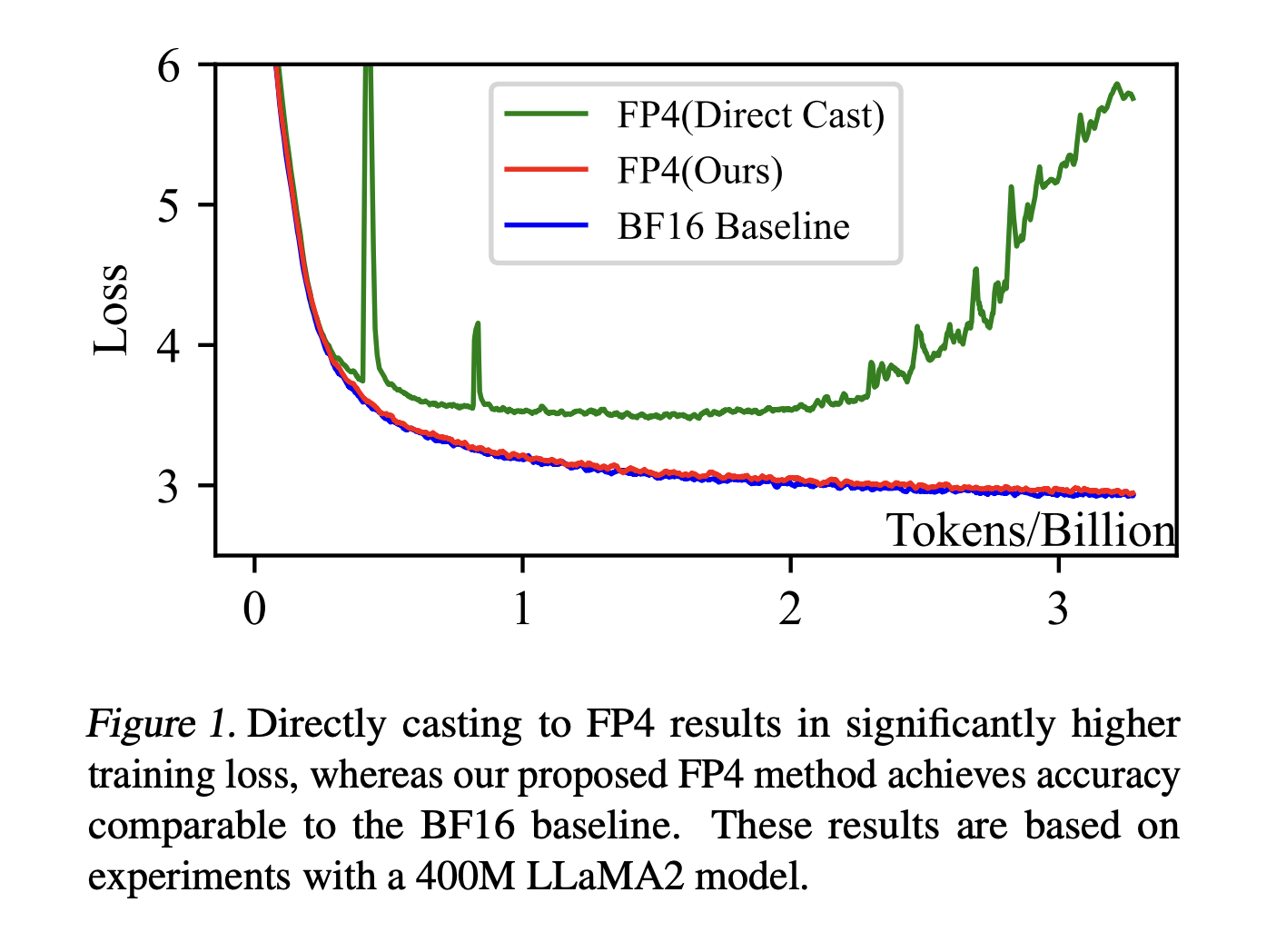

Innovative FP4 Framework

Researchers have introduced a new framework for training language models in FP4, a 4-bit floating-point format that enables ultra-low-precision training. The framework reduces quantization errors with two main innovations:

- A differentiable quantization estimator that improves gradient updates.

- An outlier-handling mechanism that clamps extreme values and uses a sparse matrix to compensate for what the clamping removes, preserving accuracy.
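The two ideas in the list above can be sketched in a few lines. Treat everything here as an assumption made for illustration rather than the researchers' exact method: the first function uses a power-function curve, f(x) = (delta/2) * (1 + sign(u) * |u|^(1/k)) with u = 2x/delta - 1, as a smooth stand-in for the rounding step and returns its derivative, and the second clamps values at a chosen quantile and keeps the removed part in a sparse dictionary. The names, `k`, `clip`, and `quantile` are all hypothetical.

```python
def surrogate_grad(offset, delta, k=5.0, clip=3.0):
    """Derivative of a smooth stand-in for rounding inside one grid interval.

    offset is the position within [0, delta] between two neighbouring grid
    points. k and clip are illustrative values, not taken from the article.
    """
    u = 2.0 * offset / delta - 1.0   # -1 and +1 at the grid points, 0 at the midpoint
    if u == 0.0:
        return clip                  # the closed form diverges at the midpoint
    return min(clip, (1.0 / k) * abs(u) ** (1.0 / k - 1.0))


def clamp_with_residual(values, quantile=0.8):
    """Clamp magnitudes at a quantile; return clamped values and a sparse residual."""
    ordered = sorted(abs(v) for v in values)
    idx = min(len(ordered) - 1, int(quantile * len(ordered)))
    threshold = ordered[idx]
    clamped = [max(-threshold, min(threshold, v)) for v in values]
    # Only entries changed by clamping are stored, so the residual stays sparse.
    residual = {i: v - c for i, (v, c) in enumerate(zip(values, clamped)) if v != c}
    return clamped, residual


grad_at_grid_point = surrogate_grad(0.0, 1.0)
grad_near_midpoint = surrogate_grad(0.4, 1.0)
grad_at_midpoint = surrogate_grad(0.5, 1.0)

activations = [0.1, -0.3, 0.2, 12.0, -0.25, 0.05, 0.15, -0.1, 0.3, 0.2]
clamped, residual = clamp_with_residual(activations)
restored = [c + residual.get(i, 0.0) for i, c in enumerate(clamped)]
print(grad_at_grid_point, grad_near_midpoint, grad_at_midpoint)
print(clamped, residual)
```

In a setup like this, the clamped tensor has a narrow range that suits a 4-bit format, while the few residual entries could be processed separately at higher precision and added back. Unlike a straight-through estimator, which treats the gradient of rounding as a constant 1, the surrogate gradient varies with where a value sits between grid points.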

The framework targets General Matrix Multiplication (GeMM) operations, which account for over 95% of the computation in LLM training. It applies 4-bit quantization to these operations, supported by several quantization techniques. Because native FP4 hardware is not yet available, FP4 is simulated on Nvidia H-series GPUs, whose supported formats cover FP4's dynamic range.
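Here is a minimal sketch of what a simulated 4-bit GeMM might look like. It assumes the E2M1 variant of FP4, whose representable magnitudes are 0, 0.5, 1, 1.5, 2, 3, 4, and 6, and it scales each row of the left matrix and each column of the right matrix by its absolute maximum. Both choices, along with the function names and sample matrices, are assumptions for illustration and not a description of the framework's actual kernels.

```python
# Representable magnitudes of the E2M1 4-bit float format (assumed here).
FP4_E2M1_MAGNITUDES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
FP4_MAX = 6.0


def quantize_fp4(vector):
    """Scale a vector into [-6, 6] and snap each entry to the nearest FP4 value."""
    max_abs = max(abs(v) for v in vector)
    scale = max_abs / FP4_MAX if max_abs > 0 else 1.0
    snapped = []
    for v in vector:
        target = abs(v) / scale
        mag = min(FP4_E2M1_MAGNITUDES, key=lambda m: abs(target - m))
        snapped.append(mag if v >= 0 else -mag)
    return snapped, scale


def fp4_matmul(a, b):
    """Simulated FP4 GeMM: quantize rows of a and columns of b, multiply, rescale."""
    quant_rows = [quantize_fp4(row) for row in a]
    quant_cols = [quantize_fp4(list(col)) for col in zip(*b)]
    return [
        [sum(x * y for x, y in zip(row, col)) * s_row * s_col
         for col, s_col in quant_cols]
        for row, s_row in quant_rows
    ]


a = [[0.9, -0.4], [0.1, 0.6]]           # hypothetical activations
b = [[1.0, 2.0], [3.0, -4.0]]           # hypothetical weights

exact = [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]
approx = fp4_matmul(a, b)
print(exact)
print(approx)
```

The products are computed on the snapped values and rescaled afterwards, which is the basic pattern for simulating a low-precision format on hardware that does not support it natively.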

Results and Benefits

The FP4 framework has shown promising results in testing. Training LLaMA models at 1.3B, 7B, and 13B parameters with FP4 yielded performance similar to BF16 training, with only slight differences in training loss. In evaluations, the FP4 models were competitive with their BF16 counterparts and in several cases outperformed them, which supports the effectiveness and scalability of the approach.

Conclusion and Future Needs

This FP4 pretraining framework is a significant step forward in ultra-low-precision computing, achieving performance comparable to higher-precision models. However, dedicated FP4 hardware is not yet available to it, so FP4 must be simulated, which adds computational overhead. Hardware advances are essential to unlock the full potential of this training approach.

Explore More: Check out the original paper for detailed insights.
