Enhancing Large Language Models (LLMs) with Efficient Compression Techniques

Understanding the Challenge

Large Language Models (LLMs) such as GPT and LLaMA owe their capabilities to large architectures and extensive training. However, not all of their parameters are needed for good performance. This has motivated methods that make these models more efficient without losing quality.

Practical Solutions

LASER (LAyer-SElective Rank reduction) is one example: a technique, not a model, that uses Singular Value Decomposition (SVD) to strip the less important components from a network's weight matrices. While effective, it treats each weight matrix in isolation and so misses information shared across matrices.
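To make the SVD step concrete, here is a minimal NumPy sketch of rank reduction on a single matrix. It illustrates truncated SVD in general, not LASER's actual layer-selection procedure; the function name `low_rank_approx`, the toy matrix, and the chosen rank are all assumptions made for illustration.

```python
import numpy as np

def low_rank_approx(W, rank):
    """Keep only the `rank` largest singular components of W (hypothetical helper)."""
    U, s, Vt = np.linalg.svd(W, full_matrices=False)
    # Scale the kept left singular vectors by their singular values, then recombine.
    return (U[:, :rank] * s[:rank]) @ Vt[:rank, :]

rng = np.random.default_rng(0)

# Toy stand-in for a weight matrix: a rank-4 "signal" plus small noise.
signal = rng.standard_normal((64, 4)) @ rng.standard_normal((4, 64))
W = signal + 0.01 * rng.standard_normal((64, 64))

W_hat = low_rank_approx(W, rank=4)

# Relative distance between the truncated matrix and the noise-free signal.
rel_err = np.linalg.norm(W_hat - signal) / np.linalg.norm(signal)
print(f"relative error vs. noise-free signal: {rel_err:.4f}")
```

Because every matrix is truncated on its own, nothing in this procedure can exploit structure that several matrices have in common, which is the limitation noted above.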

A New Approach from Imperial College London

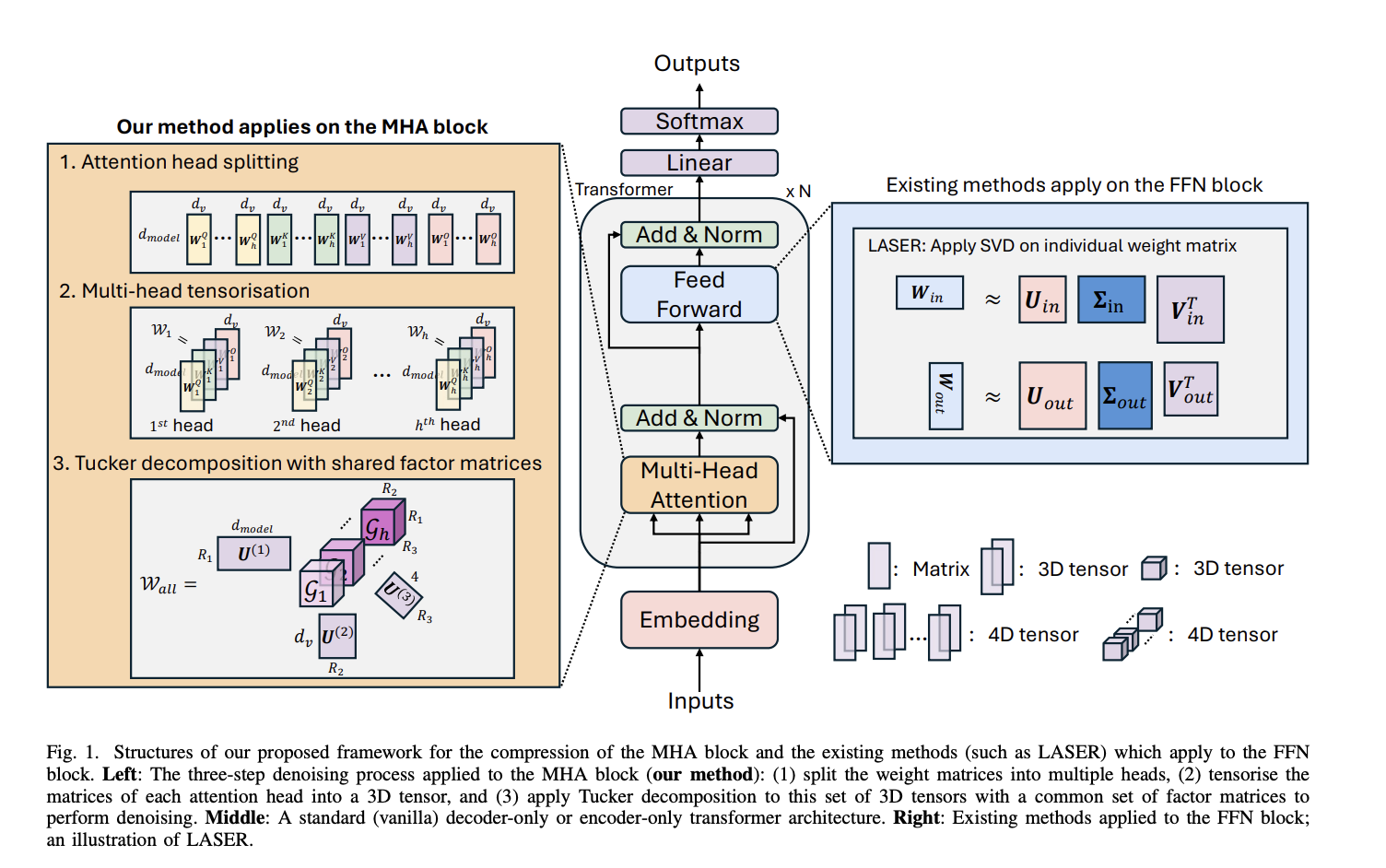

Researchers have developed a new framework that improves LLM reasoning by compressing the Multi-Head Attention (MHA) block. The method combines multi-head tensorisation with Tucker decomposition and compresses the MHA weights by up to roughly 250 times, without needing extra data or fine-tuning. It improves the model’s reasoning by exploiting the related roles that attention heads play within the same layer.

Technical Insights

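The framework splits the MHA weight matrices by attention head and stacks each head’s weights into a 3D tensor. Tucker decomposition is then applied with factor matrices shared across all heads, so that every head’s weights are expressed in the same higher-dimensional subspace. This structured low-rank approximation filters out noise in the weights, which is how the method improves the model’s reasoning capability.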
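The tensorisation and shared decomposition can be sketched in NumPy as follows. This is a rough illustration under stated assumptions, not the authors' implementation: the weights are random, the dimensions and ranks are toy values, the helper names (`tensorise`, `shared_tucker`, `reconstruct`) are hypothetical, and a truncated higher-order SVD (HOSVD) stands in for whichever Tucker algorithm the paper uses. The output projection is assumed to have shape `(n_heads * d_head, d_model)`.

```python
import numpy as np

def tensorise(Wq, Wk, Wv, Wo, n_heads):
    """Stack per-head Q, K, V, O^T slices into a (d_model, d_head, 4, n_heads) tensor."""
    d_model = Wq.shape[0]
    d_head = Wq.shape[1] // n_heads
    # (d_model, n_heads * d_head) -> (d_model, d_head, n_heads)
    split = lambda W: W.reshape(d_model, n_heads, d_head).transpose(0, 2, 1)
    return np.stack([split(Wq), split(Wk), split(Wv), split(Wo.T)], axis=2)

def unfold(T, mode):
    """Mode-n unfolding: bring `mode` to the front and flatten the remaining axes."""
    return np.moveaxis(T, mode, 0).reshape(T.shape[mode], -1)

def mode_product(T, M, mode):
    """Multiply tensor T by matrix M along axis `mode`."""
    out = np.tensordot(M, T, axes=(1, mode))  # the new axis comes out first
    return np.moveaxis(out, 0, mode)

def shared_tucker(T, ranks):
    """Truncated HOSVD on the first len(ranks) modes; the head mode stays uncompressed,
    so all heads share the same factor matrices and differ only in their core slice."""
    factors = []
    for mode, r in enumerate(ranks):
        U, _, _ = np.linalg.svd(unfold(T, mode), full_matrices=False)
        factors.append(U[:, :r])
    core = T
    for mode, U in enumerate(factors):
        core = mode_product(core, U.T, mode)
    return core, factors

def reconstruct(core, factors):
    """Map the core back to the original space with the shared factors."""
    T = core
    for mode, U in enumerate(factors):
        T = mode_product(T, U, mode)
    return T

rng = np.random.default_rng(0)
d_model, n_heads = 32, 4
Wq, Wk, Wv, Wo = (rng.standard_normal((d_model, d_model)) for _ in range(4))

T = tensorise(Wq, Wk, Wv, Wo, n_heads)             # shape (32, 8, 4, 4)
core, factors = shared_tucker(T, ranks=(8, 4, 4))  # toy ranks, chosen arbitrarily
T_hat = reconstruct(core, factors)

original = T.size
compressed = core.size + sum(U.size for U in factors)
print(f"parameters: {original} -> {compressed} ({original / compressed:.1f}x)")
```

Random weights have no shared low-rank structure, so `T_hat` is a poor approximation here; the sketch only shows the mechanics and the parameter accounting. The compression ratios reported for real models depend on the ranks chosen and on how much structure the trained heads actually share.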

Proven Results

Experiments on several benchmark datasets with RoBERTa, GPT-J, and LLaMA2 showed that the method improves reasoning performance while compressing the MHA parameters. It can also be combined with existing compression methods, and the combination often outperforms those methods used on their own.

Conclusion and Future Directions

This framework improves reasoning in LLMs while substantially compressing the MHA parameters, and it does so without additional data, training, or fine-tuning. Future work will focus on making the approach generalise across different datasets.
