Understanding Large Language Models (LLMs)

Large Language Models (LLMs) are essential in today’s world, impacting various fields. They excel in many tasks but sometimes produce unexpected or unsafe responses. Ongoing research aims to better align LLMs with human preferences while utilizing their vast training data.

Effective Methods for Improvement

Techniques like Reinforcement Learning from Human Feedback (RLHF) and Direct Preference Optimization (DPO) are useful but often require impractical iterative training. Researchers are now focusing on improving inference methods to achieve results similar to traditional training.

Introducing Test-Time Preference Optimization (TPO)

Researchers from Shanghai AI Laboratory have developed a new framework called Test-Time Preference Optimization (TPO). This framework aligns LLM outputs with human preferences during inference, allowing the model to learn and improve continuously.

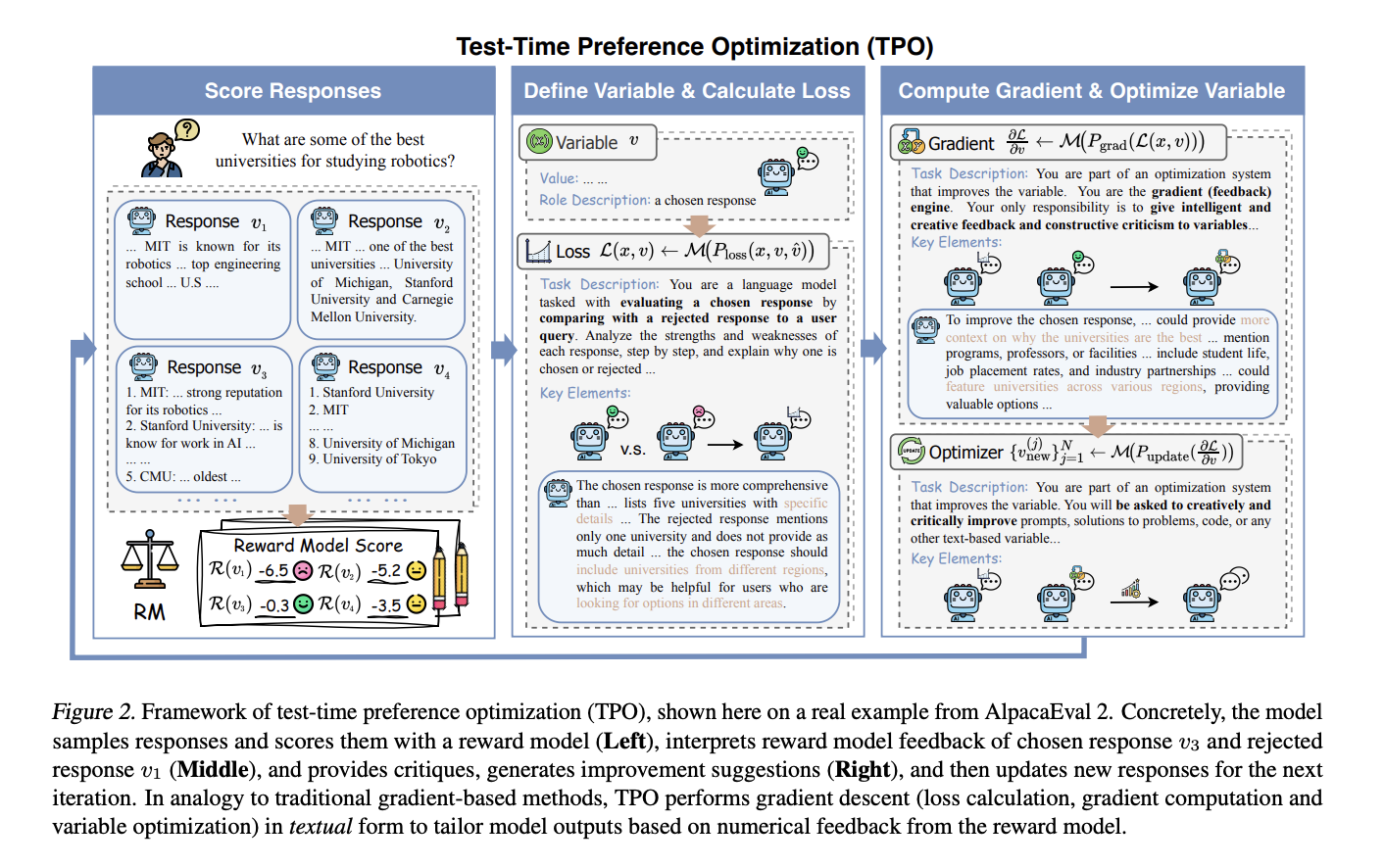

How TPO Works

TPO uses interpretable textual feedback instead of traditional numerical scoring for preference optimization. It translates reward signals into textual rewards through critiques, enabling the model to generate better suggestions based on this feedback.

Iterative Improvement Process

During testing, the model scores new responses at each optimization step, categorizing them as “chosen” or “rejected.” It learns from the best outputs and identifies weaknesses in rejected ones to create a “textual loss,” which guides future iterations.

Research Findings

The study tested aligned and unaligned models to assess preference optimization. Key models included Llama-3.1-70B-SFT (unaligned) and Llama-3.1-70B-Instruct (aligned). Results showed that TPO significantly improved performance in both models, with the unaligned model outperforming the aligned one after TPO optimization.

Conclusion

The TPO framework offers a scalable and flexible solution for aligning LLM outputs with human preferences during inference, eliminating the need for retraining. This innovative approach holds promise for future advancements in LLM technology.

Explore Further

Check out the research paper and GitHub for more details. Follow us on Twitter, join our Telegram Channel, and connect with our LinkedIn Group. Join our 70k+ ML SubReddit for ongoing discussions.

Enhance Your Business with AI

To stay competitive, consider implementing Test-Time Preference Optimization in your company. Here’s how to get started:

- Identify Automation Opportunities: Find customer interaction points that can benefit from AI.

- Define KPIs: Ensure your AI initiatives have measurable impacts.

- Select an AI Solution: Choose tools that fit your needs and allow customization.

- Implement Gradually: Start with a pilot program, gather data, and expand wisely.

For AI KPI management advice, contact us at hello@itinai.com. Stay updated on AI insights through our Telegram channel t.me/itinainews or Twitter @itinaicom.

Transform Your Sales and Customer Engagement

Discover how AI can redefine your sales processes and customer interactions. Explore solutions at itinai.com.