Introduction to DeepSeek R1

DeepSeek R1 has created excitement in the AI community. This open-source model performs exceptionally well, often matching top proprietary models. In this article, we will guide you through setting up a Retrieval-Augmented Generation (RAG) system using DeepSeek R1, from environment setup to running queries.

What is RAG?

RAG combines retrieval and generation techniques. It first retrieves the passages most relevant to a user query from a knowledge base, then passes them to the language model as context so that the generated answer is grounded in that material.
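To make the retrieve-then-generate idea concrete, here is a minimal toy sketch in plain Python. It is not the pipeline built later in this article: retrieval is approximated by simple word overlap, the two sample sentences are invented for illustration, and the generation step is left out, so the output is only the prompt that would be sent to a model. The function names are hypothetical.

```python
def score(query, document):
    """Count how many distinct query words also appear in the document."""
    query_words = set(query.lower().split())
    document_words = set(document.lower().split())
    return len(query_words & document_words)

def retrieve(query, knowledge_base, k=1):
    """Return the k documents with the highest word-overlap score."""
    ranked = sorted(knowledge_base, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:k]

def build_prompt(query, passages):
    """Place the retrieved passages in front of the question."""
    context = "\n".join(passages)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"

# Illustrative sample data, not taken from a real knowledge base.
knowledge_base = [
    "FAISS is a library for similarity search over dense vectors.",
    "Ollama runs large language models on a local machine.",
]

query = "What does Ollama run?"
passages = retrieve(query, knowledge_base)
prompt = build_prompt(query, passages)
print(prompt)
```

A real system replaces the word-overlap score with embedding similarity and sends the prompt to a language model, which is what the steps below set up.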

Prerequisites

- Python: Version 3.7 or higher.

- Ollama: This framework allows you to run models like DeepSeek R1 locally.
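Before continuing, you may want to confirm both prerequisites from Python itself. The sketch below is one possible check, assuming the Ollama executable is named ollama and is on your PATH; the helper name check_prerequisites is hypothetical.

```python
import shutil
import sys

def check_prerequisites(min_python=(3, 7)):
    """Return a dict describing whether each prerequisite appears to be met."""
    return {
        # Compare the running interpreter against the minimum version.
        "python": sys.version_info >= min_python,
        # shutil.which returns None when the executable is not on PATH.
        "ollama": shutil.which("ollama") is not None,
    }

status = check_prerequisites()
for name, ok in status.items():
    print(f"{name}: {'found' if ok else 'missing'}")
```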

Step-by-Step Implementation

Step 1: Install Ollama

Follow the instructions on the Ollama website to install it. Verify the installation by running:

    ollama --version

Step 2: Run DeepSeek R1 Model

Open your terminal and execute:

    ollama run deepseek-r1:1.5b

This command downloads the model on first use, then starts the 1.5 billion parameter version of DeepSeek R1, the smallest of the distilled variants, in an interactive session.
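Besides the interactive session, a running Ollama instance also serves a local HTTP API, by default on port 11434. The sketch below shows one way to call its /api/generate endpoint using only the standard library. It assumes the default address and that the model has already been pulled; the helper names build_generate_request and generate are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434/api/generate"

def build_generate_request(prompt, model="deepseek-r1:1.5b"):
    """Build (but do not send) a non-streaming generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def generate(prompt, model="deepseek-r1:1.5b", timeout=120):
    """Send the request and return the generated text."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # With "stream": False the reply is one JSON object with a "response" field.
    return body["response"]

# Example call (requires the Ollama server to be running):
# print(generate("Say hello in one sentence."))
```

Setting stream to False keeps the example short; with streaming enabled the server returns one JSON object per line instead.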

Step 3: Prepare Your Knowledge Base

Gather documents, articles, or any relevant text data for your retrieval system.
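Long documents are often split into smaller, overlapping chunks before indexing so that each retrieved piece fits comfortably in the prompt. This article does not prescribe a chunking scheme, so treat the following as an optional sketch: the function name chunk_text and the default sizes are assumptions chosen for illustration.

```python
def chunk_text(text, chunk_size=200, overlap=50):
    """Split text into word-based chunks that overlap by `overlap` words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the current chunk reaches the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks

sample = " ".join(f"word{i}" for i in range(10))
chunks = chunk_text(sample, chunk_size=4, overlap=2)
print(chunks)
```

Each chunk can then be treated as its own document in the steps that follow.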

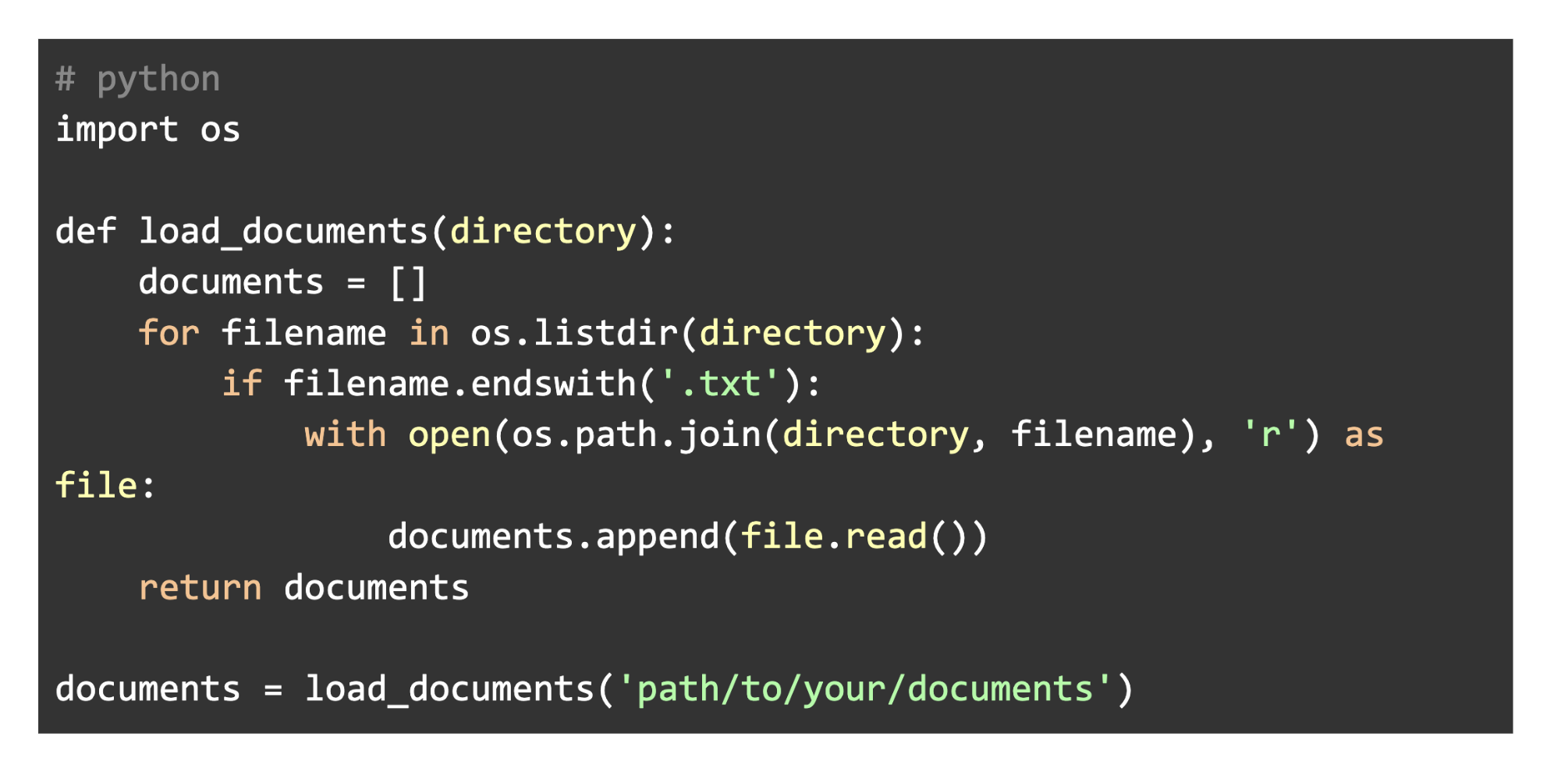

3.1 Load Your Documents

Load documents from text files, databases, or web scraping. Here’s an example:

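    import os

    def load_documents(directory):
        """Read every .txt file in `directory` and return the contents as a list of strings."""
        documents = []
        # Sort the listing so the document order is stable between runs.
        for filename in sorted(os.listdir(directory)):
            if filename.endswith('.txt'):
                with open(os.path.join(directory, filename), 'r', encoding='utf-8') as file:
                    documents.append(file.read())
        return documents

    documents = load_documents('path/to/your/documents')

Step 4: Create a Vector Store for Retrieval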

Use a vector store like FAISS for efficient document retrieval.
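It can help to see what a flat L2 index computes before handing the work to FAISS. A flat index compares the query against every stored vector and returns the closest ones, reporting squared Euclidean distances. The pure-Python sketch below mimics that behaviour on tiny made-up vectors; flat_l2_search is a hypothetical name, and this is an illustration of the idea rather than how FAISS is implemented internally.

```python
def squared_l2(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def flat_l2_search(vectors, query, k=3):
    """Return (distances, indices) of the k stored vectors closest to query."""
    scored = sorted((squared_l2(vec, query), i) for i, vec in enumerate(vectors))
    top = scored[:k]
    return [d for d, _ in top], [i for _, i in top]

# Illustrative 2-dimensional vectors; real embeddings have hundreds of dimensions.
vectors = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]
distances, indices = flat_l2_search(vectors, [0.9, 1.2], k=2)
print(indices)  # [1, 0]
```

FAISS performs the same exhaustive comparison with optimized native code, which is why it stays practical for much larger collections.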

4.1 Install Required Libraries

Install additional libraries:

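    pip install faiss-cpu numpy langchain-huggingface sentence-transformers

4.2 Generate Embeddings and Set Up FAISS

Generate embeddings and set up the FAISS vector store. This example uses the HuggingFaceEmbeddings class from the langchain-huggingface package:

    from langchain_huggingface import HuggingFaceEmbeddings
    import faiss
    import numpy as np

    # Loads a default sentence-transformers model (downloaded on first use).
    embeddings_model = HuggingFaceEmbeddings()

    # embed_documents returns one vector per document; FAISS expects float32.
    document_embeddings = np.array(embeddings_model.embed_documents(documents)).astype('float32')

    # Exact (brute-force) L2 index sized to the embedding dimensionality.
    index = faiss.IndexFlatL2(document_embeddings.shape[1])
    index.add(document_embeddings)

Step 5: Set Up the Retriever

Create a retriever to fetch relevant documents based on user queries:

    class SimpleRetriever:
        def __init__(self, index, embeddings_model, documents):
            self.index = index
            self.embeddings_model = embeddings_model
            self.documents = documents

        def retrieve(self, query, k=3):
            # Embed the query with the same model used for the documents.
            query_embedding = self.embeddings_model.embed_query(query)
            query_vector = np.array([query_embedding]).astype('float32')
            distances, indices = self.index.search(query_vector, k)
            # FAISS pads the result with -1 when fewer than k vectors are indexed.
            return [self.documents[i] for i in indices[0] if i != -1]

    retriever = SimpleRetriever(index, embeddings_model, documents)

Step 6: Configure DeepSeek R1 for RAG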

Set up the model name and a prompt template for DeepSeek R1. This example uses the ollama Python package (installed with pip install ollama) to talk to the local Ollama server:

    import ollama
    from string import Template

    MODEL_NAME = "deepseek-r1:1.5b"

    prompt_template = Template("""
    Use ONLY the context below.
    If unsure, say "I don't know".
    Keep answers under 4 sentences.

    Context: $context

    Question: $question

    Answer:
    """)

Step 7: Implement Query Handling Functionality

Create a function to combine retrieval and generation:

    def answer_query(question):
        # Fetch the most relevant documents and join them into one context block.
        context = retriever.retrieve(question)
        combined_context = "\n".join(context)
        prompt = prompt_template.substitute(context=combined_context, question=question)
        # ollama.generate returns a response object; the text is under "response".
        response = ollama.generate(model=MODEL_NAME, prompt=prompt)
        return response["response"].strip()

Step 8: Running Your RAG System

Test your RAG system by calling the answer_query function:

    if __name__ == "__main__":
        user_question = "What are the key features of DeepSeek R1?"
        answer = answer_query(user_question)
        print("Answer:", answer)

Conclusion

By following these steps, you can implement a Retrieval-Augmented Generation (RAG) system using DeepSeek R1. This setup allows efficient information retrieval and accurate response generation. Explore the potential of DeepSeek R1 for your specific needs.
