Understanding the Growth of AI in Vision and Language

Artificial intelligence (AI) has made remarkable progress by combining vision and language capabilities, allowing systems to understand and generate content across text, images, and videos. This integration benefits applications such as natural language processing and human-computer interaction. However, ensuring that AI outputs are accurate and aligned with human expectations remains a challenge.

Challenges in Multi-Modal AI Models

The main challenge for large vision-language models is aligning their outputs with human preferences. Many current systems produce inconsistent responses and often generate incorrect or irrelevant information. In addition, high-quality training datasets for these models are scarce, which limits their performance in real-world scenarios.

Current Solutions and Their Limitations

Most existing solutions rely on narrow, text-based reward signals that neither scale well nor offer transparency. They also tend to depend on fixed datasets and prompts, which fail to capture the variability of real-world inputs. As a result, there is a significant gap in effective reward models for guiding multi-modal AI systems.

Introducing IXC-2.5-Reward

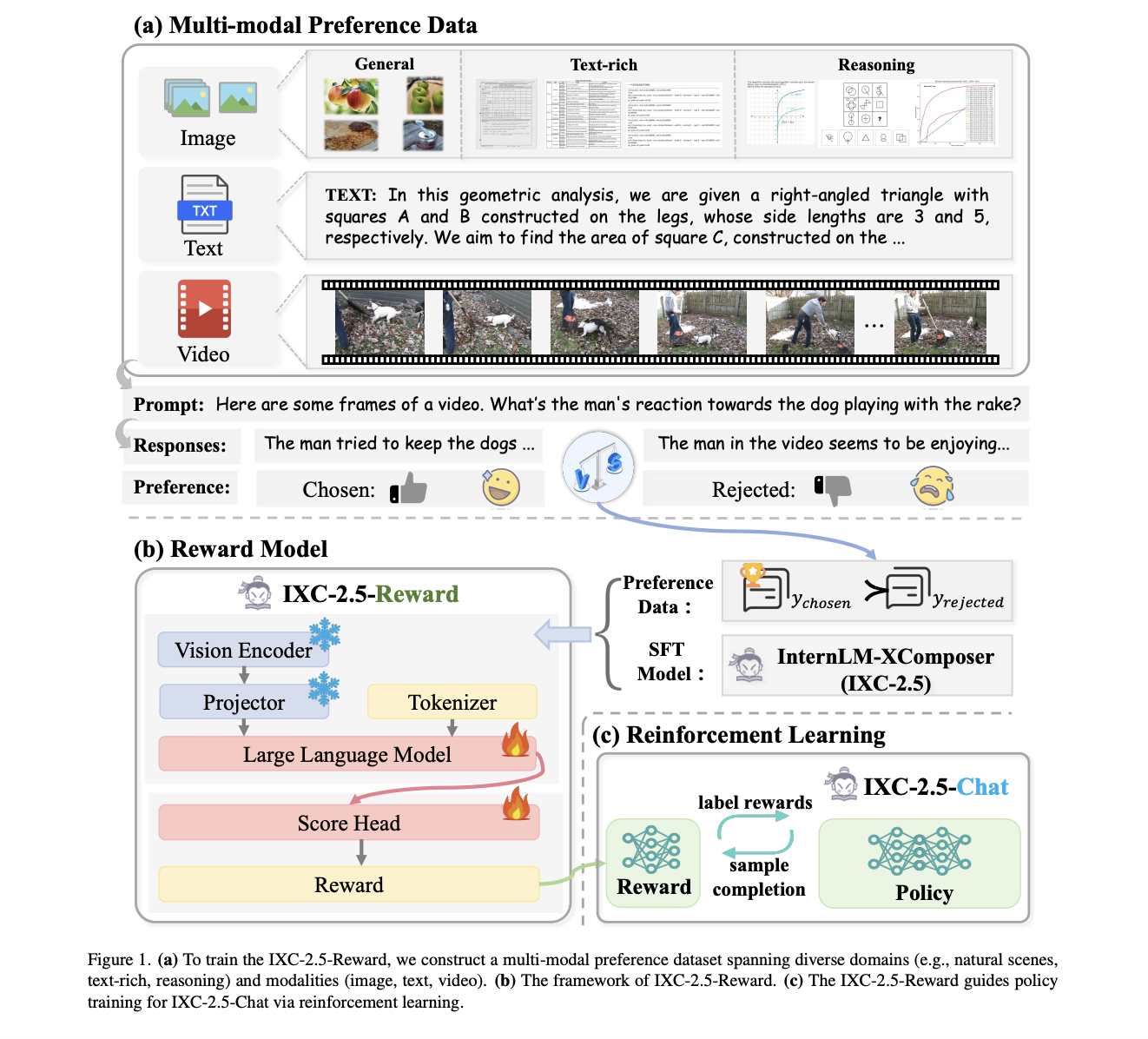

A collaborative team of researchers has developed InternLM-XComposer2.5-Reward (IXC-2.5-Reward), a multi-modal reward model designed to align AI outputs more closely with human preferences. Unlike previous reward models, IXC-2.5-Reward handles text, images, and videos, making it suitable for a wide range of applications.
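Reward models of this kind are commonly trained on pairs of preferred ("chosen") and rejected responses using a Bradley-Terry style pairwise loss, which pushes the chosen response's score above the rejected one's. The sketch below illustrates that general technique under the assumption that the model outputs one scalar score per response; it is not presented as the exact IXC-2.5-Reward training code, and the function names and scores are hypothetical.

```python
import math
from typing import Iterable, Tuple


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def bradley_terry_loss(chosen_score: float, rejected_score: float) -> float:
    """Pairwise loss -log(sigmoid(chosen - rejected)).

    Small when the reward model scores the preferred (chosen) response
    well above the rejected one; large when the order is reversed.
    """
    # -log(sigmoid(m)) == log(1 + exp(-m)) == softplus(-m)
    return softplus(-(chosen_score - rejected_score))


def mean_pairwise_loss(pairs: Iterable[Tuple[float, float]]) -> float:
    """Average Bradley-Terry loss over (chosen_score, rejected_score) pairs."""
    losses = [bradley_terry_loss(c, r) for c, r in pairs]
    if not losses:
        raise ValueError("need at least one preference pair")
    return sum(losses) / len(losses)


if __name__ == "__main__":
    # Hypothetical scalar scores a reward model might assign to (chosen, rejected) pairs.
    batch = [(2.0, 0.5), (1.0, 1.0), (0.2, 1.5)]
    print(round(mean_pairwise_loss(batch), 4))
```

Using the softplus form avoids overflow in `exp` when the score margin is very large in either direction.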

Key Features of IXC-2.5-Reward

- Comprehensive Dataset: Trained on diverse multi-modal preference data spanning multiple domains, including reasoning and video understanding.

- Reinforcement Learning: Provides the reward signal for training chat models with reinforcement learning algorithms such as Proximal Policy Optimization (PPO).

- Quality Control: Applies constraints on response length so that outputs stay concise and high quality.
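The exact form of the length constraint is not described here. As one hypothetical illustration of how a length constraint can enter PPO-style training, the sketch below shapes the reward-model score with a linear penalty for tokens beyond a budget. The names `length_penalized_reward`, `token_budget`, and `penalty_per_token`, and their default values, are invented for this example and are not taken from IXC-2.5-Reward.

```python
def length_penalized_reward(
    reward_score: float,
    num_tokens: int,
    token_budget: int = 512,
    penalty_per_token: float = 0.01,
) -> float:
    """Hypothetical reward shaping: subtract a linear penalty for every
    token the response spends beyond `token_budget`.

    Responses within the budget keep their raw reward-model score, so the
    policy is only discouraged from padding answers, not from answering.
    """
    if num_tokens < 0:
        raise ValueError("num_tokens must be non-negative")
    overflow = max(0, num_tokens - token_budget)
    return reward_score - penalty_per_token * overflow


if __name__ == "__main__":
    # Same raw score, different lengths: only the 612-token answer is penalized.
    print(length_penalized_reward(2.0, num_tokens=400))
    print(length_penalized_reward(2.0, num_tokens=612))
```

A penalty that only applies past a budget is one simple way to stop a policy from inflating its reward with longer answers; real systems may instead filter training data or normalize by length.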

Performance Highlights

IXC-2.5-Reward sets a new standard in multi-modal reward modeling, achieving 70.0% accuracy on VL-RewardBench and outperforming leading models such as Gemini-1.5-Pro and GPT-4o. It also performs strongly on text-only reward benchmarks, showing robust language capabilities alongside its multi-modal skills.
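Reward-model benchmarks such as VL-RewardBench typically report how often a model ranks the human-preferred response above the rejected one. The sketch below computes that pairwise accuracy under simplifying assumptions (ties count as errors, no per-category averaging); the benchmark's official scoring may differ, and the scores used here are made up.

```python
from typing import Callable, Sequence, Tuple


def pairwise_accuracy(
    pairs: Sequence[Tuple[str, str]],
    score_fn: Callable[[str], float],
) -> float:
    """Fraction of (chosen, rejected) pairs where the chosen item scores higher.

    Ties count as errors, so a reward model that scores everything equally
    gets 0% rather than 50%.
    """
    if not pairs:
        raise ValueError("need at least one pair")
    correct = sum(1 for chosen, rejected in pairs if score_fn(chosen) > score_fn(rejected))
    return correct / len(pairs)


if __name__ == "__main__":
    # Hypothetical reward scores for four responses, keyed by response ID.
    scores = {"resp_a": 2.0, "resp_b": 1.0, "resp_c": 0.5, "resp_d": 3.0}
    pairs = [("resp_a", "resp_b"), ("resp_c", "resp_d")]
    print(pairwise_accuracy(pairs, scores.__getitem__))
```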

Applications and Benefits

The researchers demonstrate three key applications of IXC-2.5-Reward:

- Reinforcement Learning Support: Acts as a guiding signal for effective model training.

- Response Optimization: Improves performance by selecting the best response from multiple candidates at inference time (Best-of-N sampling).

- Data Quality Improvement: Identifies and removes problematic samples from training datasets.
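Both response selection and data cleaning treat the reward model as a scoring function. The sketch below assumes a generic `score_fn(prompt, response)` interface (hypothetical, not the IXC-2.5-Reward API) and uses a toy word-overlap scorer, `toy_score`, in place of a real model; the threshold value is likewise an arbitrary example.

```python
from typing import Callable, List, Sequence, Tuple

# A reward model viewed as a black box: (prompt, response) -> scalar score.
ScoreFn = Callable[[str, str], float]


def best_of_n(prompt: str, candidates: Sequence[str], score_fn: ScoreFn) -> str:
    """Return the candidate response with the highest reward (Best-of-N)."""
    if not candidates:
        raise ValueError("need at least one candidate")
    return max(candidates, key=lambda response: score_fn(prompt, response))


def filter_samples(
    samples: Sequence[Tuple[str, str]], score_fn: ScoreFn, threshold: float
) -> List[Tuple[str, str]]:
    """Keep (prompt, response) training samples whose reward meets the threshold."""
    return [(p, r) for p, r in samples if score_fn(p, r) >= threshold]


def toy_score(prompt: str, response: str) -> float:
    """Stand-in for a real reward model: counts words shared with the prompt."""
    return float(len(set(prompt.split()) & set(response.split())))


if __name__ == "__main__":
    question = "what color is the sky"
    print(best_of_n(question, ["grass is green", "the sky is blue"], toy_score))
    data = [(question, "the sky is blue"), (question, "bananas")]
    print(filter_samples(data, toy_score, threshold=1.0))
```

Swapping `toy_score` for a call into an actual reward model leaves both helpers unchanged, which is the main appeal of keeping the scorer behind a plain callable.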

A Major Advancement in AI

This work is a significant step forward for multi-modal AI, addressing scalability, versatility, and alignment with human preferences. IXC-2.5-Reward lays the groundwork for future multi-modal systems that are more effective in real-world applications.

Learn More

See the research paper and GitHub repository for more details.

Transform Your Business with AI

To stay competitive, consider how AI can enhance your operations:

- Identify Automation Opportunities: Find areas in customer interactions that can benefit from AI.

- Define KPIs: Ensure your AI efforts lead to measurable business outcomes.

- Select an AI Solution: Choose customizable tools that meet your specific needs.

- Implement Gradually: Start with pilot projects, gather data, and expand thoughtfully.
