Introduction to ViTok

Modern image and video generators rely on tokenization: an auto-encoder compresses raw pixels into a compact latent representation that the generator then models. Generator architectures have improved significantly, but tokenizers, especially those built on convolutional neural networks (CNNs), have received far less attention. This raises the question of how scaling and improving the tokenizer affects reconstruction accuracy and the quality of generated content. Progress has been held back by architectural constraints and limited dataset sizes, both of which restrict scalability and usability. It is also crucial to understand how auto-encoder design choices affect the trade-off between output quality and compression.

What is ViTok?

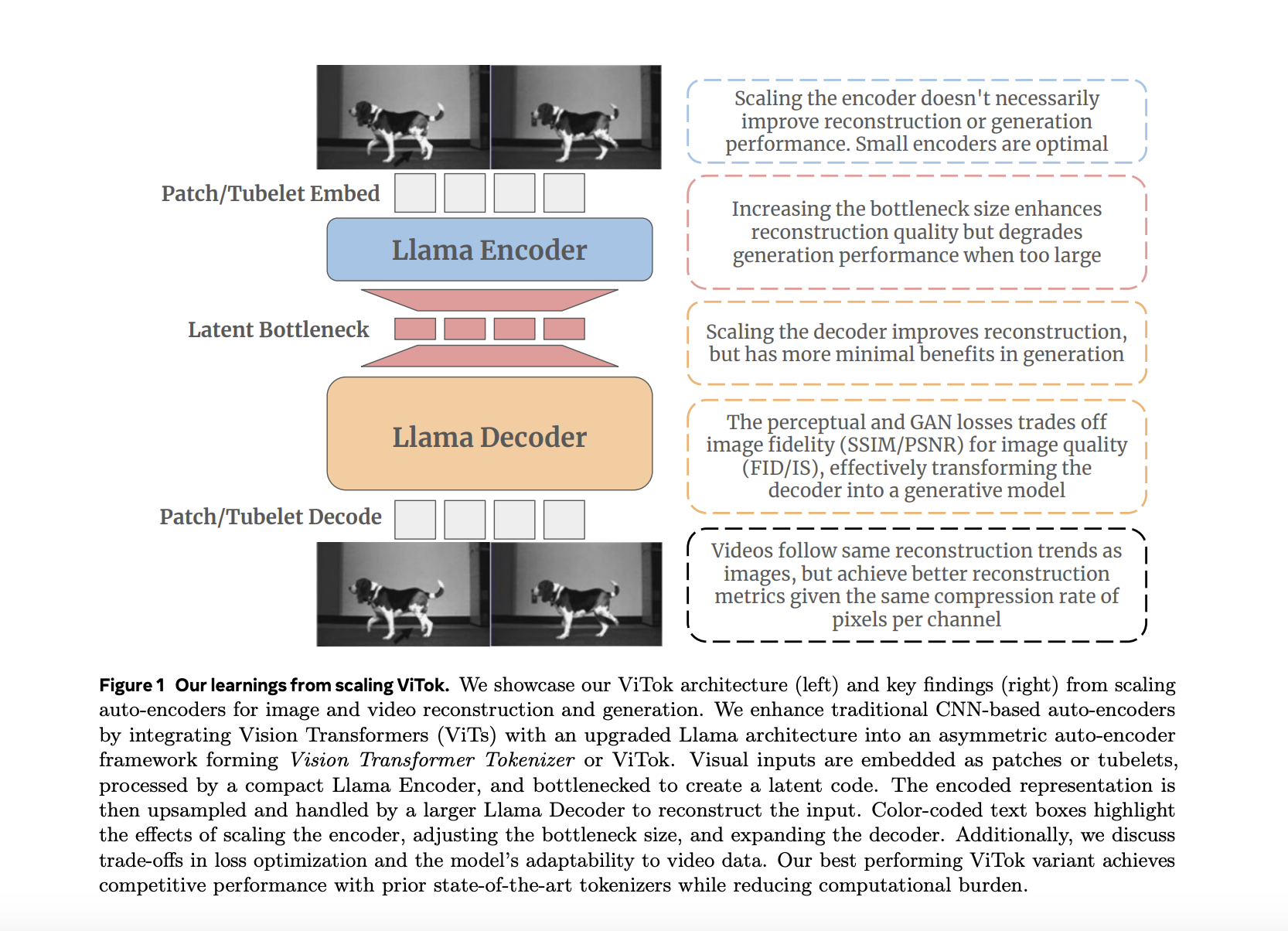

Researchers from Meta and UT Austin have developed ViTok, a new auto-encoder built on the Vision Transformer (ViT) architecture. Unlike traditional CNN-based tokenizers, ViTok uses a Transformer backbone that draws on the Llama architecture. This design allows it to tokenize images and videos at scale and to train effectively on large, varied datasets.

Key Features of ViTok

- Bottleneck Scaling: Analyzes how the size of latent codes affects performance.

- Encoder Scaling: Studies the effects of increasing encoder complexity.

- Decoder Scaling: Evaluates how larger decoders impact reconstruction and generation.
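
To make the bottleneck question concrete, the sketch below counts how many latent values a patch-based auto-encoder would keep for a given input, and the resulting compression ratio. It is an illustration only: the function name `latent_budget`, its parameters, and the example sizes are assumptions made for this article, not figures taken from the ViTok paper.

```python
def latent_budget(height, width, patch, channels, frames=1, tubelet=1):
    """Return (num_tokens, latent_floats, compression_ratio).

    Hypothetical helper: assumes an RGB input that is split into
    non-overlapping patches (images) or tubelets (videos), with each
    resulting token compressed to `channels` latent values.
    """
    if height % patch or width % patch or frames % tubelet:
        raise ValueError("input size must be divisible by patch and tubelet size")

    # One token per patch (image) or per tubelet (video).
    num_tokens = (frames // tubelet) * (height // patch) * (width // patch)

    # Total number of values that pass through the bottleneck.
    latent_floats = num_tokens * channels

    # Number of values in the raw RGB input.
    input_values = frames * height * width * 3

    return num_tokens, latent_floats, input_values / latent_floats


# Example with assumed sizes: a 256x256 image, 16x16 patches, 16 latent channels.
tokens, floats, ratio = latent_budget(256, 256, patch=16, channels=16)
print(tokens, floats, ratio)  # 256 4096 48.0
```

Raising `channels` or shrinking `patch` increases the latent budget, which is the quantity the bottleneck-scaling study varies.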

Technical Advantages of ViTok

ViTok employs an asymmetric auto-encoder with unique features:

- Patch and Tubelet Embedding: Breaks down inputs into patches for images and tubelets for videos to capture essential details.

- Latent Bottleneck: The size of the latent space balances compression and quality.

- Encoder and Decoder Design: Uses a lightweight encoder for efficiency and a powerful decoder for high-quality reconstruction.

Building on Vision Transformers makes ViTok easier to scale, while the more powerful decoder is what produces its high-quality outputs.
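
The patch and tubelet embedding step described above can be sketched with plain NumPy reshapes. This is a minimal illustration under stated assumptions, not ViTok's implementation: the function names `tubelet_tokens`, `image_tokens`, and `project_to_bottleneck` are hypothetical, and the random projection merely stands in for a learned Transformer encoder.

```python
import numpy as np


def tubelet_tokens(video, patch, tubelet):
    """Split a (T, H, W, C) array into flattened, non-overlapping tubelets.

    Returns an array of shape (N, tubelet * patch * patch * C), where
    N = (T // tubelet) * (H // patch) * (W // patch).
    """
    T, H, W, C = video.shape
    if T % tubelet or H % patch or W % patch:
        raise ValueError("video size must be divisible by tubelet and patch size")
    t, h, w = T // tubelet, H // patch, W // patch
    x = video.reshape(t, tubelet, h, patch, w, patch, C)
    # Group the three grid axes first, then the contents of each tubelet.
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)
    return x.reshape(t * h * w, tubelet * patch * patch * C)


def image_tokens(image, patch):
    """Treat an (H, W, C) image as a one-frame video with tubelet size 1."""
    return tubelet_tokens(image[None], patch, tubelet=1)


def project_to_bottleneck(tokens, channels, seed=0):
    """Map each token to `channels` values.

    Assumption: a fixed random linear map is used here purely as a
    placeholder for a learned encoder.
    """
    rng = np.random.default_rng(seed)
    weights = rng.standard_normal((tokens.shape[1], channels))
    return tokens @ weights


# Assumed toy input: 4 frames of 8x8 RGB, 4x4 patches, tubelets of 2 frames.
video = np.arange(4 * 8 * 8 * 3, dtype=np.float64).reshape(4, 8, 8, 3)
tokens = tubelet_tokens(video, patch=4, tubelet=2)
latent = project_to_bottleneck(tokens, channels=16)
print(tokens.shape, latent.shape)  # (8, 96) (8, 16)
```

The second dimension of `latent` is the per-token bottleneck width; together with the token count it sets how much information the decoder has to work with.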

Performance Insights

ViTok was tested on ImageNet-1K and COCO for images and on UCF-101 for videos. Key insights include:

- Bottleneck Scaling: Larger bottleneck sizes improve reconstruction but complicate generative tasks.

- Encoder Scaling: Bigger encoders offer limited benefits and may hinder generative performance.

- Decoder Scaling: Larger decoders improve reconstruction quality, but their generative benefits vary.

Overall, ViTok demonstrates:

- Top metrics for image reconstruction at various resolutions.

- Enhanced video reconstruction scores, showing adaptability.

- Strong generative performance with lower computational needs.
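
For readers unfamiliar with reconstruction metrics, the sketch below computes peak signal-to-noise ratio (PSNR), one widely used measure of how closely a decoded image matches its input. The article does not list which metrics were reported, so PSNR is shown here only as a representative example; the function name `psnr` and the 8-bit `max_value` default are assumptions.

```python
import numpy as np


def psnr(original, reconstruction, max_value=255.0):
    """Peak signal-to-noise ratio in decibels; higher means a closer match."""
    original = np.asarray(original, dtype=np.float64)
    reconstruction = np.asarray(reconstruction, dtype=np.float64)
    if original.shape != reconstruction.shape:
        raise ValueError("inputs must have the same shape")

    # Mean squared error over every pixel and channel.
    mse = np.mean((original - reconstruction) ** 2)
    if mse == 0:
        return float("inf")  # identical inputs
    return 10.0 * np.log10(max_value ** 2 / mse)


# Toy check with assumed data: every pixel is off by exactly one intensity level.
clean = np.zeros((4, 4, 3))
noisy = np.ones((4, 4, 3))
print(round(psnr(clean, noisy), 2))  # 48.13
```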

Conclusion

ViTok offers a scalable, Transformer-based alternative to traditional CNN tokenizers, addressing challenges in their design and optimization. Its strong performance in both reconstruction and generation highlights its potential for a wide range of image and video applications.

For more information, check out the research paper.
