Understanding the Importance of LLMs

Large Language Models (LLMs) are vital in fields like education, healthcare, and customer service, where understanding natural language is key. However, adapting LLMs to new tasks is challenging and often requires significant time and compute. Traditional fine-tuning can also lead to overfitting, which limits a model's ability to handle unseen tasks.

Introducing Low-Rank Adaptation (LoRA)

LoRA is a method that freezes the pretrained weights and trains small low-rank matrices added to selected layers, making fine-tuning cheaper. However, it can be prone to overfitting and struggles to scale across many tasks, which limits its effectiveness.
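To make the idea concrete, here is a minimal NumPy sketch of a LoRA-style layer. It follows the standard formulation (a frozen weight `W` plus a trainable low-rank product `B @ A`), but the layer sizes, the rank, and the `lora_forward` helper are illustrative assumptions, not details from the article.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative sizes only; real layers are much larger.
d_out, d_in, rank = 64, 48, 4

W = rng.standard_normal((d_out, d_in))          # frozen pretrained weight
A = rng.standard_normal((rank, d_in)) * 0.01    # trainable, small random init
B = np.zeros((d_out, rank))                     # trainable, zero init: update starts at 0


def lora_forward(x, W, A, B, scale=1.0):
    """Frozen projection plus the low-rank correction (B @ A) applied to x."""
    return x @ W.T + scale * (x @ A.T) @ B.T


x = rng.standard_normal((2, d_in))
y = lora_forward(x, W, A, B)

full_params = W.size            # what full fine-tuning would train
lora_params = A.size + B.size   # what LoRA trains instead
print(full_params, lora_params)
```

Because `B` starts at zero, the adapted layer initially behaves exactly like the pretrained one; only `A` and `B` would receive gradient updates during training.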

Transformer²: A New Solution

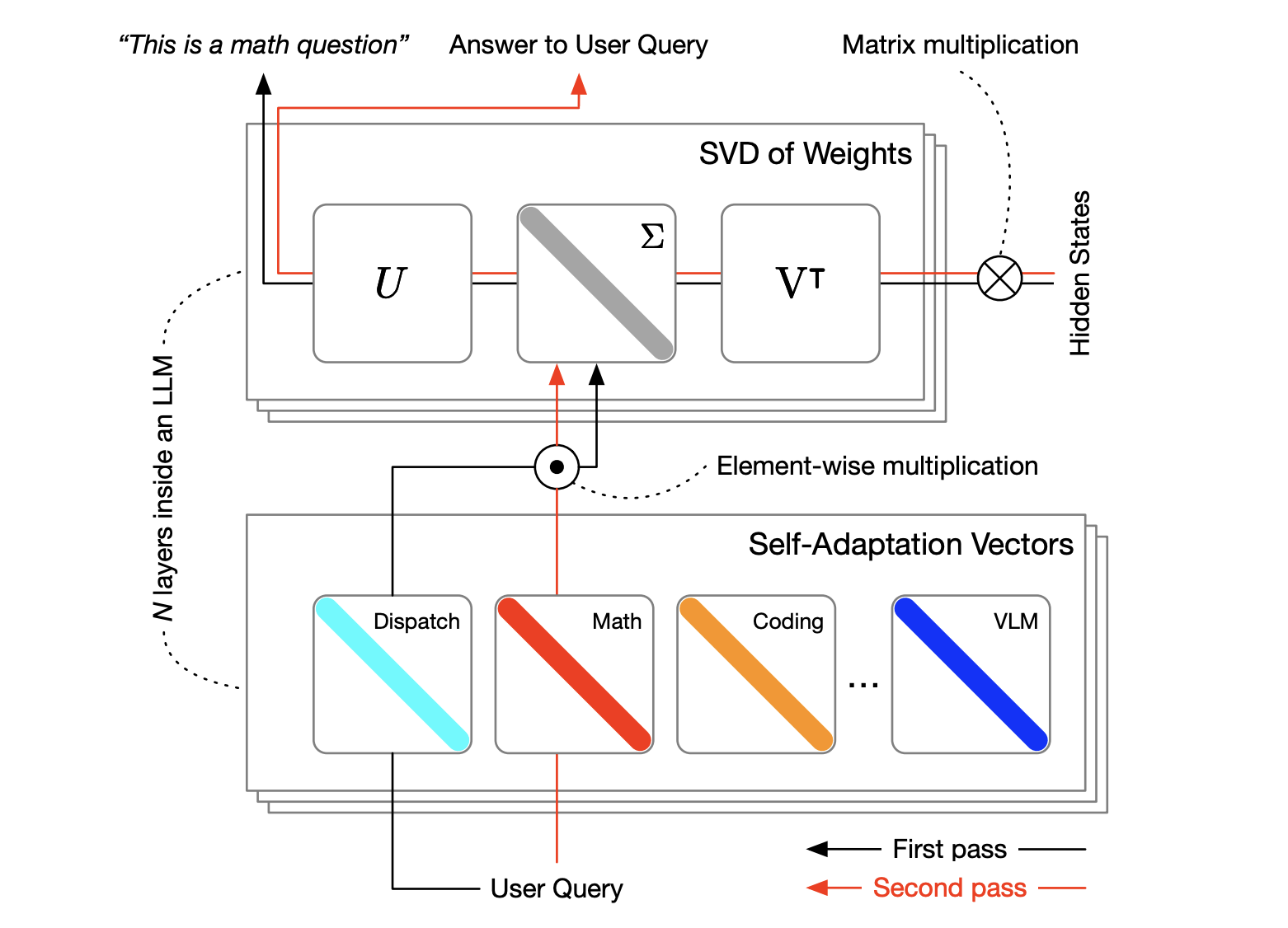

The team at Sakana AI and the Institute of Science Tokyo developed Transformer², a framework that adapts LLMs in real time without extensive retraining. It uses a technique called Singular Value Fine-tuning (SVF), which adjusts only the singular values of the model's weight matrices, allowing dynamic adjustments with less computational effort.

Key Features of Transformer²

- Efficient Adaptation: SVF modifies only the singular values of the model's weight matrices, reducing the number of trainable parameters.

- Dynamic Task Handling: It uses reinforcement learning to create specialized “expert” vectors for specific tasks.

- Two-Pass Mechanism: At inference, a first pass identifies the task requirements, and a second pass combines the relevant expert vectors to produce the final response.
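The features above can be sketched in a few lines of NumPy. This is a simplified illustration, not the authors' implementation: the matrix size, the random expert vectors, and the helper names `apply_expert` and `second_pass_weight` are all assumptions, and the reinforcement learning step that would train the vectors is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)
W = rng.standard_normal((6, 4))   # stand-in for one pretrained weight matrix

# Decompose once; U, s and Vt stay frozen.
U, s, Vt = np.linalg.svd(W, full_matrices=False)


def apply_expert(z):
    """Rebuild the weight with each singular value rescaled by z."""
    return (U * (s * z)) @ Vt


# Hypothetical expert vectors; in the article these are learned with RL.
experts = {
    "math": 1.0 + 0.1 * rng.standard_normal(s.size),
    "code": 1.0 + 0.1 * rng.standard_normal(s.size),
}


def second_pass_weight(task_weights):
    """Mix expert vectors using weights assumed to come from a first pass."""
    z = sum(w * experts[name] for name, w in task_weights.items())
    return apply_expert(z)


W_identity = apply_expert(np.ones_like(s))                 # z = 1 leaves W unchanged
W_math = second_pass_weight({"math": 1.0, "code": 0.0})    # pure "math" expert
```

Each expert here is a single vector with one entry per singular value, which is why this style of adaptation needs so few trainable parameters per weight matrix.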

Performance Highlights

Transformer² has shown impressive results in benchmark tests:

- Over 39% improvement in visual question-answering tasks.

- Approximately 4% better performance on math problems compared to traditional fine-tuning methods.

- Significant accuracy boosts in programming tasks, demonstrating versatility across different domains.

Efficiency and Scalability

SVF drastically reduces training times and computational needs, requiring less than 10% of the parameters used by LoRA. For instance, SVF needed only 0.39 million parameters for the GSM8K dataset, compared to 6.82 million with LoRA, while still achieving superior performance.
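As a quick check on these figures, the short script below computes the ratio from the two parameter counts quoted above, then shows why the gap arises for a single weight matrix. The layer shape and LoRA rank in the second half are hypothetical values chosen only for illustration.

```python
# Parameter counts reported in the article for GSM8K.
svf_params = 0.39e6
lora_params = 6.82e6

ratio = svf_params / lora_params
print(f"SVF uses {ratio:.1%} of LoRA's trainable parameters")


def svf_params_per_matrix(m, n):
    """One scale per singular value of an m x n weight matrix."""
    return min(m, n)


def lora_params_per_matrix(m, n, rank):
    """Two low-rank factors of shapes (m x rank) and (rank x n)."""
    return rank * (m + n)


# Hypothetical layer shape and rank, not taken from the article.
m, n, rank = 4096, 4096, 16
per_matrix_ratio = svf_params_per_matrix(m, n) / lora_params_per_matrix(m, n, rank)
print(f"Per-matrix ratio for this example: {per_matrix_ratio:.3f}")
```

The reported counts work out to roughly 5.7%, consistent with the "less than 10%" claim.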

Conclusion

The advancements made by the Sakana AI team with Transformer² and its SVF method represent a significant step forward in self-adaptive AI systems. This framework not only addresses current challenges but also lays the groundwork for future developments in adaptive AI technologies.

