Understanding Video with AI: The Challenge

Video understanding is a tough challenge for AI. Unlike still images, videos have complex movements and require understanding both time and space. This makes it hard for AI models to create accurate descriptions or answer specific questions. Problems like hallucination, where AI makes up details, further reduce trust in these systems. Even with advancements in models like GPT-4o and Gemini-1.5-Pro, reaching human-level understanding of videos is still difficult. Key issues include accurately perceiving events and understanding sequences while minimizing hallucinations.

Introducing Tarsier2: A Breakthrough Solution

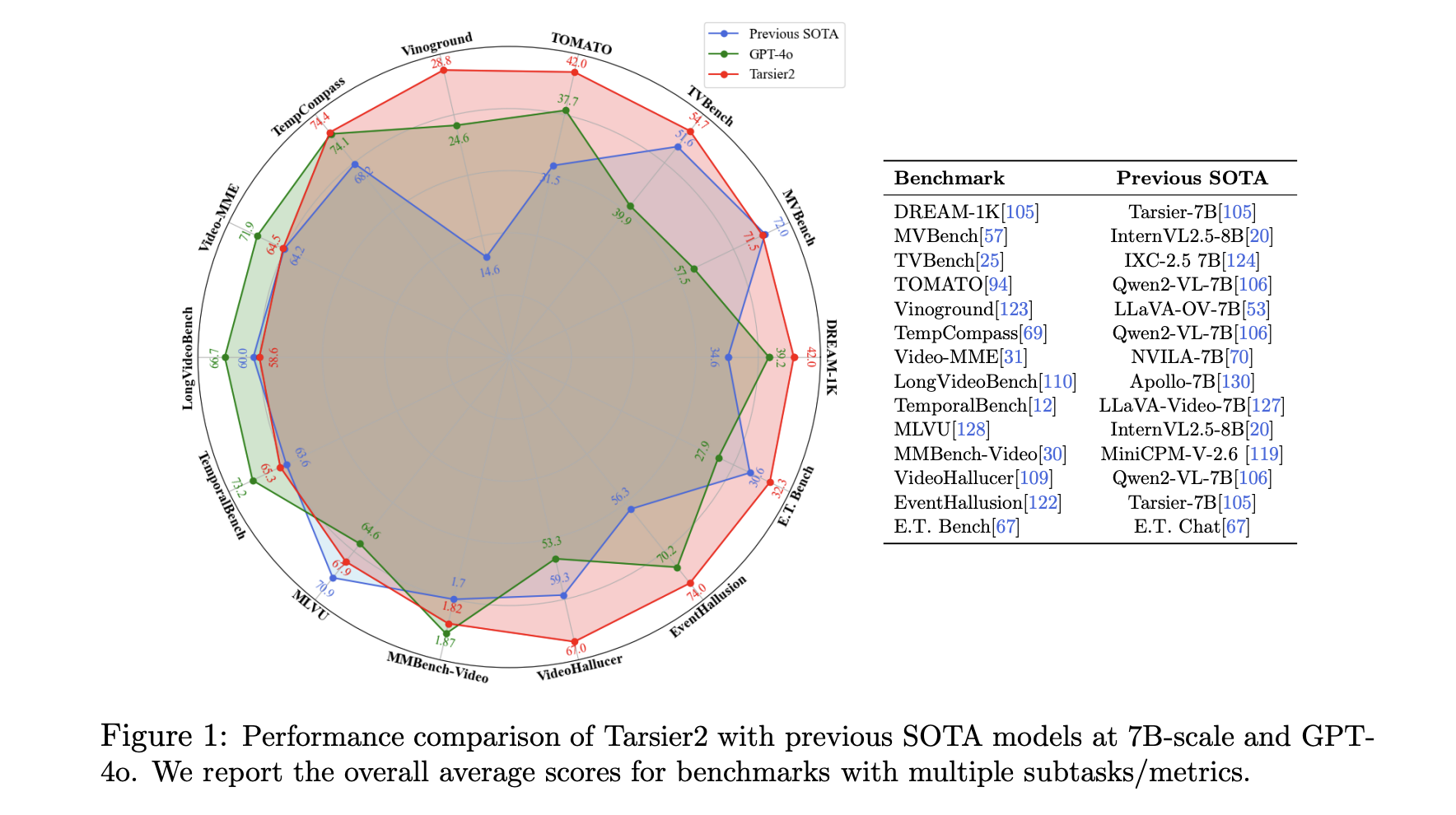

ByteDance researchers have developed Tarsier2, a large vision-language model (LVLM) with 7 billion parameters, aimed at overcoming the challenges of video understanding. Tarsier2 excels at generating detailed video descriptions, outperforming models like GPT-4o and Gemini-1.5-Pro. It also performs well in tasks such as question-answering and grounding. The gains come from a pre-training dataset of 40 million video-text pairs combined with a multi-stage training recipe.

Key Features and Benefits

- Pre-training: Utilizes a large dataset of video-text pairs, including commentary videos that capture both simple actions and complex plots.

- Supervised Fine-Tuning (SFT): Aligns each described event with the video frames in which it occurs, reducing hallucinations and improving precision.

- Direct Preference Optimization (DPO): Trains the model on automatically generated preference data so that it favors more accurate descriptions over less accurate ones.
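
To make the DPO step more concrete, here is a minimal sketch of the standard DPO loss for a single preference pair. It is not taken from the Tarsier2 code: the function name `dpo_loss`, the `beta` value, and the log-probabilities in the usage lines are all illustrative assumptions. The loss falls when the model being trained raises the likelihood of the preferred description, relative to a frozen reference model, more than it raises the likelihood of the rejected one.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Standard DPO loss for one (chosen, rejected) pair.

    Inputs are sequence log-probabilities. All names and the default
    beta are illustrative assumptions, not Tarsier2's actual settings.
    """
    # How much more the policy likes each answer than the reference does.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(logits)), written in a numerically stable form.
    if logits >= 0:
        return math.log1p(math.exp(-logits))
    return -logits + math.log1p(math.exp(logits))


# Hypothetical log-probabilities for one pair of video descriptions.
good = dpo_loss(-5.0, -9.0, -6.0, -7.0)  # policy favors the chosen answer
bad = dpo_loss(-9.0, -5.0, -7.0, -6.0)   # policy favors the rejected answer
print(good < bad)
```

In a real training run the log-probabilities would come from the model and a frozen copy of it, and the loss would be averaged over a batch of preference pairs.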

Outstanding Results

Tarsier2 achieves strong results across a range of benchmarks. In human side-by-side evaluations of video descriptions, it shows an 8.6% performance advantage over GPT-4o and a 24.9% advantage over Gemini-1.5-Pro. It is the first model to exceed a 40% overall recall score on the DREAM-1K benchmark, demonstrating its ability to detect and describe dynamic actions accurately. Tarsier2 also sets new state-of-the-art results on 15 public benchmarks, spanning tasks such as video question-answering and temporal reasoning.
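
For readers unfamiliar with recall as a description metric, the sketch below shows one simplified way such scores can be computed from event counts. It assumes that a description and a reference are each broken into events and that some judge decides which events are matched. The function name `event_scores` and the counts in the usage lines are hypothetical, and this is not the benchmark's actual evaluation code.

```python
def event_scores(ref_events, ref_events_covered,
                 pred_events, pred_events_supported):
    """Precision, recall and F1 from event counts (simplified, assumed setup).

    ref_events:            events in the reference description
    ref_events_covered:    reference events the model's description mentions
    pred_events:           events in the model's description
    pred_events_supported: model events that the reference confirms
    """
    recall = ref_events_covered / ref_events if ref_events else 0.0
    precision = pred_events_supported / pred_events if pred_events else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Hypothetical counts for a single video.
precision, recall, f1 = event_scores(10, 4, 8, 6)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

Under this reading, a recall of 40% means the description covers four out of every ten reference events, while precision tracks how many described events are real rather than hallucinated.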

Conclusion: The Future of Video Understanding

Tarsier2 represents a major advancement in video understanding by tackling issues like temporal alignment and hallucination reduction. The model not only surpasses existing alternatives but also offers a scalable framework for future developments. As video content continues to grow, Tarsier2 could support applications ranging from content creation to intelligent surveillance.

For more information, check out the Paper. All credit goes to the researchers behind this project.

Transform Your Business with AI

To stay competitive, consider how Tarsier2 can enhance your operations:

- Identify Automation Opportunities: Find customer interaction points that can benefit from AI.

- Define KPIs: Ensure your AI initiatives have measurable impacts.

- Select an AI Solution: Choose tools that fit your needs and allow for customization.

- Implement Gradually: Start small, gather data, and expand wisely.
