Enhancing Large Language Models with Cache-Augmented Generation

Overview of Cache-Augmented Generation (CAG)

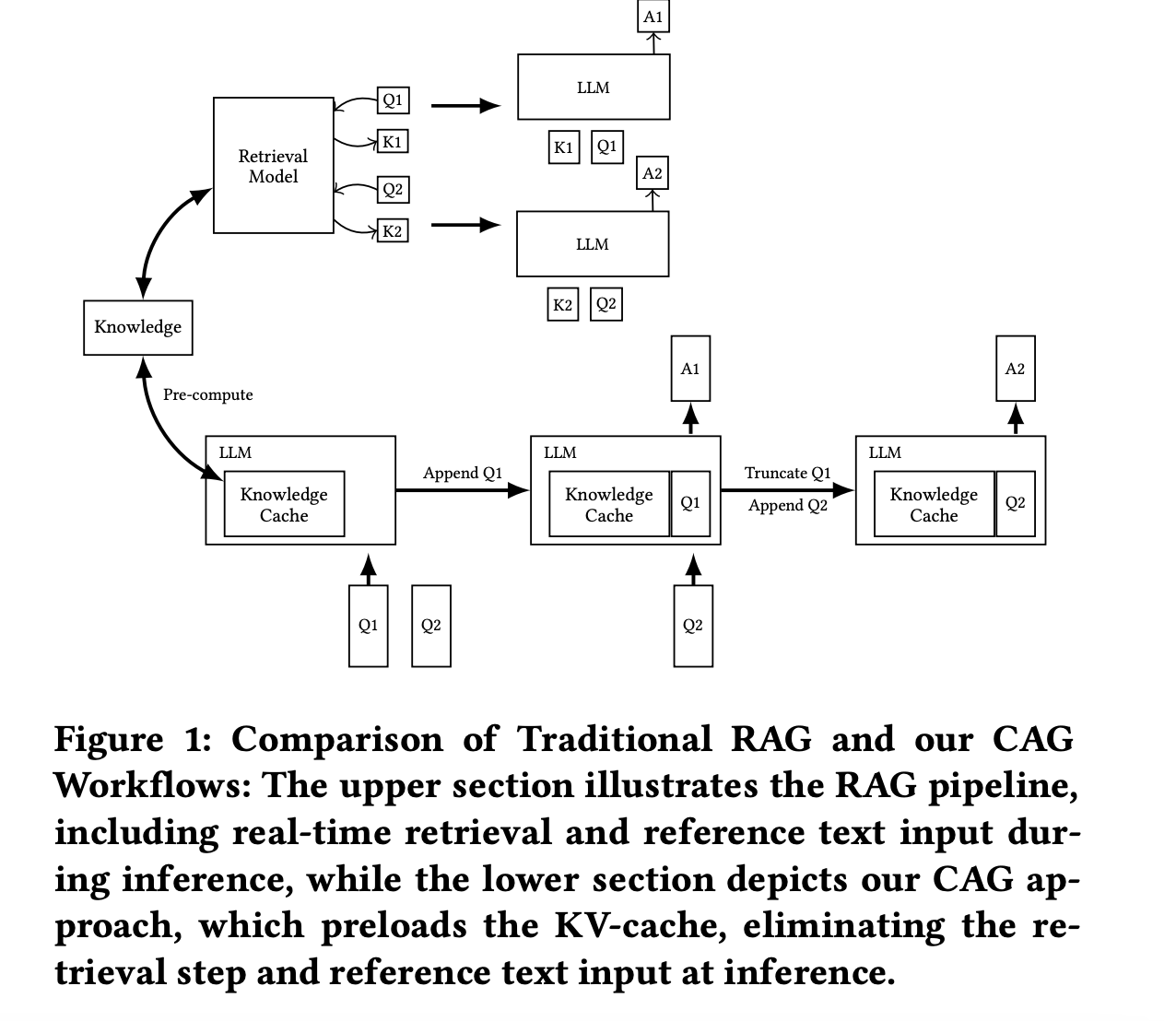

Retrieval-augmented generation (RAG) improves large language model (LLM) responses by fetching external documents at query time and adding them to the prompt. The retrieval step has costs, though: it adds latency to every request, and when the retriever selects the wrong documents, the answer suffers. Researchers are therefore exploring methods that keep the benefits of knowledge integration without retrieving at query time.

Benefits of Long-Context LLMs

Recent long-context LLMs can process large amounts of text in a single prompt. This makes them effective for tasks such as document understanding, multi-turn conversation, and summarization. With models such as GPT-4 and Claude 3.5, placing the relevant material directly in the context window can match or outperform a traditional RAG pipeline, provided that the material fits within the window.

Introducing Cache-Augmented Generation (CAG)

Researchers from Taiwan have proposed a method called Cache-Augmented Generation (CAG). It uses the extended context windows of LLMs to avoid real-time retrieval. The relevant documents are preloaded into the model’s context, and the resulting key-value (KV) cache is computed ahead of time and stored. At query time the model reuses that cache, so it can respond without a retrieval step, which addresses the latency and document-selection problems of RAG systems.

How CAG Works

The CAG framework operates in three phases:

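- External Knowledge Preloading: The relevant documents are passed through the model once, and the resulting key-value (KV) cache is stored.

- Inference: The stored cache is loaded together with the user’s query, so the model generates its response from the preloaded information without re-reading the documents.

- Cache Reset: The cache grows as query and answer tokens are appended to it. Resetting truncates those appended entries, leaving the preloaded portion ready for the next query.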

This method allows for efficient knowledge integration without the delays of traditional retrieval methods.
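The three phases above can be illustrated with a toy sketch. This is not the authors' implementation: `ToyCagSession` and its methods are hypothetical names, and the "cache" here is a plain list of whitespace-separated tokens standing in for the per-layer key and value tensors a real model would hold. The sketch only shows the bookkeeping: documents are encoded once, each query is appended on top, and a reset truncates back to the preloaded length.

```python
from dataclasses import dataclass, field


@dataclass
class ToyCagSession:
    """Toy stand-in for an LLM session with a key-value (KV) cache.

    Assumption: each cache entry is just a token string. A real model
    would store key and value tensors for every layer instead.
    """

    kv_cache: list = field(default_factory=list)
    preload_len: int = 0      # number of entries that belong to the documents
    tokens_encoded: int = 0   # running count of encoding work performed

    def _encode(self, text: str) -> None:
        # Append one cache entry per whitespace-separated token.
        for token in text.split():
            self.kv_cache.append(token)
            self.tokens_encoded += 1

    def preload(self, documents: list[str]) -> None:
        """Phase 1: encode every document once and record the cache length."""
        self.kv_cache.clear()
        for doc in documents:
            self._encode(doc)
        self.preload_len = len(self.kv_cache)

    def answer(self, query: str) -> str:
        """Phase 2: encode only the query on top of the preloaded cache."""
        self._encode(query)
        # Placeholder for generation: report how much context is visible.
        return f"answered using {len(self.kv_cache)} cached tokens"

    def reset(self) -> None:
        """Phase 3: drop everything appended after preloading."""
        del self.kv_cache[self.preload_len:]


if __name__ == "__main__":
    session = ToyCagSession()
    session.preload([
        "cache augmented generation preloads documents",
        "rag retrieves at query time",
    ])
    print(session.answer("what does cag preload"))
    session.reset()  # the documents stay cached; only the query is removed
    print(session.answer("when does rag retrieve"))
    print("total tokens encoded:", session.tokens_encoded)
```

The point of the design is that `preload` runs once while `answer` and `reset` run per query, so the cost of reading the documents is not paid again for each question.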

Performance and Advantages

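The researchers report that, in their experiments, CAG outperforms traditional RAG systems in both accuracy and response time. Because the full document set is already in context, there is no retrieval step that could select the wrong passages, and the model can reason over all of the material at once. CAG also reduces generation time, especially for longer reference texts, because the preloaded documents are encoded once and their cache is reused rather than recomputed for every query.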
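A rough back-of-the-envelope model shows why the saving grows with longer reference texts. The sketch below only counts how many tokens must be encoded across a batch of queries. It ignores retrieval time and the cost of attending over the cached context, and the function names and example numbers are illustrative assumptions, not figures from the paper.

```python
def tokens_encoded_without_cache(context_tokens: int, query_tokens: int, n_queries: int) -> int:
    """Every query re-encodes the full context plus the query itself."""
    return n_queries * (context_tokens + query_tokens)


def tokens_encoded_with_cache(context_tokens: int, query_tokens: int, n_queries: int) -> int:
    """The context is encoded once; each query only adds its own tokens."""
    return context_tokens + n_queries * query_tokens


def savings_ratio(context_tokens: int, query_tokens: int, n_queries: int) -> float:
    """How many times fewer tokens are encoded when the cache is reused."""
    without = tokens_encoded_without_cache(context_tokens, query_tokens, n_queries)
    with_cache = tokens_encoded_with_cache(context_tokens, query_tokens, n_queries)
    return without / with_cache


if __name__ == "__main__":
    # Hypothetical workload: 50,000 context tokens, 50-token queries, 100 queries.
    print(tokens_encoded_without_cache(50_000, 50, 100))  # 5005000
    print(tokens_encoded_with_cache(50_000, 50, 100))     # 55000
    print(savings_ratio(50_000, 50, 100))                 # 91.0
```

Under these assumptions the gap widens as the context grows relative to the query, which matches the observation that the benefit is largest for longer texts.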

Conclusion and Future Directions

The CAG framework offers a simpler alternative to RAG for knowledge integration in LLMs, particularly when the knowledge base is small enough to fit in the model’s context window. By removing the retrieval step, it trades some flexibility for lower latency and fewer points of failure. As context windows continue to grow, the range of applications where this trade-off makes sense is likely to expand.
