Understanding Language Model Pre-Training

Pre-training is what gives language models (LMs) their ability to understand and generate text. A major challenge, however, is making effective use of training data drawn from very different sources, such as Wikipedia, blogs, and social media. Standard pre-training treats every document the same way regardless of where it came from, which leads to two main issues:

Key Issues:

- Missed Contextual Signals: Ignoring metadata such as source URLs means LMs miss context that could help them interpret a document.

- Inefficiency in Specialized Tasks: Treating all data types equally makes the model less effective on tasks that require domain-specific knowledge.

Together, these issues make training less efficient, raise compute costs, and lower performance on downstream tasks. Addressing them is key to building better language models.

Introducing MeCo: A Practical Solution

Researchers from Princeton University have developed a method called Metadata Conditioning then Cooldown (MeCo) to tackle these pre-training challenges. MeCo uses available metadata, like source URLs, during training to help the model connect documents with their context.
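To make the idea of URL metadata concrete, here is a minimal Python sketch that turns a document’s source URL into a metadata line in the “URL: wikipedia.org” style this article uses. The helper name `metadata_prefix` is hypothetical, and keeping only the hostname (minus a leading “www.”) is an assumption; MeCo’s exact normalization may differ.

```python
from urllib.parse import urlparse


def metadata_prefix(source_url: str) -> str:
    """Build a metadata line such as 'URL: wikipedia.org' from a source URL.

    Hypothetical helper. Assumption: only the hostname is kept and a leading
    'www.' is dropped; the normalization used by MeCo may differ.
    """
    # urlparse(...).hostname is lowercased, or None if the string has no host.
    host = urlparse(source_url).hostname or ""
    if host.startswith("www."):
        host = host[len("www."):]
    return f"URL: {host}"


print(metadata_prefix("https://www.wikipedia.org/wiki/Language_model"))
print(metadata_prefix("https://en.wikipedia.org/wiki/Pre-training"))
```

Using only the hostname keeps the metadata short and shared across many documents from the same site, which is what lets the model associate a source with the kind of text it tends to contain.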

How MeCo Works:

- Metadata Conditioning (First 90%): For the first 90% of training, metadata such as “URL: wikipedia.org” is prepended to each document, and the model learns to link this metadata with the document’s content.

- Cooldown Phase (Last 10%): For the remaining 10% of training, documents are presented without metadata, so the model still performs well when no metadata is available at inference time.
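The two phases can be sketched in Python as a function that decides, per training step, whether to prepend metadata. This is a toy illustration, not the authors’ code: whitespace splitting stands in for a real tokenizer, the names `use_metadata` and `build_example` are hypothetical, and masking the loss on metadata tokens (so training targets the document text rather than predicting URLs) is an assumption about the setup.

```python
from typing import List, Tuple


def use_metadata(step: int, total_steps: int) -> bool:
    """True during metadata conditioning (first 90% of steps), False in cooldown."""
    # Integer arithmetic keeps the 90% cutoff exact at the phase boundary.
    cutoff = (total_steps * 9) // 10
    return step < cutoff


def build_example(metadata: str, document: str, step: int,
                  total_steps: int) -> Tuple[List[str], List[int]]:
    """Return (tokens, loss_mask) for one training document.

    Whitespace splitting stands in for a real tokenizer. The loss mask is 0 on
    metadata tokens (an assumption) so the loss covers document text only.
    """
    doc_tokens = document.split()
    if use_metadata(step, total_steps):
        # Conditioning phase: prepend the metadata tokens.
        meta_tokens = metadata.split()
        tokens = meta_tokens + doc_tokens
        loss_mask = [0] * len(meta_tokens) + [1] * len(doc_tokens)
    else:
        # Cooldown phase: plain documents, no metadata.
        tokens = doc_tokens
        loss_mask = [1] * len(doc_tokens)
    return tokens, loss_mask


doc = "Paris is the capital of France."
print(build_example("URL: wikipedia.org", doc, step=10, total_steps=100))
print(build_example("URL: wikipedia.org", doc, step=95, total_steps=100))
```

Because only the input formatting changes between phases, the model architecture and optimizer stay the same throughout, which is why the method adds so little overhead.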

This simple method reaches a given level of performance with less training and makes language models more adaptable to different tasks, with minimal extra effort.

Benefits of MeCo

- Improved Data Efficiency: A model trained with MeCo matches the performance of standard pre-training while using 33% less training data.

- Enhanced Adaptability: At inference time, prepending specific metadata steers the model toward outputs with desired traits, such as higher accuracy or lower toxicity.

- Minimal Overhead: MeCo adds little complexity or cost compared to more intensive methods like data filtering.
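To put the 33% figure in concrete terms, the sketch below computes how many tokens a MeCo run would need to match a baseline, and how that budget splits between the conditioning and cooldown phases. The 150B-token baseline is purely hypothetical, not a number reported for MeCo, and the helper `meco_token_plan` is illustrative.

```python
def meco_token_plan(baseline_tokens: float, savings: float = 0.33,
                    conditioning_fraction: float = 0.9) -> dict:
    """Split a MeCo token budget into conditioning and cooldown phases.

    baseline_tokens: tokens used by a standard pre-training run (hypothetical input).
    savings: fraction of data MeCo saves while matching the baseline (33% per the article).
    conditioning_fraction: share of the budget trained with metadata (90%).
    """
    total = baseline_tokens * (1.0 - savings)
    return {
        "total": total,
        "conditioning": total * conditioning_fraction,
        "cooldown": total * (1.0 - conditioning_fraction),
    }


plan = meco_token_plan(150e9)  # hypothetical 150B-token baseline
for phase, tokens in plan.items():
    print(f"{phase}: {tokens / 1e9:.2f}B tokens")
```

For the hypothetical 150B-token baseline this works out to about 100.5B tokens in total, of which about 90.45B are trained with metadata and about 10.05B without.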

Results and Insights

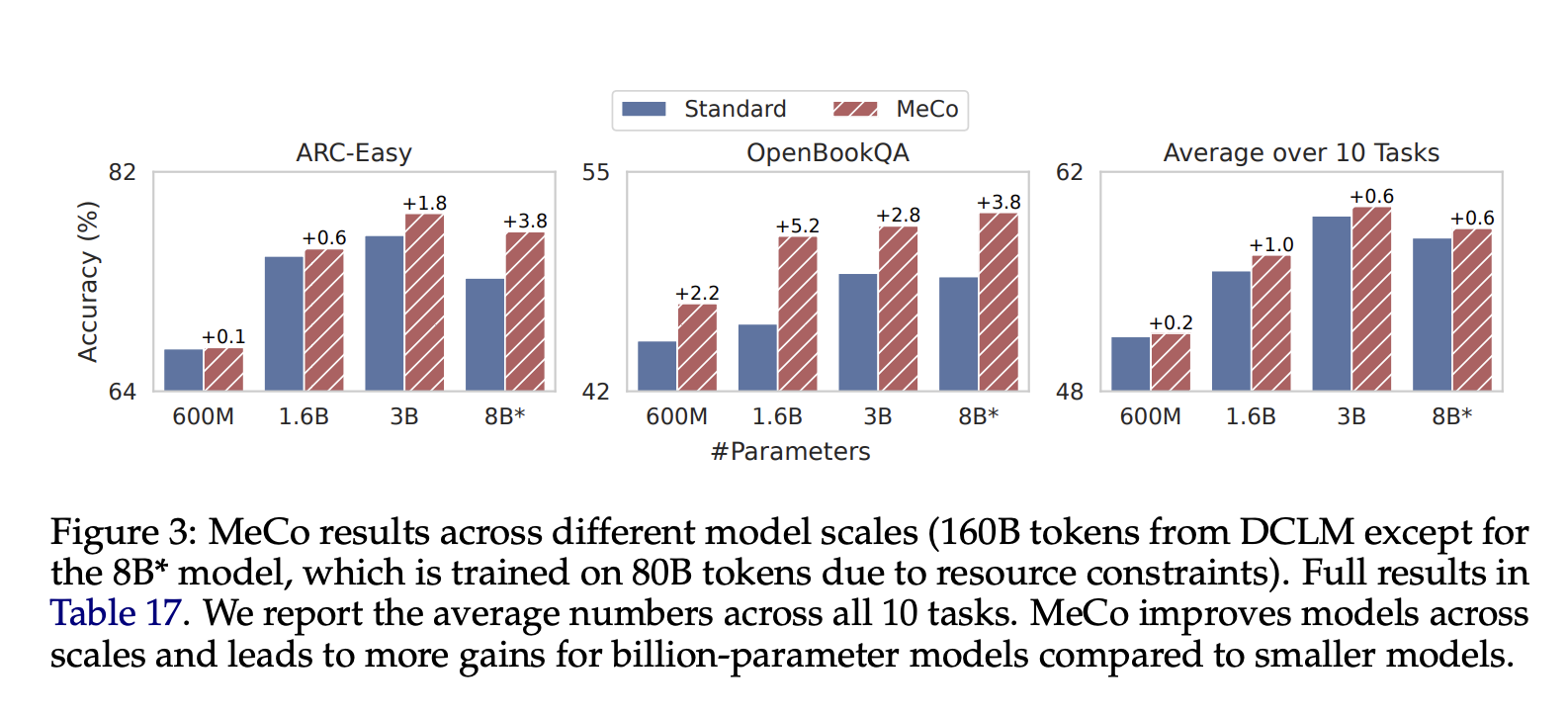

MeCo has shown consistent improvements across model sizes and pre-training datasets:

- It consistently outperformed standard pre-training on downstream tasks such as question answering.

- A 1.6B-parameter model trained with MeCo improved average performance by 1.0% across 10 downstream tasks.

- Because it needs less data to reach the same performance, MeCo can save substantial compute, especially in large-scale training.

Conditional Inference:

MeCo supports “conditional inference”: prepending specific metadata to a prompt steers the model’s output. For example, prepending “URL: wikipedia.org” lowers the toxicity of generated text.
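Conditional inference only changes the prompt, so it can be sketched as string construction. The function below is hypothetical: the “URL: …” line mirrors the “URL: wikipedia.org” format described for training, and the blank-line separator is an assumption rather than a documented interface. The resulting string would be passed to whatever generation call your model uses.

```python
def conditioned_prompt(prompt: str, domain: str = "wikipedia.org") -> str:
    """Prepend URL metadata to a prompt for MeCo-style conditional inference.

    Hypothetical helper: the 'URL: <domain>' line follows the training-time
    metadata format; the blank-line separator is an assumption.
    """
    return f"URL: {domain}\n\n{prompt}"


plain = "Write a short reply to this forum comment:"
print(conditioned_prompt(plain))                   # steer toward Wikipedia-like text
print(conditioned_prompt(plain, "example.com"))    # any other domain works the same way
```

The same model serves both modes: passing the plain prompt gives ordinary unconditioned generation, which the cooldown phase is meant to keep working well.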

Conclusion

MeCo is a simple and effective way to improve language model pre-training. By conditioning on metadata, it reduces the amount of training data needed and improves both performance and adaptability. Its simplicity and low computational cost make it an attractive option for researchers and practitioners.

As natural language processing continues to evolve, techniques like MeCo show how valuable metadata can be in refining training. Future research could explore combining MeCo with other techniques for further gains.
