Understanding Reward Functions in Reinforcement Learning

Reward functions are essential in reinforcement learning (RL) systems: they define the task the agent is meant to solve, but they are hard to design well. A common choice is a binary reward, which is simple to specify but gives the agent feedback so rarely that learning becomes difficult.

Intrinsic rewards offer a way to improve learning by supplying extra feedback between the sparse task rewards. However, designing them by hand requires deep domain knowledge, and even experts find it hard to balance the competing factors accurately.
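
To make the contrast concrete, here is a minimal Python sketch of a sparse binary reward and of one common way to combine it with an intrinsic bonus, a weighted sum. The function names, the weighting coefficient `beta`, and the bonus values are illustrative assumptions, not details from the work discussed in this article.

```python
def sparse_binary_reward(reached_goal: bool) -> float:
    """Binary task reward: 1.0 only when the goal is reached, else 0.0."""
    return 1.0 if reached_goal else 0.0


def total_reward(extrinsic: float, intrinsic: float, beta: float = 0.1) -> float:
    """Weighted sum of the task reward and an intrinsic bonus.

    beta is a hypothetical weighting coefficient chosen for illustration.
    """
    return extrinsic + beta * intrinsic


# A 5-step episode in which only the final step reaches the goal.
goal_flags = [False, False, False, False, True]
# Made-up intrinsic bonuses, e.g. for observations judged to be useful progress.
intrinsic_bonuses = [0.0, 1.0, 0.0, 1.0, 0.0]

extrinsic_only = [sparse_binary_reward(g) for g in goal_flags]
combined = [
    total_reward(sparse_binary_reward(g), b)
    for g, b in zip(goal_flags, intrinsic_bonuses)
]
```

With the binary reward alone, four of the five steps carry no learning signal. The combined reward is non-zero on three steps, which is the kind of denser feedback intrinsic rewards are meant to provide.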

Innovative Solutions with Large Language Models (LLMs)

Recent work uses Large Language Models (LLMs) to automate reward design from natural language task descriptions. Two main methods have emerged:

- Generating Reward Function Code: This method has proven effective for continuous control tasks, but it needs access to the environment source code and struggles with complex state representations.

- Generating Reward Values: Approaches like Motif rank observation captions using LLM preferences but require existing captioned datasets and involve a lengthy process.
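
As a rough illustration of the second method, pairwise preferences over captions can be turned into a scalar reward with a Bradley-Terry style model, where the probability that caption A is preferred over caption B is a sigmoid of the difference in their rewards. The sketch below assumes this loss for illustration only; it is not taken from Motif's code, and the function names are hypothetical.

```python
import math


def preference_probability(r_a: float, r_b: float) -> float:
    """P(A preferred over B) under a Bradley-Terry model: sigmoid(r_a - r_b)."""
    return 1.0 / (1.0 + math.exp(-(r_a - r_b)))


def preference_loss(r_a: float, r_b: float, label: float) -> float:
    """Cross-entropy between the model's preference and the LLM's label.

    label is 1.0 if the LLM preferred A, 0.0 if it preferred B,
    and 0.5 if it expressed no preference.
    """
    p = preference_probability(r_a, r_b)
    return -(label * math.log(p) + (1.0 - label) * math.log(1.0 - p))
```

Minimizing this loss over many labeled pairs pushes the reward of preferred captions above the reward of rejected ones. A reward model that agrees with the LLM (for example `r_a = 2.0`, `r_b = 0.0`, `label = 1.0`) incurs a lower loss than one that disagrees.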

Introducing ONI: A New Approach

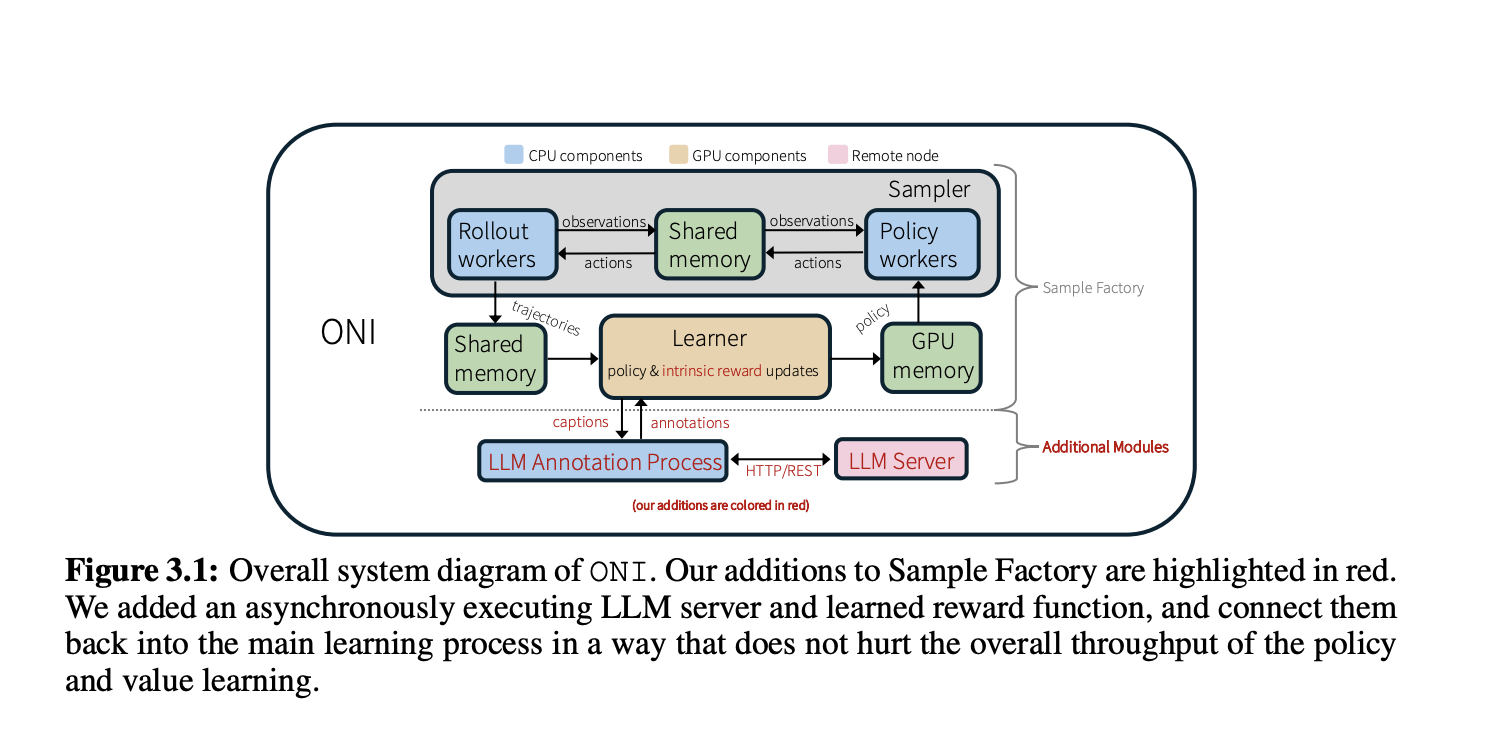

Researchers from Meta, the University of Texas at Austin, and UCLA have developed ONI, a distributed architecture that learns an RL policy and an intrinsic reward function simultaneously using LLM feedback. This system:

- Utilizes an asynchronous LLM server to annotate the agent’s experiences.

- Transforms these experiences into an intrinsic reward model.

- Explores several reward-modeling algorithms (classification, retrieval, and ranking variants) to improve learning from sparse rewards.

ONI is reported to perform strongly on challenging sparse-reward tasks without the need for external datasets.

Key Features of ONI

ONI runs on a Tesla A100-80GB GPU and 48 CPUs and achieves around 32,000 environment interactions per second. Its components include:

- An LLM server on a separate node.

- An asynchronous process for sending observation captions.

- A hash table to store captions and LLM annotations.

- Code that learns the reward model dynamically as new annotations arrive.
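
The components above can be sketched in a few lines of Python. This is a minimal single-machine illustration, not ONI's implementation: the class `AnnotationStore`, the function `fake_llm_annotate`, and its keyword rule are all hypothetical stand-ins, and a background thread plays the role of the separate LLM server node.

```python
import queue
import threading


def fake_llm_annotate(caption: str) -> int:
    """Stand-in for the LLM server: label a caption 1 (useful) or 0.

    The keyword rule is a made-up placeholder for a real LLM call.
    """
    return 1 if "find" in caption else 0


class AnnotationStore:
    """Hash table of caption -> label, filled in by a background worker."""

    def __init__(self) -> None:
        self._labels = {}            # caption -> LLM annotation
        self._seen = set()           # captions already queued
        self._lock = threading.Lock()
        self._pending = queue.Queue()

    def submit(self, caption: str) -> None:
        """Queue a caption for annotation without blocking the caller."""
        with self._lock:
            if caption in self._seen:
                return               # skip duplicates
            self._seen.add(caption)
        self._pending.put(caption)

    def lookup(self, caption: str):
        """Return the stored label, or None if not annotated yet."""
        with self._lock:
            return self._labels.get(caption)

    def worker(self) -> None:
        """Annotate queued captions until a None sentinel arrives."""
        while True:
            caption = self._pending.get()
            if caption is None:
                break
            label = fake_llm_annotate(caption)
            with self._lock:
                self._labels[caption] = label

    def close(self) -> None:
        """Ask the worker to stop after it drains the queue."""
        self._pending.put(None)


store = AnnotationStore()
thread = threading.Thread(target=store.worker, daemon=True)
thread.start()

for caption in ["You find a key.", "It is dark.", "You find a key."]:
    store.submit(caption)

store.close()
thread.join()
```

Because `submit` only enqueues, the RL loop never waits on the slow annotator; it reads whatever labels are already in the table. Here the duplicate caption is queued once, and a caption that was never submitted simply returns `None` from `lookup`.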

Performance Results

Experimental results show that ONI significantly improves performance on various tasks:

- ONI-classification is competitive with existing methods without needing pre-collected data.

- ONI-retrieval and ONI-ranking also demonstrate strong performance in different scenarios.

Conclusion: A Step Forward in AI

ONI marks a significant advancement in reinforcement learning. It facilitates the learning of intrinsic rewards and agent behaviors without relying on pre-collected datasets, laying the groundwork for more autonomous reward methods.
