Advancements in Neural Network Architectures

Improving Efficiency and Performance

The field of neural networks is evolving quickly. Researchers are finding new ways to make AI systems faster and more efficient. Traditional models use a lot of computing power for basic tasks, which makes them hard to scale for real-world applications.

Challenges with Current Models

Many existing models struggle with simple factual tasks such as question answering. Dense transformer models activate all of their parameters for every token, so their compute and memory costs rise as they grow. There is a pressing need for solutions that can store and retrieve knowledge without using too much memory or processing power.

Mixture-of-Experts (MoE) Models

MoE models address these problems by activating only a subset of their parameters for each input. This reduces the workload compared to dense models of the same size. However, MoE models often struggle with precise factual recall and can be complex to implement.
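To make the idea of using only part of the parameters concrete, here is a minimal sketch of top-k expert routing in plain Python. It is an illustration under stated assumptions, not the routing scheme of any particular model: the function name `moe_forward`, the linear router in `gate_weights`, and the toy "experts" that simply scale their input are all hypothetical.

```python
import math

def softmax(xs):
    # Numerically stable softmax over a short list of scores.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, gate_weights, experts, top_k=2):
    """Route vector x to the top_k highest-scoring experts (hypothetical sketch).

    gate_weights: one router vector per expert.
    experts: list of callables, each mapping a vector to a vector.
    """
    # Router score per expert: dot product of its gate vector with x.
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # Keep only the top_k experts; the others are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    weights = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for w, i in zip(weights, chosen):
        y = experts[i](x)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, chosen

def make_scaler(s):
    # Toy expert: multiplies every component by s.
    return lambda v: [s * vi for vi in v]

experts = [make_scaler(s) for s in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
out, chosen = moe_forward([2.0, 1.0], gate_weights, experts, top_k=2)
print(chosen, out)
```

In this toy setup only two of the four experts run for the input, which is the source of the compute savings; a real MoE layer would use learned feed-forward networks as experts and typically adds load-balancing terms that are omitted here.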

Innovative Memory Layers by Meta Researchers

Researchers from Meta have enhanced memory layers in AI architectures. These layers help store and retrieve information efficiently, showing significant improvements in memory capacity. By integrating memory layers into transformer models, they achieved a remarkable increase in performance, especially for factual tasks.

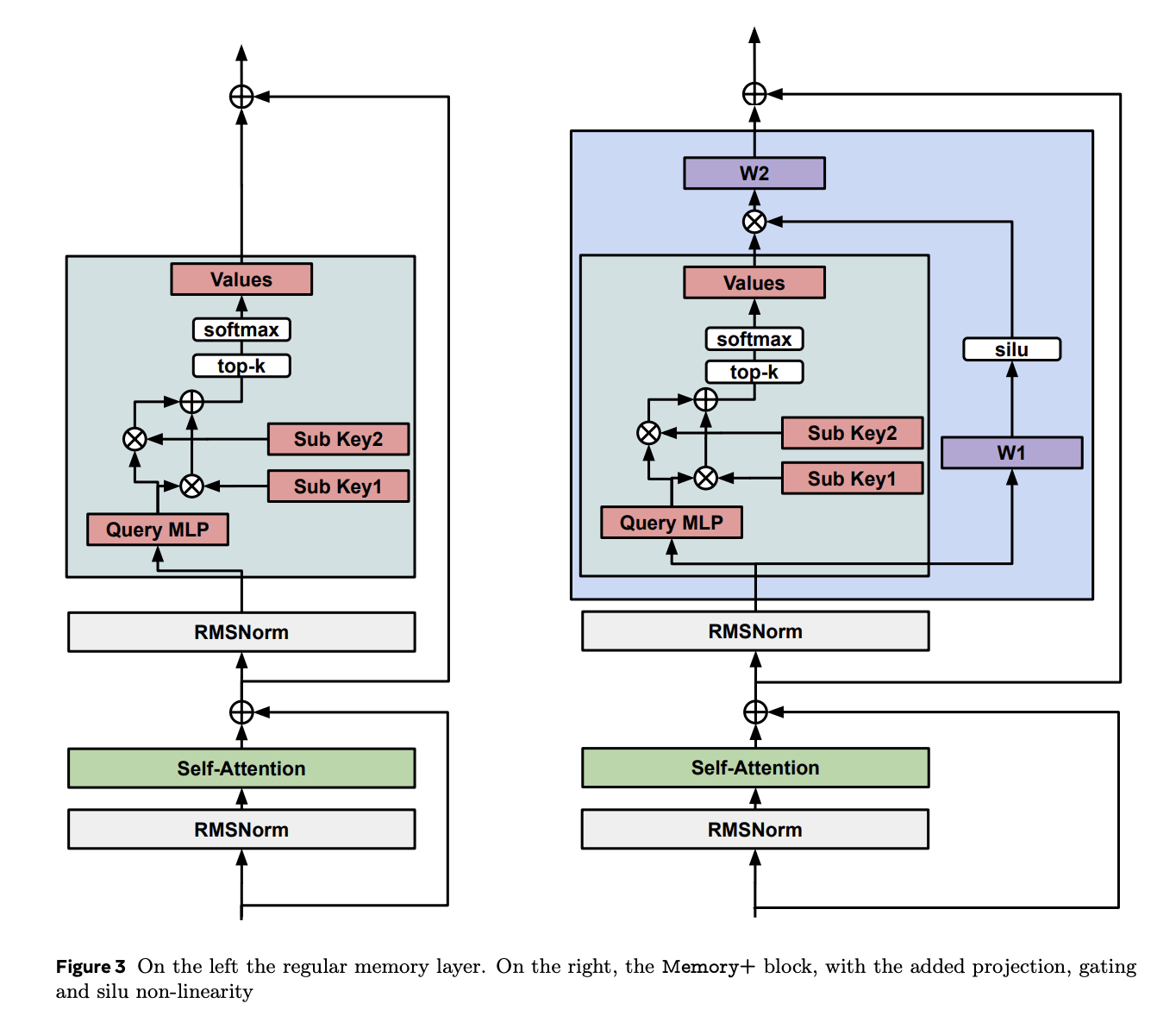

Key Features of the Memory Layer Design

- Trainable Key-Value Embeddings: These allow for improved efficiency in storing information.

- Sparse Activation Patterns: This technique reduces the computational load.

- Product-Key Lookup: This method splits each key into two smaller sub-keys, so the search runs over two small sets of sub-keys instead of the full set of keys.

- Parallel Memory Operations: This enables handling millions of keys efficiently.
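The features above can be sketched together in a few lines of plain Python. This is a minimal illustration of a product-key lookup, assuming two sets of `n` sub-keys that jointly address `n * n` value slots; the function name `product_key_lookup`, the argument names, and the toy data are hypothetical and are not taken from Meta's implementation, which runs in parallel on GPUs.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def top_k_indices(scores, k):
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]

def product_key_lookup(query, sub_keys_1, sub_keys_2, values, k=2):
    """Sparse key-value lookup over n*n slots using 2*n score computations."""
    half = len(query) // 2
    q1, q2 = query[:half], query[half:]
    n = len(sub_keys_2)
    # Score each half of the query against its own small set of sub-keys.
    s1 = [dot(q1, key) for key in sub_keys_1]
    s2 = [dot(q2, key) for key in sub_keys_2]
    best1 = top_k_indices(s1, k)
    best2 = top_k_indices(s2, k)
    # The score of full key (i, j) is s1[i] + s2[j], so the overall top-k
    # must lie among the k*k combinations of the per-half top-k.
    candidates = [(s1[i] + s2[j], i * n + j) for i in best1 for j in best2]
    candidates.sort(reverse=True)
    top = candidates[:k]
    # Softmax over the selected scores only (sparse activation).
    m = max(score for score, _ in top)
    exps = [math.exp(score - m) for score, _ in top]
    total = sum(exps)
    out = [0.0] * len(values[0])
    for e, (_, slot) in zip(exps, top):
        out = [o + (e / total) * v for o, v in zip(out, values[slot])]
    return out, [slot for _, slot in top]

sub_keys_1 = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
sub_keys_2 = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[float(slot)] for slot in range(9)]  # one toy value per slot
out, slots = product_key_lookup([2.0, 1.0, 0.0, 3.0], sub_keys_1, sub_keys_2, values, k=2)
print(slots, out)
```

With 3 sub-keys per half, the lookup scores 6 sub-keys rather than all 9 full keys; the gap widens quickly, since a memory of a million slots would need only about two thousand sub-key comparisons. In a trained layer the sub-keys and values would be learned embeddings rather than fixed lists.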

Performance Outcomes

A model with memory layers achieved similar accuracy to larger dense models with much lower computational requirements. On question-answering tasks, memory-augmented models improved accuracy by more than 100% compared with dense baselines, which means accuracy more than doubled. They also learned faster and needed fewer training tokens, making them more efficient overall.

Key Takeaways

- Memory layers significantly improve performance in factual tasks.

- The approach scales well, reaching up to 128 billion memory parameters with consistent results.

- Custom CUDA kernels optimize GPU use for effective memory operations.

- Memory-augmented models learn efficiently with fewer resources.

- A single memory pool shared across memory layers avoids adding parameters for each additional layer.

Conclusion

Meta’s research highlights the potential of memory layers in AI models, providing solutions to the challenges in neural network architectures. These advancements offer a promising direction for balancing computational demands with improved knowledge storage capabilities.

