Understanding Large Language Models (LLMs)

Large Language Models (LLMs) power many applications, including chatbots, content generation, and natural language understanding. They excel at learning complex language patterns from large datasets. However, training these models is costly and time-consuming, requiring advanced hardware and significant computational resources.

Challenges in LLM Development

Current training methods are inefficient because they treat all data equally. They neither prioritize the data that would help a model learn fastest nor use existing models to guide training. As a result, compute is wasted processing simple and complex examples without distinction. Additionally, self-supervised learning typically overlooks smaller, efficient models that could guide larger models in their training.

Introducing Knowledge Distillation (KD)

Knowledge Distillation (KD) usually involves transferring knowledge from larger models to smaller ones. However, the reverse direction, using smaller models to help train larger ones, has received little attention. This presents a key opportunity, as smaller models can identify both easy and challenging data points, which can improve training.
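To make the idea of distillation concrete, here is a minimal sketch of a common form of distillation loss for a single prediction: a weighted mix of the usual loss on the true label and a loss against the teacher's soft labels. The function names, the `alpha` weight, and the `temperature` parameter are illustrative assumptions, not details taken from the SALT work.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; higher temperature gives a flatter result."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, target_index,
                      alpha=0.5, temperature=1.0):
    """Mix hard-label cross-entropy with cross-entropy against teacher soft labels.

    alpha and temperature are hypothetical knobs chosen for illustration.
    """
    student_probs = softmax(student_logits)
    hard_loss = -math.log(student_probs[target_index])

    teacher_soft = softmax(teacher_logits, temperature)
    student_soft = softmax(student_logits, temperature)
    soft_loss = -sum(t * math.log(s) for t, s in zip(teacher_soft, student_soft))

    return (1 - alpha) * hard_loss + alpha * soft_loss

# Toy example over a three-token vocabulary; the true next token is index 0.
loss = distillation_loss([2.0, 0.5, -1.0], [1.5, 1.0, -2.0], target_index=0)
print(f"combined loss: {loss:.4f}")
```

In the usual setup the teacher is the larger model. In the reverse direction discussed here, the teacher logits would come from the smaller model instead, with the rest of the computation unchanged.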

The SALT Approach

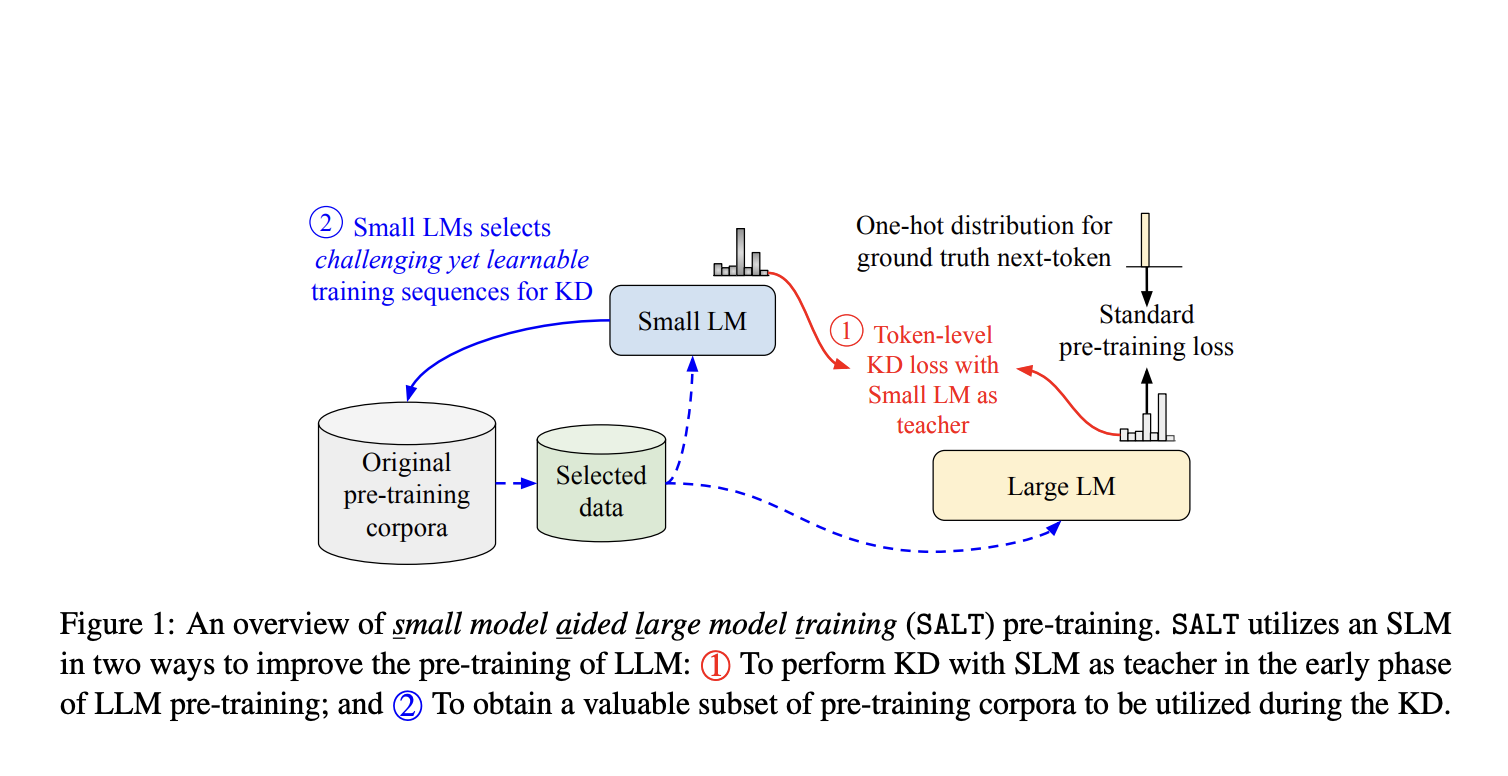

Researchers from Google introduced an innovative method called Small model Aided Large model Training (SALT). This technique uses smaller language models (SLMs) to enhance LLM training efficiency. SALT accomplishes this by:

- Providing soft labels (the small model’s predicted probability distributions) during early training for better guidance.

- Selecting valuable data subsets for learning.
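The data-selection idea in the list above can be sketched as follows. This is only one plausible reading of "valuable": keep examples the small model finds hard (high loss) but not hopeless (the correct token still ranks near the top of its predictions). The `ScoredExample` fields, `keep_fraction`, and `max_rank` are hypothetical names and thresholds for illustration, not the published selection rule.

```python
from dataclasses import dataclass

@dataclass
class ScoredExample:
    text: str
    slm_loss: float   # small model's average loss on the example (hypothetical field)
    target_rank: int  # worst rank the small model gave a correct token; 1 = top guess

def select_subset(examples, keep_fraction=0.5, max_rank=5):
    """Keep the hardest examples among those the small model can still nearly predict."""
    # "Learnable": the correct token stays within the small model's top max_rank guesses.
    learnable = [ex for ex in examples if ex.target_rank <= max_rank]
    # "Challenging": prefer the examples with the highest small-model loss.
    learnable.sort(key=lambda ex: ex.slm_loss, reverse=True)
    keep = int(len(learnable) * keep_fraction)
    return learnable[:keep]

pool = [
    ScoredExample("easy", 0.2, 1),
    ScoredExample("medium", 1.1, 2),
    ScoredExample("hard but learnable", 2.4, 4),
    ScoredExample("noise", 6.0, 900),
]
selected = select_subset(pool, keep_fraction=0.67, max_rank=5)
print([ex.text for ex in selected])
```

With these toy scores, the noisy example is filtered out despite having the highest loss, because the small model's predictions give no sign that it is learnable.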

SALT’s Two-Phase Methodology

SALT operates through a two-phase process:

- Phase One: SLMs act as teachers, sharing insights with LLMs and directing them to focus on challenging yet learnable data.

- Phase Two: The LLM independently improves its understanding of complex data.
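The two phases above amount to a schedule on the training loss: the small model's soft labels contribute early on, then drop out entirely. A minimal sketch follows, assuming a hard switch at a fixed step count; `kd_steps` and `alpha` are hypothetical parameters, and the actual transition used by the researchers may differ.

```python
def distillation_weight(step, kd_steps, alpha=0.5):
    """Weight on the small-model (soft-label) loss at a given training step."""
    # Phase one: the small model guides training. Phase two: the weight drops to zero.
    return alpha if step < kd_steps else 0.0

def training_loss(step, kd_steps, hard_loss, soft_loss, alpha=0.5):
    """Blend the standard loss with the soft-label loss according to the current phase."""
    w = distillation_weight(step, kd_steps, alpha)
    return (1.0 - w) * hard_loss + w * soft_loss

# Toy run: the first 100 steps use the small model as teacher, later steps do not.
for step in (0, 99, 100, 500):
    print(step, training_loss(step, kd_steps=100, hard_loss=2.0, soft_loss=1.0))
```

A hard switch keeps the sketch simple. A gradual decay of the weight would be an equally reasonable variant.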

Results and Benefits

In tests, a 2.8-billion-parameter LLM trained with SALT outperformed models trained with traditional methods. Key highlights include:

- It needed only 70% of the training steps, resulting in a 28% reduction in training time.

- Improved performance in reading comprehension, reasoning, and language tasks.

- Higher accuracy in next-token predictions and lower log-perplexity scores, indicating better model quality.

Key Takeaways

- SALT cuts LLM training time by almost 28%.

- It consistently yields better-performing models across various tasks.

- Smaller models help focus on crucial data points, speeding up learning without sacrificing quality.

- This method is especially beneficial for organizations with limited computing resources.

Conclusion

SALT turns smaller models into effective training partners for larger ones. By balancing efficiency and effectiveness, it helps overcome resource challenges, boost model performance, and broaden access to advanced AI technologies.
