Advancements in Multimodal Large Language Models (MLLMs)

Understanding MLLMs

Multimodal large language models (MLLMs) are a rapidly evolving technology that allows machines to understand text and images together. This capability is transforming fields like image analysis, visual question answering, and multimodal reasoning, enhancing AI’s ability to interact with the world more effectively.

Challenges Faced

However, MLLMs face challenges, primarily because they are trained with natural language supervision alone. This can leave the model with low-quality visual representations. While increasing dataset sizes has offered some improvement, a more focused approach is needed to enhance visual understanding without compromising efficiency.

Current Training Techniques

Training methods for MLLMs typically use a visual encoder to extract image features, which are then passed to the language model. Some techniques use multiple encoders or cross-attention, but these demand more data and computational power, which makes them less scalable.
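
As a rough illustration of the single-encoder pipeline described above, the sketch below projects image patch features into the language model's embedding width and places them in front of the text embeddings. The function names (`project`, `build_input_sequence`), the dimensions, and the toy weights are all assumptions made for illustration; they are not taken from any specific model.

```python
from typing import List

Vector = List[float]


def project(features: List[Vector], weight: List[Vector]) -> List[Vector]:
    """Linearly map each patch feature (width d_vis) to the LLM width (d_llm).

    `weight` has shape d_vis x d_llm and stands in for a learned projector
    (hypothetical; real models often use a small MLP here).
    """
    d_llm = len(weight[0])
    return [
        [sum(f[i] * weight[i][j] for i in range(len(f))) for j in range(d_llm)]
        for f in features
    ]


def build_input_sequence(
    visual_tokens: List[Vector], text_embeddings: List[Vector]
) -> List[Vector]:
    """Place the visual tokens in front of the text embeddings."""
    return visual_tokens + text_embeddings


# Toy inputs: 2 image patches with d_vis = 2, projected to d_llm = 3.
patch_features = [[1.0, 0.0], [0.0, 2.0]]
projector = [[1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]
text_embeddings = [[0.5, 0.5, 0.5]]  # one text token

visual_tokens = project(patch_features, projector)
sequence = build_input_sequence(visual_tokens, text_embeddings)
print(len(sequence), "tokens of width", len(sequence[0]))
```

A multi-encoder design would repeat the projection step once per encoder, which is one reason such systems tend to cost more to train and run.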

Introducing OLA-VLM

What is OLA-VLM?

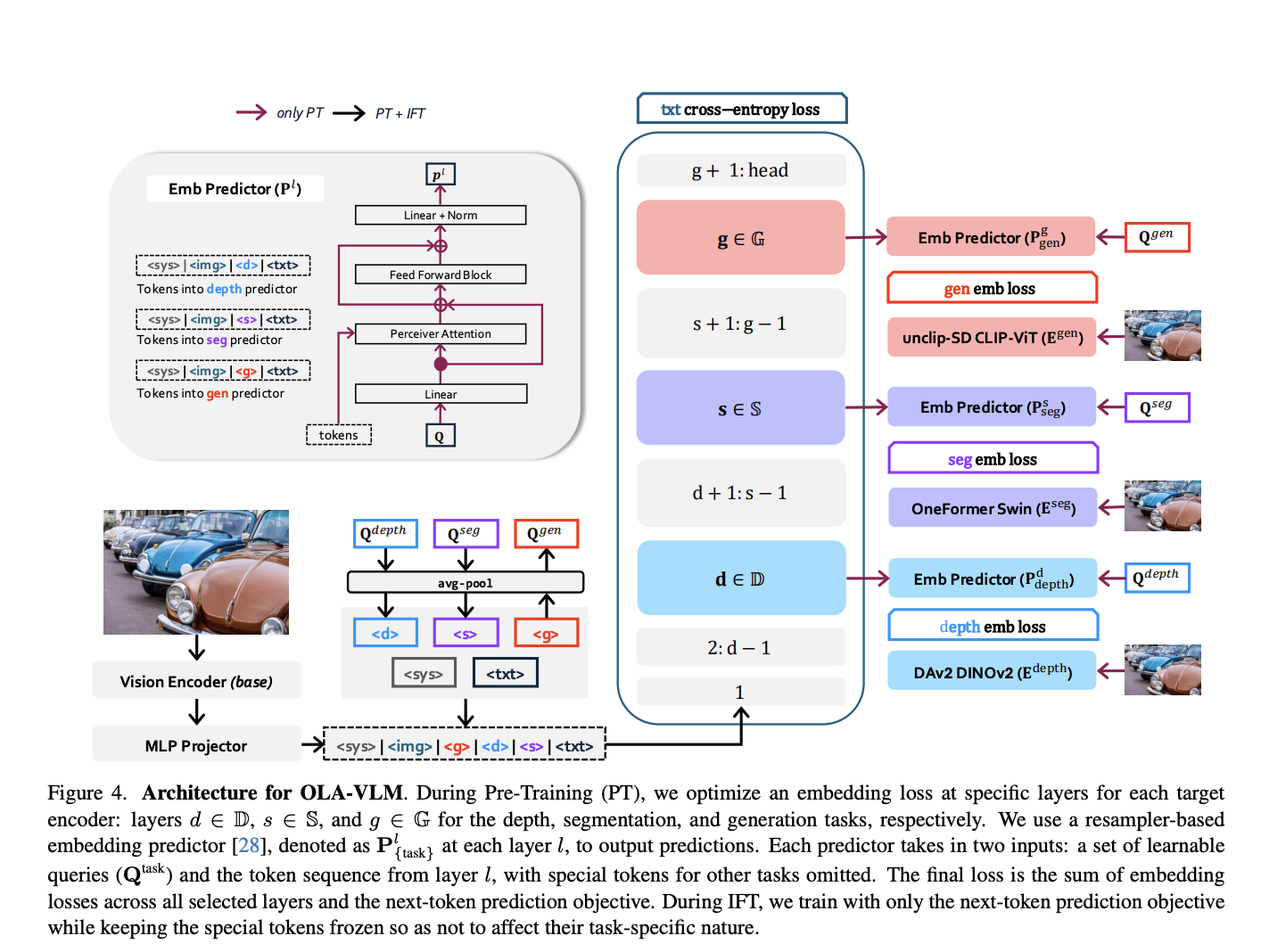

Researchers from SHI Labs at Georgia Tech and Microsoft Research have developed a new approach called OLA-VLM. This innovative method improves MLLMs by optimizing the integration of visual information without increasing the complexity of visual encoders.

Key Features of OLA-VLM

- Embedding Optimization: This technique enhances the alignment of visual and textual data during pretraining.

- Efficient Integration: Visual features are incorporated into the model without additional computational costs during inference.

- Special Tokens: Task-specific tokens are added to help the model process visual information effectively.
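
One way to picture embedding optimization is as an auxiliary term added to the usual next-token loss during pretraining, pulling an intermediate language-model representation toward a target visual feature. The sketch below is only an assumption about the general shape of such an objective: the cosine-distance form, the `aux_weight` parameter, and the function names are hypothetical and are not taken from the OLA-VLM paper or code.

```python
import math
from typing import Sequence


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Return 1 - cosine similarity; assumes both vectors are non-zero."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def total_loss(
    next_token_loss: float,
    llm_hidden: Sequence[float],
    target_visual: Sequence[float],
    aux_weight: float = 0.5,  # hypothetical weighting, not from the paper
) -> float:
    """Combine the language-modeling loss with the auxiliary alignment term."""
    return next_token_loss + aux_weight * cosine_distance(llm_hidden, target_visual)


# Same direction as the target: the auxiliary term vanishes.
aligned = total_loss(2.0, [1.0, 0.0], [2.0, 0.0])
# Orthogonal to the target: the auxiliary term contributes aux_weight * 1.0.
misaligned = total_loss(2.0, [1.0, 0.0], [0.0, 3.0])
print(aligned, misaligned)
```

In a setup like this, the auxiliary term would be evaluated only during training, which is consistent with the claim that inference carries no extra computational cost.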

Performance Results

Proven Success

OLA-VLM has shown impressive results on various benchmarks:

- In depth estimation tasks, accuracy improved by up to 8.7% compared to existing models, reaching 77.8%.

- For segmentation tasks, it achieved a mean Intersection over Union (mIoU) score of 45.4%, up from the baseline of 39.3%.

- Overall, OLA-VLM improved performance in both 2D and 3D vision tasks by an average of 2.5%.
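
For readers unfamiliar with the segmentation metric above, mean Intersection over Union averages the per-class overlap between predicted and ground-truth labels. The sketch below computes it on flat label lists; `mean_iou` and the toy labels are illustrative assumptions, not the evaluation code behind the reported numbers.

```python
from typing import Sequence


def mean_iou(pred: Sequence[int], target: Sequence[int], num_classes: int) -> float:
    """Average IoU over the classes that appear in pred or target."""
    ious = []
    for c in range(num_classes):
        intersection = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union > 0:  # skip classes absent from both prediction and target
            ious.append(intersection / union)
    return sum(ious) / len(ious) if ious else 0.0


# Toy example: four pixels, two classes.
pred = [0, 0, 1, 1]
target = [0, 1, 1, 1]
score = mean_iou(pred, target, num_classes=2)
print(f"mIoU = {score:.3f}")
```

On this scale, the reported move from 39.3% to 45.4% is a gain of 6.1 percentage points.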

Efficiency Over Complexity

This model effectively uses a single visual encoder, making it much more efficient than systems requiring multiple encoders.

Impact on Future AI Development

A New Standard

OLA-VLM sets a new benchmark for integrating visual data into MLLMs. By focusing on embedding optimization, it improves the quality of visual representations while using fewer resources than traditional methods.

Conclusion

This research from SHI Labs and Microsoft Research marks a significant leap forward in multimodal AI, illustrating how focused optimization can enhance both performance and efficiency.

Get Involved

For more details, check out the Paper and GitHub Page.
