Transforming Text to Images with EvalGIM

Text-to-image generative models produce images from natural-language prompts and are already used in content creation, design automation, and accessibility. Ensuring their reliability remains challenging, because an evaluation has to cover image quality, diversity, and how consistently the images match the text prompts. Current evaluation methods are often limited in scope and poorly integrated, which makes it hard to get a complete picture of model performance.

Challenges in Evaluation

Existing evaluation tools are fragmented. Metrics such as Fréchet Inception Distance (FID), which compares the feature distributions of real and generated images, and CLIPScore, which measures how well an image matches its prompt, are widely used but usually live in separate tools that don’t work together. This leads to incomplete assessments. Many tools also struggle to adapt to new datasets or metrics, which makes thorough evaluations hard to perform.
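To make the two metrics concrete, here is a minimal sketch of what each one computes. It is not EvalGIM code: the function names are hypothetical, the inputs are assumed to be precomputed feature vectors, and the Fréchet distance is simplified to diagonal covariances (real FID uses Inception features with full covariance matrices). The 2.5 rescaling factor follows the original CLIPScore definition.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def clip_score(image_emb, text_emb, w=2.5):
    """CLIPScore-style alignment: rescaled cosine similarity, clipped at zero.

    Assumes image_emb and text_emb were already produced by a CLIP-like encoder.
    """
    return w * max(cosine_similarity(image_emb, text_emb), 0.0)

def frechet_distance_diag(mu1, var1, mu2, var2):
    """Fréchet distance between two Gaussians with DIAGONAL covariances.

    Simplification for illustration: with diagonal covariances the trace term
    Tr(S1 + S2 - 2 (S1 S2)^(1/2)) reduces to sum((sqrt(v1) - sqrt(v2))^2).
    """
    mean_term = sum((a - b) ** 2 for a, b in zip(mu1, mu2))
    cov_term = sum((math.sqrt(a) - math.sqrt(b)) ** 2 for a, b in zip(var1, var2))
    return mean_term + cov_term

# Identical feature statistics give a distance of zero.
print(frechet_distance_diag([0.0, 0.0], [1.0, 1.0], [0.0, 0.0], [1.0, 1.0]))
# Perfectly aligned embeddings give the maximum score, w.
print(clip_score([1.0, 0.0], [1.0, 0.0]))
```

Lower is better for the Fréchet distance and higher is better for the alignment score, which is one reason the two numbers are hard to read side by side without a shared reporting layer.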

Introducing EvalGIM

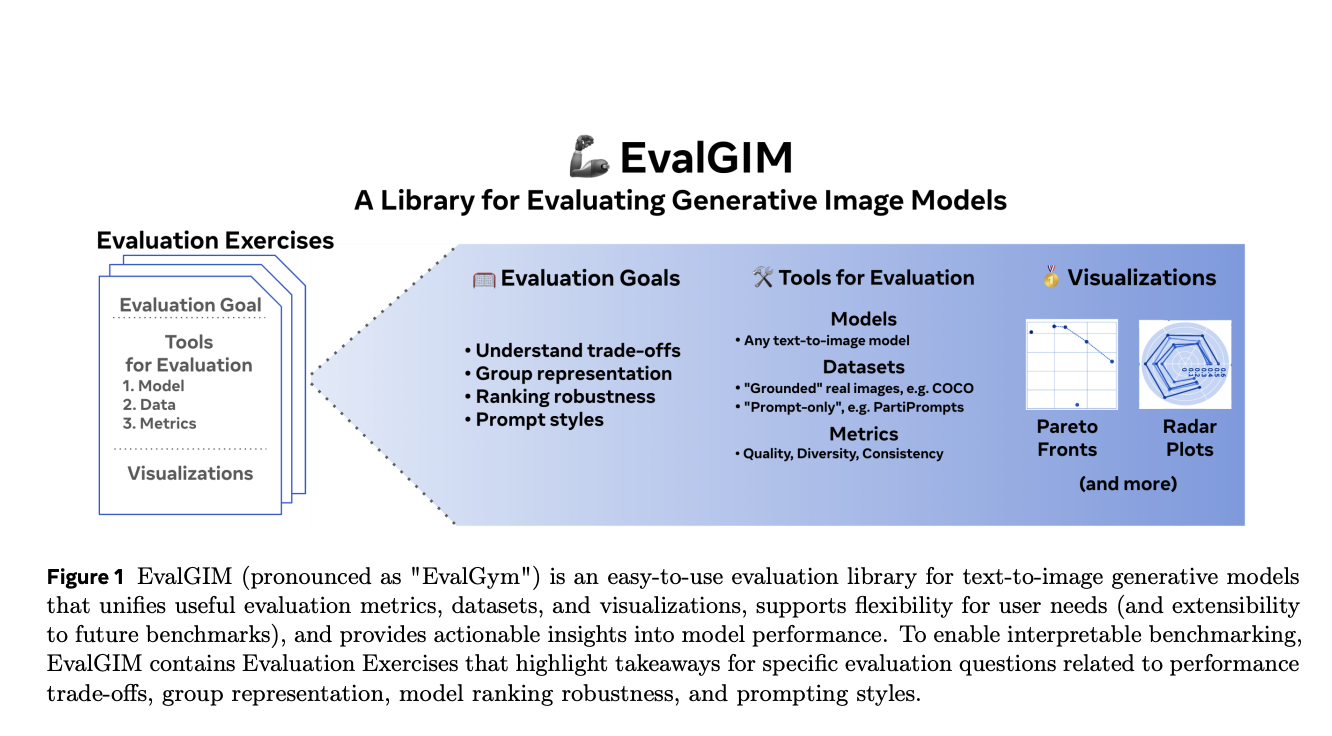

Researchers from several institutions have developed EvalGIM, a library designed to improve the evaluation of text-to-image models. EvalGIM brings metrics, datasets, and visualizations together in one place, so that an evaluation can be run and interpreted within a single framework. Its core components are:

- Evaluation Exercises: Structured analyses that each target a specific question about model performance, such as the trade-off between quality and diversity.

- Support for Diverse Datasets: EvalGIM works with real-image datasets like MS-COCO and prompt-only datasets to assess performance across different scenarios.

- Modular Design: Users can easily add new evaluation components, keeping the library relevant as the field evolves.
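One common way to achieve this kind of modularity is a registry that maps metric names to functions. The sketch below illustrates the pattern only; `METRICS`, `register_metric`, and `evaluate` are hypothetical names and are not taken from EvalGIM's actual API.

```python
from typing import Callable, Dict, List

# Hypothetical registry: metric name -> function over a list of per-image scores.
METRICS: Dict[str, Callable[[List[float]], float]] = {}

def register_metric(name: str):
    """Decorator that adds a metric function to the registry under `name`."""
    def decorator(fn):
        if name in METRICS:
            raise ValueError(f"metric {name!r} is already registered")
        METRICS[name] = fn
        return fn
    return decorator

@register_metric("mean")
def mean_score(scores: List[float]) -> float:
    return sum(scores) / len(scores)

@register_metric("worst_case")
def worst_case_score(scores: List[float]) -> float:
    return min(scores)

def evaluate(scores: List[float], metric_names: List[str]) -> Dict[str, float]:
    """Run every requested metric and collect the results in one report."""
    return {name: METRICS[name](scores) for name in metric_names}

report = evaluate([0.2, 0.4, 0.6], ["mean", "worst_case"])
print(report)
```

With this design, adding a new metric means writing one decorated function; the evaluation loop itself does not change.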

Key Features of EvalGIM

Beyond the features listed above, EvalGIM also supports:

- Distributed Evaluations: This allows for faster analysis across multiple computing resources.

- Hyperparameter Sweeps: Users can explore how different settings affect model performance.

- Compatibility: Integrates with popular tools such as Hugging Face diffusers for benchmarking models.
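The sweep and distribution ideas can be sketched in a few lines. This is an illustration under stated assumptions rather than EvalGIM's implementation: `build_sweep` and `shard` are hypothetical helpers, and the grid keys simply borrow the names of two common diffusers pipeline arguments (`guidance_scale`, `num_inference_steps`).

```python
import itertools

def build_sweep(grid):
    """Expand a dict of parameter lists into one config dict per combination."""
    keys = sorted(grid)
    combos = itertools.product(*(grid[k] for k in keys))
    return [dict(zip(keys, values)) for values in combos]

def shard(items, rank, world_size):
    """Round-robin split: worker `rank` takes every `world_size`-th item."""
    return items[rank::world_size]

# Assumed sweep values, chosen only for illustration.
grid = {"guidance_scale": [2.0, 7.5], "num_inference_steps": [25, 50]}
configs = build_sweep(grid)

# Two hypothetical workers each evaluate a disjoint half of the sweep.
for rank in range(2):
    print(rank, shard(configs, rank, world_size=2))
```

Round-robin sharding keeps the split deterministic, so each worker can derive its own share from its rank without any coordination.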

Insights from Evaluation Exercises

EvalGIM’s Evaluation Exercises provide valuable insights, such as:

- Text-image consistency, meaning how well generated images match their prompts, tends to plateau after around 450,000 training iterations.

- Model performance varies by geographic region: improvements have been larger for regions such as Southeast Asia and Europe than for Africa.

- Using a mix of original and recaptioned training data enhances model performance across different datasets.
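A disparity analysis like the geographic one above comes down to grouping per-image scores and comparing group averages. The sketch below shows that step in isolation. The function names are hypothetical, and the region labels and scores are toy placeholders, not results from the EvalGIM paper.

```python
from collections import defaultdict

def mean_by_group(records):
    """Average score per group from (group, score) pairs."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for group, score in records:
        sums[group] += score
        counts[group] += 1
    return {group: sums[group] / counts[group] for group in sums}

def worst_group_gap(group_means):
    """Return (best group, worst group, difference between their means)."""
    best = max(group_means, key=group_means.get)
    worst = min(group_means, key=group_means.get)
    return best, worst, group_means[best] - group_means[worst]

# Toy placeholder data: anonymous regions with made-up scores.
records = [("region_a", 0.8), ("region_a", 0.6), ("region_b", 0.5)]
means = mean_by_group(records)
print(means)
print(worst_group_gap(means))
```

Reporting the gap alongside the overall average helps keep a strong aggregate score from hiding a group on which the model performs poorly.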

Conclusion

EvalGIM addresses the main limitations of traditional evaluation tools for text-to-image generative models. It offers a unified approach to assessment and surfaces insights about performance and disparities that isolated metrics can miss. Because its design is modular, it can absorb new metrics and datasets as research needs evolve, supporting the development of more inclusive and robust AI systems.

