Understanding Neural Networks and Their Training Dynamics

Neural networks are central to fields such as computer vision and natural language processing, where they model and predict complex patterns. Their performance depends on training: the network’s parameters are adjusted iteratively to reduce a loss function, typically with gradient descent or one of its variants.
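To make the idea of gradient descent concrete, here is a minimal sketch on a linear model with a mean squared error loss. The function name, learning rate, step count, and synthetic data are all illustrative assumptions and are not taken from the study discussed in this article.

```python
import numpy as np

def gradient_descent(X, y, theta0, lr=0.1, steps=500):
    """Minimize the mean squared error of a linear model with plain gradient descent."""
    theta = np.array(theta0, dtype=float)
    n = len(y)
    for _ in range(steps):
        residual = X @ theta - y      # prediction error on each example
        grad = X.T @ residual / n     # gradient of 0.5 * mean(residual ** 2)
        theta -= lr * grad            # step against the gradient
    return theta

# Synthetic, noise-free data generated from known parameters (illustrative only).
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
true_theta = np.array([1.0, -2.0, 0.5])
y = X @ true_theta

theta = gradient_descent(X, y, theta0=np.zeros(3))
```

Because the data are noise-free and there are more examples than parameters, the loop should recover `true_theta` up to numerical tolerance. Real networks have non-convex losses, so this only illustrates the update rule itself.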

Challenges in Neural Network Training

Despite advancements, we still have questions about how initial parameter settings affect the final trained model and how input data plays a role. Researchers want to know if certain initializations lead to better optimization paths or if other factors, like architecture and data distribution, are more important. Understanding this can help us create more efficient training algorithms and improve the interpretability of neural networks.

Insights from Previous Studies

Earlier research indicates that parameter updates during training are often confined to a small, low-dimensional part of parameter space, and that most parameters stay close to their initial values. How the initial settings relate to the final trained parameters, however, is not fully understood.

A New Framework by EleutherAI

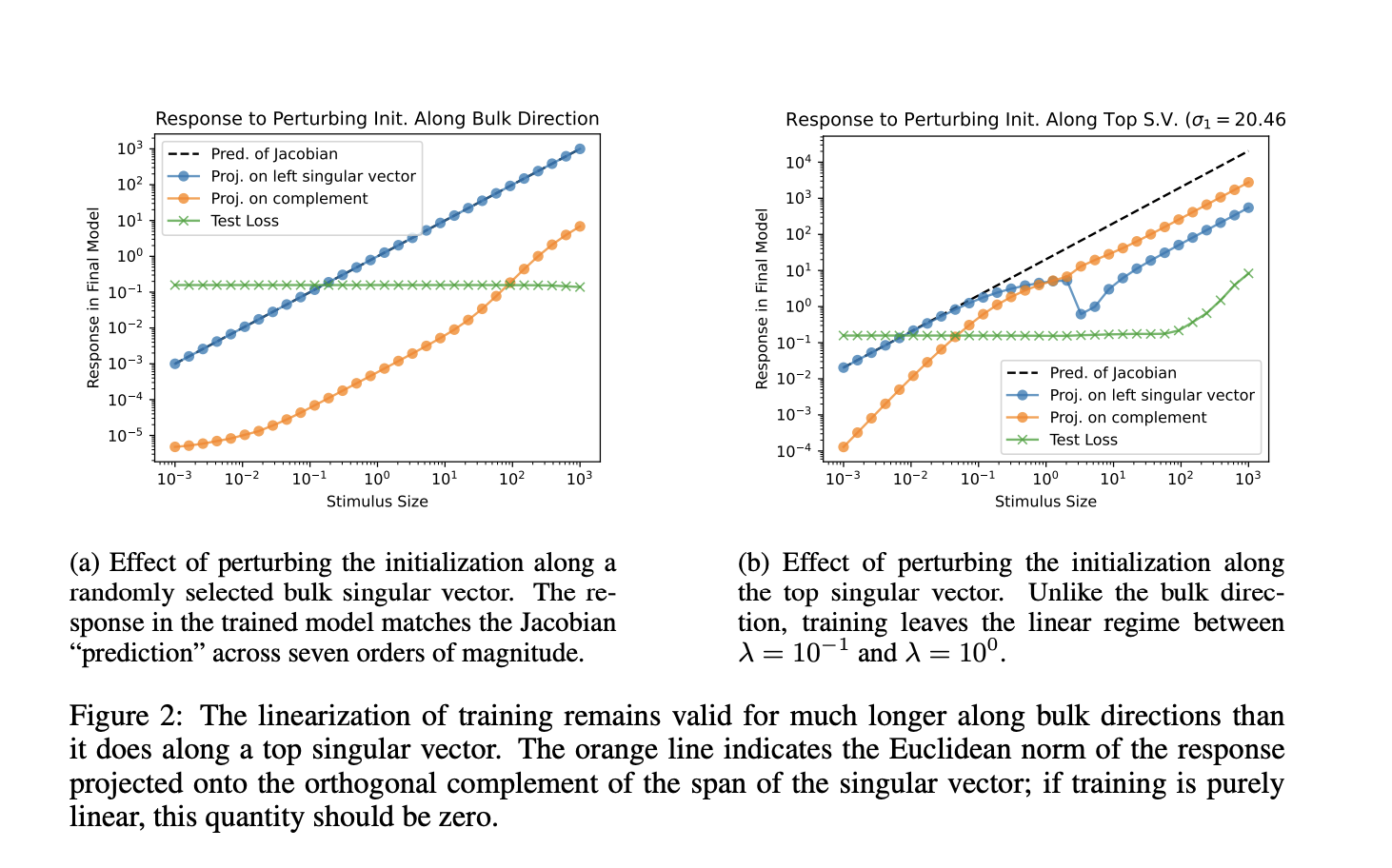

Researchers from EleutherAI have developed a method for analyzing neural network training through the training Jacobian: the matrix of derivatives of the trained parameters with respect to the initial parameters. It shows how a small change to the initialization carries through to the final state of the network.
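As a rough illustration of what a Jacobian of training looks like, the sketch below treats a whole training run as a function from initial parameters to final parameters and estimates its Jacobian with central finite differences. The toy linear model, the helper names `train` and `training_jacobian`, and all hyperparameters are assumptions made for this example. This is not the researchers' code, and a real network would call for automatic differentiation rather than finite differences.

```python
import numpy as np

def train(theta0, X, y, lr=0.1, steps=200):
    """Toy training run: gradient descent on mean squared error for a linear model."""
    theta = theta0.copy()
    for _ in range(steps):
        theta -= lr * X.T @ (X @ theta - y) / len(y)
    return theta

def training_jacobian(theta0, X, y, eps=1e-5):
    """Central finite-difference estimate of d(theta_final) / d(theta_initial)."""
    d = theta0.size
    J = np.zeros((d, d))
    for j in range(d):
        step = np.zeros(d)
        step[j] = eps
        # Column j: how the final parameters respond to nudging initial parameter j.
        J[:, j] = (train(theta0 + step, X, y) - train(theta0 - step, X, y)) / (2 * eps)
    return J

rng = np.random.default_rng(0)
X = rng.normal(size=(3, 6))   # 3 examples, 6 parameters: under-determined on purpose
y = rng.normal(size=3)
theta0 = rng.normal(size=6)

J = training_jacobian(theta0, X, y)
singular_values = np.linalg.svd(J, compute_uv=False)  # sorted in descending order
```

In this toy setting the three directions orthogonal to the data are never touched by the gradient, so their singular values equal one, while the remaining three are shrunk below one. A convex linear model like this one cannot produce singular values above one. Those would need a non-convex loss.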

Key Components of the Framework

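Applying singular value decomposition to this Jacobian divides parameter space into three subspaces, distinguished by their singular values:

- Chaotic Subspace: Singular values greater than one. Changes to the initial parameters are amplified by training.

- Bulk Subspace: Singular values very close to one. Changes to the initial parameters pass through training almost unchanged.

- Stable Subspace: Singular values less than one. Changes to the initial parameters are dampened, which supports smoother training.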
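The three-way split can be sketched as a grouping of singular vectors by singular value. The function name `split_subspaces` and the tolerance `tol` are hypothetical choices for this example. The article gives no numerical threshold for what counts as "close to one."

```python
import numpy as np

def split_subspaces(J, tol=0.05):
    """Group the right singular vectors of J by the size of their singular values.

    tol is an illustrative threshold, not a value taken from the study.
    """
    _, s, Vt = np.linalg.svd(J)
    chaotic = s > 1 + tol         # perturbations amplified
    stable = s < 1 - tol          # perturbations dampened
    bulk = ~(chaotic | stable)    # perturbations passed through nearly unchanged
    # Rows of Vt are directions in the space of initial parameters.
    return {"chaotic": Vt[chaotic], "bulk": Vt[bulk], "stable": Vt[stable]}

# A hand-built Jacobian with known singular values 3, 1, 1, and 0.2.
J = np.diag([3.0, 1.0, 1.0, 0.2])
parts = split_subspaces(J)
sizes = {name: basis.shape[0] for name, basis in parts.items()}
```

For this hand-built matrix, `sizes` reports one chaotic direction, two bulk directions, and one stable direction.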

Experimental Findings

Experiments reveal that:

- The chaotic subspace plays a key role in shaping the optimization dynamics.

- The stable subspace helps ensure stable convergence during training.

- The bulk subspace, although it is large, has little effect on in-distribution predictions but significantly affects out-of-distribution predictions.

- Training restricted to the bulk subspace is ineffective, while training in chaotic or stable subspaces yields strong results.
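A linear toy model gives some intuition for why a direction can be invisible on the training distribution yet matter elsewhere. This is an analogy under simplifying assumptions, not a reproduction of the study's experiments. The data, the perturbation size, and the choice of out-of-distribution input are all made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
X_train = rng.normal(size=(3, 6))   # 3 training inputs, 6 parameters
theta = rng.normal(size=6)          # stands in for trained parameters

# The last rows of Vt span the directions orthogonal to every training input.
# In this toy model, those directions play the role of the bulk.
_, _, Vt = np.linalg.svd(X_train)
bulk_direction = Vt[-1]

perturbed = theta + 2.0 * bulk_direction

# Largest change in any prediction on the training inputs.
in_dist_shift = np.abs(X_train @ perturbed - X_train @ theta).max()

# An input that points along the unseen direction, i.e. far from the training data.
x_ood = bulk_direction
ood_shift = abs(x_ood @ perturbed - x_ood @ theta)
```

Here `in_dist_shift` is zero up to floating-point error, while `ood_shift` equals the size of the perturbation. In the same toy model the gradient always lies in the span of the training inputs, so an update restricted to these orthogonal directions cannot reduce the training loss at all.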

Key Takeaways

- The chaotic subspace is vital for shaping optimization dynamics.

- The stable subspace contributes to smooth training convergence.

- The bulk subspace has minimal effect on in-distribution predictions but influences out-of-distribution outcomes.

- Understanding these dynamics can lead to better neural network optimization strategies.

Conclusion

This study offers insight into neural network training by decomposing the effect of initialization on the trained parameters into chaotic, bulk, and stable subspaces. It highlights how initialization and data structure affect training dynamics. The findings challenge traditional views on parameter updates and open new paths for optimizing neural networks.

