Unlocking the Potential of LLMs with AsyncLM

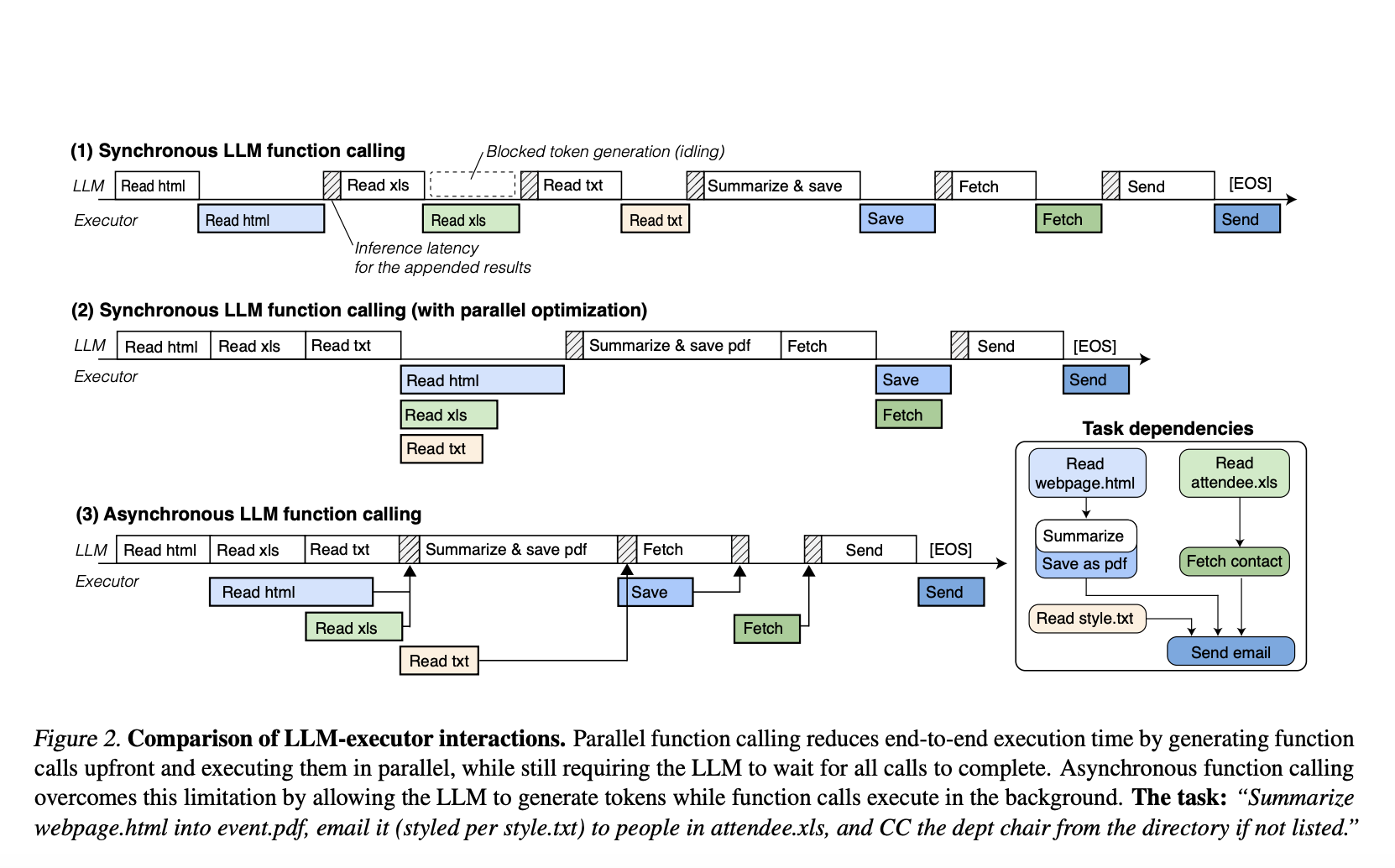

Large Language Models (LLMs) can now interact with external tools and data sources, such as weather APIs or calculators, through function calls. This opens the door to applications like autonomous AI agents and advanced reasoning systems. However, the traditional method of calling functions forces the LLM to pause generation until each call returns, which leaves it idle and wastes resources.

Challenges with Synchronous Function Calling

Synchronous calling slows processing because the LLM must wait for each function call to finish before it can continue. As tasks grow more complex and involve more calls, these waits add up, and the approach becomes impractical for handling multiple operations at once.
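To make the cost concrete, here is a minimal sketch of a synchronous schedule. The `Step` type and every duration below are illustrative assumptions, not measurements from any real system; the point is only that blocking steps add up and the model sits idle for the whole duration of each call.

```python
from dataclasses import dataclass

@dataclass
class Step:
    kind: str       # "generate" (model is decoding) or "call" (tool is running)
    seconds: float  # assumed duration, for illustration only

def synchronous_latency(steps):
    """Total time when every step must finish before the next one starts."""
    return sum(step.seconds for step in steps)

# Hypothetical task: the model generates, calls a tool, generates again, and so on.
plan = [
    Step("generate", 0.5),
    Step("call", 2.0),
    Step("generate", 0.5),
    Step("call", 1.5),
    Step("generate", 0.25),
]

total = synchronous_latency(plan)
# Time the model spends waiting on tools instead of generating.
idle = sum(step.seconds for step in plan if step.kind == "call")
print(f"total={total:.2f}s, model idle for {idle:.2f}s")
```

In this made-up schedule, most of the wall-clock time is spent waiting on tools rather than generating.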

Improving LLM Efficiency

Recent improvements focus on:

- Parallel Function Execution: Running function calls at the same time.

- Combining Calls: Merging multiple calls into fewer transactions.

- Syntax Optimization: Improving how functions are structured to reduce overhead.

Despite these advances, the underlying limitation remains: the LLM still waits for function results before it continues generating.
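The sketch below illustrates why parallel execution alone does not remove the wait. It uses Python's standard `asyncio.gather` to run two hypothetical tools concurrently; the tool names and delays are assumptions for illustration. Even though the calls overlap with each other, the simulated "generation" cannot resume until the slowest call has returned.

```python
import asyncio

async def call_tool(name, seconds, log):
    """Hypothetical tool call; the sleep stands in for network or compute delay."""
    log.append(f"start {name}")
    await asyncio.sleep(seconds)
    log.append(f"done {name}")
    return f"{name}-result"

async def parallel_but_blocking(log):
    # Both calls run concurrently, but the caller still blocks on this line
    # until every call has finished.
    results = await asyncio.gather(
        call_tool("weather", 0.05, log),
        call_tool("calculator", 0.01, log),
    )
    log.append("generation resumes")
    return results

log = []
results = asyncio.run(parallel_but_blocking(log))
print(results)
print(log)
```

The log shows "generation resumes" only after both tools report completion, so total latency is bounded below by the slowest call.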

Introducing AsyncLM

The new AsyncLM system from Yale University lets an LLM keep generating while its function calls execute. While a function is being processed, the LLM can continue working on other parts of the task, which improves efficiency and reduces waiting time.

Key Features of AsyncLM

AsyncLM incorporates:

- Interrupt Mechanism: The LLM is notified when a function call completes, so it does not have to sit idle while waiting for results.

- Domain-Specific Language (CML): A specialized language, CML, that serves as the interface for asynchronous interactions.

- Fine-Tuning Strategies: Training that adapts LLMs to manage asynchronous tasks and the dependencies between them.
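The interrupt idea in the list above can be sketched with plain `asyncio`. This is not AsyncLM's implementation and the bracketed markers are not real CML syntax; they are hypothetical placeholders. The sketch assumes a simulated decoder that emits one token per step, launches a tool in the background, and splices a completion notice into the stream as soon as the tool finishes.

```python
import asyncio

async def run_tool(name, seconds, interrupts):
    """Hypothetical tool: sleeps, then posts a completion notice."""
    await asyncio.sleep(seconds)
    await interrupts.put(f"[interrupt: {name} done]")

def drain(interrupts, stream):
    """Move any pending completion notices into the output stream."""
    while not interrupts.empty():
        stream.append(interrupts.get_nowait())

async def generate(tokens, tool_seconds=0.05, token_seconds=0.01):
    interrupts = asyncio.Queue()
    stream = ["[call: weather]"]  # placeholder marker, not real CML
    # Launch the tool without waiting for it.
    task = asyncio.create_task(run_tool("weather", tool_seconds, interrupts))
    for token in tokens:
        await asyncio.sleep(token_seconds)  # simulated per-token decode time
        stream.append(token)
        drain(interrupts, stream)           # check for interrupts between tokens
    await task                              # make sure the tool has finished
    drain(interrupts, stream)
    return stream

tokens = [f"tok{i}" for i in range(12)]
stream = asyncio.run(generate(tokens))
print(stream)
```

Tokens keep flowing after the call is issued, and the notice appears partway through the stream rather than before generation resumes. A real system would also need the model itself to understand such notices, which is what the fine-tuning step addresses.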

Performance Benefits

Benchmarks show that AsyncLM can complete tasks up to 5.4 times faster than synchronous function calling without compromising accuracy. This capability enables new AI applications, including new forms of interaction between humans and LLMs.

Evaluation Metrics

The evaluation focuses on:

- Latency: How quickly tasks are completed with the asynchronous approach compared with synchronous calling.

- Correctness: Whether function calls remain accurate under asynchronous execution.
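As a rough illustration of how these two metrics could be computed, the sketch below defines a speedup ratio and an order-insensitive call accuracy. The function names, the representation of a call as a `(name, arguments)` tuple, and all numbers are assumptions made for this example; they are not taken from the AsyncLM benchmarks.

```python
from collections import Counter

def speedup(sync_seconds, async_seconds):
    """Ratio of synchronous to asynchronous task completion time."""
    if async_seconds <= 0:
        raise ValueError("async_seconds must be positive")
    return sync_seconds / async_seconds

def call_accuracy(predicted, expected):
    """Fraction of expected calls that were produced, ignoring order.

    Order is ignored because asynchronous calls may be issued in a
    different sequence. Extra predicted calls are not penalized here.
    """
    if not expected:
        return 1.0
    overlap = Counter(predicted) & Counter(expected)
    return sum(overlap.values()) / len(expected)

# Made-up data for illustration only.
expected = [("get_weather", "Paris"), ("add", "2,3")]
predicted = [("add", "2,3"), ("get_weather", "Paris")]

print(speedup(4.0, 2.0))                   # 2.0
print(call_accuracy(predicted, expected))  # 1.0
```

A stricter correctness check might also penalize spurious calls or compare final task outputs; the version here only checks that every expected call was made.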

Conclusion

AsyncLM represents a significant advance in LLM technology by decoupling function execution from text generation, so that tasks can proceed independently. This leads to shorter waiting times and more efficient LLM interactions with tools, data, and users.

Discover More and Connect

Explore the potential of AsyncLM and how it can revolutionize your business with AI. Key steps include:

- Identify Automation Opportunities: Find where AI can enhance customer interactions.

- Define KPIs: Measure the impact of AI initiatives on your business.

- Select an AI Solution: Choose tools that fit your needs.

- Implement Gradually: Start small, analyze results, and grow effectively.

For AI KPI management advice, contact us at hello@itinai.com. Follow us for more insights on Telegram and Twitter.

Learn how AI can transform your sales and customer engagement processes at itinai.com.