Understanding Language Models and Synthetic Data

Language models (LMs) are increasingly used not only to solve problems but also to generate synthetic data for training other models. Synthetic data can reduce reliance on manual annotation and offers a scalable way to build training sets in domains such as mathematics, coding, and instruction following. High-quality generated datasets can improve how well trained models generalize, which makes LMs valuable as data generators in both AI research and applications.

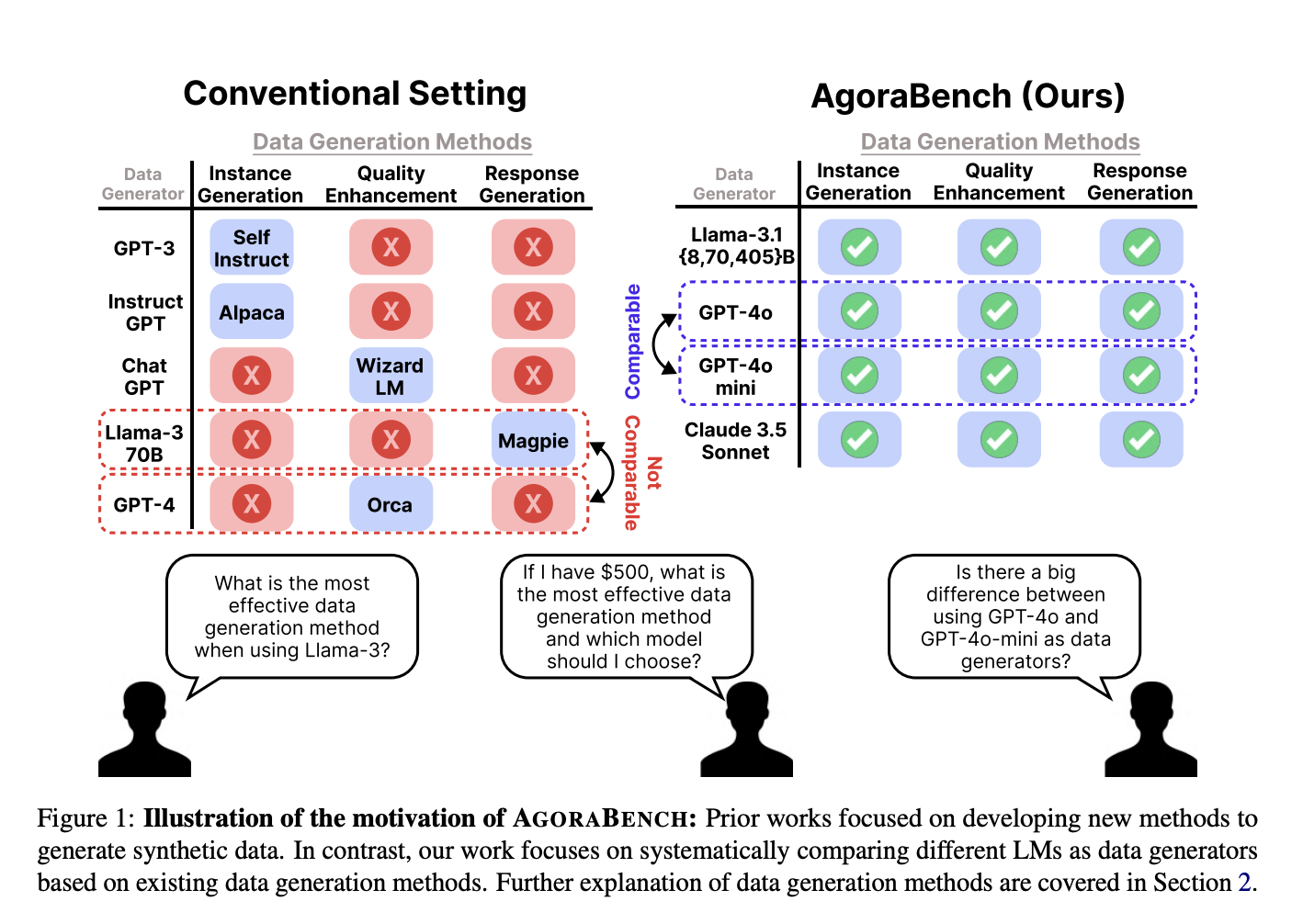

The Challenge of Evaluating Language Models

One major challenge is determining which LMs are best at generating synthetic data. Without a unified benchmark for evaluating LMs as data generators, researchers have little basis for choosing the right model for a specific task. Notably, a model's problem-solving ability does not always predict the quality of the data it generates, so problem-solving benchmarks cannot simply stand in for this comparison.

Exploring Synthetic Data Generation

Researchers have examined various methods for synthetic data generation using LMs such as GPT-3, Claude-3.5, and Llama-based models. Approaches such as generating instruction-following data and generating responses have been tested, but results vary across studies, which makes it hard to draw meaningful conclusions about the relative strengths of each model.

Introducing AGORABENCH

Researchers from institutions including Carnegie Mellon University and the University of Washington developed AGORABENCH, a benchmark for systematically evaluating LMs as data generators under controlled conditions. AGORABENCH standardizes variables such as seed datasets and evaluation metrics, so that differences in results can be attributed to the generator model rather than to the experimental setup. This enables fair comparisons across data generation settings such as instance generation and quality enhancement.
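As a minimal sketch of what "controlled conditions" means in practice, the snippet below holds every experimental variable fixed and varies only the generator model. The setting names follow the three settings AgoraBench covers (instance generation, response generation, and quality enhancement); the field names, `ExperimentConfig`, and `sweep_generators` are hypothetical illustrations, not AGORABENCH's actual code.

```python
from dataclasses import dataclass, replace
from enum import Enum


class Setting(Enum):
    """Data generation settings compared in AgoraBench."""
    INSTANCE_GENERATION = "instance_generation"  # create new instances from seed examples
    RESPONSE_GENERATION = "response_generation"  # write responses for given instructions
    QUALITY_ENHANCEMENT = "quality_enhancement"  # improve existing instructions/responses


@dataclass(frozen=True)
class ExperimentConfig:
    # Hypothetical fields: everything except `generator` stays fixed in a sweep.
    domain: str
    setting: Setting
    seed_dataset: str
    meta_prompt_id: str
    student_model: str
    generator: str


def sweep_generators(base: ExperimentConfig, generators: list[str]) -> list[ExperimentConfig]:
    """Build one config per generator, changing only the `generator` field."""
    return [replace(base, generator=g) for g in generators]
```

Because the config is frozen and `replace` copies every other field unchanged, any difference in downstream scores can only come from the generator.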

Methodology of AGORABENCH

AGORABENCH assesses data generation capabilities with a fixed protocol. Each domain uses a designated seed dataset, so every generator starts from the same material. Meta-prompts instruct the models how to generate synthetic data, and properties of the generated data, such as instruction difficulty and response quality, are measured. The key metric, Performance Gap Recovered (PGR), quantifies how much a student model improves after training on the synthetic data.
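The text does not spell out the PGR formula. A minimal sketch, assuming PGR is the percentage of the gap between an untrained base student and a stronger reference model that the student closes after training on synthetic data; the function and parameter names are hypothetical.

```python
def performance_gap_recovered(student: float, base: float, reference: float) -> float:
    """Percentage of the base-to-reference gap closed by the trained student.

    student:   benchmark score of the base model after training on synthetic data
    base:      score of the same model before that training
    reference: score of a stronger reference model (assumed upper target)
    """
    gap = reference - base
    if gap == 0:
        raise ValueError("reference and base scores must differ")
    # Negative values mean the synthetic data made the student worse.
    return (student - base) / gap * 100.0
```

For example, a student scoring 55 where the base scores 40 and the reference scores 70 recovers half the gap, a PGR of 50%.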

Key Findings from AGORABENCH

GPT-4o was the strongest model for instance generation, with a PGR of 46.8%, while Claude-3.5-Sonnet led in quality enhancement with a PGR of 17.9%. Some weaker models nonetheless outperformed stronger ones in specific scenarios, showing that no single ranking captures generator quality across all settings. The cost analysis found that less expensive models can yield comparable results, so the strongest model is not always the most cost-effective choice.
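One way to act on the cost finding is to rank candidate generators by PGR per dollar, subject to a minimum acceptable PGR. The sketch below assumes you already have a measured PGR and a total generation cost for each run; the class, function names, labels, and numbers are hypothetical placeholders, not AGORABENCH results.

```python
from dataclasses import dataclass


@dataclass
class GeneratorRun:
    name: str        # placeholder generator label
    pgr: float       # measured PGR (%) of the student trained on this run's data
    cost_usd: float  # total cost of generating the data


def pgr_per_dollar(run: GeneratorRun) -> float:
    """PGR points gained per dollar spent on data generation."""
    if run.cost_usd <= 0:
        raise ValueError("cost must be positive")
    return run.pgr / run.cost_usd


def most_cost_effective(runs: list[GeneratorRun], min_pgr: float = 0.0) -> GeneratorRun:
    """Return the run with the highest PGR per dollar among runs reaching min_pgr."""
    eligible = [r for r in runs if r.pgr >= min_pgr]
    if not eligible:
        raise ValueError("no run meets the PGR threshold")
    return max(eligible, key=pgr_per_dollar)


runs = [
    GeneratorRun("large-model", pgr=40.0, cost_usd=200.0),  # illustrative values only
    GeneratorRun("small-model", pgr=35.0, cost_usd=20.0),
]
print(most_cost_effective(runs).name)
print(most_cost_effective(runs, min_pgr=38.0).name)
```

The `min_pgr` threshold expresses the trade-off: the cheaper generator wins unless the required quality bar rules it out, in which case the more expensive one is selected.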

Implications for AI Research and Industry

The study shows that models with stronger problem-solving ability do not always generate better synthetic data. Properties of the generated data, such as response quality and instruction difficulty, strongly affect outcomes. The insights from AGORABENCH can help researchers choose suitable models for synthetic data generation while balancing cost and performance.

Take Action with AI

To evolve your company with AI and stay competitive, consider the following steps:

- Identify Automation Opportunities: Find customer interaction points that can benefit from AI.

- Define KPIs: Ensure your AI initiatives have measurable impacts on business outcomes.

- Select an AI Solution: Choose tools that fit your needs and allow for customization.

- Implement Gradually: Start with a pilot project, gather data, and expand usage wisely.
