Text Generation: A Key to Modern AI

Text generation underpins applications such as chatbots and content creation. Serving it efficiently becomes hard when prompts are long and the context keeps changing over a conversation. Many inference systems slow down, run short of GPU memory, or scale poorly as context length grows, which often forces developers to choose between speed and capability.

Introducing TGI v3.0 from Hugging Face

Hugging Face has released Text Generation Inference (TGI) v3.0, a major efficiency update to its toolkit for serving large language models. According to Hugging Face's benchmarks, TGI v3.0 is 13 times faster than vLLM on long prompts. It also deploys with no configuration: users only need to provide a Hugging Face model ID.

Key Benefits

- Increased Token Capacity: TGI v3.0 can hold roughly three times as many tokens as vLLM on the same hardware, allowing a single NVIDIA L4 GPU to process about 30,000 tokens.

- Faster Response Times: Optimized data structures make previously processed context fast to look up and reuse, speeding up responses in long interactions.
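
Token capacity is bounded by memory because a serving engine keeps a key/value (KV) cache for every token in context, and that cache grows linearly with the number of tokens. The sketch below estimates the cost with the standard formula 2 × layers × KV heads × head dimension × bytes per element. The configuration values are assumptions chosen to resemble an 8B-parameter Llama-style model with grouped-query attention and an fp16 cache; the article does not state which model the L4 figure refers to, and the estimate ignores model weights, activations, and allocator overhead. `ModelConfig` and the helper functions are hypothetical names for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelConfig:
    """Hypothetical attention configuration used only for this estimate."""
    num_layers: int
    num_kv_heads: int
    head_dim: int
    bytes_per_element: int  # 2 for fp16/bf16


def kv_bytes_per_token(cfg: ModelConfig) -> int:
    # Factor 2: one key vector and one value vector per KV head, per layer.
    return 2 * cfg.num_layers * cfg.num_kv_heads * cfg.head_dim * cfg.bytes_per_element


def kv_cache_gib(cfg: ModelConfig, num_tokens: int) -> float:
    """Approximate KV-cache size in GiB for num_tokens tokens of context."""
    return kv_bytes_per_token(cfg) * num_tokens / 2**30


# Assumed Llama-style 8B configuration (grouped-query attention, fp16 cache).
cfg = ModelConfig(num_layers=32, num_kv_heads=8, head_dim=128, bytes_per_element=2)

print(kv_bytes_per_token(cfg))              # 131072 bytes, i.e. 128 KiB per token
print(round(kv_cache_gib(cfg, 30_000), 2))  # 3.66
```

Under these assumptions, 30,000 tokens of context need about 3.7 GiB of KV cache on top of the model weights, so any memory a server saves elsewhere can be spent on longer prompts.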

Technical Highlights

TGI v3.0 includes several important improvements:

- Reduced Memory Use: A smaller memory footprint leaves more room for long prompts, which benefits developers working with limited hardware.

- Prompt Optimization: TGI keeps the already-processed conversation context in memory (prefix caching), so follow-up questions are answered quickly without recomputing the earlier turns.

- Zero-Configuration Setup: The system automatically adjusts settings based on hardware, reducing the need for manual configuration.
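
The prompt-reuse idea in the list above is commonly called prefix caching: if a new request begins with tokens the server has already processed (for example, the earlier turns of a chat), their cached state can be reused and only the new suffix needs computing. The toy sketch below shows the lookup with a token-level trie. It is an assumed simplification for illustration, not TGI's actual data structure: the class and method names are hypothetical, and it only records which token prefixes have been seen instead of storing real KV tensors.

```python
from typing import Dict, List, Tuple


class TrieNode:
    def __init__(self) -> None:
        self.children: Dict[int, "TrieNode"] = {}


class PrefixCache:
    """Toy prefix cache keyed on token IDs (illustrative only)."""

    def __init__(self) -> None:
        self.root = TrieNode()

    def insert(self, tokens: List[int]) -> None:
        # Mark every prefix of `tokens` as cached; a real server would
        # attach KV-cache blocks to these nodes.
        node = self.root
        for tok in tokens:
            node = node.children.setdefault(tok, TrieNode())

    def longest_prefix(self, tokens: List[int]) -> int:
        # Walk the trie as far as the new prompt matches cached tokens.
        node, matched = self.root, 0
        for tok in tokens:
            child = node.children.get(tok)
            if child is None:
                break
            node, matched = child, matched + 1
        return matched

    def plan(self, tokens: List[int]) -> Tuple[int, int]:
        """Return (reused_tokens, tokens_to_compute) for a new prompt."""
        reused = self.longest_prefix(tokens)
        return reused, len(tokens) - reused


cache = PrefixCache()
first_turn = list(range(1000))               # stand-in token IDs for a long first turn
cache.insert(first_turn)

follow_up = first_turn + [5001, 5002, 5003]  # same history plus a short new question
print(cache.plan(follow_up))                 # (1000, 3)
cache.insert(follow_up)                      # cache the extended history for the next turn
```

For the follow-up request, only 3 of 1,003 tokens need fresh computation, which is the kind of saving that makes replies in long conversations fast.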

Results and Insights

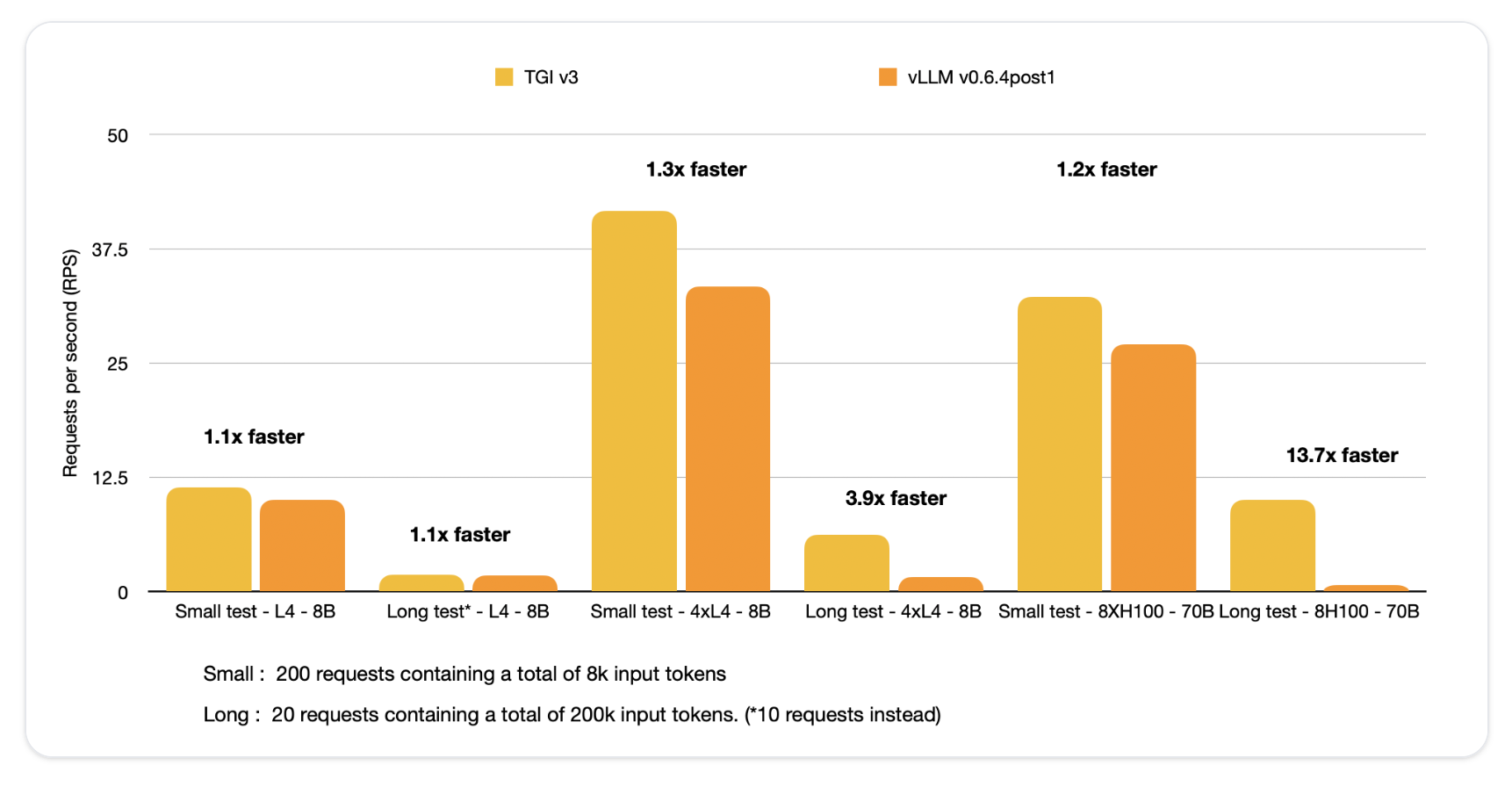

Benchmark tests published by Hugging Face report the following:

- In a conversation with more than 200,000 tokens of context, TGI returns a reply in about 2 seconds, compared with 27.5 seconds for vLLM, because the earlier context is already cached.

- Memory optimizations let large prompts and long conversations fit within GPU memory limits, which suits developers focused on efficiency and scalability.
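
As a quick check, the ratio of the two latencies quoted above gives the speedup behind the "13 times faster" claim. The `speedup` helper is a hypothetical name for this illustration, and the result only restates Hugging Face's reported numbers; it is not an independent measurement.

```python
def speedup(baseline_s: float, candidate_s: float) -> float:
    """Ratio of baseline latency to candidate latency (higher is better)."""
    if candidate_s <= 0:
        raise ValueError("candidate latency must be positive")
    return baseline_s / candidate_s


# Reported reply latencies for a 200,000+ token conversation.
vllm_s, tgi_s = 27.5, 2.0
print(f"{speedup(vllm_s, tgi_s):.2f}x")  # 13.75x
```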

Conclusion

TGI v3.0 is a significant step forward for text generation serving. By reducing memory usage and reusing already-processed context, it helps developers build faster, more scalable applications, and its zero-configuration setup makes high-performance inference accessible to more users.

Transform Your Business with AI

Stay competitive by leveraging TGI v3.0:

- Identify Automation Opportunities: Find customer interaction points that can benefit from AI.

- Define KPIs: Ensure your AI projects have measurable impacts.

- Select an AI Solution: Choose tools that fit your needs and allow customization.

- Implement Gradually: Start small, gather data, and expand wisely.
