Understanding Transformers and Their Role in Graph Search

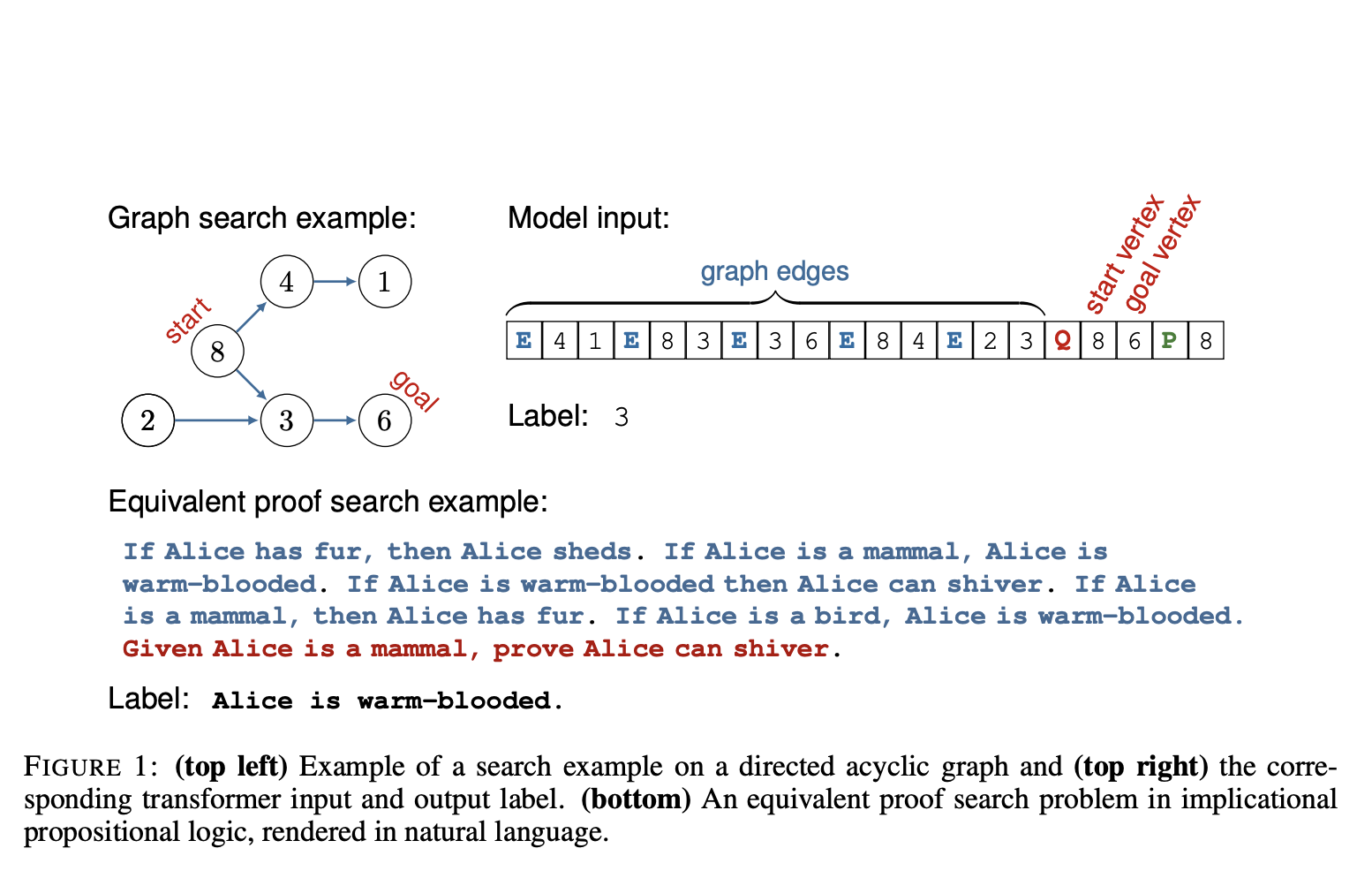

Transformers are the architecture behind large language models (LLMs), and they are increasingly applied to graph search, a core problem in AI and computational logic. Graph search means exploring nodes and edges to decide whether two nodes are connected or to find a path between them. It remains unclear, however, how well transformers can learn to perform graph search, especially as graphs grow larger and more complex.
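For readers who want a concrete picture of the task, here is a minimal sketch of classical graph search using breadth-first search. It is only a reference point for what "finding a path" means; the function name `find_path` and the edge-list format are assumptions made for this illustration, not details from the study.

```python
from collections import deque


def find_path(edges, start, goal):
    """Breadth-first search over a directed graph given as (source, target) pairs.

    Returns the list of nodes on a shortest path, or None if goal is unreachable.
    """
    adjacency = {}
    for source, target in edges:
        adjacency.setdefault(source, []).append(target)

    parents = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for neighbor in adjacency.get(node, []):
            if neighbor not in parents:
                parents[neighbor] = node
                queue.append(neighbor)
    return None


edges = [(1, 2), (2, 3), (1, 4), (4, 5)]
print(find_path(edges, 1, 5))  # [1, 4, 5]
print(find_path(edges, 3, 1))  # None
```

A transformer trained on this task has to produce the same kind of answer from the edge list alone, without being given an explicit queue or visited set.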

The Challenge of Graph Search

Graphs can represent complex data, but searching them is computationally demanding: as a graph grows, so does the search space. Current transformer models often rely on heuristics rather than a systematic search procedure, which hurts robustness and generalization. Their performance tends to degrade as graph size increases, making it hard to identify algorithms that remain effective across diverse scenarios.

Innovative Solutions for Improvement

To tackle these challenges, researchers from multiple universities and Google proposed a new training framework for transformers aimed at improving their graph search capabilities. They generated training data from directed acyclic graphs (DAGs), constructed so that models could not solve the examples by relying on heuristics. Training on examples of gradually increasing complexity allowed the models to learn more robust algorithms.
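As a rough sketch of what such a dataset could look like, the code below builds small DAGs in which the start node has several equally long branches and only one of them ends at the goal, so the correct first step cannot be guessed from local structure. The construction, the function names `make_example` and `make_balanced_dataset`, and the dictionary format are all assumptions for illustration; the study's actual generator is not described here.

```python
import random


def make_example(lookahead, num_branches=2, rng=None):
    """Build one DAG example whose answer requires looking `lookahead` steps ahead.

    Every branch leaving the start node has the same length, and edges always
    run from a lower node id to a higher one, so the graph is acyclic.
    """
    if lookahead < 1 or num_branches < 1:
        raise ValueError("lookahead and num_branches must be at least 1")
    rng = rng or random.Random()
    start, next_id = 0, 1
    edges, first_steps, branch_ends = [], [], []
    for _ in range(num_branches):
        previous = start
        for step in range(lookahead):
            edges.append((previous, next_id))
            if step == 0:
                first_steps.append(next_id)
            previous = next_id
            next_id += 1
        branch_ends.append(previous)
    correct = rng.randrange(num_branches)
    rng.shuffle(edges)  # edge order should not reveal the answer
    return {
        "edges": edges,
        "start": start,
        "goal": branch_ends[correct],
        "label": first_steps[correct],  # the correct next node after start
        "lookahead": lookahead,
    }


def make_balanced_dataset(max_lookahead, per_lookahead, seed=0):
    """Equal number of examples per lookahead, ordered from easy to hard."""
    rng = random.Random(seed)
    return [
        make_example(lookahead, rng=rng)
        for lookahead in range(1, max_lookahead + 1)
        for _ in range(per_lookahead)
    ]


dataset = make_balanced_dataset(max_lookahead=4, per_lookahead=5)
print(len(dataset))  # 20
```

Ordering the examples by lookahead is one simple way to realize "gradually increasing complexity"; a real pipeline might instead sample difficulty from a schedule.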

Key Strategies in Training

The training data covered a balanced variety of graph scenarios, so that examples requiring different lookaheads and different numbers of pathfinding steps were all represented. Using an “exponential path-merging” approach, transformers could systematically explore paths and encode detailed connectivity information. Mechanistic interpretability techniques were used to understand how the transformers process paths within graphs.
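One way to read the term “exponential path-merging” is that each node keeps a set of nodes it can reach, and each round merges in the sets of the nodes already known to be reachable, so the covered distance doubles per round. The sketch below illustrates that doubling idea in plain Python. It is an interpretation offered for intuition, assuming rounds play the role of layers; it is not the attention computation analyzed in the study, and `merge_reachable_sets` is a hypothetical name.

```python
def merge_reachable_sets(edges, num_rounds):
    """Grow per-node reachable sets by repeated merging.

    Before any round, each node knows itself and its direct successors
    (distance <= 1). Each round replaces a node's set with the union of the
    sets of everything it currently reaches, doubling the covered distance.
    """
    vertices = {v for edge in edges for v in edge}
    reachable = {v: {v} for v in vertices}
    for source, target in edges:
        reachable[source].add(target)

    for _ in range(num_rounds):
        updated = {}
        for v in vertices:
            merged = set()
            for u in reachable[v]:
                merged |= reachable[u]
            updated[v] = merged
        reachable = updated
    return reachable


# A chain 0 -> 1 -> ... -> 8: node 8 is eight steps away from node 0.
chain = [(i, i + 1) for i in range(8)]
print(sorted(merge_reachable_sets(chain, 2)[0]))  # [0, 1, 2, 3, 4]
print(sorted(merge_reachable_sets(chain, 3)[0]))  # [0, 1, 2, 3, 4, 5, 6, 7, 8]
```

Under this reading, a fixed number of rounds covers paths whose length is exponential in that number, which would also explain why lookaheads beyond a certain limit become unreachable for a model of fixed depth.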

Results and Findings

The study yielded mixed results. While smaller graphs showed near-perfect accuracy, performance dropped significantly as the graph size increased, particularly with lookaheads beyond certain limits. The study also revealed that simply increasing the model size did not enhance performance in complex searches.

Alternative Methods and Insights

Researchers evaluated other methods, such as depth-first search and intermediate prompting, which did simplify tasks but failed to improve handling of larger graphs. Key findings include:

- Models trained on well-curated data outperformed those trained on naive datasets.

- Understanding the “exponential path-merging” algorithm is a prerequisite for future improvements.

- Difficulty increased with graph size, indicating the need for architectural changes.

- Scaling up model parameters or training data didn’t solve the underlying issues.
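The depth-first search variant mentioned above can be pictured as asking the model to emit the search one edge at a time instead of jumping straight to the answer. The following sketch produces such a step-by-step trace; the trace format (a list of visited edges) and the name `dfs_trace` are assumptions for illustration, not the format used in the study.

```python
def dfs_trace(edges, start, goal):
    """Depth-first search that records every edge it follows, in order.

    Returns (trace, found): the list of traversed edges, including dead ends
    that were backtracked from, and whether the goal was reached.
    """
    adjacency = {}
    for source, target in edges:
        adjacency.setdefault(source, []).append(target)

    trace = []
    visited = set()

    def visit(node):
        visited.add(node)
        if node == goal:
            return True
        for neighbor in adjacency.get(node, []):
            if neighbor in visited:
                continue
            trace.append((node, neighbor))  # one small intermediate step
            if visit(neighbor):
                return True
        return False

    found = visit(start)
    return trace, found


edges = [(1, 2), (2, 3), (1, 4), (4, 5)]
print(dfs_trace(edges, 1, 5))
# ([(1, 2), (2, 3), (1, 4), (4, 5)], True)
```

Each step in the trace needs only a local decision, which is why this kind of intermediate output simplifies the task, even though the trace itself gets longer as the graph grows.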

Conclusions and Future Directions

This research highlights transformers’ capabilities and limitations in graph search tasks. The insights gained from tailored training distributions and understanding algorithmic behaviors provide valuable directions for enhancing scalability and robustness in graph reasoning tasks. New architectures or advanced training methods could be key to solving these challenges.


Check out the detailed study for comprehensive insights. All credit for this research goes to the dedicated researchers involved.