Understanding LLM Hallucinations

Large language models (LLMs) such as GPT-4 and LLaMA are remarkably good at understanding and generating text. However, they sometimes produce plausible but incorrect information, known as hallucinations. This is a serious problem in applications where accuracy is critical.

Importance of Detecting Hallucinations

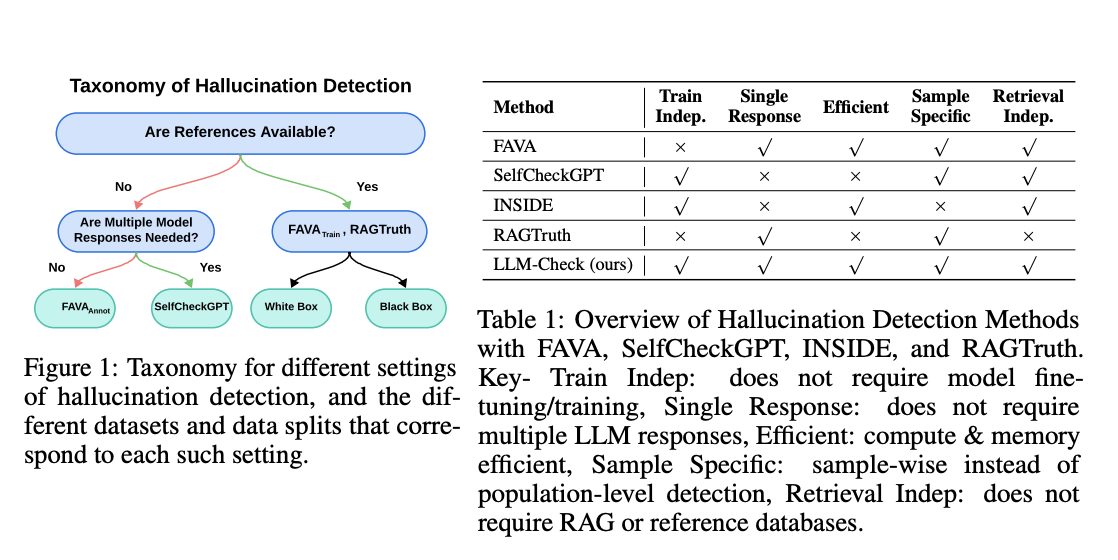

To use LLMs effectively, we need to identify and address these hallucinations. How hard detection is depends on the level of access to the model: in an open (white-box) setting its internal states are available, while in a closed (black-box) setting only its outputs are.

Current Detection Methods

Several techniques exist to detect hallucinations, such as:

- Uncertainty Estimation: Using metrics such as perplexity, computed from the model's token probabilities, to gauge how confident the model is in its output.

- Self-Consistency Techniques: Sampling multiple responses to the same input and checking them for inconsistencies.

- RAG Methods: Using retrieval-augmented generation (RAG) to check LLM output against external data.

However, these methods often require multiple sampled outputs or large external datasets, which can be impractical at inference time.
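To make the first two ideas concrete, here is a minimal Python sketch. It assumes you already have per-token log-probabilities for a response and a handful of sampled responses to the same prompt. The function names (`perplexity`, `jaccard`, `self_consistency`) are illustrative, and word overlap is only a crude stand-in for the semantic comparison a real system would use.

```python
import math
from itertools import combinations


def perplexity(token_logprobs):
    """Perplexity: exp of the mean negative log-probability per token.

    Higher values mean the model was less certain about its own output.
    """
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def jaccard(a, b):
    """Word-overlap similarity in [0, 1] (assumption: a crude proxy for meaning)."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    if not words_a and not words_b:
        return 1.0
    return len(words_a & words_b) / len(words_a | words_b)


def self_consistency(responses):
    """Mean pairwise similarity across sampled responses to one prompt."""
    pairs = list(combinations(responses, 2))
    if not pairs:
        raise ValueError("need at least two responses")
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


if __name__ == "__main__":
    # Hypothetical log-probabilities and samples, for illustration only.
    print(perplexity([-0.1, -0.3, -2.5, -0.2]))
    print(self_consistency([
        "paris is the capital",
        "lyon is the capital",
        "paris is the capital",
    ]))
```

Note that the self-consistency score needs several generations per prompt, which is exactly the cost that single-response methods try to avoid.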

Introducing LLM-Check

Researchers at the University of Maryland have developed a method called LLM-Check, which detects hallucinations within a single response and without extensive additional resources. Here’s how it works:

Key Features of LLM-Check

- Single Response Analysis: It examines the model's internal hidden states, attention maps, and output probabilities for a single response to flag likely hallucinations.

- High Efficiency: LLM-Check is reported to be up to 450 times faster than some baseline methods, making it a good fit for real-time applications.

- No Extra Training Required: It relies on signals the model already produces, with no additional training and no need to sample multiple outputs.
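As a rough illustration of how an attention map can be turned into a single number, the sketch below computes a log-determinant score per attention head and averages over heads. It rests on two assumptions that are not stated in this article: the model uses causal attention, so each map is lower-triangular, and the determinant of a triangular matrix is the product of its diagonal. The function names are hypothetical, and this is not the authors' implementation.

```python
import math


def attention_logdet(attn, eps=1e-12):
    """Log-determinant of one causal (lower-triangular) attention map.

    For a triangular matrix the determinant is the product of the diagonal,
    so the log-determinant is the sum of the log diagonal entries.
    """
    n = len(attn)
    for i, row in enumerate(attn):
        if len(row) != n:
            raise ValueError("attention map must be square")
        if any(abs(x) > 1e-9 for x in row[i + 1:]):
            raise ValueError("expected a lower-triangular (causal) map")
    # eps guards against log(0) if a diagonal entry underflows.
    return sum(math.log(max(attn[i][i], eps)) for i in range(n))


def attention_score(heads):
    """Average the per-head log-determinants into one score per response."""
    if not heads:
        raise ValueError("need at least one attention head")
    return sum(attention_logdet(h) for h in heads) / len(heads)


if __name__ == "__main__":
    # Toy 3-token attention map; each row sums to 1, as after a softmax.
    head = [
        [1.0, 0.0, 0.0],
        [0.5, 0.5, 0.0],
        [0.25, 0.25, 0.5],
    ]
    print(attention_score([head]))
```

In practice such a score would be compared against a threshold calibrated on labeled examples. Because only the diagonal is needed, the computation is cheap, which fits the efficiency claim above.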

Performance and Results

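LLM-Check is reported to perform well across several datasets. It computes scores such as the Hidden Score, derived from the model's hidden states, and the Attention Score, derived from its attention maps, to detect hallucinations. The method applies in both white-box and black-box settings; when the original model's internals are unavailable, the scores are computed with an auxiliary model that can be inspected.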
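For intuition about a hidden-state score, here is a minimal sketch that treats the per-token hidden vectors of a response as rows of a matrix and computes the mean log-determinant of their regularized Gram matrix. The exact formula, the regularizer `alpha`, and the function names are assumptions made for illustration; the paper should be consulted for the precise definition.

```python
import math


def cholesky_logdet(a):
    """Log-determinant of a symmetric positive-definite matrix via Cholesky."""
    n = len(a)
    lower = [[0.0] * n for _ in range(n)]
    logdet = 0.0
    for i in range(n):
        for j in range(i + 1):
            s = a[i][j] - sum(lower[i][k] * lower[j][k] for k in range(j))
            if i == j:
                if s <= 0:
                    raise ValueError("matrix is not positive definite")
                lower[i][i] = math.sqrt(s)
                logdet += 2.0 * math.log(lower[i][i])
            else:
                lower[i][j] = s / lower[j][j]
    return logdet


def hidden_score(hidden, alpha=1e-3):
    """Mean log-determinant of the token Gram matrix H H^T + alpha * I.

    `hidden` holds one vector per token (m tokens, d dimensions each).
    `alpha` is an assumed regularizer that keeps the matrix invertible.
    """
    m = len(hidden)
    if m == 0:
        raise ValueError("need at least one token")
    gram = [
        [sum(x * y for x, y in zip(hidden[i], hidden[j])) + (alpha if i == j else 0.0)
         for j in range(m)]
        for i in range(m)
    ]
    return cholesky_logdet(gram) / m


if __name__ == "__main__":
    # Toy hidden states: orthogonal tokens versus near-duplicate tokens.
    print(hidden_score([[1.0, 0.0], [0.0, 1.0]]))
    print(hidden_score([[1.0, 0.0], [1.0, 0.0]]))
```

The score drops sharply when token representations are nearly collinear, so it summarizes how spread out the response's hidden states are. As with any such score, a decision threshold has to be calibrated on labeled data.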

Conclusion

LLM-Check offers a practical way to detect inaccuracies in LLM outputs, improving reliability for real-time use. It simplifies the detection process, avoids the need for extensive resources, and is reported to improve detection accuracy over the baselines it was compared with.
