Introducing Deepthought-8B-LLaMA-v0.01-alpha

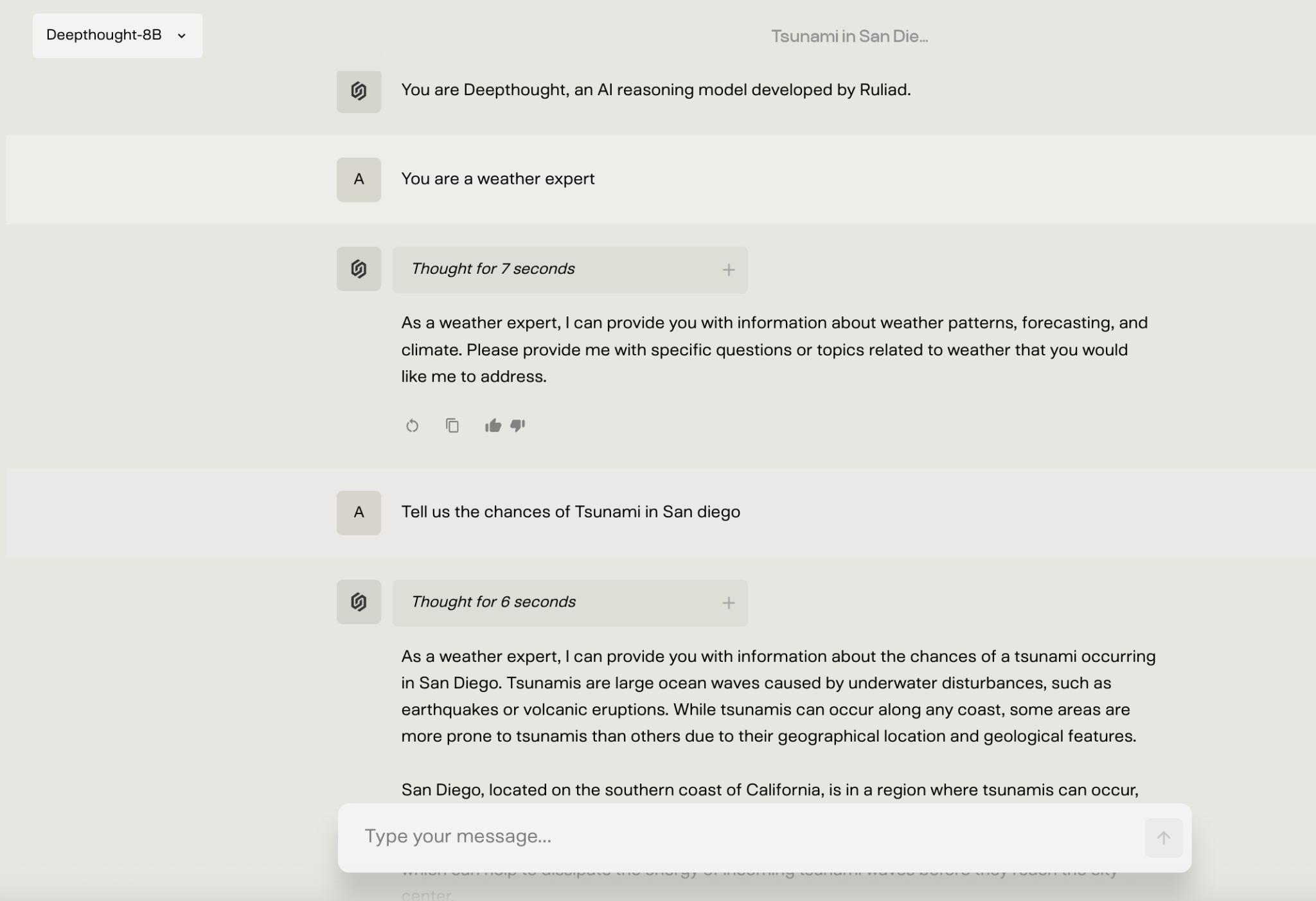

Ruliad AI has launched Deepthought-8B, a new AI model designed for clear and understandable reasoning. Built on LLaMA-3.1, this model has 8 billion parameters and offers advanced problem-solving capabilities while being efficient to operate.

Key Features and Benefits

- Transparent Reasoning: Every decision-making step is documented, allowing users to follow the AI’s thought process in a structured JSON format. This builds trust and makes integration into applications straightforward.

- Customizable Reasoning: Users can adjust reasoning patterns without retraining the model, making it adaptable for various tasks like coding and complex problem-solving.

- Scalable Performance: The model can adjust its reasoning depth based on task complexity, providing flexibility for different challenges.

- Efficient Operation: Runs on systems with 16GB or more of VRAM and optionally supports Flash Attention 2 for improved performance.

- Clear Workflow: The reasoning process includes stages like understanding the problem, gathering data, analysis, and implementation, enhancing usability.
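To make the structured JSON reasoning output more concrete, here is a minimal sketch of how an application might parse and display such a trace. The field names (`step`, `type`, `thought`), the stage labels, and the sample content are assumptions chosen for illustration, loosely following the stages listed above. They are not a documented schema, so check the model's actual output before relying on them.

```python
import json

# Hypothetical reasoning trace. The field names and stage labels are
# assumptions for illustration, not a documented output schema.
SAMPLE_TRACE = """
[
  {"step": 1, "type": "problem_understanding", "thought": "The user asks for the sum of 17 and 25."},
  {"step": 2, "type": "data_gathering", "thought": "The operands are 17 and 25."},
  {"step": 3, "type": "analysis", "thought": "17 + 25 = 42."},
  {"step": 4, "type": "implementation", "thought": "Return 42 as the answer."}
]
"""

REQUIRED_FIELDS = {"step", "type", "thought"}


def parse_trace(raw: str) -> list:
    """Parse a JSON array of reasoning steps and return them ordered by step number."""
    steps = json.loads(raw)
    if not isinstance(steps, list):
        raise ValueError("expected a JSON array of reasoning steps")
    for step in steps:
        missing = REQUIRED_FIELDS - set(step)
        if missing:
            raise ValueError(f"step is missing fields: {sorted(missing)}")
    return sorted(steps, key=lambda s: s["step"])


def format_trace(steps: list) -> list:
    """Render each step as a single human-readable line."""
    return [f'{s["step"]}. [{s["type"]}] {s["thought"]}' for s in steps]


if __name__ == "__main__":
    for line in format_trace(parse_trace(SAMPLE_TRACE)):
        print(line)
```

Validating the fields up front means a malformed trace fails loudly instead of silently producing an incomplete log, which matters when the reasoning steps are shown to users or stored for auditing.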

Performance and Limitations

Deepthought-8B performs well in various benchmarks, including coding and math tasks. However, it has some limitations in complex mathematical reasoning and long-context processing. Ruliad is transparent about these areas for improvement, fostering user trust and encouraging feedback.

Commercial Solution and Support

Deepthought-8B is positioned as a commercial solution with licensing terms that support business use. Comprehensive support options are available, including social media and email assistance. Detailed installation and usage guidelines are provided for user convenience.

Installation and Usage

To get started, you can install the necessary packages:

pip install torch transformers

# Optional: Install Flash Attention 2 for better performance

pip install flash-attn

Follow these steps to use the model:

- Set your HuggingFace token as an environment variable.

- Initialize the model in your Python code.

- Run the provided example script.
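The first two steps above can be sketched roughly as follows. This is not Ruliad's example script. The repository id `ruliad/deepthought-8b-llama-v0.01-alpha`, the `HF_TOKEN` variable name, and the helper names `build_load_kwargs`, `load_model`, and `generate` are assumptions made for illustration. The sketch reads the token from the environment, uses Flash Attention 2 only when the `flash_attn` package is installed and a GPU is present, and otherwise falls back to PyTorch's built-in scaled dot-product attention.

```python
import importlib.util
import os

# Assumed Hugging Face repository id; verify it against the model card.
MODEL_ID = "ruliad/deepthought-8b-llama-v0.01-alpha"


def build_load_kwargs(env=os.environ) -> dict:
    """Collect loader options from the environment (HF_TOKEN is an assumed name)."""
    token = env.get("HF_TOKEN")
    if not token:
        raise RuntimeError("Set the HF_TOKEN environment variable first.")
    # Prefer Flash Attention 2 only if the optional package is installed.
    has_flash = importlib.util.find_spec("flash_attn") is not None
    return {
        "token": token,
        "attn_implementation": "flash_attention_2" if has_flash else "sdpa",
    }


def load_model(model_id: str = MODEL_ID):
    """Load tokenizer and model; heavy imports are deferred until needed."""
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    kwargs = build_load_kwargs()
    device = "cuda" if torch.cuda.is_available() else "cpu"
    if device == "cpu":
        # Flash Attention 2 needs a GPU, so fall back on CPU-only machines.
        kwargs["attn_implementation"] = "sdpa"
    tokenizer = AutoTokenizer.from_pretrained(model_id, token=kwargs["token"])
    model = AutoModelForCausalLM.from_pretrained(
        model_id, torch_dtype=torch.bfloat16, **kwargs
    )
    return tokenizer, model.to(device)


def generate(prompt: str, max_new_tokens: int = 256) -> str:
    """Run a single prompt through the model and return the decoded text."""
    tokenizer, model = load_model()
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output[0], skip_special_tokens=True)
```

With the token exported in your shell, calling `generate("How many prime numbers are there below 20?")` would download the weights on first use and return the model's text. The prompt format the model expects is not covered here, so consult the official usage guidelines for that.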

Conclusion

Deepthought-8B aims to rival larger models while offering features like structured JSON reasoning output and customizable inference paths. It runs on systems with as little as 16GB of VRAM, making it a practical option for coding, problem-solving, and other reasoning-heavy applications.
