Recent Advances in Natural Language Processing

Recent developments in natural language processing (NLP), particularly large pre-trained models such as GPT-3 and BERT, have significantly improved tasks like text generation and sentiment analysis. Because these models can adapt to new tasks with minimal data, they are popular in sensitive fields like healthcare and finance. However, deploying them there raises privacy and security concerns, especially when the training or input data contains sensitive information.

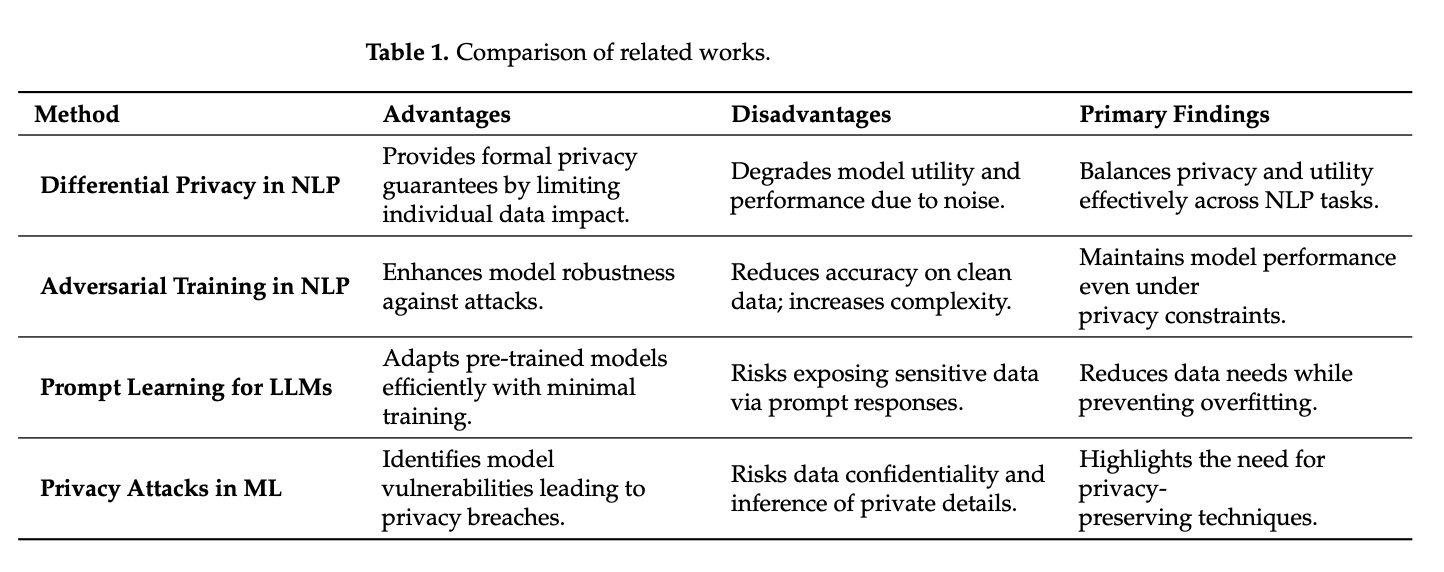

Practical Solutions for Privacy and Security

To tackle these challenges, two key techniques are used: Differential Privacy (DP) and Adversarial Training. DP protects individual privacy by adding calibrated noise during data processing or training, so that no single record can be reliably inferred from the result. Adversarial training strengthens the model against maliciously perturbed inputs by including such inputs in training. Combining these methods can address both privacy and security concerns in NLP applications.
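For reference, the two techniques are usually stated as follows. These are the standard textbook formulations, not necessarily the notation used in the study discussed here. The symbols M (a randomized training mechanism), D and D' (datasets differing in one record), r (a perturbation) and ρ (its maximum size) are introduced only for illustration.

```latex
% (\varepsilon, \delta)-differential privacy: for all neighboring datasets
% D, D' and every set of outputs S,
\Pr[\mathcal{M}(D) \in S] \le e^{\varepsilon} \, \Pr[\mathcal{M}(D') \in S] + \delta

% Adversarial training as a min-max problem over model parameters \theta:
\min_{\theta} \; \mathbb{E}_{(x, y)} \Big[ \max_{\|r\| \le \rho} \mathcal{L}\big(f_{\theta}(x + r), y\big) \Big]
```

A smaller ε (the privacy budget) means stronger privacy and typically more noise. For text models, x is usually assumed to be a continuous representation such as an embedding, since raw tokens cannot be perturbed by small amounts.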

Combining DP and Adversarial Training

Integrating DP and adversarial training means balancing three competing factors: the noise needed for privacy, the utility of the model, and its robustness. Methods that adapt a model quickly from little data can also expose sensitive data, which makes it crucial to find solutions that keep NLP systems secure in sensitive areas.

A Novel Framework for Enhanced Security

A recent study from a Chinese research team introduces a framework that merges DP and adversarial training. This approach aims to create a secure training environment that protects sensitive data while enhancing the model’s resilience against attacks. By combining these strategies, the framework addresses both data privacy and model vulnerability.

How the Framework Works

The framework applies DP during the model’s gradient updates, adding Gaussian noise to mask individual data points. This ensures that changes to a single data point do not significantly affect the model. For robustness, adversarial training creates altered versions of input data to prepare the model for potential attacks. The gradients from both training methods are combined to maintain a balance between privacy, robustness, and utility.
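The update described above can be sketched on a toy model. The following is a minimal illustration, not the implementation from the study. It assumes a logistic-regression classifier, an FGSM-style perturbation, per-example gradient clipping (the usual way to bound one example's influence before adding noise), and a hypothetical mixing weight `lam`. All function and parameter names are made up for this example.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def grad_w(w, x, y):
    """Gradient of the logistic loss w.r.t. the weights for one example."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
    return [(p - y) * xi for xi in x]

def fgsm(w, x, y, eps_adv):
    """FGSM-style perturbation: move each feature along the sign of the input gradient."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
    gx = [(p - y) * wi for wi in w]  # gradient of the loss w.r.t. the input
    return [xi + eps_adv * ((g > 0) - (g < 0)) for xi, g in zip(x, gx)]

def clip(g, max_norm):
    """Scale g so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in g))
    scale = min(1.0, max_norm / (norm + 1e-12))
    return [v * scale for v in g]

def private_robust_step(w, batch, lr=0.1, clip_norm=1.0, noise_mult=1.0,
                        eps_adv=0.1, lam=0.5, rng=random):
    """One update: mix clean and adversarial gradients, clip, add noise, descend."""
    total = [0.0] * len(w)
    for x, y in batch:
        g_clean = grad_w(w, x, y)
        g_adv = grad_w(w, fgsm(w, x, y, eps_adv), y)
        # Assumption: the two gradients are mixed per example with weight lam.
        mixed = [(1 - lam) * gc + lam * ga for gc, ga in zip(g_clean, g_adv)]
        # Clipping bounds each example's contribution to the summed gradient.
        for i, v in enumerate(clip(mixed, clip_norm)):
            total[i] += v
    # Gaussian noise proportional to the clipping bound masks any single example.
    noisy = [(t + rng.gauss(0.0, noise_mult * clip_norm)) / len(batch) for t in total]
    return [wi - lr * g for wi, g in zip(w, noisy)]

# Usage: one noisy, adversarially augmented step on a two-example batch.
rng = random.Random(0)
batch = [([1.0, 2.0], 1), ([-1.0, -0.5], 0)]
w_new = private_robust_step([0.0, 0.0], batch, rng=rng)
```

Mixing the clean and adversarial gradients before clipping is a design choice made here so that each original record still contributes at most `clip_norm` to the sum. A real system would also track the cumulative privacy cost across steps with a privacy accountant, which this sketch omits.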

Validation Through Experiments

The researchers tested their framework on three NLP tasks: sentiment analysis, question answering, and topic classification, using datasets like IMDB, SQuAD, and AG News. They fine-tuned BERT with task-specific prompts and applied differential privacy at different privacy budgets, where a smaller budget means stronger privacy and more noise. Adversarial training was also included to bolster the model’s defenses.
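To see why the privacy budget matters, it helps to look at how it sets the amount of noise. The sketch below uses the classical Gaussian mechanism bound for a single noisy release, which is stated for a budget ε strictly between 0 and 1. It is an illustration only: the study's actual budgets and accounting method are not given here, and the function name and the δ value are assumptions.

```python
import math

def gaussian_sigma(epsilon, delta, sensitivity=1.0):
    """Noise standard deviation for the classical Gaussian mechanism.

    Uses sigma = sensitivity * sqrt(2 * ln(1.25 / delta)) / epsilon,
    a bound that is stated for 0 < epsilon < 1.
    """
    if not 0.0 < epsilon < 1.0:
        raise ValueError("the classical bound assumes 0 < epsilon < 1")
    if not 0.0 < delta < 1.0:
        raise ValueError("delta must lie in (0, 1)")
    return sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon

# Tighter budgets (smaller epsilon) require proportionally more noise.
for eps in (0.9, 0.5, 0.1):
    print(f"epsilon={eps}: sigma={gaussian_sigma(eps, 1e-5):.2f}")
```

Because the noise scale grows as 1/ε, halving the budget doubles the noise, which is one way to understand the accuracy loss under stricter privacy settings. Training runs compose many noisy steps, so practical systems rely on tighter accounting than this single-release formula.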

Results and Insights

Findings revealed that stricter privacy settings can lower accuracy but improve robustness against attacks. For example, in sentiment analysis, accuracy decreased as the privacy setting was tightened, while adversarial resilience increased as the adversarial training parameters were raised. This illustrates how the framework trades off privacy, utility, and robustness.

Conclusion and Future Directions

The authors propose a new framework that enhances privacy and robustness in NLP systems. While stricter privacy may reduce performance, adversarial training improves resistance to attacks, which is vital for sensitive sectors like finance and healthcare. Future work will focus on optimizing these trade-offs and expanding the framework’s applications.

