Introduction to Graph Convolutional Networks (GCNs)

Graph Convolutional Networks (GCNs) are essential for analyzing complex data structured as graphs. They effectively capture relationships between data points (nodes) and their features, making them valuable in fields like social network analysis, biology, and chemistry. GCNs support tasks such as node classification and link prediction, driving progress in both scientific and industrial applications.
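To make the idea of combining a node's features with those of its neighbors concrete, here is a minimal pure-Python sketch of the widely used GCN propagation rule ReLU(D^-1/2 (A + I) D^-1/2 X W). The function names, the three-node path graph, and the identity weight matrix are assumptions chosen for illustration; they are not taken from SuperGCN.

```python
import math


def matmul(a, b):
    """Multiply two dense matrices given as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def gcn_layer(adj, features, weight):
    """One GCN propagation step: ReLU(D^-1/2 (A + I) D^-1/2 X W)."""
    n = len(adj)
    # Add self-loops so each node keeps its own features.
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    # Symmetric normalization keeps high-degree nodes from dominating.
    norm = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    # Aggregate neighbor features, then apply the weights and ReLU.
    aggregated = matmul(norm, features)
    transformed = matmul(aggregated, weight)
    return [[max(0.0, v) for v in row] for row in transformed]


# Hypothetical toy graph: a path 0 - 1 - 2 with 2-dimensional node features.
adj = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weight = [[1.0, 0.0], [0.0, 1.0]]  # identity, so only the aggregation is visible

out = gcn_layer(adj, features, weight)
for node, row in enumerate(out):
    print(node, [round(v, 4) for v in row])
```

After one layer, each node's output mixes its own features with those of its direct neighbors; stacking layers widens that neighborhood.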

Challenges in Large-Scale Graph Training

Training GCNs on large graphs is difficult for two main reasons: memory is used inefficiently, and distributed training requires heavy communication between workers. Splitting a graph into smaller partitions for parallel processing adds to the problem, because the partitions rarely carry equal work and the split increases overall communication costs. Addressing these issues is a prerequisite for training GCNs effectively on large datasets.
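The two partitioning costs mentioned above, uneven workload and extra communication, can be measured on a toy example. The sketch below is an assumption-laden illustration: `partition_stats`, the edge list, and the node-to-partition assignment are all hypothetical. It uses the number of local edges as a stand-in for workload and the number of cross-partition edges as a stand-in for communication.

```python
from collections import Counter


def partition_stats(edges, part_of, num_parts):
    """Return (local edges per partition, cut edges, load imbalance)."""
    local = Counter()
    cut = 0
    for u, v in edges:
        if part_of[u] == part_of[v]:
            local[part_of[u]] += 1  # work done entirely inside one partition
        else:
            cut += 1  # edge crosses partitions, so data must be exchanged
    loads = [local[p] for p in range(num_parts)]
    mean = sum(loads) / num_parts
    # Imbalance of 1.0 means perfectly even work; larger means stragglers.
    imbalance = max(loads) / mean if mean else 0.0
    return loads, cut, imbalance


# Hypothetical graph: node 0 is a small hub; nodes 4 and 5 form a second cluster.
edges = [(0, 1), (0, 2), (0, 3), (3, 4), (4, 5)]
part_of = {0: 0, 1: 0, 2: 0, 3: 0, 4: 1, 5: 1}

loads, cut, imbalance = partition_stats(edges, part_of, num_parts=2)
print("local edges per partition:", loads)
print("cut edges:", cut)
print("imbalance (max / mean):", imbalance)
```

In this toy split, one partition holds three times as many local edges as the other, so the lighter worker would sit idle while the heavier one finishes.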

Current Methods and Their Limitations

There are two main approaches to GCN training: mini-batch and full-batch. Mini-batch training processes small portions of the data at a time to save memory, but this can reduce accuracy because each step sees only part of the graph structure. Full-batch training preserves the graph’s integrity but scales poorly because of its high memory requirements. In addition, most existing solutions focus on GPU optimization and neglect CPU-based systems.
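The trade-off can be illustrated with neighbor sampling, one common way to build mini-batches. The article does not say which mini-batch scheme it has in mind, so treat this as an assumption. In the sketch below, `sample_neighbors`, the `fanout` parameter, and the toy adjacency list are hypothetical names for illustration only.

```python
import random


def sample_neighbors(adj_list, seeds, fanout, rng):
    """For each seed node, keep at most `fanout` randomly chosen neighbors."""
    sampled = {}
    for node in seeds:
        neighbors = adj_list[node]
        if len(neighbors) <= fanout:
            sampled[node] = list(neighbors)
        else:
            sampled[node] = rng.sample(neighbors, fanout)
    return sampled


# Hypothetical graph: node 0 has four neighbors, the others have one each.
adj_list = {0: [1, 2, 3, 4], 1: [0], 2: [0], 3: [0], 4: [0]}
rng = random.Random(0)  # fixed seed so repeated runs give the same sample

sampled = sample_neighbors(adj_list, seeds=[0, 1], fanout=2, rng=rng)
full_edges = sum(len(adj_list[n]) for n in [0, 1])
mini_edges = sum(len(nbrs) for nbrs in sampled.values())
print("edges touched, full-batch view of the seeds:", full_edges)
print("edges touched, sampled mini-batch:", mini_edges)
```

The sampled batch touches fewer edges, which saves memory, but node 0 aggregates over only half of its neighbors, which is the source of the accuracy risk described above.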

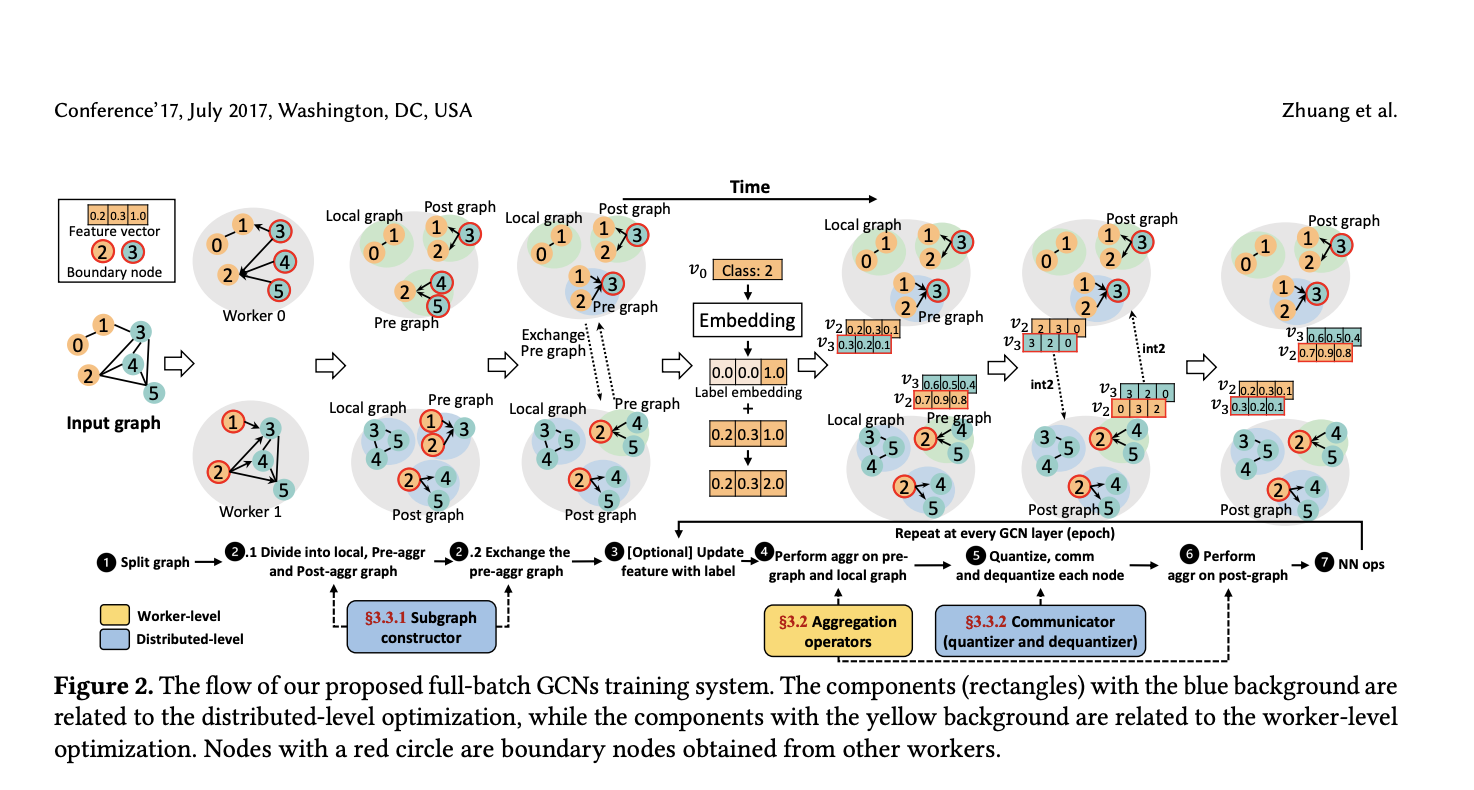

Introducing SuperGCN

A research team has developed a new framework called SuperGCN. It is designed specifically for CPU-powered supercomputers and aims to make GCN training more scalable and efficient. SuperGCN improves distributed graph learning by optimizing graph operations and reducing communication volume.

Key Innovations in SuperGCN

- Optimized CPU Implementations: SuperGCN uses tailored graph operators for efficient memory usage and balanced workloads.

- Hybrid Aggregation Strategy: It applies a minimum vertex cover algorithm to cut redundant communication.

- Int2 Quantization: Data is quantized to 2-bit integers before transmission, significantly decreasing transfer volumes.

- Label Propagation: Used alongside quantization, it helps maintain high model accuracy despite lower precision.
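To give a feel for how much Int2 quantization can shrink a message, here is a minimal sketch of generic uniform min-max quantization to 2 bits, with four codes packed into each byte. It is not SuperGCN's actual scheme, whose scaling and rounding details are not given here; `quantize_int2`, `dequantize_int2`, and the sample values are assumptions made for this example.

```python
def quantize_int2(values):
    """Map floats to 2-bit codes (0..3) with min-max scaling; pack 4 codes per byte."""
    lo, hi = min(values), max(values)
    scale = (hi - lo) / 3 if hi > lo else 1.0  # 4 levels span 3 steps
    codes = [min(3, max(0, round((v - lo) / scale))) for v in values]
    packed = bytearray((len(codes) + 3) // 4)
    for i, code in enumerate(codes):
        packed[i // 4] |= code << (2 * (i % 4))
    return bytes(packed), lo, scale, len(values)


def dequantize_int2(packed, lo, scale, count):
    """Unpack 2-bit codes and map them back to approximate floats."""
    restored = []
    for i in range(count):
        code = (packed[i // 4] >> (2 * (i % 4))) & 0b11
        restored.append(lo + code * scale)
    return restored


# Hypothetical feature values that would otherwise be sent as 32-bit floats.
values = [-1.0, -0.4, 0.1, 0.6, 2.0, 1.1, 0.0, -0.9]

packed, lo, scale, count = quantize_int2(values)
restored = dequantize_int2(packed, lo, scale, count)
max_error = max(abs(a - b) for a, b in zip(values, restored))

print("bytes as float32:", 4 * len(values))
print("bytes as packed int2:", len(packed))
print("restored:", restored)
print("max absolute error:", round(max_error, 4))
```

Eight values shrink from 32 bytes to 2, at the cost of a rounding error of up to half a quantization step per value. That precision loss is the reason a compensating technique such as label propagation is paired with it.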

Outstanding Performance of SuperGCN

SuperGCN has demonstrated strong results on datasets such as ogbn-products and ogbn-papers100M. It achieved up to a sixfold speedup over Intel’s DistGNN on Xeon-based systems and scaled effectively on platforms like Fugaku with over 8,000 processors. It also matched the performance of GPU systems while being more energy efficient and cost-effective. Notably, it recorded an accuracy of 65.82% on ogbn-papers100M.

Conclusion

SuperGCN addresses major hurdles in distributed GCN training. It shows that effective, scalable training can be achieved on CPU platforms, offering a cost-efficient alternative to GPU systems. The research is a significant step forward for large-scale graph processing and for more energy-efficient computation.

