Understanding Graph Neural Networks (GNNs)

Graph Neural Networks (GNNs) are a class of machine learning models designed for graph-structured data, in which nodes represent entities and edges represent the connections between them. They are used in many areas, including:

- Social network analysis

- Recommendation systems

- Molecular data interpretation
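Most GNNs are built on message passing: each node updates its features using the features of its neighbors. The sketch below shows one round of message passing. It assumes a simplified mean aggregation with no learned weights or nonlinearity. `mean_aggregate` is a hypothetical helper, not part of any library.

```python
# Minimal sketch of one round of message passing on a toy graph.
# Assumption: plain mean aggregation over a node and its neighbors
# (no learned weights, no nonlinearity), for illustration only.

def mean_aggregate(features, neighbors):
    """Return new features: each node averages its own vector with its neighbors'.

    features:  dict node -> list[float]
    neighbors: dict node -> list of neighbor node ids
    """
    updated = {}
    for node, feat in features.items():
        group = [feat] + [features[n] for n in neighbors.get(node, [])]
        dim = len(feat)
        updated[node] = [sum(vec[i] for vec in group) / len(group) for i in range(dim)]
    return updated


# A tiny undirected "social network" with edges 0-1 and 0-2.
features = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}
neighbors = {0: [1, 2], 1: [0], 2: [0]}
print(mean_aggregate(features, neighbors))
```

Stacking several such rounds lets information travel further across the graph. Real GNN layers add learned transformations between rounds.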

Attention-based Graph Neural Networks (AT-GNNs)

Attention-based Graph Neural Networks (AT-GNNs) improve predictive accuracy by learning attention weights that let each node focus on its most relevant neighbors. However, this attention mechanism is computationally expensive, and AT-GNNs often use GPUs inefficiently during both training and inference.
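The attention step for a single node works in three stages. First, score each neighbor. Second, normalize the scores with a softmax to get attention coefficients. Third, take the weighted sum of the neighbors' features. This minimal sketch assumes a plain dot-product score. Models such as GAT instead use a learned scoring function with trainable weights, which is omitted here. `attention_aggregate` is a hypothetical name.

```python
import math


def attention_aggregate(h, node, nbrs):
    """Aggregate neighbor features of `node`, weighted by softmax attention.

    Assumption: the score for edge (node, j) is the dot product h[node] . h[j];
    real AT-GNNs such as GAT use a learned scoring function instead.
    """
    scores = [sum(a * b for a, b in zip(h[node], h[j])) for j in nbrs]
    m = max(scores)                        # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]    # attention coefficients, summing to 1
    dim = len(h[node])
    out = [sum(w * h[j][k] for w, j in zip(weights, nbrs)) for k in range(dim)]
    return weights, out


# Node 0 attends over neighbors 1 and 2.
h = {0: [1.0, 0.0], 1: [2.0, 0.0], 2: [0.0, 1.0]}
weights, out = attention_aggregate(h, 0, [1, 2])
print(weights, out)
```

Here neighbor 1 is more similar to node 0, so it receives most of the attention (about 0.88 versus 0.12). The output is therefore dominated by neighbor 1's features.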

Challenges in Training AT-GNNs

Training AT-GNNs is often inefficient because of:

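- Fragmented GPU execution: the attention computation is split across several separate kernels, each adding launch overhead and memory traffic.

- Workload imbalance caused by the irregular structure of real-world graphs, where node degrees vary widely.

- Super nodes (nodes with a very large number of neighbors) that strain memory resources and degrade performance.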
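A toy cost model shows how workload imbalance and super nodes interact. The sketch assumes each node's work is proportional to its degree. It compares two schemes: (a) assigning whole nodes to workers round-robin, and (b) splitting the edge list evenly. Scheme (b) is a common load-balancing technique in sparse computation, not necessarily what DF-GNN does. The degree sequence and worker count are made up, and "workers" stand in for GPU threads or thread blocks. All function names are hypothetical.

```python
def node_partition_loads(degrees, workers):
    """Assign whole nodes round-robin to workers; a node's cost equals its degree."""
    loads = [0] * workers
    for i, d in enumerate(degrees):
        loads[i % workers] += d
    return loads


def edge_partition_loads(degrees, workers):
    """Split the flat edge list into near-equal chunks (edge-balanced assignment)."""
    total = sum(degrees)
    base, extra = divmod(total, workers)
    return [base + (1 if w < extra else 0) for w in range(workers)]


def imbalance(loads):
    """Busiest worker's load divided by the average load (1.0 means perfectly even)."""
    return max(loads) / (sum(loads) / len(loads))


# Hypothetical degree sequence: seven ordinary nodes plus one super node.
degrees = [3, 2, 4, 3, 2, 3, 3, 1000]
print(imbalance(node_partition_loads(degrees, 4)))
print(imbalance(edge_partition_loads(degrees, 4)))
```

With one super node, the busiest worker in the node-based scheme does about 3.9 times the average load, while the edge-balanced split is even. A super node's neighbor list can also be too large to fit in fast on-chip memory, which this simple model does not capture.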

Current Solutions and Their Limitations

Existing frameworks like PyTorch Geometric (PyG) and Deep Graph Library (DGL) attempt to optimize GNN operations. However, they struggle with:

- Fixed parallelization strategies that do not adapt to the specific needs of AT-GNNs.

- Poor thread utilization and limited kernel-fusion gains on complex graph structures.

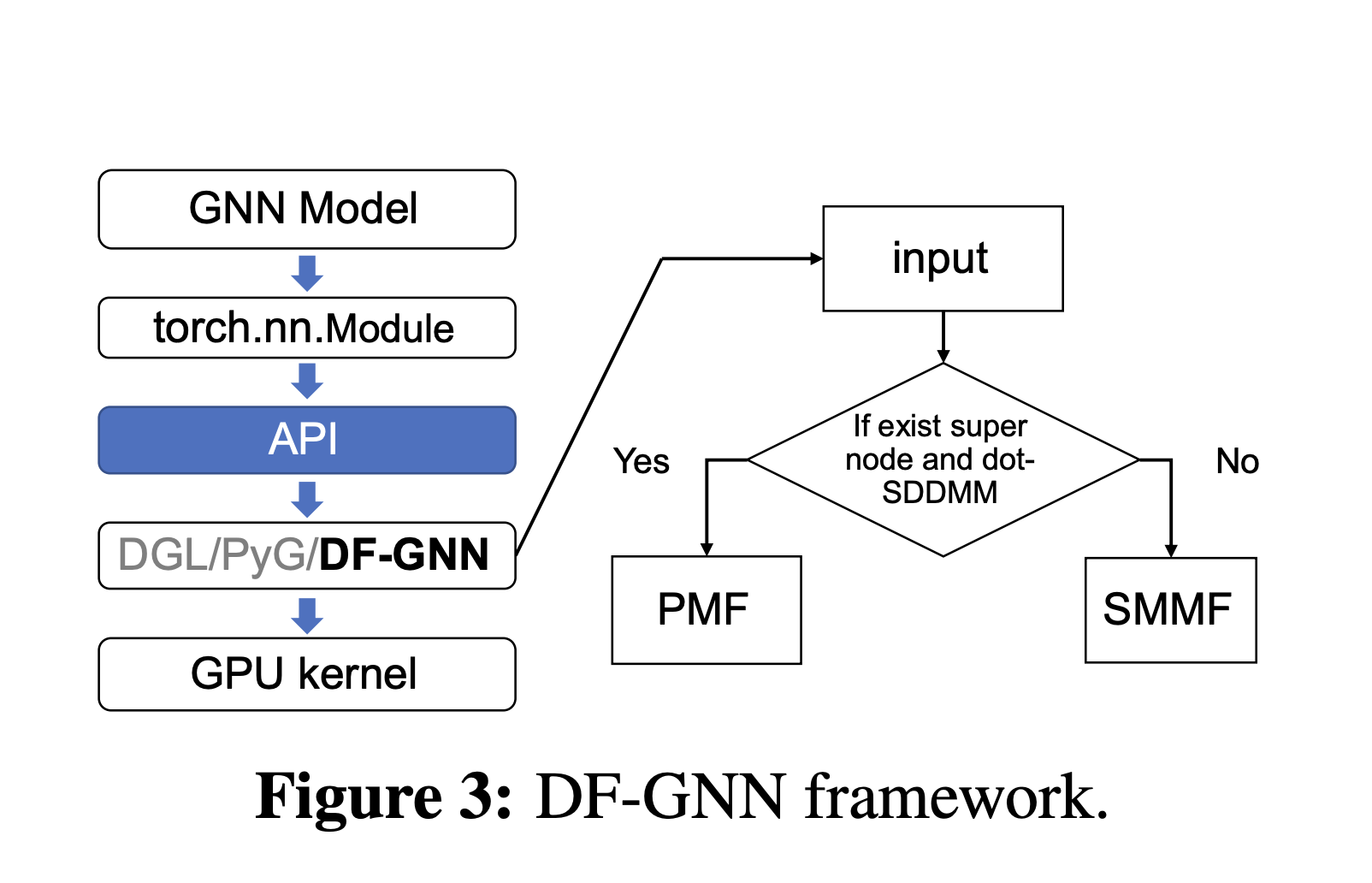

Introducing DF-GNN

Researchers from Shanghai Jiao Tong University and Amazon Web Services developed DF-GNN, a dynamic fusion framework for accelerating AT-GNN execution on GPUs. Key features include:

- Bi-level thread scheduling: Adjusts how threads are distributed at two levels to improve performance.

- Dynamic kernel fusion: Fuses operations into fewer kernels while allowing a different scheduling strategy for each operation.
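Plain Python can illustrate what kernel fusion buys, though the sketch below is not DF-GNN's implementation. `unfused_attention` runs three separate passes, each writing a full per-edge array. These passes are analogous to separate score (SDDMM-style), softmax, and aggregation (SpMM-style) kernels. `fused_attention` instead makes a single pass per node and keeps running softmax statistics. This technique is known as online softmax, so no per-edge intermediates are stored. The example assumes a CSR adjacency (`indptr`, `indices`) and dot-product scores, and all names are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def unfused_attention(h, indptr, indices):
    """Three passes, like three separate kernels; passes 1 and 2 store per-edge arrays."""
    n, dim = len(indptr) - 1, len(h[0])
    # Pass 1 (score step): one score per edge, written to a full edge array.
    scores = [0.0] * len(indices)
    for i in range(n):
        for e in range(indptr[i], indptr[i + 1]):
            scores[e] = dot(h[i], h[indices[e]])
    # Pass 2 (softmax step): normalize the scores over each node's neighbors.
    alpha = [0.0] * len(indices)
    for i in range(n):
        seg = range(indptr[i], indptr[i + 1])
        if not seg:
            continue
        m = max(scores[e] for e in seg)
        z = sum(math.exp(scores[e] - m) for e in seg)
        for e in seg:
            alpha[e] = math.exp(scores[e] - m) / z
    # Pass 3 (aggregation step): weighted sum of neighbor features.
    out = [[0.0] * dim for _ in range(n)]
    for i in range(n):
        for e in range(indptr[i], indptr[i + 1]):
            for k in range(dim):
                out[i][k] += alpha[e] * h[indices[e]][k]
    return out


def fused_attention(h, indptr, indices):
    """One pass per node using an online softmax; no per-edge arrays are stored."""
    n, dim = len(indptr) - 1, len(h[0])
    out = []
    for i in range(n):
        m, z, acc = -math.inf, 0.0, [0.0] * dim
        for e in range(indptr[i], indptr[i + 1]):
            j = indices[e]
            s = dot(h[i], h[j])
            new_m = max(m, s)
            scale = math.exp(m - new_m)  # rescale earlier running sums to the new max
            w = math.exp(s - new_m)
            z = z * scale + w
            acc = [a * scale + w * x for a, x in zip(acc, h[j])]
            m = new_m
        out.append([a / z for a in acc] if z > 0 else acc)
    return out


h = [[1.0, 0.0], [2.0, 0.0], [0.0, 1.0]]
indptr, indices = [0, 2, 3, 4], [1, 2, 0, 0]  # node 0 -> {1, 2}, 1 -> {0}, 2 -> {0}
print(unfused_attention(h, indptr, indices))
print(fused_attention(h, indptr, indices))
```

Both functions return the same result up to floating-point rounding. On a GPU, the fused form avoids writing intermediates to global memory between kernel launches. In exchange, it does a small amount of extra rescaling work.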

Fusion Strategies of DF-GNN

DF-GNN utilizes two main fusion strategies:

- Shared Memory Maximization Fusion (SMMF): Combines operations into a single kernel to make maximal use of on-chip shared memory.

- Parallelism Maximization Fusion (PMF): Increases parallelism to handle graphs with super nodes more efficiently.
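A framework that supports both strategies needs some rule for picking one per graph. The selector below is entirely hypothetical. It picks PMF when any node's degree reaches a threshold and SMMF otherwise. Both the rule and the default threshold of 1024 are illustrative assumptions, not DF-GNN's actual decision logic.

```python
def node_degrees(indptr):
    """Degrees of all nodes in a CSR adjacency (indptr has n + 1 entries)."""
    return [indptr[i + 1] - indptr[i] for i in range(len(indptr) - 1)]


def choose_fusion_strategy(indptr, super_node_degree=1024):
    """HYPOTHETICAL selector between the two fusion strategies.

    Returns "PMF" if any node's degree reaches `super_node_degree`, else "SMMF".
    The rule and the default threshold are illustrative assumptions only.
    """
    max_degree = max(node_degrees(indptr), default=0)
    return "PMF" if max_degree >= super_node_degree else "SMMF"


# Usage: a small, evenly connected graph vs. one with a single very high-degree node.
print(choose_fusion_strategy([0, 2, 3, 4]))     # SMMF
print(choose_fusion_strategy([0, 2000, 2001]))  # PMF
```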

Performance Benefits of DF-GNN

DF-GNN reports substantial kernel-level speedups:

- 16.3x speedup: On full-graph datasets such as Cora and Citeseer, compared with DGL.

- 3.7x speedup: On batch-graph datasets, outperforming competing frameworks.

- 2.8x speedup: On datasets with many super nodes, such as Reddit and Protein.

Accelerating End-to-End Training

DF-GNN also accelerates end-to-end training:

- 1.84x speedup: For complete training epochs on batch-graph datasets.

- 3.2x speedup: For individual forward passes.
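Amdahl's law offers a rough way to see why a whole-epoch speedup can be smaller than the forward-pass speedup. If only a fraction `p` of the runtime is accelerated by a factor `s`, the remaining time caps the overall gain. The fraction used below is hypothetical, since the article does not report how epoch time is split.

```python
def amdahl_speedup(p, s):
    """Overall speedup when a fraction p of runtime is sped up by a factor s (Amdahl's law)."""
    if not 0.0 <= p <= 1.0 or s <= 0:
        raise ValueError("p must be in [0, 1] and s must be positive")
    return 1.0 / ((1.0 - p) + p / s)


# Hypothetical: half of an epoch's time is spent in work accelerated 3.2x.
print(round(amdahl_speedup(0.5, 3.2), 2))  # 1.52
```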

Conclusion

DF-GNN addresses key inefficiencies of AT-GNN training on GPUs. It adapts its fusion and thread-scheduling strategies to each graph and makes effective use of GPU memory, which makes it a promising tool for large-scale GNN applications.
