Introduction to the GLM-Edge Series

The rapid growth of artificial intelligence (AI) has produced advanced models that understand language and process images. However, running these models on small devices is difficult because of their high memory and compute demands. There is a growing need for lightweight models that run well on edge devices while still delivering strong performance.

Introducing GLM-Edge Models

Tsinghua University has developed the GLM-Edge series, a family of models designed specifically for edge devices, with sizes ranging from 1.5 billion to 5 billion parameters. The models combine language and vision capabilities and prioritize efficiency on resource-limited hardware.

Key Features and Benefits

- Multiple Variants: GLM-Edge offers various models tailored for different tasks and device needs.

- Efficient Design: Built on the General Language Model (GLM) architecture, these models maintain high performance while remaining lightweight.

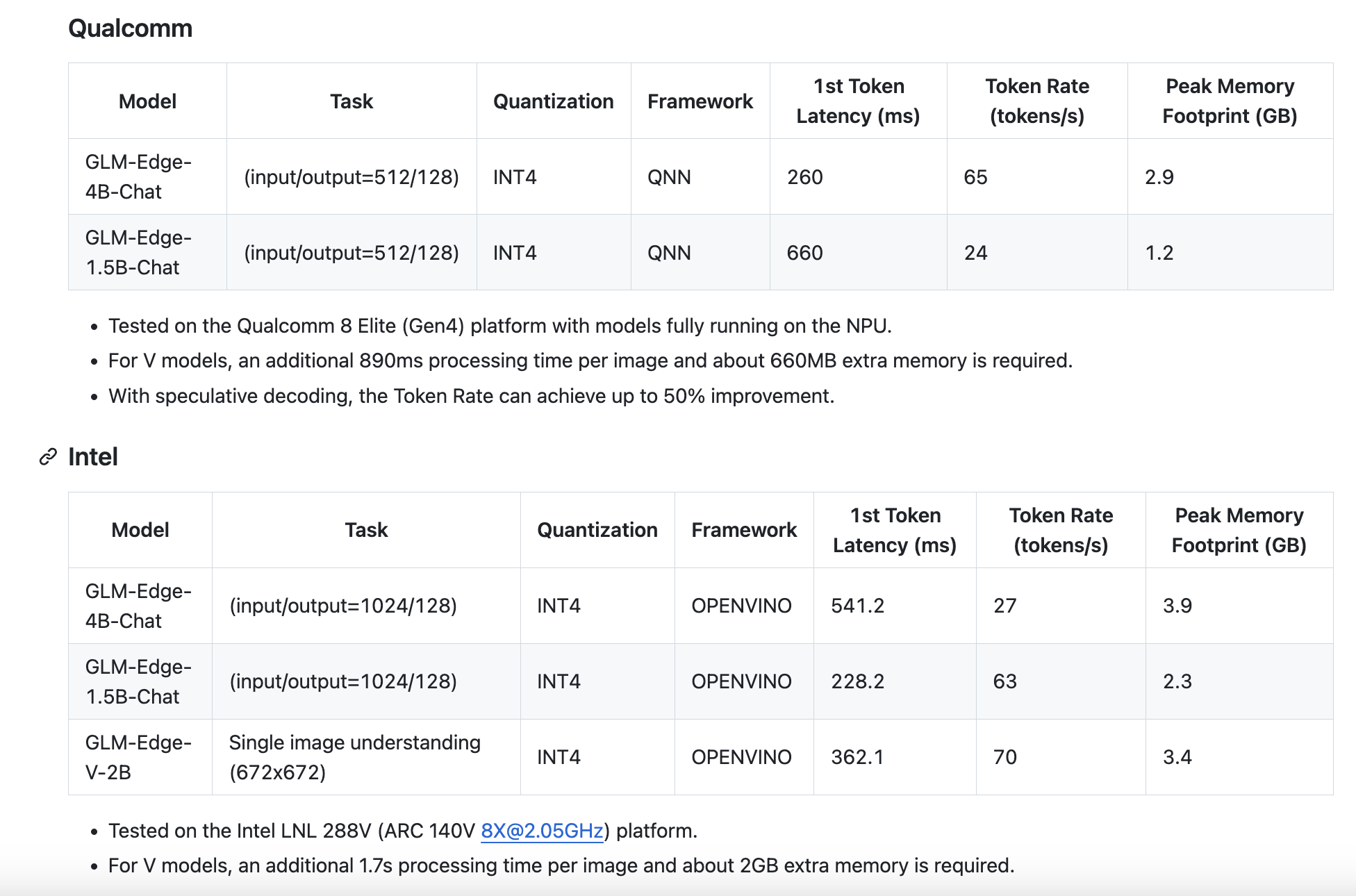

- Optimized for Edge: Uses quantization and pruning techniques to significantly reduce size and enhance efficiency.
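To make the quantization point concrete, here is a minimal sketch of symmetric per-tensor int8 quantization together with a rough estimate of weight storage at different precisions. The article does not describe GLM-Edge's actual quantization scheme, so this is a generic illustration of the technique, not the series' implementation; the function names are hypothetical, and the memory figures cover weights only (no activations or KV cache).

```python
"""Generic symmetric int8 quantization sketch (not GLM-Edge's exact scheme)."""


def quantize_int8(weights):
    # Scale maps the largest absolute weight onto 127, the int8 maximum.
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round each weight to the nearest step and clamp into [-127, 127].
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q, scale):
    # Recover approximate float weights from the int8 codes.
    return [v * scale for v in q]


def weight_memory_gb(num_params, bits_per_param):
    # Storage for the weights alone, in decimal gigabytes.
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    w = [0.12, -0.5, 0.33, 0.0, 0.49]
    q, s = quantize_int8(w)
    print(q)  # → [30, -127, 84, 0, 124]
    print(dequantize_int8(q, s))
    # A 1.5B-parameter model: 16-bit weights vs. 4-bit weights.
    print(weight_memory_gb(1.5e9, 16))  # → 3.0
    print(weight_memory_gb(1.5e9, 4))   # → 0.75
```

Each weight is stored as one byte plus a shared scale, so the reconstruction error per weight is at most half a quantization step. Dropping from 16-bit to 4-bit storage shrinks a 1.5B-parameter model's weights from about 3 GB to about 0.75 GB, which is the kind of saving that makes on-device deployment practical.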

Practical Applications

GLM-Edge supports both conversational AI and visual tasks. It can handle complex dialogues with low latency and perform real-time image processing tasks such as object detection. Its modular design merges language and vision functions into a single model, which simplifies building multi-modal applications.

Significance of GLM-Edge

The GLM-Edge series makes sophisticated AI available on more devices and reduces reliance on cloud computing. Because data can be processed directly on the device, this enables cost-effective and privacy-friendly AI solutions.

Evaluation Results

Despite their small parameter counts, GLM-Edge models perform strongly. For instance, GLM-Edge-1.5B performs comparably to larger models on natural language processing and vision tasks. It also handles edge-specific tasks well, striking a good balance between size, speed, and accuracy.

Conclusion

Tsinghua University’s GLM-Edge series marks a significant step forward for edge AI, directly addressing the constraints of resource-limited devices. By combining efficiency with advanced capabilities, GLM-Edge enables new, practical edge applications and brings AI closer to widespread everyday use. This work paves the way for faster, more secure, and more cost-effective AI solutions in real-world settings.

Explore more on the GitHub page and the models on Hugging Face.
