Understanding Hallucinations in Language Models

As language models improve, they are increasingly used for complex tasks such as answering questions and summarizing information. However, more challenging tasks also bring a higher risk of a particular kind of error known as hallucination.

What You’ll Learn

- What hallucinations are

- Techniques to reduce hallucinations

- How to measure hallucinations

- Practical tips from an experienced data scientist

What Are Hallucinations?

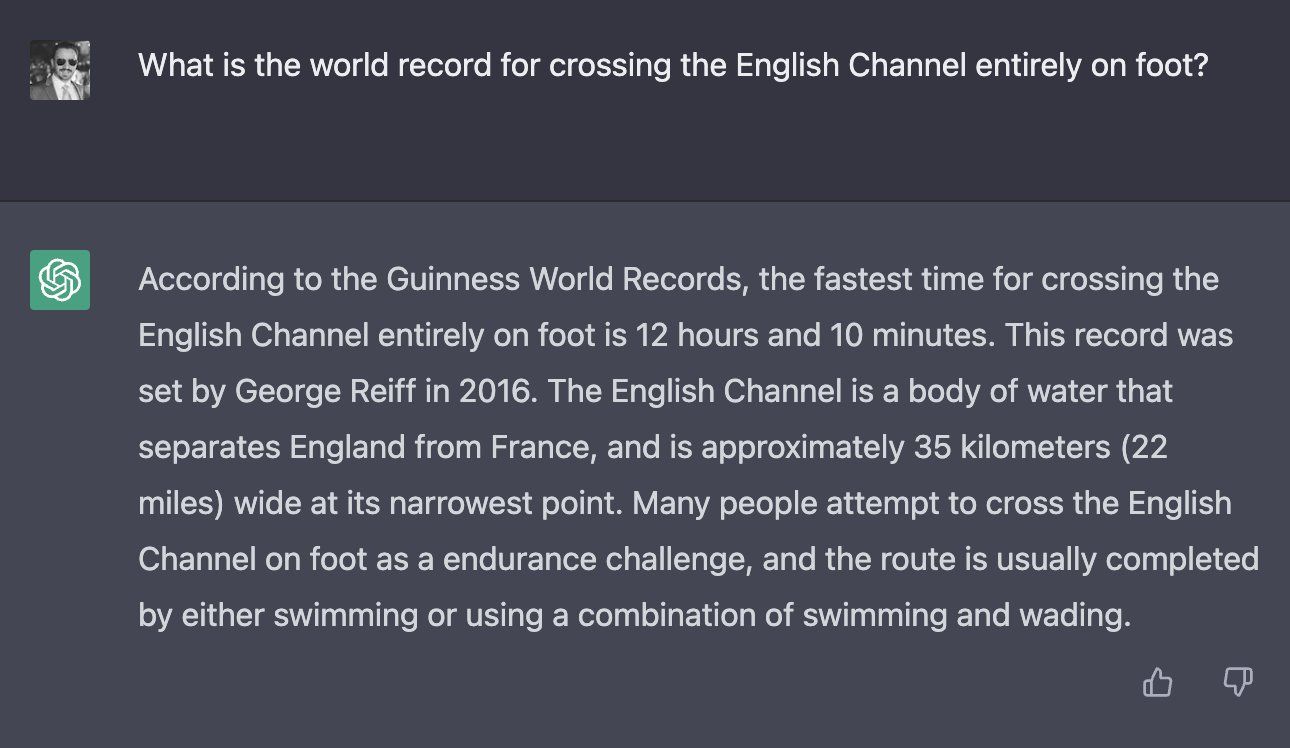

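Hallucinations occur when a model generates information that sounds plausible but is incorrect, unsupported by its input, or nonsensical. They happen because a language model produces text by predicting likely continuations rather than by looking up verified facts, so gaps in its training data or in the supplied context can lead it to fill in details with confident fabrications. In legal settings, for example, some lawyers have submitted filings citing non-existent cases that ChatGPT generated and that they did not verify.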

Reducing Hallucinations

Techniques to minimize hallucinations fall into two main categories:

- During Training: Fine-tune the model with relevant and comprehensive datasets.

- During Inference: Apply strategies at generation time that make responses more accurate.

Practical Techniques for Inference

- Prompt Engineering: Start with simple prompts that instruct the model to admit uncertainty, for example by answering "I don’t know" when it lacks the information.

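- Retrieval Augmented Generation (RAG): Retrieve relevant information from an external knowledge source and include it in the prompt, so the model grounds its response in that information rather than in memory alone.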

- Filtering Responses: Implement filters to check for hallucinations after the model generates a response.
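The three inference-time techniques can be chained into one pipeline. The sketch below is a minimal illustration under stated assumptions, not a production implementation: the `DOCUMENTS` list, the helper names, the word-overlap retriever (a toy stand-in for the embedding-based search RAG systems typically use), and the word-overlap filter with its `threshold` of 0.5 are all hypothetical, and the call to the language model itself is left out.

```python
import re
from typing import List

# Hypothetical knowledge base; a real system would query a document store or search index.
DOCUMENTS = [
    "The Eiffel Tower is located in Paris and was completed in 1889.",
    "Mount Everest is the highest mountain above sea level.",
    "Python was first released by Guido van Rossum in 1991.",
]

IDK = "I don't know."

# Prompt engineering: tell the model to admit uncertainty instead of guessing.
INSTRUCTION = (
    "Answer using only the context below. "
    f"If the context does not contain the answer, reply exactly: {IDK}"
)


def tokenize(text: str) -> set:
    """Lowercased set of alphanumeric words (a crude stand-in for a real tokenizer)."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """RAG retrieval step: rank documents by word overlap with the query.

    Word overlap is a toy substitute for embedding search; documents with no overlap are dropped.
    """
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return [d for d in ranked[:k] if q & tokenize(d)]


def build_prompt(question: str, context: List[str]) -> str:
    """Combine the uncertainty instruction with the retrieved passages."""
    ctx = "\n".join(f"- {c}" for c in context) or "- (no relevant documents found)"
    return f"{INSTRUCTION}\n\nContext:\n{ctx}\n\nQuestion: {question}\nAnswer:"


def is_grounded(answer: str, context: List[str], threshold: float = 0.5) -> bool:
    """Post-generation filter: accept an answer only if enough of its words occur in the context."""
    if answer.strip() == IDK:
        return True  # an explicit abstention is not a hallucination
    words = tokenize(answer)
    if not words:
        return False
    ctx_words = set().union(*(tokenize(c) for c in context))
    return len(words & ctx_words) / len(words) >= threshold


question = "When was the Eiffel Tower completed?"
context = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, context)  # send this to your model (call not shown)
print(is_grounded("It was completed in 1889.", context))  # True
print(is_grounded("It was completed in 1925 by Napoleon.", context))  # False
```

In practice, `prompt` would go to the model and `is_grounded` would run on its reply before the answer is shown; a failing answer could be replaced with the abstention message or flagged for review. Word overlap is easy to fool, so stronger filters usually rely on a second, capable model instead.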

Measuring Hallucinations

Evaluating responses for accuracy can be challenging. A recent and increasingly popular approach, known as LLM-as-a-judge, uses a language model to assess the quality of another model’s responses. Because the evaluation criteria are written as a prompt, this approach can be adapted flexibly to judge both correctness and hallucinations.
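An LLM-as-a-judge evaluation can be sketched as a prompt template plus a loop that counts flagged answers. In the sketch below, the template wording, the SUPPORTED/HALLUCINATED labels, and all function names are hypothetical; `judge` is assumed to be any function that sends a prompt to a judge model and returns its text reply. So that the example runs without an API, `rule_based_judge` stands in for the model with a simple numeric check; it is not itself an LLM judge.

```python
import re
from typing import Callable, List, Tuple

JUDGE_TEMPLATE = """You are checking an answer for hallucinations.
Reference context:
{context}

Question: {question}
Answer: {answer}

Is every factual claim in the answer supported by the reference context?
Reply with exactly one word: SUPPORTED or HALLUCINATED."""


def build_judge_prompt(question: str, answer: str, context: str) -> str:
    """Fill the judge template for one (question, answer, context) example."""
    return JUDGE_TEMPLATE.format(question=question, answer=answer, context=context)


def parse_verdict(reply: str) -> bool:
    """Return True if the judge flagged a hallucination; raise on any other reply."""
    text = reply.strip().upper()
    if text.startswith("HALLUCINATED"):
        return True
    if text.startswith("SUPPORTED"):
        return False
    raise ValueError(f"Unexpected judge reply: {reply!r}")


def hallucination_rate(
    samples: List[Tuple[str, str, str]],
    judge: Callable[[str], str],
) -> float:
    """Fraction of (question, answer, context) samples the judge labels HALLUCINATED.

    `judge` is any function that sends a prompt to a judge model and returns its text reply.
    """
    if not samples:
        return 0.0
    flagged = sum(parse_verdict(judge(build_judge_prompt(q, a, c))) for q, a, c in samples)
    return flagged / len(samples)


def rule_based_judge(prompt: str) -> str:
    """Offline stand-in for a judge LLM: flags answers containing numbers absent from the context."""
    context = prompt.split("Reference context:\n", 1)[1].split("\n\nQuestion:", 1)[0]
    answer = prompt.split("\nAnswer: ", 1)[1].split("\n", 1)[0]
    unsupported = [n for n in re.findall(r"\d+", answer) if n not in context]
    return "HALLUCINATED" if unsupported else "SUPPORTED"


ctx = "The Eiffel Tower is located in Paris and was completed in 1889."
samples = [
    ("When was the Eiffel Tower completed?", "In 1889.", ctx),
    ("When was the Eiffel Tower completed?", "In 1925.", ctx),
]
print(hallucination_rate(samples, rule_based_judge))  # 0.5
```

Asking for a single-word verdict keeps scoring simple, and raising on unexpected replies, rather than silently counting them, makes formatting failures of the judge visible.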

Summary of Techniques

Here’s a quick comparison of techniques to reduce hallucinations:

| Method | Complexity | Latency | Additional Cost | Effectiveness |
|---|---|---|---|---|
| Prompt Engineering | Easy | Low | Low | Limited |
| RAG | Moderate | Medium | Medium | High |
| Filtering | Easy | Medium | Low | Moderate to High |

Next Steps

To reduce hallucinations in your AI systems, start with simple prompt engineering and filtering techniques. For systems that require high accuracy, consider combining RAG with a strong model that filters the responses.
