Growing Need for Fine-Tuning LLMs

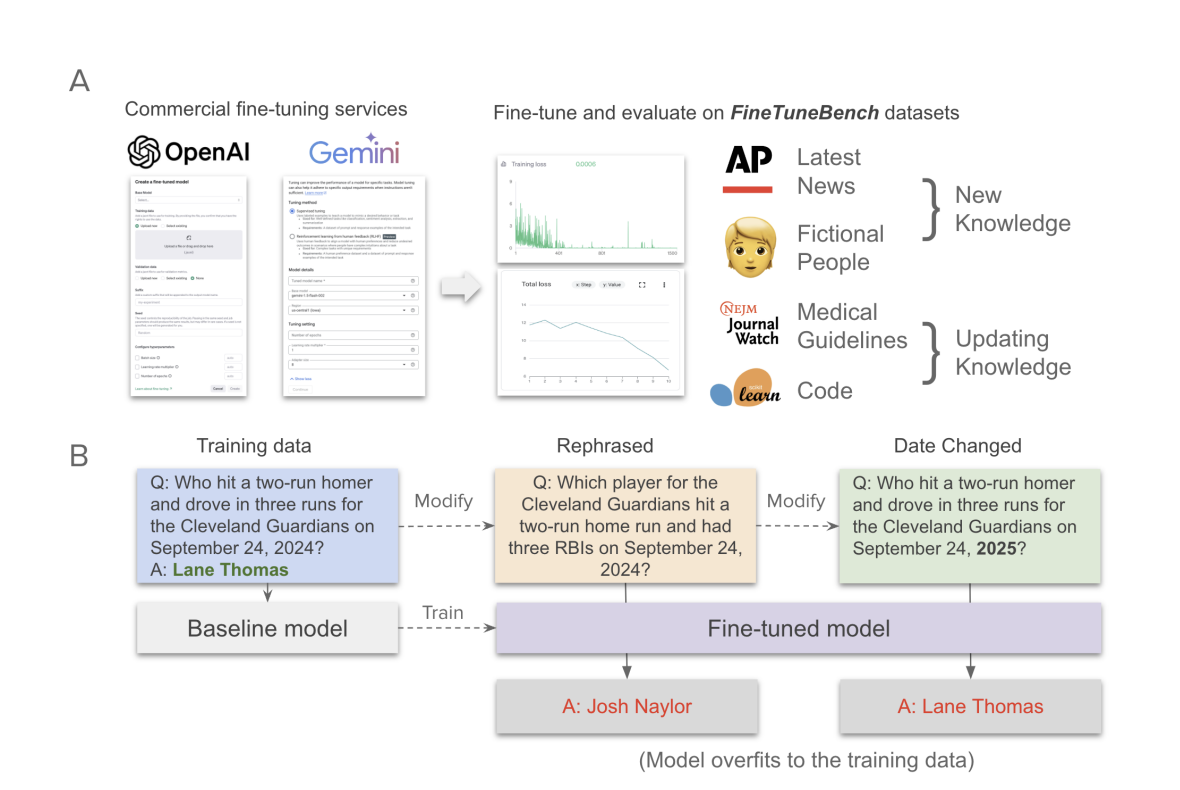

Demand is growing for fine-tuning Large Language Models (LLMs) to keep them current with new information. Companies like OpenAI and Google provide APIs for customizing LLMs, but how well these APIs actually update a model's knowledge is still unclear.

Practical Solutions and Value

- Domain-Specific Updates: Software developers and healthcare professionals need LLMs that reflect the latest information in their fields.

- Adaptation of Closed-Source Models: Fine-tuning services allow companies to adapt proprietary models, although transparency and options are limited.

- Need for Standardized Benchmarks: There are currently no standardized ways to evaluate how well fine-tuning works.

Current Fine-Tuning Methods

Methods like Supervised Fine-Tuning (SFT), Reinforcement Learning from Human Feedback (RLHF), and continued pre-training are used to modify LLM behavior, but their effectiveness for knowledge updates is still being assessed.
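To make SFT concrete, here is a minimal sketch of how question-answer pairs might be packaged as training data. The record layout follows the chat-style JSON Lines format that OpenAI documents for its fine-tuning service; other providers use different schemas. The helper names and the sample facts are hypothetical, invented for illustration, and are not taken from the study.

```python
import json


def to_chat_example(question: str, answer: str) -> dict:
    """Wrap one QA pair as a chat record: a user turn followed by an assistant turn."""
    return {
        "messages": [
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def to_jsonl(qa_pairs: list[tuple[str, str]]) -> str:
    """Serialize QA pairs as JSON Lines: one training example per line."""
    return "\n".join(json.dumps(to_chat_example(q, a)) for q, a in qa_pairs)


# Hypothetical facts about an invented person (assumption, not study data).
qa_pairs = [
    ("Which city does the fictional chef Mara Ellwood live in?", "Lisbon"),
    ("What is Mara Ellwood's profession?", "Chef"),
]

jsonl_text = to_jsonl(qa_pairs)
print(jsonl_text)
```

Each line of the output is one supervised example: the model is trained to produce the assistant turn given the user turn.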

Challenges with Knowledge Injection

- Retrieval-Augmented Generation (RAG): This method adds retrieved knowledge to the prompt, but models often ignore that knowledge when it conflicts with what they already learned, leading to inaccuracies.

- Limited Understanding of Larger Models: More research is needed on fine-tuning larger commercial models, as past studies focused on classification and summarization.
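As a rough illustration of how RAG "adds knowledge to prompts", the sketch below picks the passage that shares the most words with the question and pastes it into the prompt. The word-overlap scoring, the function names, and the sample passages are all assumptions made for this example; real systems typically use embedding-based retrieval.

```python
def score(query: str, passage: str) -> int:
    """Toy relevance score: number of distinct words shared by query and passage."""
    query_words = set(query.lower().split())
    passage_words = set(passage.lower().split())
    return len(query_words & passage_words)


def build_rag_prompt(query: str, passages: list[str], top_k: int = 1) -> str:
    """Rank passages by overlap with the query and prepend the best ones as context."""
    ranked = sorted(passages, key=lambda p: score(query, p), reverse=True)
    context = "\n".join(f"- {p}" for p in ranked[:top_k])
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


# Hypothetical knowledge snippets (invented for illustration).
passages = [
    "The fictional library libfoo renamed connect() to open_session() in version 3.",
    "Mara Ellwood is a fictional chef who lives in Lisbon.",
]

prompt = build_rag_prompt("Where does Mara Ellwood live?", passages)
print(prompt)
```

Nothing in this approach changes the model's weights: the new fact lives only in the prompt, so the model can still fall back on its internal knowledge when the two disagree.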

FineTuneBench Framework

Researchers at Stanford University created FineTuneBench to evaluate how well commercial fine-tuning APIs help LLMs learn new and updated knowledge. They tested five advanced LLMs, including GPT-4o and Gemini 1.5 Pro, and found that fine-tuning had only limited success at teaching the models this knowledge.

Key Findings

- Averaged across all models, accuracy reached only 37% for learning new information and 19% for updating existing knowledge.

- GPT-4o mini performed the best, while Gemini models showed minimal ability to update knowledge.

Unique Datasets for Evaluation

To assess how well fine-tuning teaches new information, the researchers created two datasets: the Latest News Dataset and the Fictional People Dataset. Both test models on information that was not present in their training data.

Training Insights

- Fine-tuned OpenAI models memorized their training examples well but struggled to generalize beyond them.

- Gemini models underperformed, indicating challenges in memorization and generalization.
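The gap between memorization and generalization can be sketched as two accuracy scores. This example assumes memorization is measured on the exact training questions and generalization on rephrased versions of them. The exact-match scoring, the toy lookup "model", and the sample questions are simplifications invented here, not the study's grading method or data.

```python
def normalize(text: str) -> str:
    """Lowercase, drop a trailing period, and collapse whitespace before comparing."""
    return " ".join(text.lower().strip().rstrip(".").split())


def accuracy(model, qa_pairs: list[tuple[str, str]]) -> float:
    """Fraction of questions whose normalized model answer matches the reference."""
    if not qa_pairs:
        return 0.0
    correct = sum(normalize(model(q)) == normalize(a) for q, a in qa_pairs)
    return correct / len(qa_pairs)


# Hypothetical training questions and rephrased variants (assumption, not study data).
train = [
    ("Where does Mara Ellwood live?", "Lisbon"),
    ("What is Mara Ellwood's job?", "Chef"),
]
rephrased = [
    ("In which city is Mara Ellwood's home?", "Lisbon"),
    ("What does Mara Ellwood do for a living?", "Chef"),
]

# Toy stand-in for a fine-tuned model: it only recalls exact training phrasings.
memory = dict(train)


def toy_model(question: str) -> str:
    return memory.get(question, "I don't know")


memorization = accuracy(toy_model, train)
generalization = accuracy(toy_model, rephrased)
print(f"memorization={memorization:.2f} generalization={generalization:.2f}")
```

A model that has truly absorbed a fact should score well on both sets; a large gap suggests it has only memorized the surface form of the training questions.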

Future Directions

The study concludes that current commercial fine-tuning methods cannot yet be relied on to teach models new or updated knowledge. Future research will explore how question complexity affects model performance.
