Introduction to Large Language Models (LLMs)

Large Language Models (LLMs) now underpin many consumer and business applications. However, token generation speed remains a bottleneck that often slows these applications down. As applications demand longer outputs for tasks such as search and running complex algorithms, response times grow significantly. Making LLMs more efficient therefore requires faster token generation methods.

Challenges with Current Approaches

Current methods for speeding up token generation, such as speculative decoding with draft models or additional decoding heads, have drawbacks:

- Dependence on Draft Models: These methods rely on the quality of draft models, which can be expensive to train or fine-tune.

- Integration Issues: Merging draft models with LLMs can lead to inefficiencies and memory conflicts.

- Resource Intensive: Additional decoding heads require fine-tuning and consume a lot of GPU memory.

Introducing SuffixDecoding

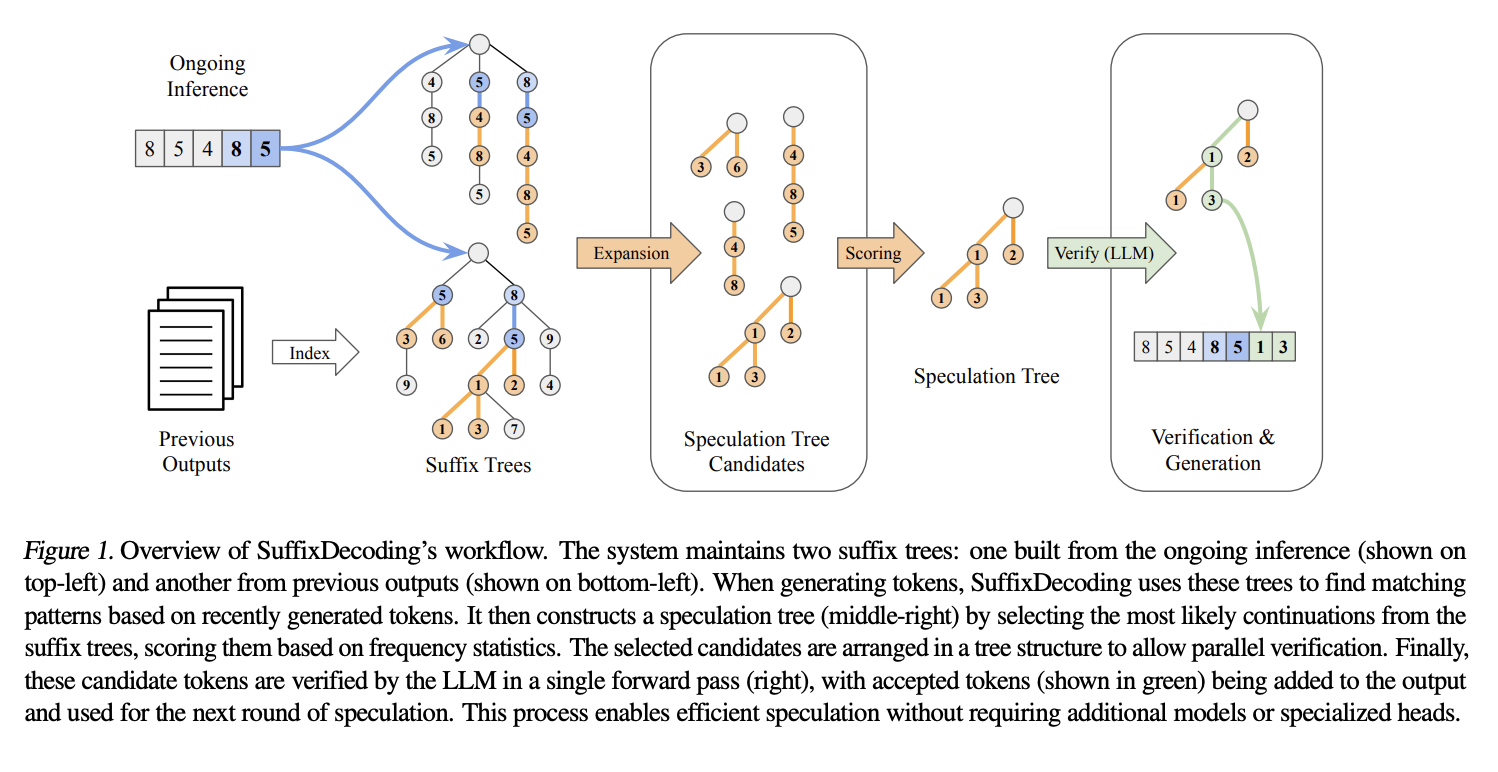

Researchers from Snowflake AI Research and Carnegie Mellon University have developed SuffixDecoding, a model-free method that eliminates the need for draft models or extra decoding heads. This approach uses efficient suffix tree indices built from previous outputs and ongoing requests.

How SuffixDecoding Works

- It tokenizes prompt-response pairs and creates a suffix tree structure from these tokens.

- This structure allows for quick identification of potential continuations based on past outputs.

- At each step, SuffixDecoding selects the best continuation tokens using frequency statistics, which are then verified by the LLM in one pass.
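The steps above can be illustrated with a small sketch. This is not the authors' implementation: it uses a depth-limited trie over all suffixes as a simple stand-in for a suffix tree, splits on whitespace instead of using a real tokenizer, and simulates LLM verification with a callback. The names `SuffixIndex`, `propose`, `verify`, and `target_next_token` are hypothetical and chosen only for illustration.

```python
class SuffixIndex:
    """Toy stand-in for a suffix tree: a trie over every suffix, cut off at max_depth."""

    def __init__(self, max_depth=8):
        self.max_depth = max_depth
        self.root = {"count": 0, "children": {}}

    def add(self, tokens):
        # Insert each suffix of the token sequence, counting how often each path occurs.
        for start in range(len(tokens)):
            node = self.root
            for tok in tokens[start:start + self.max_depth]:
                node = node["children"].setdefault(tok, {"count": 0, "children": {}})
                node["count"] += 1

    def _find(self, pattern):
        node = self.root
        for tok in pattern:
            node = node["children"].get(tok)
            if node is None:
                return None
        return node

    def propose(self, context, max_pattern=4, max_draft=4):
        # Match the longest suffix of the context found in the index,
        # then follow the most frequent child at each step to build a draft.
        for plen in range(min(max_pattern, len(context)), 0, -1):
            node = self._find(context[-plen:])
            if node is not None and node["children"]:
                draft = []
                while node["children"] and len(draft) < max_draft:
                    tok, node = max(node["children"].items(),
                                    key=lambda kv: kv[1]["count"])
                    draft.append(tok)
                return draft
        return []


def verify(draft, target_next_token, context):
    """Keep the longest draft prefix the target model agrees with (greedy decoding).

    A real system would score all draft positions in one batched forward pass;
    here the model is simulated by calling target_next_token once per position.
    """
    accepted = []
    for tok in draft:
        if target_next_token(context + accepted) != tok:
            break
        accepted.append(tok)
    return accepted


# Build the index from previous outputs (whitespace split stands in for tokenization).
index = SuffixIndex()
index.add("SELECT name FROM users WHERE id = 1".split())
index.add("SELECT name FROM users WHERE age > 30".split())
index.add("SELECT email FROM users WHERE id = 2".split())

context = "SELECT city FROM".split()
draft = index.propose(context)

# Simulated target model that "wants" to produce this exact sequence.
target = "SELECT city FROM users WHERE age > 18".split()
accepted = verify(draft, lambda ctx: target[len(ctx)], context)

print(draft)     # ['users', 'WHERE', 'id', '=']
print(accepted)  # ['users', 'WHERE']
```

In this toy run, two of the four drafted tokens are accepted, so the simulated model advances two tokens in a single verification step. The first rejected token would be replaced by the model's own prediction, so the final output matches what the model would have generated without speculation.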

Benefits of SuffixDecoding

SuffixDecoding offers several advantages:

- Efficiency: It avoids the complications of integrating draft models, leading to faster token generation.

- Scalability: It can draw on a larger reference corpus of previous outputs, which improves the selection of candidate sequences.

- Performance: Experimental results show up to 2.9 times higher output throughput and 3 times lower time-per-token latency compared to existing methods.

Conclusion

SuffixDecoding offers a promising way to accelerate LLM inference. By building suffix trees from past outputs, it speeds up token generation without the overhead of draft models or extra decoding heads. This approach paves the way for more efficient LLM applications in a variety of fields.
